What is RAG (Retrieval-Augmented Generation) & how does it work?

A complete guide to RAG (Retrieval-Augmented Generation). Learn what it is, how it works, the different RAG types, the components of a RAG system & more.


RAG (Retrieval-Augmented Generation) refers to a process where artificial intelligence models use external databases to obtain relevant information and generate more accurate responses.

The key benefits of RAG are better accuracy and reduced costs, since there is no need to retrain models.

RAG is used to improve search engines, build smarter chatbots, and power up-to-date Q&A systems.

There are different types of RAG, such as agentic RAG, graph RAG, multimodal RAG, adaptive RAG, speculative RAG, corrective RAG, modular RAG, and hybrid RAG.

The components of a RAG system are the knowledge base, the retriever, the integration layer, and the generator.

Some tools used to create RAG AI applications are Meilisearch, LangChain, Pinecone, ChromaDB, Weaviate, and FAISS.

RAG goes a step beyond semantic search by not just retrieving relevant documents, but using that retrieved information to generate new, contextually accurate responses. In contrast, semantic search stops at surfacing the most relevant existing documents without generating new content.

RAG is also different from CAG (Cache-Augmented Generation). RAG actively retrieves external data for each query, while CAG preloads its knowledge into the model's context upfront and reuses it.

Let’s now discuss RAG in more detail.

What is RAG?

RAG is a process where a generative AI model accesses external datasets to find relevant, up-to-date information before returning its response.

It can be likened to a student who checks for answers in a textbook during an exam instead of relying solely on memory.

For example, suppose you ask a customer service chatbot about a company's return policy. A traditional AI system might hallucinate, meaning it may confidently generate incorrect information. The chatbot might say returns are accepted within 60 days when the actual policy is only 30 days.

With RAG, the system first checks the company's current policy documents, isolates the relevant information, and then generates an answer based on that.

RAG can help address limitations in how LLMs (Large Language Models) work. LLMs are trained to learn writing patterns from text, but their training data can quickly become outdated. RAG fills this knowledge gap.

Let’s see just how important RAG is.

Why is RAG important?

RAG is important because it helps reduce AI hallucinations.

When regular AI models lack the answer to a user query, they may present false information, give outdated responses, or draw on unreliable knowledge sources.

RAG reduces these problems by grounding the AI's response in retrieved information. Instead of guessing or relying on old training data, the AI first searches reliable, up-to-date knowledge bases and then answers. This means you get better answers and can see exactly where the information comes from.

Let’s see the benefits of using RAG.

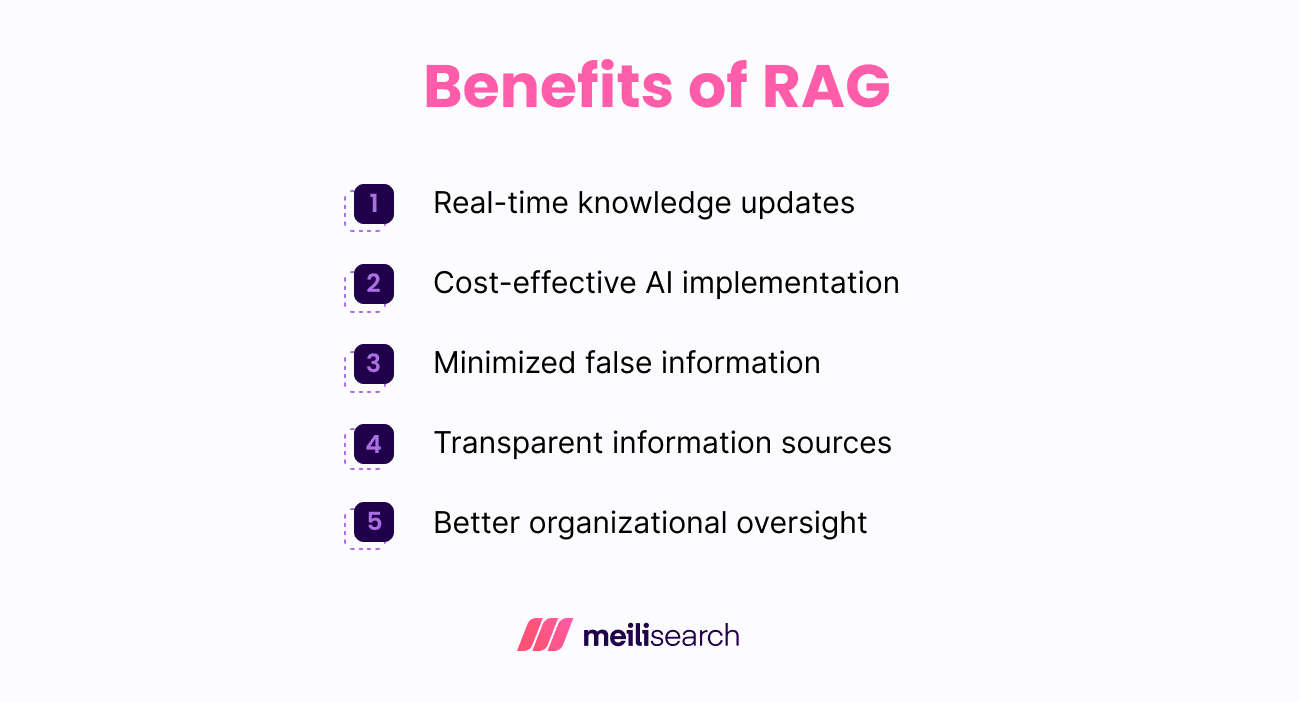

What are the benefits of RAG?

The benefits of RAG include real-time knowledge updates, cost-effective AI implementation, and minimized false information, among others.

The key benefits are listed below:

- Real-time knowledge updates: Traditional AI models are stuck with the information they learned during training, which can become outdated. RAG solves this by connecting models to live data sources, allowing AI to gather real-time information, such as breaking news, current market data, or internal customer data.

- Cost-effective AI implementation: Retraining AI models to keep them up to date is expensive. RAG instead connects models to external databases that hold the new data. This avoids large retraining costs and still delivers many of the benefits of a domain-specific model.

- Minimized false information: Hallucination is not uncommon in AI models. RAG reduces it by anchoring the generated text to actual, verifiable sources.

- Transparent information sources: RAG systems can provide citations, letting users verify information and dig deeper if needed.

- Better organizational oversight: Companies are careful about exposing their repositories. RAG allows organizations to control exactly which documents or data sources AI can retrieve information from. This gives businesses better oversight while ensuring the AI stays useful to their business needs.

Let’s see some essential use cases of RAG.

What are RAG’s use cases?

RAG enhances generative models by using trusted data sources. This makes it valuable across industries where accuracy, context, and control over information are critical.

The main use cases of RAG are listed below:

- Smart customer support: Instead of leaving customers frustrated with generic responses, RAG allows AI customer support chatbots to tap into real company resources. For example, you can use Meilisearch to index internal resources such as product manuals, troubleshooting guides, and policy documents so that the chatbot returns accurate answers with clear citations to your company documentation.

- Internal company search: Most organizations have information scattered across different systems, making information retrieval difficult. RAG can be used to develop a conversational question-answering engine where staff members ask natural questions and get precise answers from natural language processing (NLP) models. Employees can ask questions like, “How many vacation days do I have left this year?” The answer will depend on their information in the PTO database.

- Content creation assistant: Many writers and marketers spend too much time digging for specific information. RAG can create an intelligent research assistant that pulls information from multiple sources (such as market reports, past campaigns, and industry articles).

- Personalized recommendations: E-commerce and content platforms can use RAG to create smarter recommendation systems that don’t suggest items just based on a simple algorithm. For example, you can use Meilisearch with embedding models to combine user behavior data, product information, and reviews to create recommendations that the users can trust.

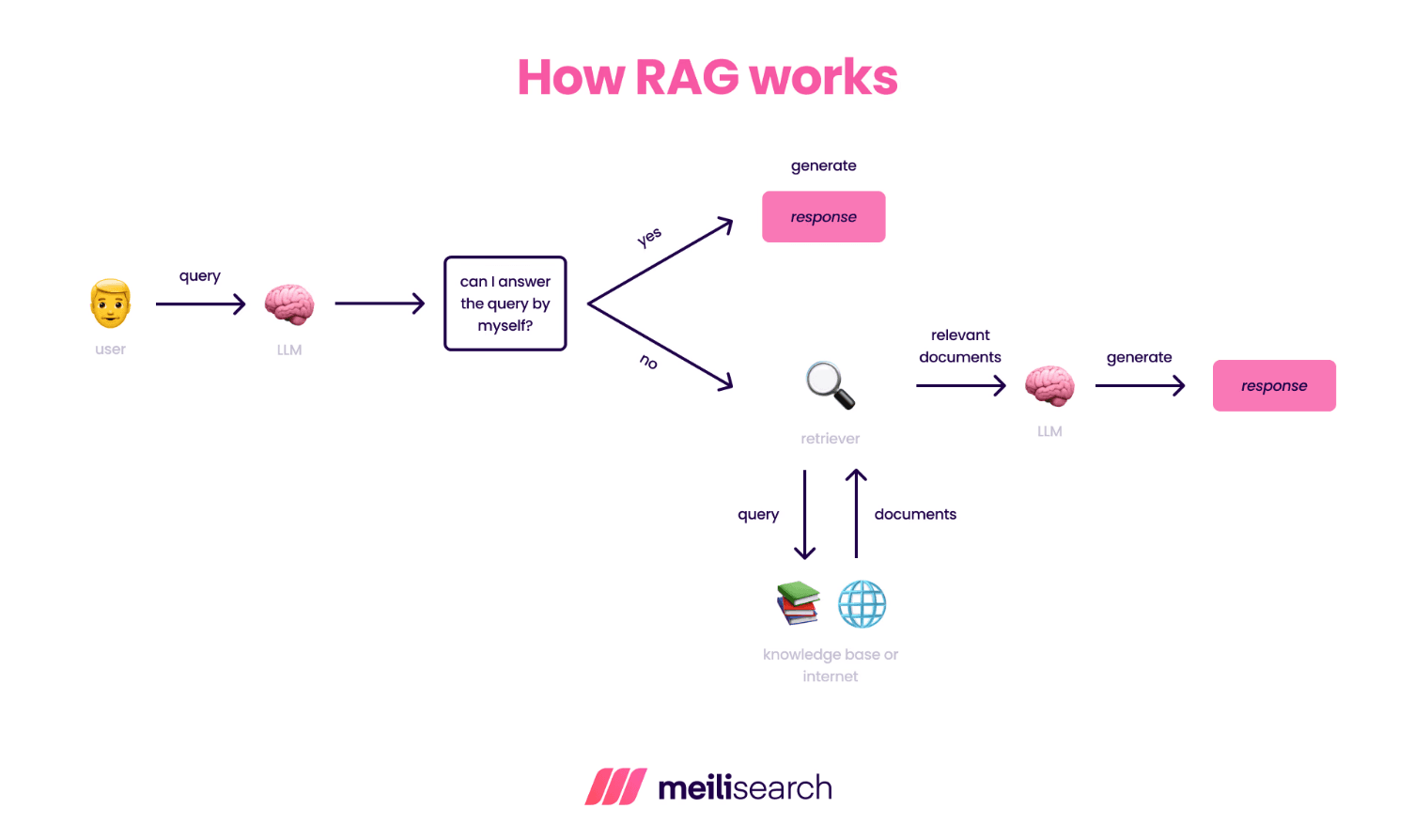

How does RAG work?

RAG searches through documents, finds the relevant information, and uses that to create an accurate response.

The steps below show how RAG applications work:

1. Indexing all your content

Before a RAG system can answer questions, you need to prepare all the information you want it to use and split it into smaller chunks. This process is called chunking. The content can include internal documents, manuals, emails, PDFs, and more.

Each chunk is then turned into an embedding using an embedding model. An embedding is a list of numbers that captures the chunk's meaning. The embeddings are stored in a vector database, which is built to search by meaning rather than by matching exact words.
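The chunking step can be sketched as follows. This minimal version assumes fixed-size chunks measured in words, with a small overlap so that a sentence cut at a chunk boundary still appears whole in one chunk. The function name `chunk_text` and the default sizes are illustrative choices and do not come from any specific tool. Production systems often split on sentences, headings, or model tokens instead.

```python
def chunk_text(text: str, chunk_size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into word-based chunks of up to `chunk_size` words.

    Consecutive chunks share `overlap` words so context at the edges is not lost.
    (Illustrative sketch: sizes are in words, not model tokens.)
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    words = text.split()
    step = chunk_size - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reached the end of the text
    return chunks


policy = "Employees must submit a travel request at least two weeks before the trip. " * 3
for i, chunk in enumerate(chunk_text(policy, chunk_size=12, overlap=3)):
    print(i, chunk)
```

The overlap is a trade-off. A larger overlap preserves more context across boundaries, but it also stores more duplicate text in the index.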

2. Understanding the user query

When a user asks a question – like “What’s the process for booking a work trip?” – the query is also turned into an embedding using the same model as in step 1.

3. Retrieving relevant information

The system compares the query embedding with the stored embeddings and retrieves the most similar ones, even if the wording is different.

For example, it can connect “trip booking” with “travel request” based on meaning, not exact terms.
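Steps 2 and 3 can be sketched with one simplifying assumption. `toy_embed` is a hypothetical stand-in that counts words, whereas a real system calls a learned embedding model. Word counts only capture exact terms, so this toy version will not link "trip booking" to "travel request" the way a real embedding model can. The sketch does show the core mechanics: the query and the chunks go through the same embedding function, and their vectors are compared with cosine similarity.

```python
import math
import re
from collections import Counter


def toy_embed(text: str) -> Counter:
    """Hypothetical stand-in for an embedding model: a sparse word-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity of two sparse vectors (0.0 if either vector is empty)."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, index: list[tuple[Counter, str]], k: int = 2) -> list[tuple[float, str]]:
    """Embed the query with the same function used at indexing time; return the top-k chunks."""
    query_vec = toy_embed(query)
    scored = [(cosine_similarity(query_vec, vec), chunk) for vec, chunk in index]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


chunks = [
    "To book a work trip, submit a travel request in the HR portal.",
    "Expense reports are due within 30 days of purchase.",
    "The office is closed on public holidays.",
]
# Step 1: embed every chunk once and keep (vector, text) pairs as a tiny in-memory index.
index = [(toy_embed(chunk), chunk) for chunk in chunks]
for score, chunk in retrieve("How do I book a work trip?", index):
    print(f"{score:.2f}  {chunk}")
```

In a real deployment, the vector database performs this comparison, usually with approximate nearest-neighbor search, so it stays fast across millions of chunks.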

4. Building the augmented prompt

RAG combines the retrieved text snippets with the user’s question to build a context-aware prompt. This gives the language model fresh, relevant context that it didn’t have during training.
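Step 4 can be sketched with a simple prompt template. The instruction wording and the function name `build_augmented_prompt` are illustrative choices, not a required format. Numbering each snippet gives the model a way to cite its sources, which supports the transparency benefit of RAG.

```python
def build_augmented_prompt(question: str, snippets: list[str]) -> str:
    """Combine retrieved snippets and the user's question into one prompt.

    Snippets are numbered so the model can cite them, e.g. "[1]".
    """
    context = "\n".join(f"[{i}] {snippet}" for i, snippet in enumerate(snippets, start=1))
    return (
        "Answer the question using only the context below. "
        "Cite sources by their number, and say you don't know if the context "
        "does not contain the answer.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


prompt = build_augmented_prompt(
    "What is the process for booking a work trip?",
    [
        "To book a work trip, submit a travel request in the HR portal.",
        "Travel requests must be approved by your manager.",
    ],
)
print(prompt)
```

The instruction to answer "using only the context" is one common way to discourage the model from falling back on outdated training data.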

5. Generating the response

The large language model uses this augmented prompt to generate an answer. The answer is grounded in real, up-to-date information retrieved by RAG, so the output is more accurate and less prone to hallucination.

Let’s walk through the various types of RAG systems.

What are the different types of RAG?

Several types of RAG exist, reflecting different architectures and a broad range of use cases.

Some key types of RAG are described below:

- Agentic RAG: Agentic RAG is a type of RAG that gives a generative AI model the ability to think and plan before retrieving results. It breaks down a complex question into steps, determines what information is needed in each step, and uses web searches or APIs to obtain more accurate answers. This makes it great for research and solving complex problems. For example, it can help a product manager explore market trends.

- Graph RAG: Graph RAG uses knowledge graphs to connect ideas. Instead of just pulling out documents, it locates structured relationships between concepts – for example, how ‘malaria’ is related to ‘mosquitoes’ and ‘treatment options.’ It is useful in technical fields such as biomedicine or academic research where facts or terminologies are interconnected.

- Multimodal RAG: Multimodal RAG is designed to handle not just text, but also images, audio, and video. This is useful in product support that involves screenshots or diagrams, or in education platforms that combine explanations with visuals. For example, it could help a user understand a complex chart in a financial report.

- Adaptive RAG: Adaptive RAG changes how it retrieves information based on the context or user. For example, if a user asks many follow-up questions, it can adjust information retrieval to be more focused or broader depending on the pattern. It may even switch retrieval methods (from keyword to vector) based on what works better. It is built to learn from interactions and improve over time without reprogramming.

- Speculative RAG: In speculative RAG, the AI model first guesses what the answers might look like, then retrieves information to support those guesses. For example, when a user asks a question, speculative RAG generates different drafts, retrieves supporting information for each one, and then chooses the most accurate one. It is often used where multiple interpretations of a query are possible.

- Corrective RAG: Corrective RAG is designed to detect and fix problems after retrieval. If the model finds irrelevant or misleading documents, it can flag or remove them. It also double-checks the facts before answering. It is useful for financial assistants where accuracy is vital. For example, it could ensure investment answers are backed by the most recent and relevant data.

- Modular RAG: Modular RAG treats each system component as a module, meaning you have separate modules for query rewriting, retrieval, reranking, summarization, and answer generation. This design makes it easier to test and upgrade individual parts without rebuilding the whole system. For example, you could switch from BM25 to vector retrieval without touching the LLM.

- Conversational RAG: This is typically used for dialogue. It focuses on remembering what has been said earlier in a conversation. So when a user asks, ‘What year was that?’, the system knows what ‘that’ refers to. It is great for chatbots and tutorial platforms.

- Multi-hop RAG: This is designed for questions that require several steps to answer. It retrieves documents step-by-step, with each step refining the next. This is useful in academic research tools. For instance, it could answer, ‘What is the growth rate of the largest logistics company in the US?’ by first identifying the company and then retrieving financial data to calculate its growth.
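The graph RAG idea can be made concrete with a small sketch of graph-based retrieval. It assumes a hypothetical, in-memory knowledge graph stored as (subject, relation, object) triples. Starting from an entity in the question, it walks outgoing relationships breadth-first up to a hop limit and collects the connected facts, which would then be passed to the language model as context. Real graph RAG systems typically build the graph from documents and store it in a graph database. This sketch only illustrates the traversal.

```python
from collections import deque

# Hypothetical toy knowledge graph of (subject, relation, object) triples.
TRIPLES = [
    ("malaria", "transmitted_by", "mosquitoes"),
    ("malaria", "treated_with", "antimalarial drugs"),
    ("mosquitoes", "bites_prevented_by", "bed nets"),
    ("antimalarial drugs", "example", "artemisinin"),
    ("influenza", "caused_by", "influenza virus"),
]


def related_facts(
    entity: str, triples: list[tuple[str, str, str]], max_hops: int = 2
) -> list[tuple[str, str, str]]:
    """Collect triples reachable from `entity` within `max_hops` edges (breadth-first)."""
    # Index outgoing edges by subject for quick lookup.
    edges: dict[str, list[tuple[str, str, str]]] = {}
    for triple in triples:
        edges.setdefault(triple[0], []).append(triple)

    facts, seen = [], {entity}
    queue = deque([(entity, 0)])
    while queue:
        node, depth = queue.popleft()
        if depth == max_hops:
            continue  # do not expand beyond the hop limit
        for fact in edges.get(node, []):
            facts.append(fact)
            neighbor = fact[2]
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append((neighbor, depth + 1))
    return facts


for subj, rel, obj in related_facts("malaria", TRIPLES):
    print(f"{subj} --{rel}--> {obj}")
```

The hop limit matters. Each extra hop brings in more related context but also more loosely related facts that can dilute the prompt.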

Apart from the types of RAG listed above, you can also categorize RAG systems by how mature their implementation is: naive RAG and advanced RAG.

- Naive RAG: The most basic form of RAG. It chooses the top search results (often using simple keyword matching) and feeds them straight to the language model. There is no reranking, filtering, or understanding of context. It works for straightforward questions but struggles with complex ones. It is quick to build but not very smart.

- Advanced RAG: Advanced RAG adds techniques such as vector search, reranking, query rewriting, or feedback loops to get better results. It is built to be smarter and more accurate. It can use custom tools, keep a memory of past chats, and even check whether the answer is correct. A good example is a customer support assistant that rewrites vague questions, reranks retrieved help articles, and remembers earlier turns in the conversation.
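Reranking and filtering is one way advanced RAG improves on the naive approach. A second stage re-scores the first-stage results and drops the weak ones before they reach the model. The sketch below assumes a hypothetical `toy_reranker` that scores passages by query-term overlap. In practice, a cross-encoder or a dedicated reranking model often fills this role. The threshold and `top_n` values are illustrative.

```python
import re
from typing import Callable


def terms(text: str) -> set[str]:
    """Lowercase word set, ignoring punctuation."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def toy_reranker(query: str, passage: str) -> float:
    """Hypothetical relevance scorer: the share of query terms found in the passage."""
    q = terms(query)
    return len(q & terms(passage)) / len(q) if q else 0.0


def rerank_and_filter(
    query: str,
    candidates: list[str],
    scorer: Callable[[str, str], float] = toy_reranker,
    min_score: float = 0.5,
    top_n: int = 3,
) -> list[str]:
    """Second retrieval stage: re-score candidates, drop weak ones, keep the best `top_n`."""
    scored = [(scorer(query, c), c) for c in candidates]
    kept = [pair for pair in scored if pair[0] >= min_score]
    kept.sort(key=lambda pair: pair[0], reverse=True)
    return [c for _, c in kept[:top_n]]


# First-stage candidates, e.g. from a fast keyword or vector search.
# Naive RAG would pass all of these straight to the model.
candidates = [
    "Office hours are 9 to 5.",
    "Refunds are issued within 30 days for returned items.",
    "Returned items must be unused to get a refund.",
]
print(rerank_and_filter("refund for returned items", candidates))
```

Passing `scorer` as a parameter keeps the reranker swappable, so a real model can replace the toy scorer without changing the rest of the pipeline.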

What are the components of a RAG system?

If you are wondering how to build a RAG system, it helps to first understand its key parts.

The components of a RAG system are:

- Knowledge base: The knowledge base is where all the reference information is stored. It can include content such as articles, PDFs, websites, manuals, wikis, reports, etc. This content is processed and stored in a way that makes it easy to search. The more organized and relevant the knowledge base, the better the system can retrieve accurate answers.

- Retriever: The retriever searches the knowledge base and finds the most relevant content based on a user’s question. It often uses vector similarity (which focuses on meaning rather than exact words) to find similar embeddings.

- Integration layer: The integration layer brings everything together. It is the center of the RAG architecture. It takes the user’s prompt, sends it to the retriever, collects the results, and organizes them into a format the generator (the language model) can use. It handles tasks such as formatting, prompt construction, or combining context. In more advanced systems, this layer may include query rewriting or reranking to improve accuracy.

- Generator: The generator is the final component of the RAG architecture. It is the language model that writes the final answer. It takes the retrieved information and combines it with the user’s input to produce relevant responses. Instead of making things up, it uses the actual documents pulled from the knowledge base. Common examples are GPT and Claude.
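The four components can be sketched as small Python classes. This sketch assumes an in-memory knowledge base and a simple keyword-overlap retriever. A hypothetical `StubGenerator` stands in for a real LLM client such as GPT or Claude. All class names are illustrative. The point is to show how the integration layer sits between the retriever and the generator.

```python
import re
from dataclasses import dataclass, field
from typing import Protocol


def words(text: str) -> set[str]:
    """Lowercase word set, ignoring punctuation."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


class Generator(Protocol):
    """Anything that turns a prompt into text, such as a client for an LLM API."""

    def generate(self, prompt: str) -> str: ...


@dataclass
class KnowledgeBase:
    """Holds the reference documents (plain strings in memory for this sketch)."""

    documents: list[str] = field(default_factory=list)


@dataclass
class KeywordRetriever:
    """Returns the k documents sharing the most words with the question.

    A vector-similarity retriever could be swapped in with the same `retrieve` method.
    """

    kb: KnowledgeBase
    k: int = 2

    def retrieve(self, question: str) -> list[str]:
        q = words(question)
        ranked = sorted(self.kb.documents, key=lambda doc: len(q & words(doc)), reverse=True)
        return ranked[: self.k]


class StubGenerator:
    """Hypothetical placeholder for a real LLM call: echoes the context it received."""

    def generate(self, prompt: str) -> str:
        context = prompt.split("Context:\n", 1)[1].split("\n\nQuestion:", 1)[0]
        return f"Based on:\n{context}"


@dataclass
class IntegrationLayer:
    """Connects the parts: retrieve context, build the prompt, call the generator."""

    retriever: KeywordRetriever
    generator: Generator

    def answer(self, question: str) -> str:
        snippets = self.retriever.retrieve(question)
        context = "\n".join(f"- {s}" for s in snippets)
        prompt = f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
        return self.generator.generate(prompt)


kb = KnowledgeBase([
    "Returns are accepted within 30 days.",
    "Shipping is free on orders over $50.",
    "Gift cards never expire.",
])
rag = IntegrationLayer(KeywordRetriever(kb, k=1), StubGenerator())
print(rag.answer("Within how many days are returns accepted?"))
```

Keeping the generator behind a small interface means that switching models only touches that one class.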

What tools are used for RAG applications?

RAG tools make it possible to connect your data with powerful language models.

Depending on your goals, these tools fall into different categories: storing and searching documents, generating responses, or managing prompts.

Some RAG tools to consider are the following:

- Meilisearch: Meilisearch is a super-fast search engine that fits right into RAG setups. It can combine keyword and vector search to get exact matches and smart, meaningful results. You can even plug in embedding models and handle everything through one simple API.

- LangChain: LangChain is an open-source framework, available for Python and JavaScript, that acts as an orchestration layer. It helps connect retrievers, embeddings, and generators and stitches them all into a complete RAG pipeline. It also handles the messy integration work, so you do not have to write custom connectors for every database, API, or file format.

- Weaviate: Weaviate is a full-featured vector database that supports hybrid search. It also includes filters, metadata, and scalable querying. It is ideal for production-level RAG, where flexibility and real-time responses are needed.

- FAISS: FAISS is an open-source vector search library built by Meta. It lets you index embeddings at scale and run fast similarity searches over them. It is a good fit when your RAG system needs to retrieve by meaning, not just keywords.

- Haystack: Haystack is an end-to-end framework that combines document retrieval, question answering, and generation components. It is a good tool for building search and QA systems where everything needs to work together smoothly.

What advanced techniques are used in RAG?

Advanced RAG techniques refer to smarter ways of improving how a RAG system retrieves and uses information to answer queries.

Instead of just searching documents and generating a response, these techniques help the RAG system go further. For example, they can choose better sources, adjust how the system responds based on the question, or run additional retrieval steps when a simple answer is not enough.

The goal is to make RAG systems faster and more accurate, especially in real-world AI applications.

Some advanced RAG techniques include hybrid search, reranking and contextual compression, query expansion, multi-hop reasoning, contextual retrieval, adaptive retrieval, and self-reflection.
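Hybrid search, the first technique in this list, needs a way to merge keyword results with vector results. One common approach is reciprocal rank fusion (RRF). The sketch below shows RRF in general; it does not describe how any particular search engine combines its results. The document IDs are hypothetical, and `k=60` is a commonly used default.

```python
def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge several ranked lists of document IDs into one.

    Each document earns 1 / (k + rank) from every list it appears in (rank starts at 1),
    so items ranked well by both keyword and vector search rise to the top.
    """
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)


keyword_results = ["doc_travel_policy", "doc_expenses", "doc_holidays"]
vector_results = ["doc_travel_request_form", "doc_travel_policy", "doc_expenses"]
print(reciprocal_rank_fusion([keyword_results, vector_results]))
```

RRF uses only rank positions, not raw scores. This makes it convenient for combining keyword and vector searches, whose scores are on different scales.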

What is the difference between RAG and semantic search?

The main difference between RAG and semantic search is that RAG is a complete system that retrieves relevant information and then uses an LLM to generate answers based on that context.

Semantic search is focused on the retrieval part alone. It finds documents conceptually similar to the query and returns them as is.

For instance, imagine you're an engineer asking, ‘How do we handle user authentication in our system?’

- A semantic search system might return three internal docs: one about OAuth, one about token storage, and one about login flows. You’d need to read through them to piece together the answer.

- A RAG system would retrieve the same documents, extract the relevant sections, and then generate a direct, cohesive answer like: ‘Your system uses OAuth 2.0 for authentication, stores access tokens in encrypted Redis, and handles login through a custom API gateway.’

What is the difference between RAG and fine-tuning?

The main difference between RAG and fine-tuning is that RAG allows the AI model to reach out to a knowledge base at query time. It does not change the model weights, just the knowledge it has access to.

Fine-tuning continues training the model on focused, domain-specific data. This updates the model's weights, so the new knowledge is stored in the model itself.

RAG vs. fine-tuning comes down to borrowing knowledge when needed versus building it into the model itself.

What is the difference between RAG and prompt engineering?

The key difference between RAG and prompt engineering is that RAG pulls external knowledge to help an AI model respond better. In contrast, prompt engineering focuses on what the user sends directly to the model.

RAG provides access to fresh, relevant information.

Prompt engineering relates to how you ask the model questions.

RAG improves what the model knows at the moment, and prompt engineering primarily shapes how it responds.

They are different tools, but used together they can improve a GenAI workflow.

What is the difference between RAG and CAG (Cache-Augmented Generation)?

The main difference between RAG and CAG is that RAG pulls fresh information from a live knowledge base every time a question is asked. CAG instead preloads a fixed set of knowledge into the model's context ahead of time and reuses that cached context across questions.

CAG is faster and cheaper, but RAG handles new or unexpected questions better.

What is the difference between RAG and vector databases?

The main difference between RAG and vector databases is that RAG is a method for improving how language models generate answers by retrieving relevant context before responding, while vector databases are tools used within that process to store and search information efficiently using embeddings.

RAG is the overall technique, whereas vector databases are one of the key components that make it work.

RAG's role in building trustworthy search

As demand for generative AI systems grows, RAG will play an increasingly central role in bridging the gap between static data and dynamic human queries.

Whether you're powering an internal knowledge base assistant, improving customer support, or creating a smarter content workflow, RAG offers a real-world solution that reduces hallucinations and improves user experience.

Easily build RAG systems with Meilisearch

Meilisearch is a lightweight search engine that is a good choice for building RAG systems. It is clean, fast, and easy to work with. Its support for both keyword search and vector database search, along with easy integration, helps developers quickly implement high-quality retrieval – an essential step for any RAG implementation.