Semantic search vs. RAG: A side-by-side comparison

Explore the differences between semantic search and RAG. Learn when to use each, common trade-offs, benefits, evaluations, and more.


Semantic search and retrieval-augmented generation (RAG) are crucial for how today's AI systems handle information and find relevant answers to user queries.

Think of it this way: semantic search is all about ranking information based on what it means, while RAG takes it further by passing the retrieved information to a language model that generates the answer users need.

In this article, we're going to explore:

- What semantic search and RAG are and how they work.

- The contrast between their capabilities.

- The potential for overlap and collaboration, as well as whether or not they can work without each other.

- How to figure out when semantic search is enough, when RAG is essential, and when combining them gives you the best results.

- The pros, cons, and limitations that come with both so that you can evaluate them properly.

By the time you're done, you'll have a clear framework for choosing the best approach for your data and users.

Ready? Let’s get into it.

What is semantic search?

Semantic search is a technique that helps AI systems understand the intent behind user queries instead of relying on exact keyword matching. It uses natural language processing (NLP) models to convert text into embeddings: vector representations that place words and passages with similar meanings close together.

By doing this, semantic search retrieves the most relevant documents even if the query phrasing isn’t perfect. Unlike traditional keyword-based retrieval methods, semantic search ranks results based on context and relationships, so the results genuinely match what the user meant.

Teams can also explore choosing the best model for semantic search to refine their search architecture.

What is RAG (retrieval-augmented generation)?

Retrieval-augmented generation (RAG) combines information retrieval and generative AI to create more factual and contextually relevant responses. It retrieves relevant documents from an external knowledge base or vector database using embeddings before passing them over to the large language model (LLM).

From there, the LLM uses the retrieved context to ground its response, which improves accuracy and reduces (though does not eliminate) hallucinations.

In short, RAG augments the model’s knowledge with real data, making responses more trustworthy and domain-specific.

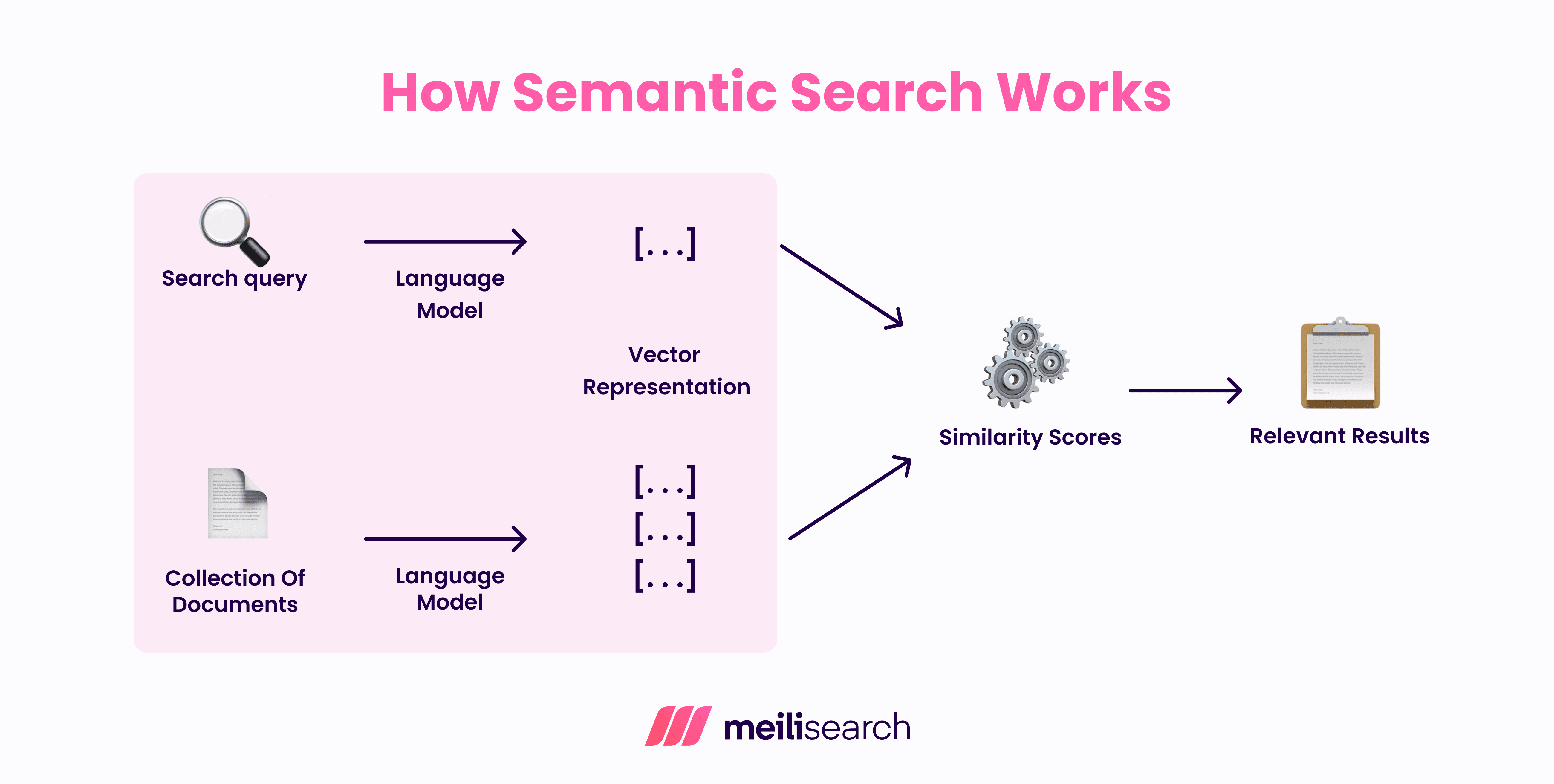

How does semantic search work?

Semantic search goes beyond keyword matching by understanding the meaning and intent behind those keywords through contextual relationships.

- Step 1: Semantic search converts text into embeddings. Each query and document is transformed into dense vector representations that capture semantic meaning.

- Step 2: Then, it compares embeddings. The system measures cosine similarity or Euclidean distance between the user query and stored vectors to find the most relevant results.

- Step 3: Finally, a retriever ranks documents by conceptual closeness rather than by word overlap alone, returning the closest matches first.

This process helps users find relevant information even when they aren’t sure of the exact wording needed to get what they’re looking for.
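The three steps above can be sketched in a few lines of Python. This is a minimal illustration, not a production retriever: the three-dimensional vectors are made-up stand-ins for the output of a real embedding model (which would produce hundreds of dimensions), and the names `DOCUMENTS` and `semantic_search` are hypothetical.

```python
import math

# Made-up 3-dimensional "embeddings". A real system would get these from an
# embedding model; these values exist only to illustrate the ranking step.
DOCUMENTS = {
    "How to reset your password": [0.9, 0.1, 0.0],
    "Recovering a locked account": [0.8, 0.3, 0.1],
    "Quarterly revenue report": [0.0, 0.2, 0.9],
}


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def semantic_search(query_vec: list[float], k: int = 2) -> list[tuple[str, float]]:
    """Score every stored document against the query and return the top k."""
    scored = [
        (title, cosine_similarity(query_vec, vec))
        for title, vec in DOCUMENTS.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Pretend this is the embedding of the query "I can't log in".
query_vec = [0.85, 0.25, 0.05]
for title, score in semantic_search(query_vec):
    print(f"{score:.3f}  {title}")
```

The hypothetical query shares no keywords with either account-related title, yet both outrank the revenue report because their vectors point in a similar direction.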

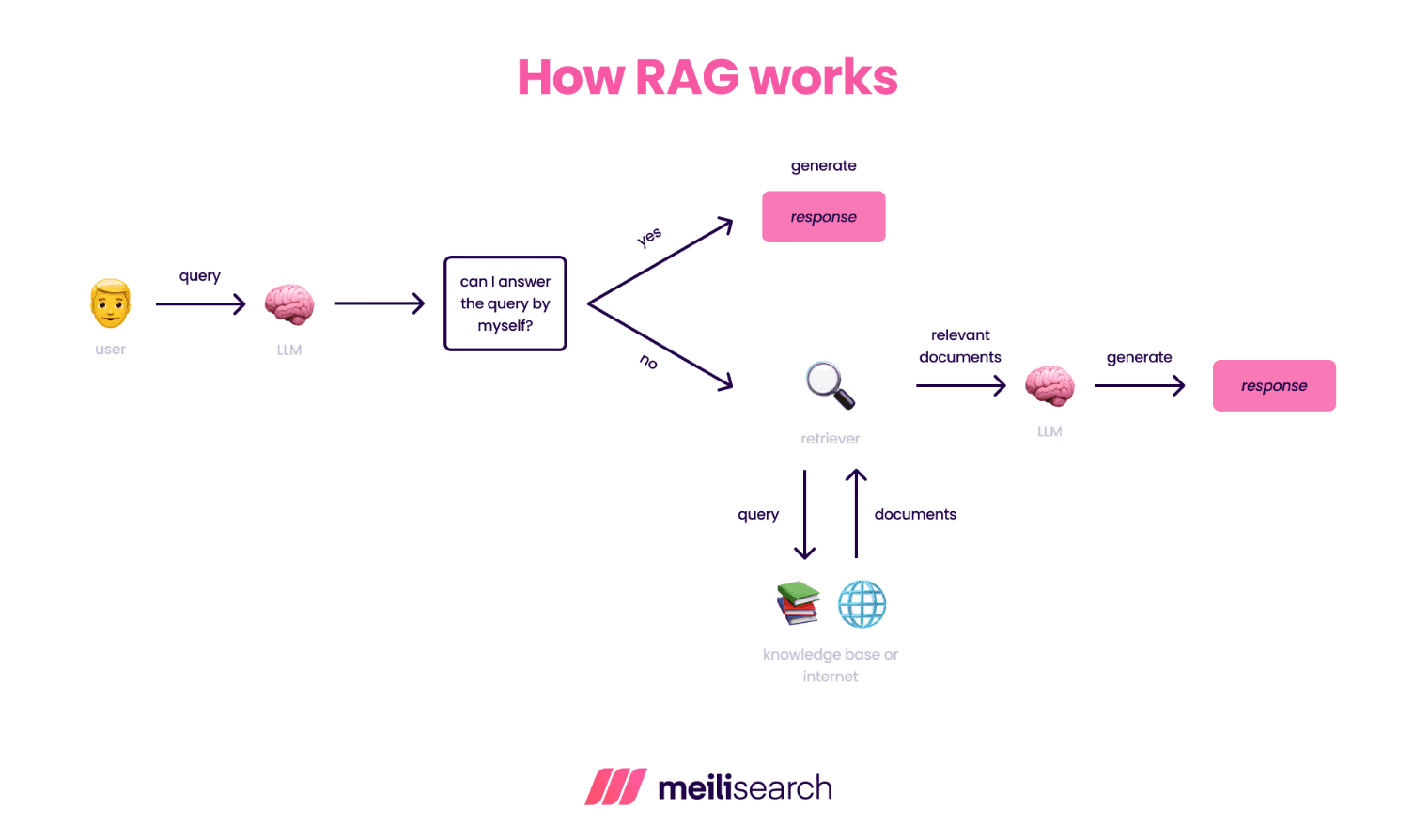

How does RAG work?

RAG connects a retrieval system to an LLM so that the model answers from relevant documents instead of relying only on what it learned during training.

Let’s see how it works:

- Step 1: First, the data is embedded and indexed. Documents from various data sources are split into chunks, converted to vector embeddings, and stored in a vector database.

- Step 2: When a user submits a query, it is converted into an embedding with the same model. In a basic RAG pipeline, retrieval runs for every query; in some agentic or adaptive setups, the LLM first decides whether it needs additional context to answer.

- Step 3: The retriever searches the vector database for the chunks most similar to the query. Depending on the setup, it can also query external knowledge bases or even search the internet to find relevant documents.

- Step 4: The retrieved chunks are added to the prompt, and the LLM uses both the query and the retrieved context to generate a more relevant answer.

As a result of this whole cycle, the answers you get are grounded in your data and as current as the sources you index.
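The cycle described above can be sketched end to end. This is a toy under stated assumptions: a bag-of-words count vector stands in for a real embedding model (so this particular retriever matches words, not meaning), an in-memory list stands in for the vector database, and the final LLM call is left as a hypothetical placeholder. All function names are illustrative.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(word.strip(".,?!").lower() for word in text.split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def chunk(text: str, size: int = 8, overlap: int = 2) -> list[str]:
    """Split text into windows of `size` words that share `overlap` words."""
    words = text.split()
    step = size - overlap
    return [" ".join(words[i:i + size]) for i in range(0, max(len(words) - overlap, 1), step)]


def build_index(documents: list[str]) -> list[tuple[str, Counter]]:
    """Chunk every document and store (chunk, vector) pairs, like a tiny vector DB."""
    return [(piece, embed(piece)) for doc in documents for piece in chunk(doc)]


def retrieve(index: list[tuple[str, Counter]], query: str, k: int = 2) -> list[str]:
    """Embed the query and return the k most similar chunks."""
    query_vec = embed(query)
    ranked = sorted(index, key=lambda item: cosine(query_vec, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


def build_prompt(query: str, context: list[str]) -> str:
    """Augment the user query with the retrieved chunks before generation."""
    joined = "\n".join(f"- {c}" for c in context)
    return (
        "Answer using only the context below. If the answer is not there, say so.\n"
        f"Context:\n{joined}\n\nQuestion: {query}"
    )


docs = [
    "Our refund policy allows returns within 30 days of purchase with a receipt.",
    "Support is available by email on weekdays from 9am to 5pm.",
]
index = build_index(docs)
question = "How many days do I have to request a refund?"
prompt = build_prompt(question, retrieve(index, question, k=2))
print(prompt)
# Generation step (hypothetical, not a real API): answer = call_llm(prompt)
```

Swapping `embed` for a real embedding model and the list for a vector database gives the semantic version; the chunking, retrieval, and prompt-assembly structure stays the same.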

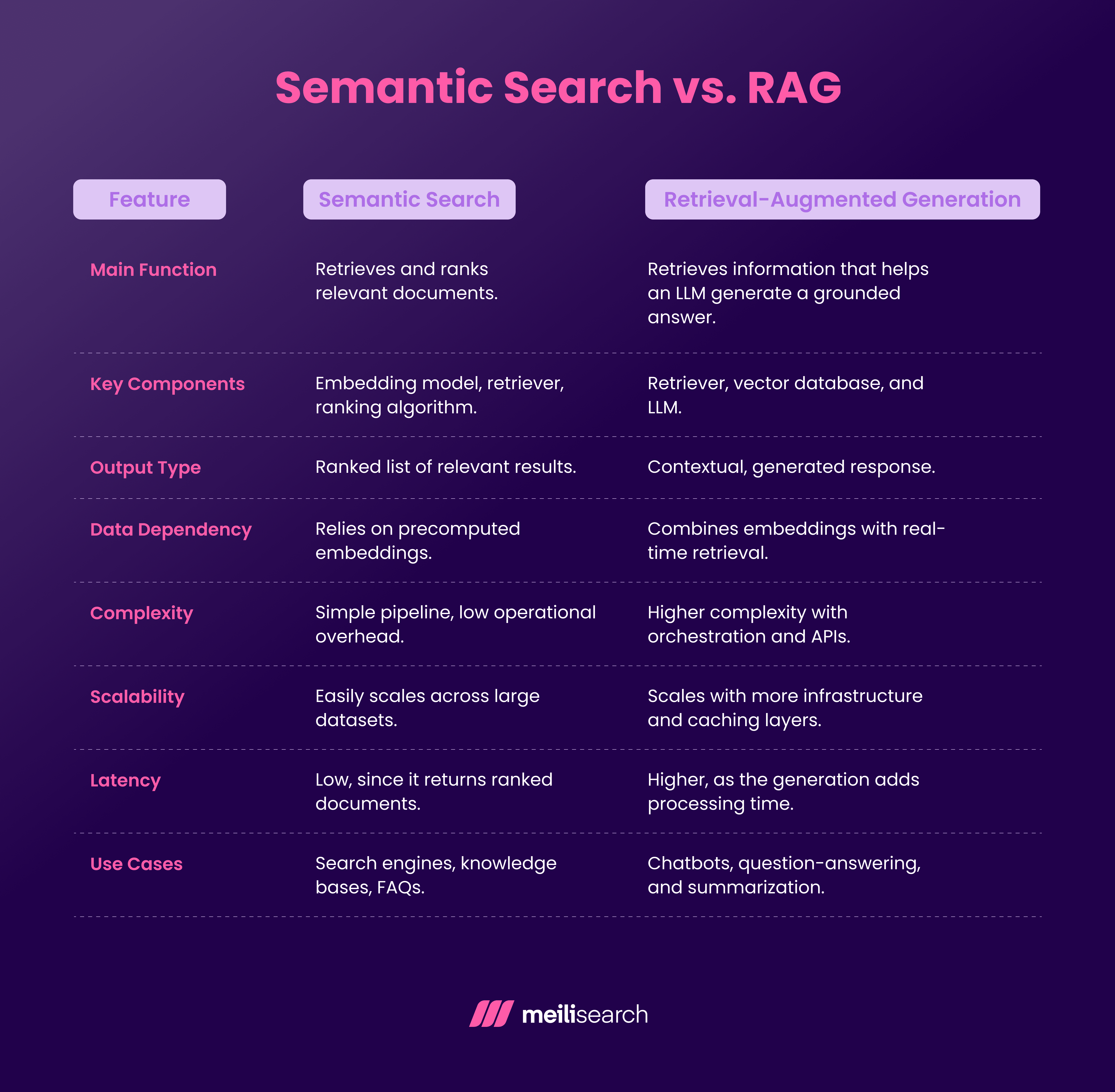

What is the difference between semantic search and RAG?

The main difference between semantic search and RAG is what they return. Semantic search returns existing documents or passages ranked by relevance, while RAG uses retrieved content to generate a new, natural-language answer to the user query.

Semantic search works by matching user queries to document vector embeddings using similarity metrics such as cosine similarity or Euclidean distance. It's efficient, fast, and great for returning existing content closely related to the user query.

RAG, on the other hand, feeds retrieved context to a large language model to compose a more elaborate answer. This added step aims to reduce AI hallucinations and improve accuracy.

The table below highlights other key distinctions between the two:

You can also explore different RAG types to understand how architectures vary in retrieval and generation workflows.

What problems does semantic search solve?

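Keyword-based retrieval has always had limitations: it matches the literal words in a query and misses documents that express the same idea in different terms. Semantic search addresses these limitations by matching on meaning rather than on literal keywords.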

Here’s what it solves:

- Intent detection: It interprets what the user means, not just what they type.

- Disambiguation: Semantic search reduces ambiguity and distinguishes between words with multiple meanings by using the surrounding context captured in their embeddings.

- Better recall and precision: It retrieves relevant documents even when queries use different phrasing.

- Multilingual and domain-specific results: Multilingual embedding models map queries and documents in different languages into a shared vector space, so a query in one language can match content in another. Semantic search also adapts across knowledge bases and domain-specific datasets without manual rules.

- Improved user experience: Users love semantic search because it ranks relevant information intuitively, helping them find what they need faster.

By combining natural language processing (NLP) and vector embeddings, semantic search helps users get the results they need.

What problems does RAG solve?

RAG addresses the main challenges of retrieval and generation by grounding large language models in real data:

- Hallucinations: RAG retrieves relevant documents before generation, reducing the risk of made-up or unsupported answers.

- Outdated knowledge: It connects to external knowledge bases to keep responses accurate and up to date.

- Context limitation: It supplies relevant context that the LLM never saw during training, thereby improving factual grounding.

- Complex reasoning: It enables the model to combine multiple information sources to produce multi-step answers.

- Trust and explainability: Because answers are based on retrieved documents, the system can cite its sources, making responses more transparent and verifiable.

By combining semantic retrieval with generative models, RAG helps users get precise, context-aware answers rather than vague summaries. Our RAG techniques article describes these methods in more detail.

Can semantic search be used without RAG?

Yes, semantic search can work perfectly well without RAG in many real-world applications. It’s often used when retrieving relevant information is sufficient and generating new text isn’t necessary.

For instance, knowledge bases, search engines, and customer support chatbots rely on semantic search to quickly find the most relevant documents. It’s also used in question-answering systems, e-commerce search, and internal document retrieval, where users want direct access to existing content rather than a generated summary.

Because it only needs an embedding model and a retrieval algorithm, with no LLM generation step per query, it’s lightweight, scalable, and cost-efficient.

However, when users expect natural language answers or context synthesis across multiple documents, semantic search alone falls short. That’s when RAG becomes the logical next step.

Can RAG work without semantic search?

Yes, it can, but it’s not ideal. RAG can technically operate using keyword retrieval or metadata filtering instead of semantic search, but the results are less precise.

Without vector embeddings or semantic similarity, the retriever may fetch documents that match words but miss intent. And intent is the key in any retrieval.

Some teams use BM25 or other keyword-based search engines as the retrieval layer for smaller or domain-specific datasets where context is already well structured. This approach simplifies infrastructure and lowers cost, but it can reduce recall and context coverage.
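For reference, here is roughly what a keyword retrieval layer computes. This is a minimal sketch of Okapi BM25 scoring, assuming plain whitespace tokenization and the common defaults k1 = 1.2 and b = 0.75; production engines such as Lucene add analyzers, stemming, and inverted indexes on top.

```python
import math
from collections import Counter


def bm25_scores(query: str, docs: list[str], k1: float = 1.2, b: float = 0.75) -> list[float]:
    """Score every document against the query with Okapi BM25."""
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    avgdl = sum(len(tokens) for tokens in tokenized) / n_docs
    # Document frequency: how many documents contain each term.
    df = Counter(term for tokens in tokenized for term in set(tokens))

    scores = []
    for tokens in tokenized:
        tf = Counter(tokens)
        score = 0.0
        for term in query.lower().split():
            if term not in tf:
                continue  # keyword retrieval gives no credit for synonyms
            # IDF with "+1" inside the log (Lucene-style) so it stays positive.
            idf = math.log((n_docs - df[term] + 0.5) / (df[term] + 0.5) + 1)
            freq = tf[term]
            # k1 saturates repeated terms; b normalizes for document length.
            length_norm = 1 - b + b * len(tokens) / avgdl
            score += idf * freq * (k1 + 1) / (freq + k1 * length_norm)
        scores.append(score)
    return scores


docs = [
    "reset your password from the login page",
    "our quarterly revenue grew",
    "contact support to unlock your account",
]
print(bm25_scores("reset password", docs))  # only the first document scores above 0
print(bm25_scores("I can't log in", docs))  # [0.0, 0.0, 0.0]: right intent, wrong words
```

The second query illustrates the gap described above: a user who "can't log in" clearly wants the password or unlock documents, but no query term appears in them, so BM25 scores everything zero.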

Skipping semantic search means the system depends more on the generator to interpret loosely matched data. This is a risky trade-off that can lead to hallucinations or shallow grounding.

For most real-world RAG systems, semantic search is the foundation that makes retrieval meaningful before generation is even a thought.

What are the pros of semantic search?

You already know the accuracy aspect of semantic search. Let’s look at some more pros:

- Understands user intent way better: Semantic search interprets user queries based on meaning, so the retrieved results are better than in plain keyword matching.

- Deals with ambiguity like a pro: Through NLP, it can distinguish between different meanings, such as ‘Apple,’ the company, versus ‘apple,’ the fruit.

- Enables scalability: Semantic search can retrieve results quickly and accurately, even across large datasets.

Semantic search is the foundation for both modern search technologies and RAG systems.

What are the pros of RAG?

RAG provides language models with a way to remain factual, grounded, and context-aware without retraining every time new data arrives.

Let’s look at some pros of RAG:

- Has access to up-to-date knowledge: It retrieves relevant documents from external knowledge sources at query time, so answers can reflect new data as soon as it is indexed.

- Fewer hallucinations: By basing answers on the retrieved context, RAG minimizes the introduction of made-up or outdated information.

- Adapts to various domains: It plugs into domain-specific datasets, from medical records to legal documents, without the need to fine-tune the LLM.

Overall, RAG bridges semantic search and generative AI to help teams create applications that not only find data but explain it in natural language.

What are the limitations of semantic search?

Let’s look at some semantic search limitations.

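- Complex queries might lead to weaker matches: Useful as it is, semantic search can falter when queries are long or combine several questions, because a single query embedding has to represent all of those intents at once.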

- Costly at scale: Creating, storing, and updating vector embeddings for large datasets requires compute and storage that grow with the data, which can be expensive.

- Potential for low-quality results: If the stored data is sparse or of poor quality, you might get low-quality results.

- Generative reasoning is lacking: It can’t produce detailed answers, which makes it less helpful in question-answering or chatbot use cases.
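To make the cost point concrete, here is a back-of-the-envelope estimate of raw vector storage. The chunk count and the 768-dimension size are illustrative assumptions; actual dimensions depend on the embedding model, and real vector indexes add overhead for index structures and metadata.

```python
def raw_vector_storage_gb(num_chunks: int, dims: int, bytes_per_value: int = 4) -> float:
    """Raw size of float32 embeddings in gigabytes (1 GB = 1e9 bytes).

    Ignores index structures, metadata, and replicas, which add more on top.
    """
    return num_chunks * dims * bytes_per_value / 1e9


# Illustrative numbers only: 10 million chunks, 768-dimensional vectors.
print(raw_vector_storage_gb(10_000_000, 768))  # 30.72
```

The estimate scales linearly: doubling either the corpus or the vector dimensions doubles it, and switching to a different embedding model means re-embedding every chunk.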

These limitations make semantic search excellent for discovery, but not enough when users expect complete, natural-language answers.

What are the limitations of RAG?

RAG can deliver more accurate answers, but that accuracy comes at the cost of added layers of complexity.

Let’s explore some other limitations of RAG:

- Higher latency and cost: Each query can involve an embedding call, a vector search, and an LLM call with a longer prompt, and every one of these steps adds time and money.

- Variable retrieval quality: If relevant documents are missing or poorly embedded, the LLM can still hallucinate.

- Complex infrastructure: With increasing overhead from vector databases, chunking, and retrieved context pipelines, the RAG system can become really complex.

- Difficult to evaluate: You need continuous assessment of the RAG system to maintain retrieval quality and accuracy.

If you’re working in high-stakes domains that rely on knowledge-based systems, RAG is a strong fit. But it might be overkill for simpler applications.

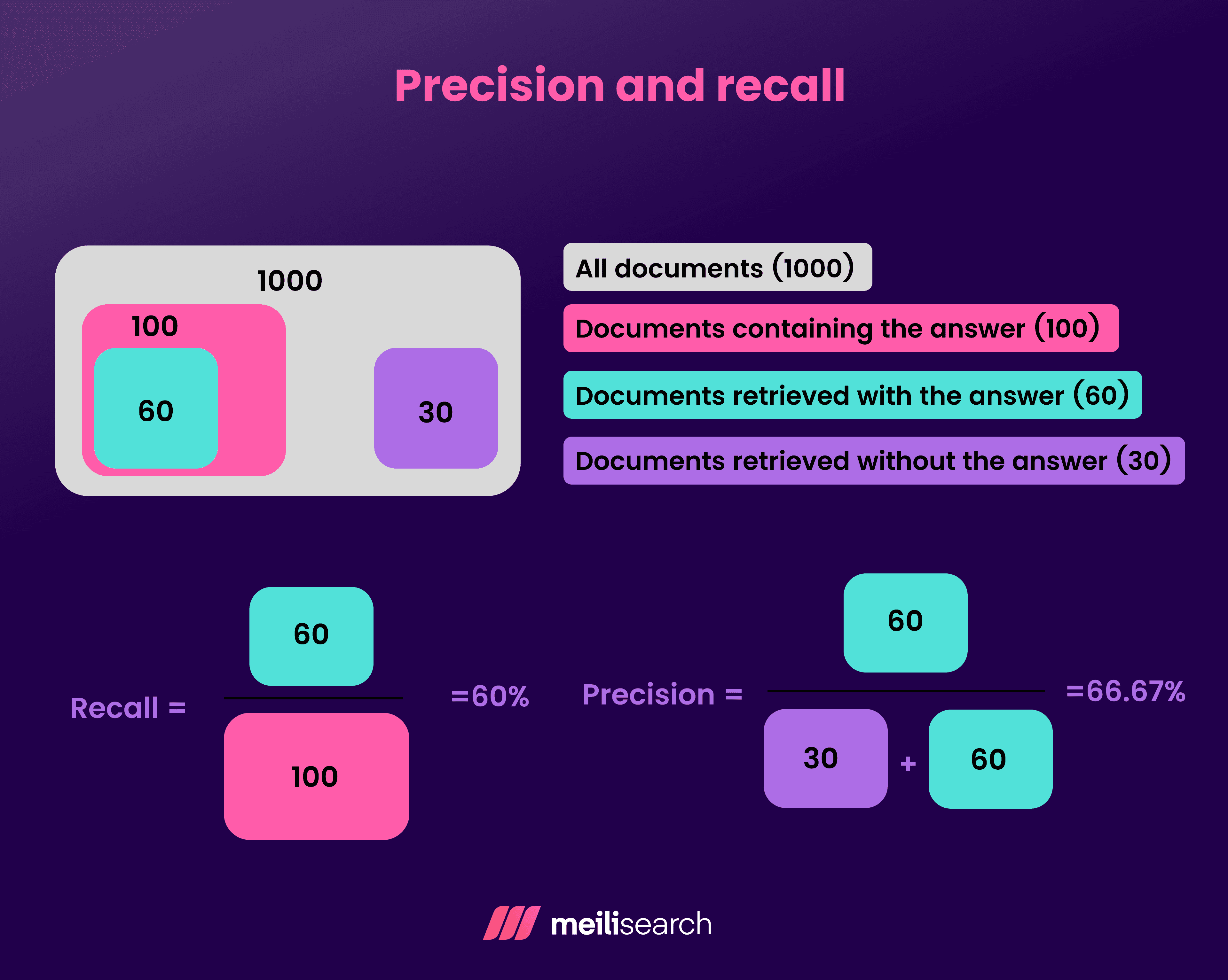

How is semantic search evaluated for effectiveness?

Semantic search effectiveness is measured by how accurately it retrieves and ranks relevant documents for a given user query. After all, that’s what it is for.

The primary metrics include precision (the fraction of retrieved results that are relevant) and recall (the fraction of all relevant documents that were retrieved).

Ranking metrics, such as Mean Reciprocal Rank (MRR) and Normalized Discounted Cumulative Gain (nDCG), assess whether the most relevant results appear near the top.
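As a rough illustration, these metrics can be computed from a single ranked result list and a set of human relevance judgments. The document IDs and judgments below are made up, and `ndcg_at_k` uses the linear-gain form of DCG (some tools use 2^rel - 1 as the gain instead).

```python
import math


def precision_at_k(ranked: list[str], relevant: set[str], k: int) -> float:
    """Fraction of the top-k results that are relevant."""
    return sum(doc in relevant for doc in ranked[:k]) / k


def recall_at_k(ranked: list[str], relevant: set[str], k: int) -> float:
    """Fraction of all relevant documents that appear in the top k."""
    return sum(doc in relevant for doc in ranked[:k]) / len(relevant)


def reciprocal_rank(ranked: list[str], relevant: set[str]) -> float:
    """1 / position of the first relevant result (0 if none). MRR averages this over queries."""
    for position, doc in enumerate(ranked, start=1):
        if doc in relevant:
            return 1 / position
    return 0.0


def ndcg_at_k(ranked: list[str], gains: dict[str, float], k: int) -> float:
    """DCG of the actual ranking divided by the DCG of the ideal ranking."""
    dcg = sum(gains.get(doc, 0.0) / math.log2(i + 2) for i, doc in enumerate(ranked[:k]))
    ideal = sorted(gains.values(), reverse=True)[:k]
    idcg = sum(g / math.log2(i + 2) for i, g in enumerate(ideal))
    return dcg / idcg if idcg else 0.0


ranked = ["d3", "d1", "d7", "d2"]  # what the search system returned
relevant = {"d1", "d2"}            # made-up human judgments
print(precision_at_k(ranked, relevant, 2))  # 0.5
print(recall_at_k(ranked, relevant, 2))     # 0.5
print(reciprocal_rank(ranked, relevant))    # 0.5
print(round(ndcg_at_k(ranked, {"d1": 1.0, "d2": 1.0}, 4), 3))  # 0.651
```

Precision and recall ignore order within the top k, while MRR and nDCG penalize the system for placing the irrelevant `d3` first.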

Teams also use user satisfaction surveys or click-through rates (CTRs) to measure success. These evaluations often compare how well embeddings, retrievers, and algorithms capture intent across datasets.

In short, good semantic search is about balancing accuracy, relevance, and scalability.

How is RAG evaluated for effectiveness?

Evaluating a RAG system requires measuring not only retrieval accuracy but also how well generated answers remain grounded in the retrieved context.

The key metrics for this include factual accuracy, grounding score, and hallucination rate (the frequency with which the LLM adds unsupported information). Teams also track context precision and coverage to assess how the retrieved chunks influence the model’s response.
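A simplified sketch of two of these metrics follows. Context precision here is just the share of retrieved chunks judged relevant, and the hallucination rate uses a crude word-overlap check as a stand-in for a real grounding judge. The function names and the 0.6 threshold are hypothetical; evaluation frameworks define these metrics more carefully.

```python
import re


def split_sentences(text: str) -> list[str]:
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def context_precision(retrieved: list[str], relevant: set[str]) -> float:
    """Share of retrieved chunks that a reviewer marked as relevant."""
    if not retrieved:
        return 0.0
    return sum(chunk in relevant for chunk in retrieved) / len(retrieved)


def unsupported_sentences(answer: str, context: list[str], threshold: float = 0.6) -> list[str]:
    """Flag answer sentences whose words mostly do not appear in the context.

    Crude lexical proxy for grounding (the threshold is arbitrary). Real
    evaluations typically rely on human review, NLI models, or an LLM judge.
    """
    context_words = set(re.findall(r"\w+", " ".join(context).lower()))
    flagged = []
    for sentence in split_sentences(answer):
        words = re.findall(r"\w+", sentence.lower())
        if words and sum(w in context_words for w in words) / len(words) < threshold:
            flagged.append(sentence)
    return flagged


def hallucination_rate(answer: str, context: list[str]) -> float:
    """Fraction of answer sentences flagged as unsupported."""
    sentences = split_sentences(answer)
    return len(unsupported_sentences(answer, context)) / len(sentences) if sentences else 0.0


context = ["Returns are accepted within 30 days of purchase with a receipt."]
answer = "Returns are accepted within 30 days. Shipping is always free."
print(unsupported_sentences(answer, context))  # ['Shipping is always free.']
print(hallucination_rate(answer, context))     # 0.5
```

The second sentence is flagged because nothing in the retrieved context supports it, which is exactly the kind of addition a hallucination-rate metric is meant to catch.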

Other evaluation methods test retrieval quality, latency, and user trust over time. These insights help teams balance precision and performance across different data sources.

For a deeper look into evaluation frameworks, read our guide on RAG evaluation, which breaks down real-world techniques for measuring grounded generation.

What are the latest trends in semantic search and RAG?

Both semantic search and RAG are growing in popularity, especially now that AI and LLMs are a core part of so many enterprise applications.

The two major trends you need to keep up with are:

- Integration between LLMs and retrieval systems: Retrieval systems increasingly feed their results to LLMs, so users get answers that are grounded in retrieved documents and also sound conversational, rather than just a list of links.

- Hybrid approach to retrieval: By combining keyword search, vector embeddings, and reranking techniques, teams can often improve precision and recall across both structured and unstructured data.
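One common way to implement the hybrid approach is reciprocal rank fusion (RRF), which merges a keyword ranking and a vector ranking using only each document's position in each list. This is a minimal sketch, assuming both ranked lists already exist (the document IDs are hypothetical) and using k = 60, a value commonly used in practice. A reranking model could then reorder the fused list; that step is not shown.

```python
def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge ranked lists: each document scores sum(1 / (k + rank)) across lists."""
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Highest fused score first.
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)


keyword_results = ["doc_a", "doc_b", "doc_c"]  # e.g. from BM25 (hypothetical)
vector_results = ["doc_c", "doc_a", "doc_d"]   # e.g. from embedding search (hypothetical)
print(reciprocal_rank_fusion([keyword_results, vector_results]))
# ['doc_a', 'doc_c', 'doc_b', 'doc_d']
```

`doc_a` and `doc_c` rise to the top because both retrievers found them, which is the behavior hybrid search relies on. RRF also avoids having to normalize BM25 scores and cosine similarities onto a common scale.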

These trends, along with others, keep improving both semantic search and RAG. In essence, they are all about making AI search engines more intelligent and user-friendly.

Frequently Asked Questions (FAQs)

The following FAQs should address any remaining confusion about semantic search and RAG.

Is RAG the same as semantic search?

No. Semantic search ranks documents based on their meaning. RAG retrieves the documents first, and then uses this retrieved context to generate answers the users are looking for.

Why is semantic search important for AI?

Semantic search improves how AI understands user queries. It makes the AI interpret the intent behind the search instead of just taking the keywords at face value. Naturally, this brings a significant boost in accuracy and the user experience.

Why is RAG used in generative AI?

Generative AI needs the right context to generate information. RAG helps LLMs combine retrieved information with the user query to generate the required answers. This significantly reduces AI hallucinations and lays the foundation for real-time AI agents or chatbots.

What is the role of embeddings in semantic search?

Embeddings are the backbone of semantic search because they convert text into vector representations that capture meaning. They enable the retriever to find semantically related content even when users don’t use the exact keywords in their search. You can think of them as coordinates, produced by a neural network, on a map of meaning where related ideas sit close together.

What is the role of embeddings in RAG?

In RAG systems, embeddings connect the user’s query to the knowledge base. Queries and document chunks are embedded with the same model, so the retriever can find the chunks that best match a query’s meaning before passing them to the LLM to generate an informed answer.

How does semantic search compare to vector search?

Vector search is the underlying mechanism: it finds the stored vectors closest to a query vector, whatever those vectors represent. Semantic search applies this to embeddings of text, which work like a map where similar ideas sit close together, so it can find answers that make sense even if the exact words aren't there. In practice, most semantic search systems are built on vector search, as detailed in this semantic vs. vector search article.

Harnessing the power of precision

The main goal of most advancements today is to guide AI towards greater precision.

RAG and semantic search are both unique in their own ways, with distinct sets of pros and limitations. However, this does not stop them from being a force to be reckoned with when used together in a hybrid setting.