Modular RAG: What it is, how it works, architecture & more

A guide to modular RAG. Discover what it is, how it works, its advantages and disadvantages, how to implement it, and much more.

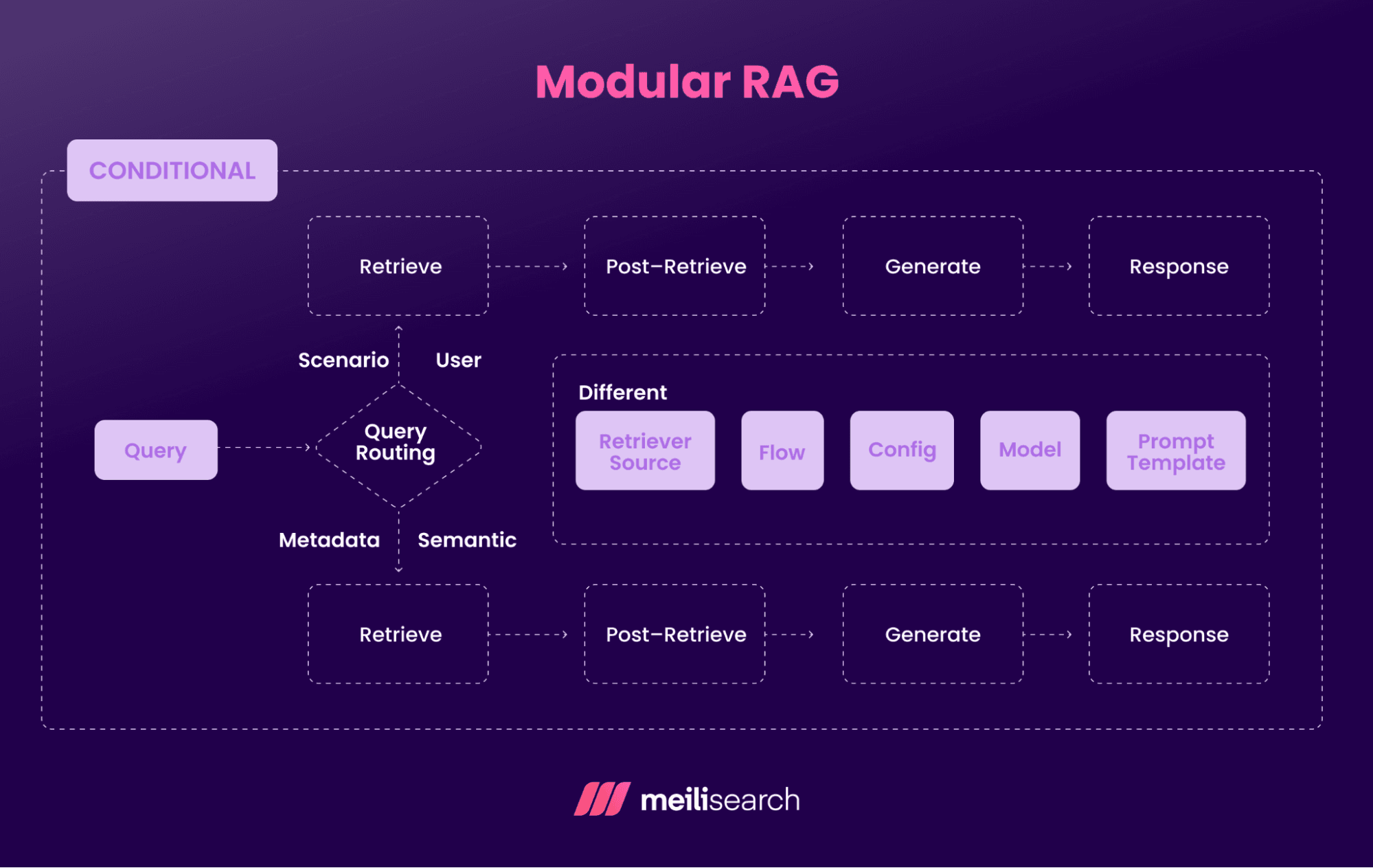

Modular RAG is a method of building retrieval-augmented generation (RAG) systems in which the entire setup is divided into smaller, independent components. This makes it easier to design, customize, or upgrade individual pieces.

The key components of modular RAG are the retriever, which finds useful information, the generator, which turns the information into responses, and additional modules such as rerankers, memory, or vector databases, which improve the accuracy of the artificial intelligence system. Each module handles a specific function that contributes to the overall quality.

Modular RAG works by retrieving relevant context from external knowledge sources, passing it through a generative model, and allowing different modules to handle tasks such as filtering, summarization, or reasoning, depending on the task's requirements.

The key advantages of modular RAG are increased flexibility, easier debugging, and better scalability. The key disadvantages of modular RAG are added complexity in system design and potential challenges in integrating the different modules.

You can implement modular RAG by combining retrievers and LLMs into a RAG pipeline that fits your use case.

This article will discuss everything you should know about the modular RAG framework.

What is modular RAG?

Modular RAG is a way of building RAG systems that treats each part of the process as a separate but connected module. Instead of being locked into one rigid design, you choose the combination of components that works best for you.

In modular RAG, you can create a pipeline that adapts to your needs, be it faster retrieval or more accurate answers. Because you are not forced to use the same retrieval method, vector database, or language model every time, you get finer control over how information is retrieved, processed, and turned into answers.

For example, you might love how one company's embedding model works but dislike its retrieval system. With modular RAG, you can keep the embeddings and switch to a different retrieval process. You can even experiment with multiple language models to see which one performs best for your use case.

A modular RAG system is made up of three kinds of components:

- The retriever that gathers context

- The generator that creates responses

- Additional modules, such as filters, memory, or rerankers, that refine the output to suit the user’s needs

Now, let’s see the working mechanism behind modular RAG.

How does modular RAG work?

Modular RAG works by breaking the RAG process into separate components, with each component handling a specific task.

The process typically involves the following components and steps:

- Retriever: The retriever searches a knowledge base or vector database for relevant information.

- Reranker or filter: This is optional but recommended. Before sending the results forward, a reranker or filter can reorder, clean, or narrow down the retrieved data so that only the most useful content is passed along to the next component.

- Generator: The generator (typically a large language model such as OpenAI GPT) takes the refined context and uses it to produce a response.

- Additional modules: Depending on the use case, additional modules such as memory modules or summarizers can be slotted in to improve accuracy or break down complex queries.

- Output delivery: Finally, the GenAI system returns an accurate response that is grounded in the retrieved information.
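The flow above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the `Pipeline` class, the keyword-overlap retriever and reranker, and the template-based generator are all hypothetical stand-ins (a real system would call a search engine and an LLM). The point is that each module has a narrow contract and can be swapped without touching the others.

```python
from typing import Callable, List, Optional

# Hypothetical contracts: each module is just a callable.
Retriever = Callable[[str], List[str]]
Reranker = Callable[[str, List[str]], List[str]]
Generator = Callable[[str, List[str]], str]


def keyword_retriever(corpus: List[str]) -> Retriever:
    """Build a retriever that returns documents sharing a word with the query."""
    def retrieve(query: str) -> List[str]:
        terms = set(query.lower().split())
        return [doc for doc in corpus if terms & set(doc.lower().split())]
    return retrieve


def overlap_reranker(query: str, docs: List[str]) -> List[str]:
    """Order documents by how many query words they contain, most first."""
    terms = set(query.lower().split())
    return sorted(docs, key=lambda d: len(terms & set(d.lower().split())), reverse=True)


def template_generator(query: str, context: List[str]) -> str:
    """Stand-in for an LLM call: it only echoes the top context passage."""
    if not context:
        return "I could not find anything relevant."
    return f"Based on the retrieved context: {context[0]}"


class Pipeline:
    """Wires the modules together; the reranker is optional."""

    def __init__(self, retriever: Retriever, generator: Generator,
                 reranker: Optional[Reranker] = None, top_k: int = 3):
        self.retriever = retriever
        self.generator = generator
        self.reranker = reranker
        self.top_k = top_k

    def answer(self, query: str) -> str:
        docs = self.retriever(query)
        if self.reranker is not None:
            docs = self.reranker(query, docs)
        return self.generator(query, docs[: self.top_k])
```

Replacing `overlap_reranker` with a different function, or passing no reranker at all, changes the behavior of one stage while leaving the rest of the pipeline untouched.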

Let’s look at an example of modular RAG.

What is an example of modular RAG?

An example of modular RAG in action can be an online shopping assistant.

Let’s say you search for ‘running shoes under $100.’ A routing module first decides the best way to handle that query: either keyword search or semantic similarity search.

The retriever then digs through the store’s product database.

Next, the processing modules clean up the results, filter out any irrelevant items, and then rank the best fits.

The generator takes that context and produces a useful reply such as, ‘Here are three running shoes under $100 that match your style.’

If the store later wants to add a recommendation setup or customer reviews as part of its process workflow, it can be done without changing the overall setup.
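As a rough sketch of this shopping assistant, the snippet below chains a retriever, a processing module, and a generator. Everything here is assumed for illustration: the `CATALOG` data, the function names, and the template reply that stands in for an LLM. The routing step is left out to keep the example short.

```python
import re
from typing import Dict, List, Optional

# Hypothetical catalog; a real store would query its product database instead.
CATALOG: List[Dict] = [
    {"name": "Trail Runner", "category": "running shoes", "price": 89.0, "rating": 4.6},
    {"name": "Road Sprint", "category": "running shoes", "price": 120.0, "rating": 4.8},
    {"name": "Daily Jogger", "category": "running shoes", "price": 59.0, "rating": 4.2},
    {"name": "Canvas Sneaker", "category": "casual shoes", "price": 45.0, "rating": 4.0},
]


def parse_price_limit(query: str) -> Optional[float]:
    """Extract a price ceiling from phrases such as 'under $100'."""
    match = re.search(r"under \$?(\d+(?:\.\d+)?)", query.lower())
    return float(match.group(1)) if match else None


def retrieve(query: str) -> List[Dict]:
    """Retriever: keep products whose category appears in the query."""
    return [p for p in CATALOG if p["category"] in query.lower()]


def filter_and_rank(products: List[Dict], price_limit: Optional[float]) -> List[Dict]:
    """Processing module: drop items at or above the limit, then sort by rating."""
    if price_limit is not None:
        products = [p for p in products if p["price"] < price_limit]
    return sorted(products, key=lambda p: p["rating"], reverse=True)


def generate(products: List[Dict]) -> str:
    """Generator stand-in: a fixed template instead of an LLM call."""
    if not products:
        return "Sorry, nothing matched your search."
    names = ", ".join(p["name"] for p in products)
    return f"Here are {len(products)} options that match: {names}."


def shopping_assistant(query: str) -> str:
    limit = parse_price_limit(query)
    return generate(filter_and_rank(retrieve(query), limit))
```

Adding a recommendation or review module later would mean inserting one more function between `filter_and_rank` and `generate`, without rewriting the other steps.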

What are the advantages of modular RAG?

The key advantages of modular RAG over traditional, monolithic RAG pipelines are the following:

- Easy maintenance: You can fix, upgrade, or replace a single part without worrying that the entire system will break.

- Faster development: Different teams can work on separate modules in parallel, allowing for faster deployment and optimization of new features.

- Easy customization: Different industries have different needs for modular RAG. A legal assistant may add citation tracking, while an e-commerce chatbot may use recommendation modules. Modular RAG makes it easy to tailor the modules to your needs.

- Scalable performance: Modules that handle heavier workloads can be given extra resources, while lighter ones can run with less.

- Reliability: Since the AI system is divided into separate components, weak spots can be easily spotted and fixed, making it more reliable over time.

What are the disadvantages of modular RAG?

The disadvantages of implementing modular RAG are the following:

- Higher setup costs: More modules require more infrastructure and more skilled people to run them, which drives costs up.

- Complexity overload: Managing several modules at once is not easy, because each part must be managed both individually and as part of the whole. Troubleshooting can also be complex, particularly when identifying which module is the root cause of an issue.

- Learning curve: Teams using modular RAG must understand how each module works and fits together, which can slow down onboarding and often requires knowledge of data science and NLP.

What is the architecture of modular RAG?

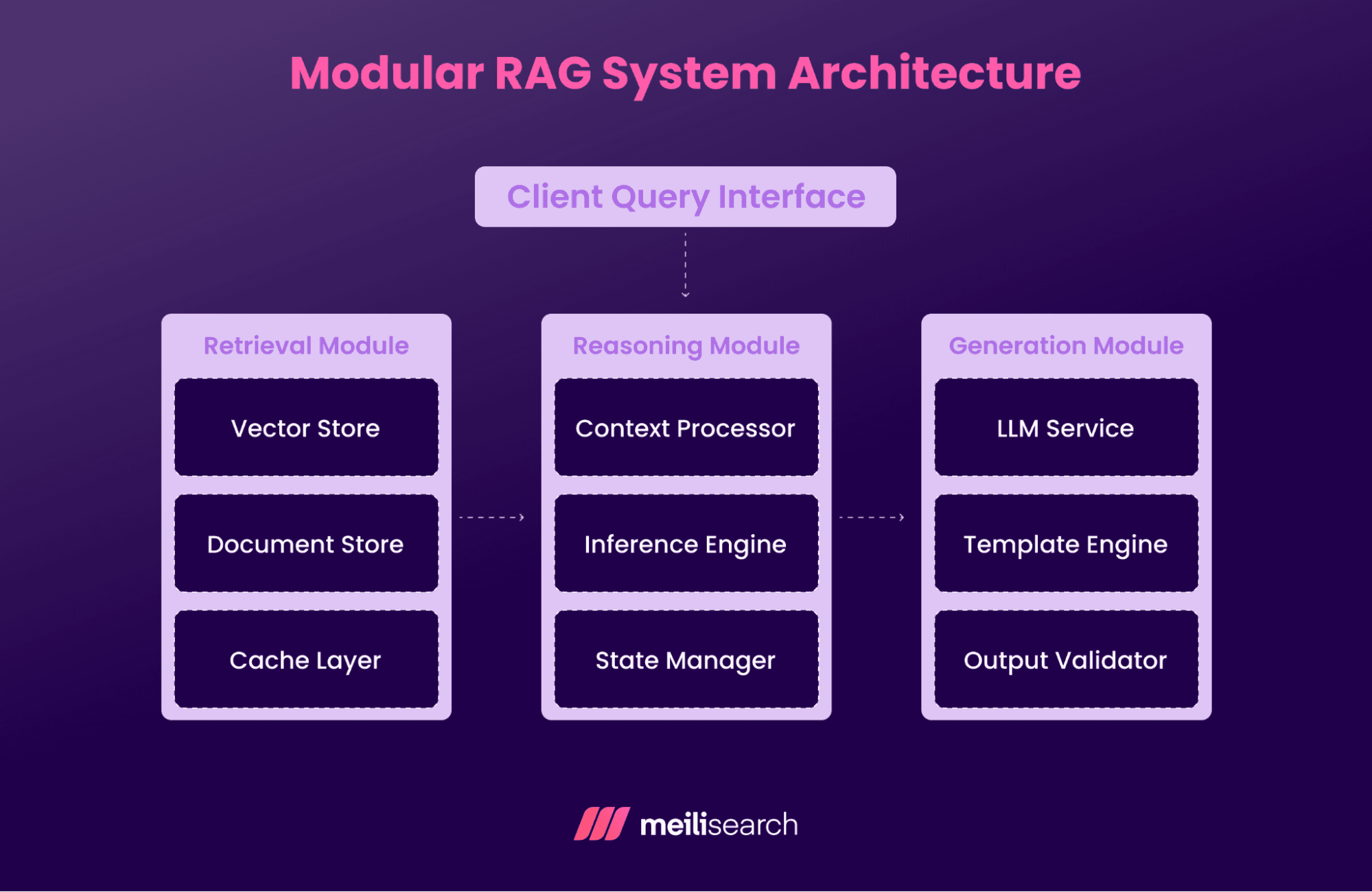

The architecture of modular RAG is built around breaking the whole system into smaller parts that work together. Here is how the typical architecture looks:

- Client query interface: This is where the user’s query first comes in.

- Retrieval module: The query from the client query interface is sent to the retrieval module. This module includes a vector search store (used for semantic search), a document store (used for traditional text search), and a cache layer (used to quickly return results for recent or repeated queries). Together, they act like a library housing the important information.

- Reasoning module: Here, the context processor organizes what was retrieved, the inference engine helps the system reason through it, and the state manager keeps track of any history or ongoing context.

- Generation module: This is the last phase. The LLM generates a draft response, the template engine structures it neatly, and the output validator checks it for accuracy before delivering the final response to the user.

The architecture of modular RAG makes it easy to use for domain-specific use cases, such as customer support or enterprise knowledge graphs.
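Two pieces of this architecture, the cache layer in the retrieval module and the output validator in the generation module, can be sketched as follows. The class and function names are hypothetical, the search backend is passed in as a plain callable, and the validator only applies a very simple rule (no context, no answer); real validators are usually far stricter.

```python
from typing import Callable, Dict, List


class CachedRetrievalModule:
    """Retrieval module with a cache layer in front of a search backend."""

    def __init__(self, search_backend: Callable[[str], List[str]]):
        self._search = search_backend
        self._cache: Dict[str, List[str]] = {}
        self.backend_calls = 0  # exposed so the cache effect can be observed

    def retrieve(self, query: str) -> List[str]:
        # Normalize case and whitespace so repeated queries hit the cache.
        key = " ".join(query.lower().split())
        if key not in self._cache:
            self.backend_calls += 1
            self._cache[key] = self._search(key)
        return self._cache[key]


def validate_output(draft: str, context: List[str]) -> str:
    """Output validator: fall back when the draft is empty or unsupported by context."""
    if not context or not draft.strip():
        return "I don't have enough information to answer that."
    return draft.strip()
```

Because the cache wraps any backend with the same signature, the vector store or document store behind it can be replaced without changing callers.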

How can modular RAG be implemented?

Modular RAG can be implemented by combining several independent pillars. Each pillar addresses a different aspect of the system and can work on its own or alongside the others.

Let’s look at these pillars:

1. Data pipeline

The data pipeline handles how datasets are ingested and processed. You can create multiple data pipelines based on the type of content.

For example, you might run one ETL pipeline for customer support documents and another for technical manuals. Each content type gets its own processing pipeline that knows how to handle that specific content.

Techniques such as chunking and carefully selecting the chunk size can significantly improve retrieval quality.
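To make the chunking step concrete, here is a minimal word-based chunker with overlap. The function name and the default `chunk_size` and `overlap` values are assumptions chosen for illustration; suitable values depend on your content and embedding model, and many pipelines chunk by tokens or sentences instead of words.

```python
from typing import List


def chunk_words(text: str, chunk_size: int = 200, overlap: int = 20) -> List[str]:
    """Split text into chunks of up to `chunk_size` words, sharing `overlap` words."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")

    words = text.split()
    step = chunk_size - overlap  # how far the window advances each time
    chunks: List[str] = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last window already reached the end of the text
    return chunks
```

The overlap keeps a sentence that straddles a boundary retrievable from at least one chunk, at the cost of indexing some words twice.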

2. Microservices

The system is divided into independent services that communicate with each other via API calls. Your ‘retrieval’ service can handle document searches, while the ‘reasoning’ service processes the logic, and your ‘generation’ service crafts the final response.

Meilisearch can be used as the retrieval service because it can quickly index and retrieve documents without heavy infrastructure overhead.
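As a sketch of what such a retrieval service could look like, the snippet below calls Meilisearch's search endpoint (`POST /indexes/{index_uid}/search`) using only the Python standard library. The function names, the `support_docs` index, and the local URL used in the usage note are placeholders assumed for this example, and error handling is omitted.

```python
import json
import urllib.request
from typing import List, Optional


def build_search_request(base_url: str, index_uid: str, query: str,
                         limit: int = 5, api_key: Optional[str] = None) -> urllib.request.Request:
    """Build the HTTP request for a Meilisearch search call without sending it."""
    body = json.dumps({"q": query, "limit": limit}).encode("utf-8")
    headers = {"Content-Type": "application/json"}
    if api_key:
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/indexes/{index_uid}/search",
        data=body,
        headers=headers,
        method="POST",
    )


def retrieve(base_url: str, index_uid: str, query: str,
             limit: int = 5, api_key: Optional[str] = None) -> List[dict]:
    """Retrieval service: send the search request and return the matching documents."""
    request = build_search_request(base_url, index_uid, query, limit, api_key)
    with urllib.request.urlopen(request, timeout=10) as response:
        payload = json.load(response)
    return payload.get("hits", [])
```

With an instance running locally, a call such as `retrieve("http://localhost:7700", "support_docs", "reset password")` would return the top hits, which the reasoning and generation services can then consume.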

3. Routing layer

The routing layer decides which module or pipeline should handle each user query, using rules or classifiers. Keyword matching is usually enough for simple queries, while machine learning classifiers tend to work better for complex ones.
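A rule-first router with an optional classifier fallback might look like the sketch below. The `Router` class, the pipeline labels, and the regular expressions in the usage note are hypothetical; the classifier is any callable that returns a label or `None`, so a trained model could be plugged in later.

```python
import re
from typing import Callable, List, Optional, Tuple

Rule = Tuple[re.Pattern, str]  # (compiled pattern, name of the target pipeline)


class Router:
    """Try keyword rules first, then an optional classifier, then a default."""

    def __init__(self, rules: List[Rule],
                 classifier: Optional[Callable[[str], Optional[str]]] = None,
                 default: str = "general"):
        self.rules = rules
        self.classifier = classifier
        self.default = default

    def route(self, query: str) -> str:
        for pattern, pipeline in self.rules:
            if pattern.search(query):
                return pipeline
        if self.classifier is not None:
            label = self.classifier(query)
            if label:
                return label
        return self.default
```

For example, a rule list such as `[(re.compile(r"\b(refund|invoice|billing)\b", re.I), "billing")]` would send "Where is my refund?" to a billing pipeline, while unmatched queries fall through to the classifier or the default.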

4. Containers for technical implementation

Containers for technical implementation manage how services are packaged and deployed. They make it easy to scale specific modules up or down, swap one out if needed, or update a service without breaking the whole setup.

5. Monitoring and orchestration tools

Monitoring and orchestration tools handle coordination, error handling, and load balancing across modules. They track how each module performs, catch errors before users encounter them, and coordinate complex queries that need multiple modules to work together.
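Per-module tracking can start as simply as a decorator that counts calls, errors, and time spent. The sketch below is an in-process illustration only: `METRICS` and `monitored` are hypothetical names, and a real deployment would typically export these numbers to a dedicated monitoring system instead of a dictionary.

```python
import time
from collections import defaultdict
from functools import wraps

# In-memory metrics store, keyed by module name (illustrative only).
METRICS = defaultdict(lambda: {"calls": 0, "errors": 0, "total_seconds": 0.0})


def monitored(module_name: str):
    """Decorator that records call count, error count, and elapsed time per module."""
    def decorator(func):
        @wraps(func)
        def wrapper(*args, **kwargs):
            stats = METRICS[module_name]
            stats["calls"] += 1
            start = time.perf_counter()
            try:
                return func(*args, **kwargs)
            except Exception:
                stats["errors"] += 1
                raise  # surface the error to the caller after counting it
            finally:
                stats["total_seconds"] += time.perf_counter() - start
        return wrapper
    return decorator
```

Decorating the retriever, reranker, and generator separately makes it visible which module is slow or failing, which is the information an orchestrator needs to retry, reroute, or scale.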

How can modular RAG be evaluated?

Evaluating modular RAG requires tracking metrics across modules:

- Information retrieval quality via precision and recall.

- Post-retrieval validation to confirm that the retrieved documents are the correct ones.

- End-to-end evaluation of speed and quality, compared against baseline pipelines.
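For the retrieval-quality metric, precision and recall are commonly computed over the top `k` results. The helper below is a minimal sketch that assumes document IDs are unique strings and that you already have a labeled set of relevant IDs per query; precision is divided by `k`, which is one common convention.

```python
from typing import Sequence, Set, Tuple


def precision_recall_at_k(retrieved: Sequence[str], relevant: Set[str], k: int) -> Tuple[float, float]:
    """Return (precision@k, recall@k) for one query.

    precision@k = relevant documents in the top k / k
    recall@k    = relevant documents in the top k / all relevant documents
    """
    if k <= 0:
        raise ValueError("k must be positive")
    top_k = list(retrieved)[:k]
    hits = sum(1 for doc_id in top_k if doc_id in relevant)
    precision = hits / k
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall
```

Averaging these values over a fixed query set gives a per-retriever score that can be compared before and after swapping a module.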

The most comprehensive setup is a ‘sandboxed RAG playground,’ which includes static data, swappable modules, and an LLM to evaluate answers across different configurations.

You want to measure both individual module performance and overall system effectiveness.

For individual modules, you can track metrics such as retrieval accuracy, response time, and resource usage. Each specialized module should behave like an expert in its field, meaning your legal module should confidently handle legal queries, while the technical one should reliably handle engineering problems.

End-to-end RAG evaluation matters just as much as module benchmarks. Run complex queries and observe how well the modules work with each other. Check if the system returns an accurate answer.

In addition, compare the overall quality of information retrieval against a single-pipeline baseline to determine if modular RAG is an improvement.

Another consideration is comparing latency and quality trade-offs. Modular systems often introduce increased latency due to the coordination overhead, so you will need to balance response speed with answer quality.
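One way to put the latency and quality comparison into practice is a small harness that runs the same test set through each configuration. In this sketch, a pipeline is any callable from query to answer, and "accuracy" is a crude substring match against an expected answer; both are simplifying assumptions, and the function names are hypothetical.

```python
import time
from statistics import mean
from typing import Callable, Dict, List, Tuple

TestSet = List[Tuple[str, str]]  # (query, expected answer fragment)


def evaluate(pipeline: Callable[[str], str], test_set: TestSet) -> Dict[str, float]:
    """Measure answer accuracy and mean latency for one pipeline."""
    if not test_set:
        raise ValueError("test_set must not be empty")
    latencies: List[float] = []
    correct = 0
    for query, expected in test_set:
        start = time.perf_counter()
        answer = pipeline(query)
        latencies.append(time.perf_counter() - start)
        # Crude quality proxy: does the answer contain the expected fragment?
        correct += int(expected.lower() in answer.lower())
    return {"accuracy": correct / len(test_set), "mean_latency_s": mean(latencies)}


def compare(pipelines: Dict[str, Callable[[str], str]], test_set: TestSet) -> Dict[str, Dict[str, float]]:
    """Run every named pipeline on the same test set and collect the results."""
    return {name: evaluate(pipeline, test_set) for name, pipeline in pipelines.items()}
```

Passing a single-pipeline baseline and a modular configuration to `compare` yields side-by-side numbers, which makes it easier to judge whether the extra coordination overhead buys enough answer quality.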

Real-world testing with iterative feedback loops and augmentation scenarios is a strong way to evaluate modular RAG. Where possible, test with actual user queries rather than synthetic test cases.

Also, pay attention to edge cases where routing gets tricky or where the modules need to collaborate.

How does modular RAG compare to naive RAG?

Modular RAG uses multiple specialized components that capture domain-specific details and deliver more accurate results, while naive RAG relies on a single retrieval system that may overlook nuances.

Modular RAG is like a team of specialists, while naive RAG is more like relying on a generalist.

Compared to naive RAG, modular RAG is more powerful for complex queries, though it requires more effort to build and maintain.

Naive RAG, on the other hand, is quick and straightforward. Your query goes into one retrieval step, documents come back, and an answer is generated.

How does modular RAG compare to advanced RAG?

Modular RAG can be seen as an evolution of advanced RAG techniques, taking the same core idea but pushing it further by emphasizing modularity, scalability, and flexibility.

Advanced RAG represents an improvement over naive RAG by incorporating smarter algorithms, enhanced reranking, or multiple retrieval steps. But it is still often built as one big pipeline.

Modular RAG breaks this apart into smaller, specialized modules that can be swapped, upgraded, or scaled independently.

Both approaches are powerful, but modular RAG shines when you want control, scalability, and the freedom to adjust as your needs evolve.

Are there papers on modular RAG?

Yes, several papers cover modular RAG:

- ‘Modular RAG: Transforming RAG Systems into LEGO-like Reconfigurable Frameworks,’ published July 2024 by Yunfan Gao, Yun Xiong, Meng Wang, and Haofen Wang (arXiv). It introduces the modular RAG paradigm and lays out a flexible, multi-module architecture that evolves beyond the classic linear RAG flow.

- ‘Retrieval-Augmented Generation for Large Language Models: A Survey’ by Gao et al., published December 2023 on arXiv. It covers the broader RAG evolution, including the various modular approaches.

- ‘RAG and RAU: A Survey on Retrieval-Augmented Language Model in Natural Language Processing’ by Hu and Lu, published April 2024 on arXiv. It examines modular components and their interactions.

- ‘A Survey on RAG Meets LLMs: Towards Retrieval-Augmented Large Language Models’ by Ding et al., published May 2024 on arXiv. It explores modular architectures and training strategies.

Start building intelligent RAG systems with Meilisearch

If you are looking to build smarter RAG systems, Meilisearch is your go-to tool.

It is easy to set up, so you can focus on building intelligent retrieval workflows rather than wrestling with RAG infrastructure.

Meilisearch also shines at delivering highly relevant documents with low latency, making it ideal for feeding accurate context into your generative AI models.