RAG vs. prompt engineering: choosing the right approach for you

Explore the key differences between RAG and prompt engineering. See which approach best suits your needs, where to apply them, and more.

When working with large language models (LLMs), getting accurate, relevant results depends on several factors.

Two techniques that address them are retrieval-augmented generation (RAG) and prompt engineering.

RAG helps generate better answers by allowing the generative AI model to retrieve information from external knowledge sources, keeping responses fact-based and up to date.

Prompt engineering, on the other hand, focuses on how you communicate with the AI to produce clear and context-aware answers.

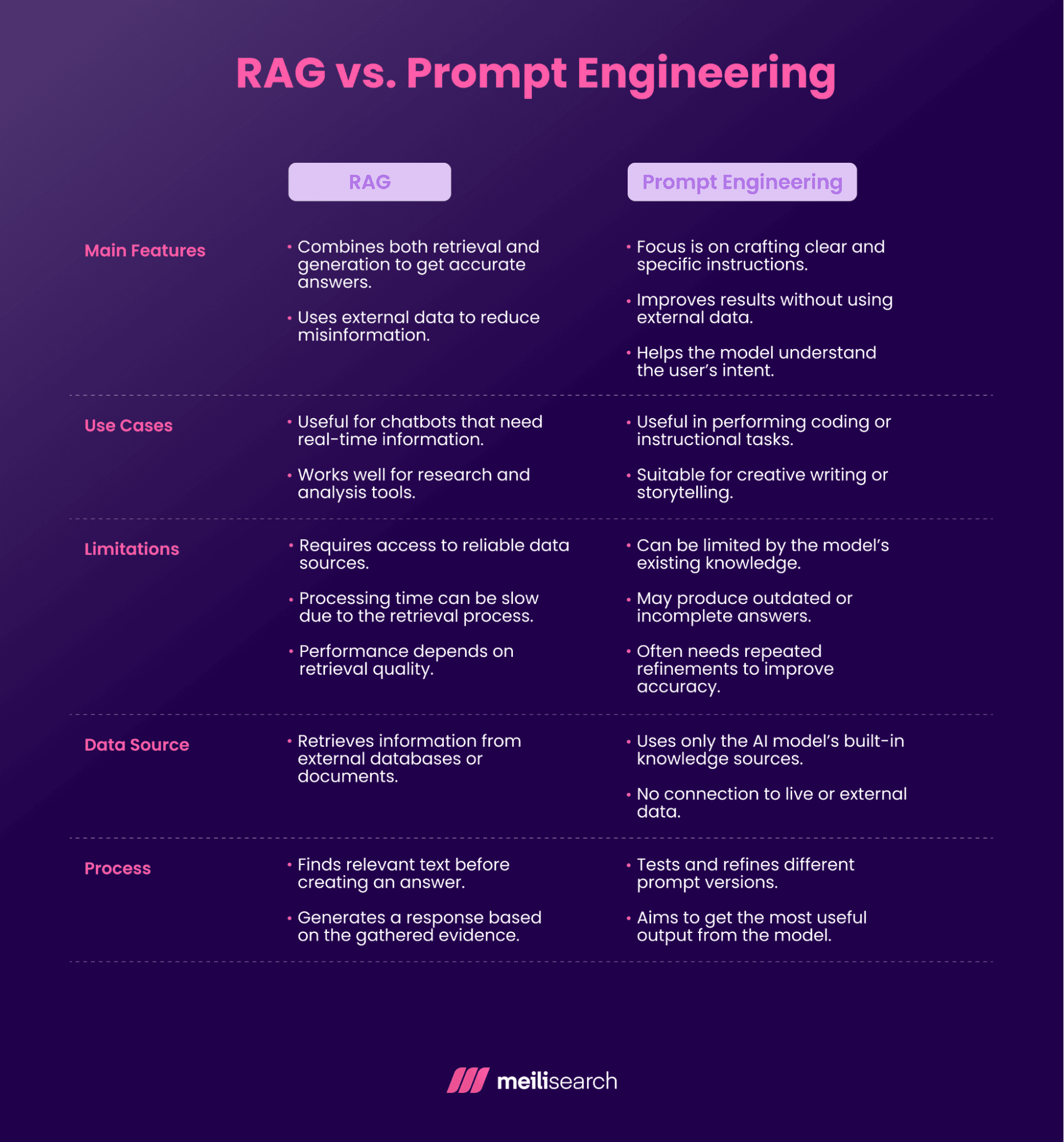

- RAG adds new knowledge without retraining, whereas prompt engineering improves the model’s use of its existing knowledge.

- RAG works well for research, legal, healthcare, and customer support; prompt engineering is well-suited for summarization, creative writing, Q&A, and data formatting.

- RAG is more complex and can add latency, while prompt engineering is cheaper but depends on the skill of the person writing the prompts.

- RAG requires more setup and ongoing maintenance, whereas prompt engineering is quicker to adopt and more cost-effective.

Choosing the right approach depends on what you want: accuracy, freshness, or speed. Often, combining both gives the best results. Let’s dig deeper into this.

What is prompt engineering?

Prompt engineering is the art of crafting clear instructions for large language models (LLMs) to achieve the best possible results.

Think of it like learning to ask the right questions. A vague prompt might produce a generic answer, while a carefully worded one can give you precisely what you need.

Prompt engineering is about understanding how the gen AI model interprets instructions and using that insight to guide it toward your desired output.

What is RAG (retrieval-augmented generation)?

RAG is a technique that improves LLM outputs by giving the model access to external resources such as databases, documents, and APIs. Instead of relying solely on their training data, AI models can draw on this additional information when generating answers.

For instance, we can give an AI model both memory and internet search skills, so that it knows today's date and can search the internet for the most recent data.

How does prompt engineering work?

Prompt engineering is about learning how to interact with an artificial intelligence model in a way that generates the best possible response.

As a rule of thumb, it is good practice to provide the LLM with enough context to answer your questions. LLMs are trained to follow user instructions, and they do so within the context they have.

For example, if you ask an LLM to act like a hip-hop artist, its response will sound like one. Ask it to write like a Nobel Prize–winning author, and it will adapt its responses accordingly.

Finding the right prompt involves experimentation. If the answer from an initial prompt is not accurate, you can refine your prompt to provide more context and make it clearer. You may also check for noise or contradictory statements and eliminate them.

For example, if you do not have a background in science, instead of asking the model to “Explain photosynthesis,” you could say, “You have over a decade of experience teaching biology. Explain photosynthesis like you are teaching someone in eleventh grade.” The goal is to give the AI enough context and direction to produce relevant responses.
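The refinement above can be sketched in a few lines of Python. This is only an illustration: `build_prompt` and its `role` and `audience` parameters are hypothetical names introduced here, not part of any library, and the resulting string would still need to be sent to whichever model you use.

```python
def build_prompt(task: str, role: str = "", audience: str = "") -> str:
    """Assemble a prompt from an optional role, a task, and an optional audience."""
    parts = []
    if role:
        parts.append(role.strip())
    parts.append(task.strip())
    if audience:
        parts.append(audience.strip())
    return " ".join(parts)


# Vague prompt: the model has to guess the depth and tone.
vague = build_prompt("Explain photosynthesis.")

# Refined prompt: same task, plus a role and a target audience.
refined = build_prompt(
    task="Explain photosynthesis",
    role="You have over a decade of experience teaching biology.",
    audience="like you are teaching someone in eleventh grade.",
)

print(vague)
print(refined)
```

Keeping the role, task, and audience as separate pieces makes it easier to change one of them at a time while experimenting.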

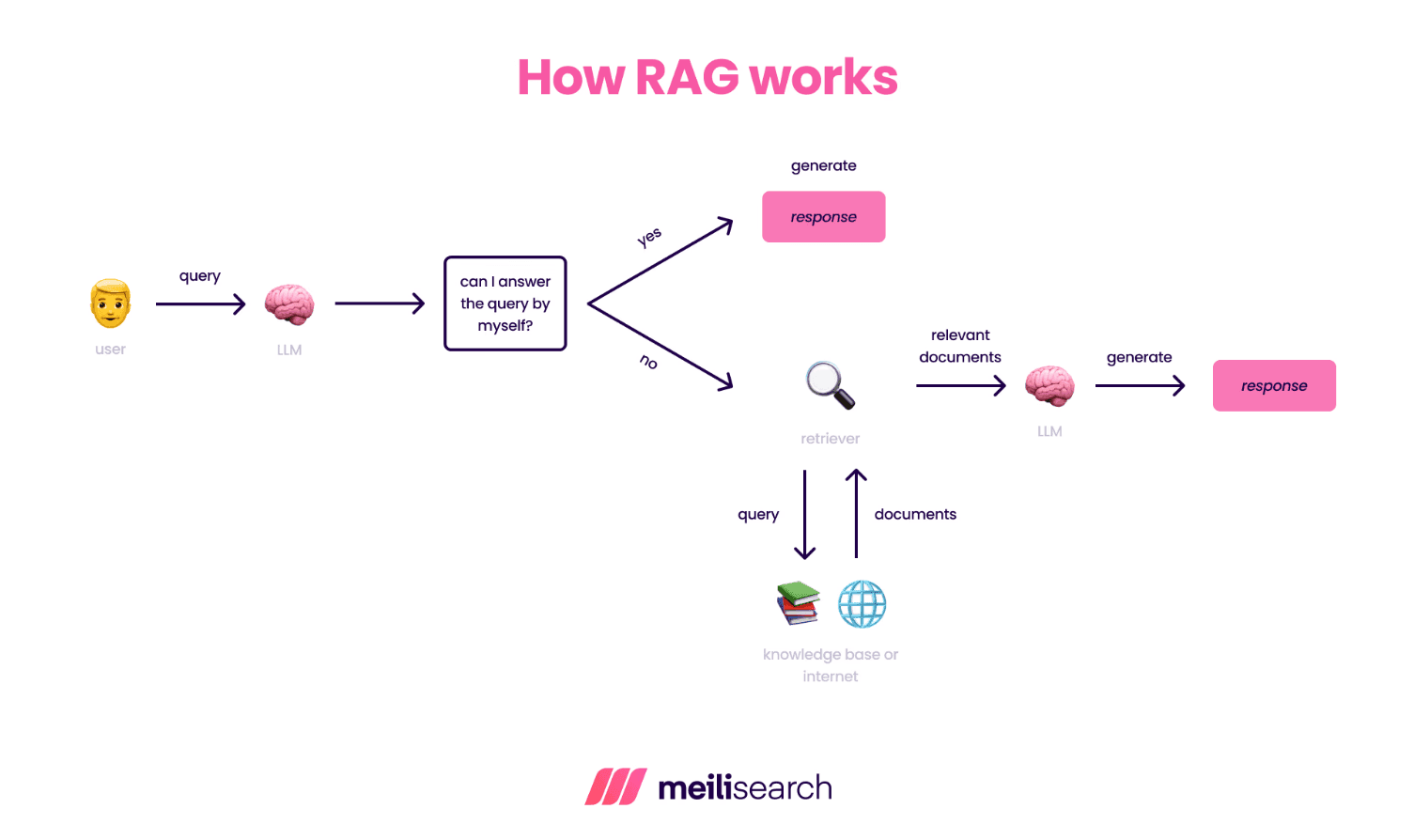

How does RAG work?

RAG works by combining retrieval and generation so that an LLM can answer using external facts.

In the retrieval phase, RAG searches external sources to find the most relevant information, and in the generation phase, the model uses that retrieved data to craft an accurate response.

Here is how the process goes, step-by-step:

- A user types in a search query.

- A retriever searches a document store or database for information that matches the query.

- The retrieved results are ranked, and the best matches are selected to form the context.

- The original question and the context are sent to the LLM.

- The model generates a response grounded in the retrieved information.

- Optionally, a verifier or re-ranker checks the model's output before it is returned.
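The steps above can be sketched as a toy pipeline. Everything here is an assumption made for illustration: the in-memory `DOCUMENTS` list stands in for a real document store, the word-overlap scoring stands in for a real retriever, and `fake_llm` stands in for an actual model call. The optional verification step is left out.

```python
import re
from typing import Callable

# Hypothetical document store; a real system would query a search engine or database.
DOCUMENTS = [
    "Refunds are processed within 5 business days of approval.",
    "Standard shipping takes 3 to 7 business days.",
    "Support is available by email on weekdays.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Score documents by word overlap with the query, rank them, keep the best."""
    query_terms = tokenize(query)
    scored = [(len(query_terms & tokenize(doc)), doc) for doc in documents]
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in ranked[:top_k] if score > 0]


def build_rag_prompt(query: str, context: list[str]) -> str:
    """Combine the selected context and the original question into one prompt."""
    context_block = "\n".join(f"- {doc}" for doc in context)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context_block}\n"
        f"Question: {query}"
    )


def answer(query: str, generate: Callable[[str], str]) -> str:
    """Retrieve, build the prompt, then hand it to the model."""
    context = retrieve(query, DOCUMENTS)
    return generate(build_rag_prompt(query, context))


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call; it only reports the prompt size."""
    return f"(model response based on a {len(prompt)}-character prompt)"


print(retrieve("How long do refunds take?", DOCUMENTS))
print(answer("How long do refunds take?", fake_llm))
```

Passing the model call in as `generate` keeps the retrieval logic independent of any particular LLM provider.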

What are the main differences between RAG and prompt engineering?

The main difference between RAG and prompt engineering is how they help AI models produce better answers.

RAG improves accuracy by allowing the model to retrieve current data from external sources before generating a response.

Prompt engineering focuses on phrasing and optimizing questions to get the best result from the model’s existing knowledge.

To sum it up, RAG gets its information externally, while prompt engineering uses information already within the AI model.

Now, let’s discuss the benefits and challenges of using RAG and prompt engineering.

What are the advantages of prompt engineering?

Prompt engineering can help users get the most out of AI tools without requiring additional data.

Here are some of the advantages:

- Better control over responses: You can guide the AI model to produce more relevant outputs by learning how to phrase questions effectively. For example, a marketer can ask ChatGPT to ‘act like an expert marketer in a Fortune 500 company and write an email to promote a new product,’ and receive a good draft email immediately.

- Works across different tasks: Prompt engineering can be applied across various sectors. It is helpful for specific tasks such as coding, summarizing reports, writing essays, and even brainstorming ideas.

- Saves time and effort: Making your input prompts clearer reduces the need for multiple iterations. Instead of constantly reworking the answer, a well-crafted prompt helps the AI deliver the desired result in a single go.

- Encourages creativity and personalization: Prompt engineering gives users the freedom to set their own tone or style. This makes it an excellent tool for writers, designers, or educators who desire unique results.

What are the advantages of RAG?

RAG has several benefits that make AI responses more helpful in real-life situations.

- Improves accuracy: RAG reduces the risk of errors by grounding answers in the external sources you connect, which you can vet in advance.

- Access to up-to-date information: RAG can retrieve current information from live databases or documents. This makes it well suited to fast-changing topics, such as the news.

- Better context understanding: RAG provides the AI model with additional background information before generating an answer, helping it better understand the question and respond more accurately.

- Flexibility across domains: Because it can connect to various data sources, RAG can adapt to knowledge-intensive, specialized fields like healthcare, agriculture, or finance while still delivering insights tailored to each domain.

- Reduced AI model training costs: Since RAG relies on retrieval rather than constant retraining, organizations can update the underlying data sources instead of retraining the model every time the information changes.

What are common challenges with prompt engineering?

Prompt engineering is a powerful skill, but it is not always straightforward. Generating the perfect response from AI often takes creativity and practice.

Here are some common challenges:

- Inconsistency in results: Even a slight change in wording can completely alter the AI's answer. Also, because LLM output is typically non-deterministic, what works once may not work the same way next time, making it hard to achieve consistent responses.

- A need for expertise: Crafting a strong prompt requires some level of domain knowledge and an understanding of how the AI model interprets instructions. Newbies may give generic prompts, leading to generic AI responses.

- Can be time-consuming: Refining your prompts through trial and error can take a long time, especially when dealing with complex tasks. If not properly managed, this can slow down your workflow or even reduce your productivity.

- Model limitations: AI systems only know what was in their training data. Therefore, even the best prompt cannot fix a model whose knowledge is missing or out of date. Such gaps can lead to hallucinations or outdated responses.

What are the common challenges with RAG?

Some challenges can make RAG implementation difficult, even though it greatly improves accuracy and relevance.

Here are some of them:

- System complexity: RAG can be complex to set up and maintain, especially for non-technical teams. It requires connecting a retriever and a database to the LLM.

- Integration overhead: Integrating a RAG system into an existing application or workflow often requires extra resources. You have to set up the RAG infrastructure while maintaining the external data sources at the same time.

- Latency and speed issues: Balancing accuracy with performance can be challenging with RAG. Since it searches and retrieves data before generating a response, it can be slower than a model that answers without retrieval, especially when handling large datasets.

- Retrieval errors: Sometimes a RAG system retrieves the wrong documents, leading to answers that sound confident but are based on inaccurate data. This makes proper data indexing and filtering critical when setting up the RAG system.
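One common way to limit retrieval errors is to discard weak matches before they reach the model. The sketch below assumes the retriever returns a relevance score between 0 and 1; the `Hit` class, the `min_score` threshold of 0.5, and the `NO_RELEVANT_CONTEXT` marker are all hypothetical choices for illustration, and a sensible threshold depends on your retriever and data.

```python
from dataclasses import dataclass


@dataclass
class Hit:
    text: str
    score: float  # relevance score from the retriever, assumed to lie in [0, 1]


def filter_hits(hits: list[Hit], min_score: float = 0.5) -> list[Hit]:
    """Drop weak matches and return the rest, best first."""
    kept = [hit for hit in hits if hit.score >= min_score]
    return sorted(kept, key=lambda hit: hit.score, reverse=True)


def context_or_fallback(hits: list[Hit], min_score: float = 0.5) -> str:
    """Build the context, or signal that nothing relevant was found."""
    kept = filter_hits(hits, min_score)
    if not kept:
        # Better to admit there is no context than to feed the model noise.
        return "NO_RELEVANT_CONTEXT"
    return "\n".join(hit.text for hit in kept)


hits = [
    Hit("Password resets are done from the account settings page.", 0.82),
    Hit("Our office is closed on public holidays.", 0.12),
]
print(context_or_fallback(hits))
print(context_or_fallback([Hit("Unrelated note.", 0.10)]))
```

When the fallback marker is returned, the application can ask the user to rephrase or tell them no answer was found, instead of letting the model answer from unrelated documents.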

What are examples of prompt engineering use cases?

Prompt engineering can be applied in many real-world situations to make AI tools more valuable and efficient:

- Question answering: Businesses use prompt engineering to make chatbots and help desks smarter. With the right prompt, an AI agent can provide clear and accurate responses to customer or employee questions.

- Text summarization: It is excellent for turning long text into short but meaningful takeaways. For instance, you can say, ‘Summarize this report in three simple points,’ and save hours of reading time.

- Data conversion: Prompt engineering can help you quickly clean or restructure information. For example, changing your raw notes into a professional report.

- Creative writing: Writers use prompts to get innovative ideas, explore storylines, or overcome creative block.

What are examples of RAG applications?

RAG tools are powering many of the apps we use today. Here are a few ways RAG is being used in the real world:

- Customer support systems: Many customer service chatbots now use RAG to fetch answers from company manuals and records. So when you ask your bank's chatbot about your account details, it gives you the exact information instead of a generic reply.

- Legal research: Law firms use RAG to review thousands of case files and contracts in seconds. A lawyer can ask about precedents for a case and instantly get relevant examples instead of manually searching the archives.

- Healthcare and medical support: Doctors and researchers use RAG to find the latest studies, treatment guidelines, and patient data. This enables them to make more informed medical decisions.

- E-commerce product comparison: RAG is used by e-commerce companies to compare products. It retrieves product specs, reviews, or prices from the database and generates personalized recommendations.

Can RAG and prompt engineering be combined?

Yes, RAG and prompt engineering work better together.

For example, when someone asks, ‘Summarize this customer’s issue in three bullet points,’ a RAG setup might pull a customer’s record from the database, and a good prompt allows the LLM to summarize only the issues returned.

In legal work, RAG can find relevant cases, which are then combined with a prompt that says, ‘Compare these rulings and highlight the key differences.’ This provides a concise, actionable comparison rather than a long list that might be difficult to interpret.

In healthcare, RAG retrieves patient history and recent medical studies, while prompts ensure the results follow clinical reporting standards.

RAG handles the ‘what’ (or the facts), and prompt engineering handles the ‘how’ (or the presentation). When used together, they make answers both relevant and accurate.
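A minimal sketch of this split, using the customer support example: the retrieved records supply the facts, and the prompt template controls the presentation. The function name `build_summary_prompt` and the sample records are hypothetical, and the retrieval step itself is assumed to have already happened.

```python
def build_summary_prompt(records: list[str], max_points: int = 3) -> str:
    """Combine retrieved records (the 'what') with instructions (the 'how')."""
    numbered = "\n".join(
        f"{i}. {record}" for i, record in enumerate(records, start=1)
    )
    return (
        f"Summarize this customer's issue in {max_points} bullet points.\n"
        "Use only the records listed below and do not add outside details.\n"
        f"Records:\n{numbered}"
    )


# Hypothetical records, as if returned by the retrieval step.
retrieved_records = [
    "Customer reports login failures after a password reset.",
    "Support agent cleared the cached session; the issue persists.",
]

print(build_summary_prompt(retrieved_records))
```

The instruction to use only the listed records is the prompt engineering part: it tells the model to stay within what retrieval returned.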

What are the costs of RAG vs. prompt engineering?

The costs of setting up RAG and prompt engineering are different.

RAG can be expensive to implement because it requires additional infrastructure (such as a database, a retrieval system, and integration tools). There is also a maintenance cost for updating data sources, handling storage, and ensuring the retrieval system stays accurate and fast.

Prompt engineering, on the other hand, is far cheaper because it mainly involves using your time and skill. You have to learn how to design effective prompts and also test them for consistent results. There is no need for new servers, APIs, or databases, making it suitable for quick, low-cost projects.

RAG requires more upfront investment but delivers higher accuracy, while prompt engineering is cost-effective and easily scalable for smaller applications.

Want to decide whether to use RAG or prompt engineering? Let’s see the factors you should consider when choosing.

How do you choose between RAG and prompt engineering?

Choosing between RAG and prompt engineering depends on what you need from your system and the kind of resources you have.

Here is a simple decision guide to use:

- Check if you need fresh data: RAG is the way to go if your answers must include the latest information. But if you are working with general knowledge that doesn't change often, prompt engineering should be sufficient.

- Weigh your accuracy and reliability needs: For fields like healthcare, law, or finance, where mistakes can be costly, RAG is safer because it grounds answers in real sources that can be checked. Prompts alone cannot always guarantee accuracy.

- Consider your time, budget, and skills: RAG takes more setup and maintenance, while prompt engineering requires creative thinking and practice. If you have limited resources, then go with prompt engineering.

- Balance performance and workflow: RAG systems can be slightly slower since they search external data before responding, but the answers are usually more accurate. Prompt engineering is quicker and is perfect for lightweight applications where speed matters more than depth.
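The guide above can be written down as a small helper, purely as a thinking aid. The function and parameter names are hypothetical, and two choices are assumptions rather than rules from this article: suggesting a combination when the factors pull in both directions, and defaulting to prompt engineering when none of them apply.

```python
def recommend_approach(
    needs_fresh_data: bool,
    high_stakes_accuracy: bool,
    limited_resources: bool,
    speed_over_depth: bool,
) -> str:
    """Map the four decision factors to a suggested starting point."""
    favors_rag = needs_fresh_data or high_stakes_accuracy
    favors_prompting = limited_resources or speed_over_depth

    if favors_rag and favors_prompting:
        # Assumption: mixed signals suggest using the two together.
        return "combine both"
    if favors_rag:
        return "RAG"
    # Assumption: with no strong signal, start with the cheaper option.
    return "prompt engineering"


print(recommend_approach(True, False, False, False))
print(recommend_approach(False, False, True, True))
print(recommend_approach(True, True, True, False))
```

Real decisions involve more nuance than four booleans, so treat the output as a prompt for discussion, not a verdict.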

How does RAG compare to fine-tuning?

The main difference between RAG and fine-tuning is that RAG pulls in fresh information from external sources when needed, while fine-tuning actually trains the model to learn that knowledge internally.

With fine-tuning, you take a pre-trained model and feed it domain-specific data (such as legal documents or customer records) so that it learns the patterns and language of that field. This makes its responses faster and more consistent.

The limitations are that it is expensive to train, requires lots of clean data, and must be redone whenever new information comes in.

RAG, on the other hand, does not retrain the model. Instead, it connects to a database or document store and retrieves the most relevant information whenever a question is asked. This ensures the answers are up to date and adaptable to new topics.

How does prompt engineering compare to fine-tuning?

The main difference between prompt engineering and fine-tuning is that prompt engineering focuses on how you ask the model your question to get the best response, while fine-tuning changes what the model actually knows.

With prompt engineering, you are not retraining anything. You are instead crafting the proper instructions to guide the model’s behavior. It is fast, affordable, and flexible, making it perfect for tailoring outputs to specific needs.

The catch is that results can vary greatly depending on how well the prompts were written.

Fine-tuning, on the other hand, takes things deeper. You train the model on new data so it permanently learns that knowledge. This gives you consistency and expertise but comes with higher costs, more setup time, and the need to continuously retrain to stay up to date.

RAG vs. prompt engineering: and the winner is...

The reality is that both techniques shine in their own way.

RAG is your go-to tool when accuracy and up-to-date information matter most, since it can retrieve from live data sources. Prompt engineering is the fastest, most flexible way to generate outputs without retraining any model.

If you are building something that needs freshness, go with RAG. For quick, creative control, prompt engineering takes the lead.

Ready to build your RAG pipeline?

If you are building a RAG pipeline, Meilisearch makes retrieval fast and effortless. With features like built-in vector search and lightning-fast indexing, Meilisearch keeps your RAG system efficient and reliable while giving your AI the right information exactly when it needs it.