GraphRAG vs. Vector RAG: Side-by-side comparison guide

A practical guide comparing GraphRAG and Vector RAG – how they work, key differences, pros/cons, top tools, and when to combine them for better answers.


Retrieval-augmented generation (RAG) can address several key pain points of current AI models, including hallucinations, missing or outdated context, and weak precision on complex queries.

But with so many RAG types to choose from, it’s easy to become overwhelmed.

The right choice depends on what your system needs to handle: complex relationships, strict accuracy requirements, large-scale data, or some mix of the three.

In this guide, we will compare two key RAG types: GraphRAG and Vector RAG. You will learn:

- The definition of RAG, vector RAG, and GraphRAG.

- How each RAG type works and the key differences between them, such as data structure, context retention, reasoning ability, scalability, and more.

- Real-world scenarios and use cases where GraphRAG and vector RAG excel.

- A look at Meilisearch and other tools best suited to build both architectures.

- How the database choice affects the final result, and when combining both approaches delivers the best of both worlds.

By the end, you’ll know exactly which RAG architecture will work best for your data and goals, and whether a hybrid of the two is the optimal move for you.

What is retrieval-augmented generation?

Retrieval-augmented generation is a method that enhances large language models (LLMs) by retrieving external knowledge or context before generating a response. Instead of relying only on what the model learned during pre-training and stored in its parameters, RAG supplies relevant information at query time.

RAG retrieves context from an external database or document store, adds it to the user-provided prompt, and then generates output grounded in that retrieved context.

It is primarily used to reduce hallucinations in LLMs.
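The retrieve-then-augment flow can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the word-overlap `retrieve` function and the prompt wording in `augment` are hypothetical stand-ins, and the final LLM call is omitted because it depends on your model provider.

```python
import re
from typing import List, Set

def tokenize(text: str) -> Set[str]:
    # Lowercase word tokens; punctuation is dropped.
    return set(re.findall(r"\w+", text.lower()))

def retrieve(query: str, documents: List[str], k: int = 1) -> List[str]:
    # Hypothetical toy retriever: rank documents by how many words they share with the query.
    query_terms = tokenize(query)
    ranked = sorted(documents, key=lambda doc: len(query_terms & tokenize(doc)), reverse=True)
    return ranked[:k]

def augment(query: str, passages: List[str]) -> str:
    # Put the retrieved passages in front of the question so the LLM answers from them.
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only the context below.\nContext:\n{context}\n\nQuestion: {query}"

documents = [
    "Meilisearch supports hybrid search.",
    "Paris is the capital of France.",
    "Neo4j is a graph database.",
]
question = "What is the capital of France?"
prompt = augment(question, retrieve(question, documents))
print(prompt)  # this augmented prompt is what would be sent to the LLM
```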

What is vector RAG?

Vector RAG, as the name suggests, relies on numerical representations (vectors) of unstructured data. It retrieves relevant information by comparing those vectors with similarity measures such as cosine similarity or Euclidean distance, then generates results from what it finds.
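For intuition, here is how the two similarity measures mentioned above are computed. The two-dimensional vectors are made-up examples; real embeddings typically have hundreds or thousands of dimensions.

```python
import math
from typing import Sequence

def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    # Angle-based: 1.0 = same direction, 0.0 = orthogonal; vector length is ignored.
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def euclidean_distance(a: Sequence[float], b: Sequence[float]) -> float:
    # Straight-line distance: 0.0 = identical; smaller means more similar.
    return math.dist(a, b)

query = [1.0, 0.0]
doc_a = [2.0, 0.0]  # same direction as the query, twice the length
doc_b = [0.0, 1.0]  # orthogonal to the query

print(cosine_similarity(query, doc_a))   # 1.0
print(cosine_similarity(query, doc_b))   # 0.0
print(euclidean_distance(query, doc_a))  # 1.0
print(euclidean_distance(query, doc_b))  # ~1.414
```

Cosine similarity ignores vector length, which is why `doc_a` scores a perfect 1.0 despite being twice as long. When embeddings are normalized to unit length, both measures produce the same ranking, because squared Euclidean distance then equals 2 minus twice the cosine similarity.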

How does it work?

User queries are converted into vectors and matched against stored vector embeddings to find the most contextually relevant pieces of information.

Vector RAG is particularly useful for semantic search across large knowledge bases, where keyword search alone falls short because relevant passages may not share the query’s exact words.

What is GraphRAG?

GraphRAG relies on knowledge graphs to structure information into nodes and relationships. As a result, it allows retrieval based on how the entities actually connect, instead of just the text.

One thing to remember is that GraphRAG doesn’t just embed chunks. It maps data into a graph database where queries can follow links between related entities to find precise and contextually rich answers.

This comes in handy for questions that go beyond surface-level lookups, such as those that require connecting facts spread across several documents.

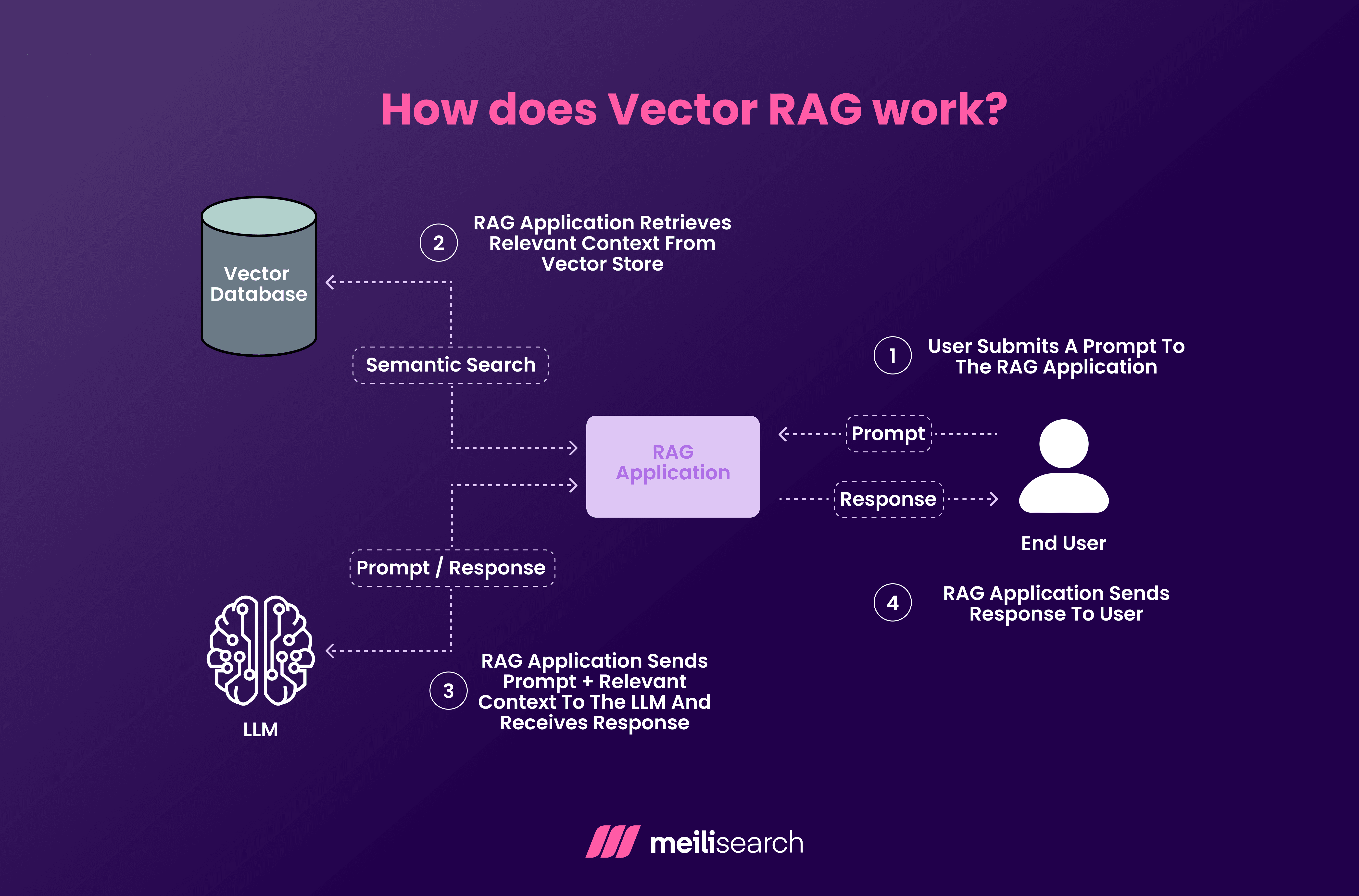

How does Vector RAG work?

Vector RAG connects data retrieval and language generation through a structured pipeline. Instead of relying on keyword matches alone, it uses vector embeddings to find semantically relevant content that matches a user’s query.

Here’s how the process typically unfolds:

- Data embedding: Vector RAG converts documents into dense vector embeddings using the same embedding model that will process queries.

- Vector storing: Next, it saves these embeddings in a vector database that supports similarity search using cosine similarity or Euclidean distance.

- Query embedding: When a user asks a question, the system generates a query vector using the same embedding model.

- Nearest vector retrieval: The database identifies the closest matches to the query vector, thereby locating relevant chunks of unstructured data.

- Response generation: The retrieved content is passed to a language model, which uses it as external context to produce accurate and relevant answers.
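The five steps above can be sketched end to end in Python. This is a toy, not a production design: `embed` is a hypothetical word-count stand-in for a real embedding model, and `InMemoryVectorStore` does a brute-force scan where a vector database would use an approximate nearest-neighbor index.

```python
import math
import re
from collections import Counter
from typing import List, Tuple

def embed(text: str) -> Counter:
    # Hypothetical stand-in for a real embedding model: a word-count vector.
    # What matters is that chunks and queries go through the SAME function.
    return Counter(re.findall(r"\w+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

class InMemoryVectorStore:
    """Brute-force store for illustration; real vector databases use ANN indexes."""

    def __init__(self) -> None:
        self.items: List[Tuple[str, Counter]] = []

    def add(self, chunk: str) -> None:
        # Data embedding + vector storing: keep each chunk next to its vector.
        self.items.append((chunk, embed(chunk)))

    def search(self, query: str, k: int = 1) -> List[Tuple[str, float]]:
        # Query embedding + nearest vector retrieval: same model, top-k by similarity.
        q = embed(query)
        scored = [(chunk, cosine(q, vector)) for chunk, vector in self.items]
        return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]

store = InMemoryVectorStore()
for chunk in [
    "Refunds are processed within five business days.",
    "You can reset your password from the account settings page.",
    "Our office is closed on public holidays.",
]:
    store.add(chunk)

question = "How do I reset my password?"
hits = store.search(question, k=1)
# Response generation: pass the retrieved chunks to the LLM as context (model call omitted).
prompt = "Context:\n" + "\n".join(chunk for chunk, _ in hits) + f"\n\nQuestion: {question}"
print(prompt)
```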

This flow gives RAG systems semantic recall that keyword-only methods can’t match. This is particularly powerful for extensive collections of unstructured text.

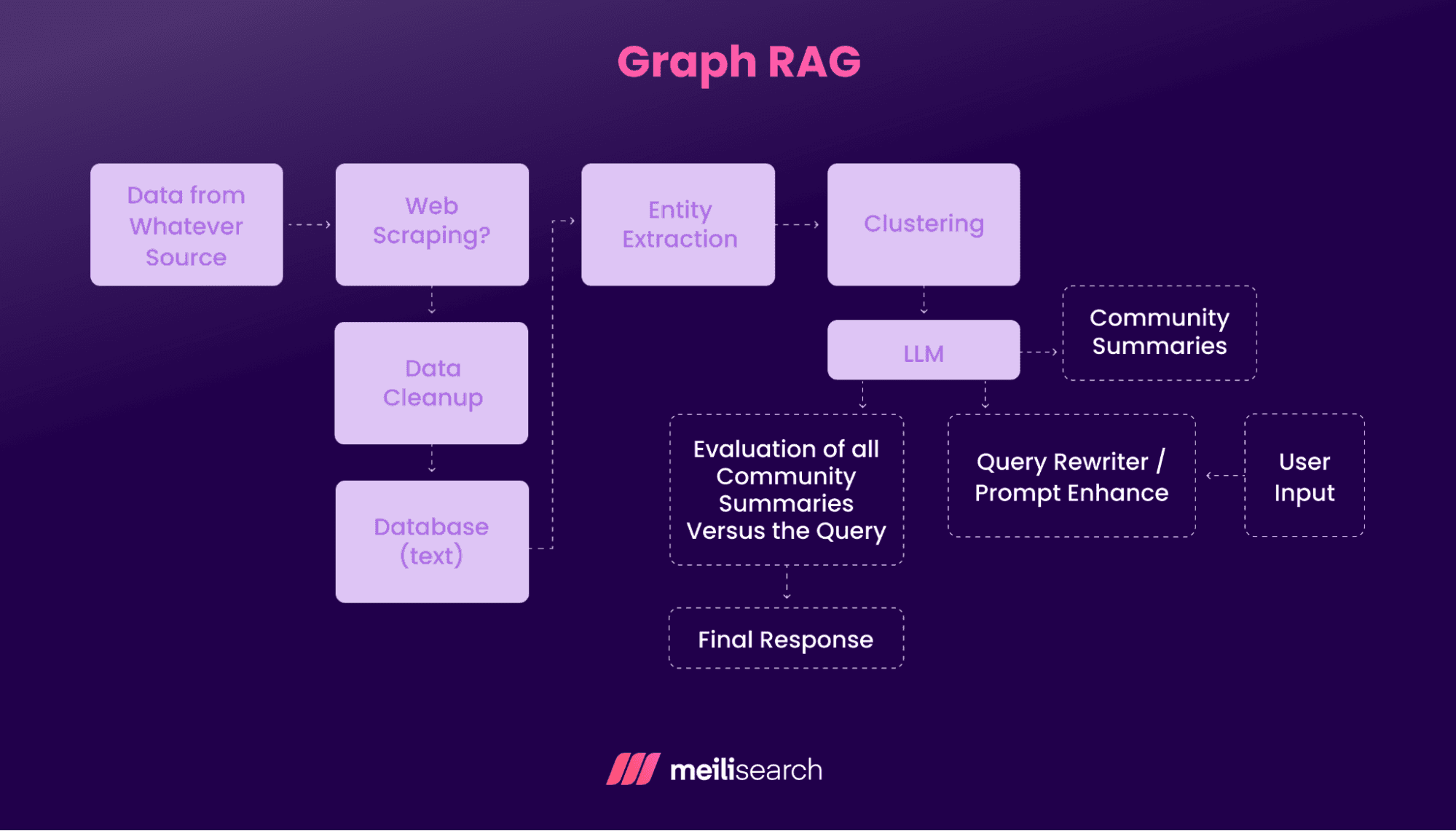

How does GraphRAG work?

GraphRAG constructs a knowledge graph where nodes represent entities and edges capture their relationships.

When a user submits a query, GraphRAG links the question to graph entities, explores relevant neighborhoods, extracts a supporting subgraph, and feeds structured facts to the LLM.

Here’s how it goes:

- Domain modeling: The first step is to define a schema that describes how sources are ingested as nodes and edges of a knowledge graph, including their types, IDs, and timestamps.

- Entity linking: The system applies named entity recognition and entity resolution to identify and connect related entities, attaching attributes and citations to each node.

- Graph indexing and storage: The resulting graph is loaded into a graph database. Optionally, node and relationship embeddings can be computed to enable hybrid retrieval.

- Evidence retrieval: When a query is received, the system embeds the query, links it to relevant entities, and traverses k-hop paths under predefined constraints to collect a subgraph of supporting evidence.

- Ranking and compression: Retrieved paths are scored, deduplicated, and compressed into concise triples or statements, preserving provenance for traceability.

- Answer generation: The selected facts and citations are passed to a language model, which uses them as structured context to generate a grounded and accurate response.
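Here is a minimal Python sketch of the entity linking, k-hop traversal, and compression steps on a tiny hypothetical supply-chain graph. The triples, the `doc-*` source IDs, and the name-matching `link_entities` helper are illustrative assumptions (a real system would use NER, entity resolution, and a graph database), and ranking is omitted for brevity.

```python
from collections import deque
from typing import Dict, Iterable, List, Tuple

Triple = Tuple[str, str, str, str]  # (subject, relation, object, source_id)

# Hypothetical knowledge graph; the source ids stand in for citations.
TRIPLES: List[Triple] = [
    ("SupplierA", "SUPPLIES", "Part-42", "doc-1"),
    ("Part-42", "USED_IN", "Model-Z", "doc-2"),
    ("Model-Z", "SOLD_IN", "EU", "doc-3"),
    ("SupplierB", "SUPPLIES", "Part-7", "doc-4"),
]

def build_adjacency(triples: Iterable[Triple]) -> Dict[str, List[Triple]]:
    # Index every triple under both endpoints so traversal can follow edges either way.
    adj: Dict[str, List[Triple]] = {}
    for triple in triples:
        subject, _, obj, _ = triple
        adj.setdefault(subject, []).append(triple)
        adj.setdefault(obj, []).append(triple)
    return adj

def link_entities(query: str, entities: Iterable[str]) -> List[str]:
    # Hypothetical toy linker: case-insensitive name match instead of NER + entity resolution.
    q = query.lower()
    return [e for e in entities if e.lower() in q]

def k_hop_subgraph(adj: Dict[str, List[Triple]], seeds: List[str], k: int) -> List[Triple]:
    # Breadth-first expansion up to k hops from the seed entities.
    seen_nodes = set(seeds)
    seen_edges = set()
    collected: List[Triple] = []
    frontier = deque((seed, 0) for seed in seeds)
    while frontier:
        node, depth = frontier.popleft()
        if depth == k:
            continue  # hop limit reached: do not expand further
        for triple in adj.get(node, []):
            if triple not in seen_edges:
                seen_edges.add(triple)
                collected.append(triple)
            subject, _, obj, _ = triple
            neighbor = obj if subject == node else subject
            if neighbor not in seen_nodes:
                seen_nodes.add(neighbor)
                frontier.append((neighbor, depth + 1))
    return collected

def to_context(triples: List[Triple]) -> str:
    # Compress the subgraph into one statement per line, keeping provenance.
    return "\n".join(f"{s} {r} {o} [{src}]" for s, r, o, src in triples)

adj = build_adjacency(TRIPLES)
question = "Which markets are affected if SupplierA stops shipping?"
seeds = link_entities(question, adj)
context = to_context(k_hop_subgraph(adj, seeds, k=3))
print(context)
```

The question never mentions the EU, yet three hops from SupplierA lead to it, and each statement carries its source ID so the answer can cite it. SupplierB's triple is never reached, which keeps unrelated facts out of the prompt.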

This workflow supports deeper reasoning and can be combined with vector search for broader recall.

Whether you choose a graph-based or vector-based approach, the core implementation principles remain largely the same. Our guide on how to build RAG walks you through setting up a foundational retrieval and generation pipeline.

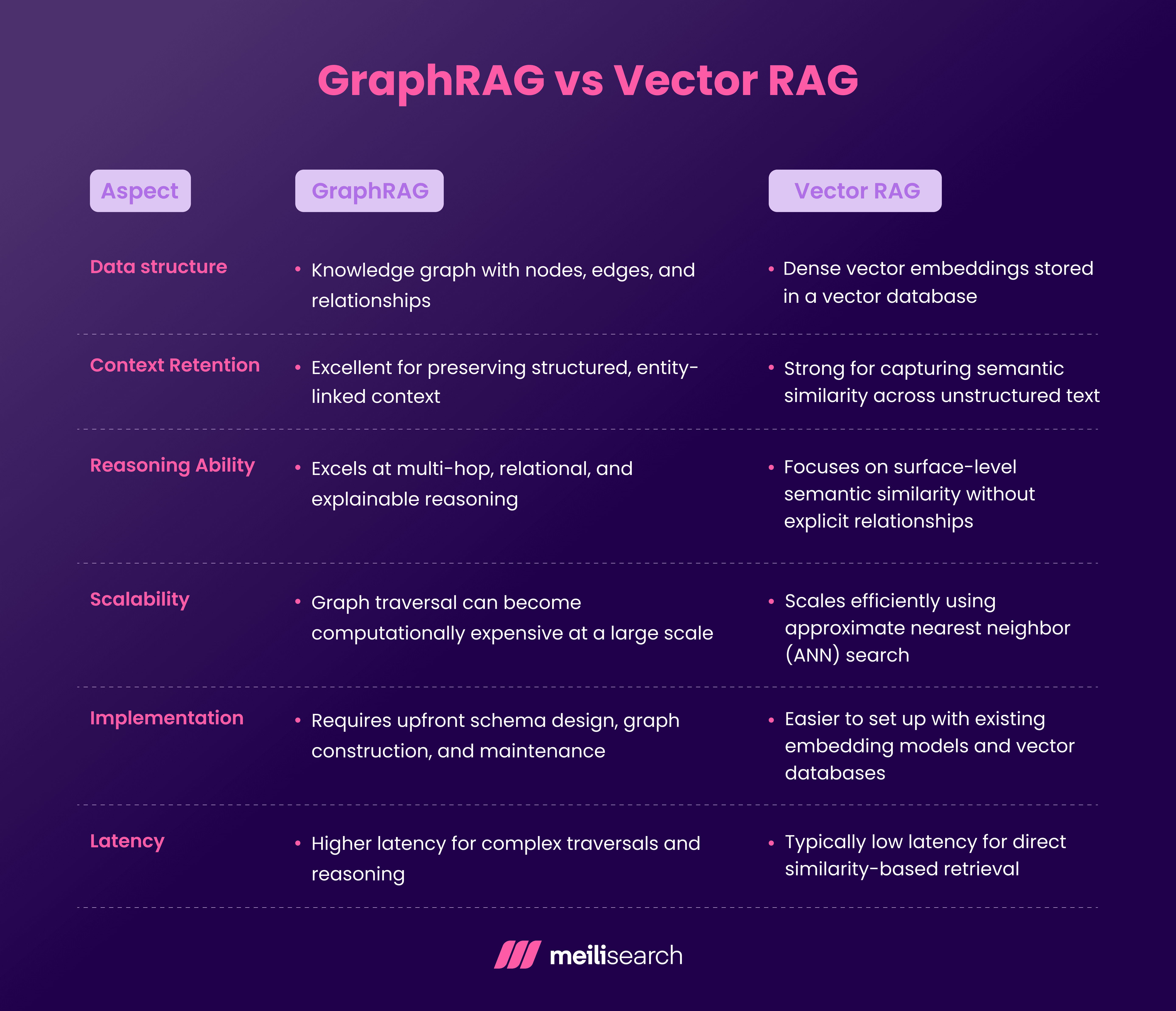

What are the key differences between GraphRAG and Vector RAG?

The main difference between GraphRAG and Vector RAG lies in how they represent and retrieve knowledge.

GraphRAG uses nodes and edges to explicitly model entities and their relationships, while Vector RAG relies on dense vector embeddings to capture semantic similarity between pieces of text.

This fundamental difference affects how each approach performs on various metrics, including context retention, reasoning, and scalability.

GraphRAG is handier when you’re dealing with queries that involve complex relationships. By linking the query to entities in a knowledge graph, it can reach relevant nodes through their connections, even when those nodes share little wording with the question.

Some industries find this particularly helpful, such as biomedical research, legal departments, and supply chain networks, where reasoning over links is essential.

Vector RAG, on the other hand, is a lot faster to set up and scales well for unstructured or semi-structured text. By embedding text into high-dimensional vectors and using similarity search, it retrieves context based on meaning rather than explicit structure.

It’s most useful in broad knowledge bases, user-generated content, or scenarios where structure is limited or expensive to maintain.

Below is a side-by-side breakdown of their key differences:

To compare both approaches properly, you need a clear way to measure accuracy, reasoning, and speed. For a comprehensive breakdown of how to test and benchmark retrieval systems, refer to our RAG evaluation guide.
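As a starting point, two common retrieval metrics, recall@k and mean reciprocal rank (MRR), can be computed for either architecture with a few lines of Python. The evaluation data below is hypothetical, and these metrics only score retrieval; answer quality needs separate checks.

```python
from typing import List, Sequence, Set, Tuple

def recall_at_k(retrieved: Sequence[str], relevant: Set[str], k: int) -> float:
    # Share of the relevant documents that appear in the top-k results.
    if not relevant:
        return 0.0
    return sum(1 for doc_id in retrieved[:k] if doc_id in relevant) / len(relevant)

def reciprocal_rank(retrieved: Sequence[str], relevant: Set[str]) -> float:
    # 1 / rank of the first relevant result (0.0 if none is retrieved).
    for rank, doc_id in enumerate(retrieved, start=1):
        if doc_id in relevant:
            return 1.0 / rank
    return 0.0

# Hypothetical evaluation set: (ranked ids returned by a retriever, ground-truth relevant ids).
runs: List[Tuple[List[str], Set[str]]] = [
    (["d3", "d1", "d7"], {"d1"}),
    (["d2", "d5", "d9"], {"d9", "d4"}),
]
mean_recall = sum(recall_at_k(r, rel, k=3) for r, rel in runs) / len(runs)
mrr = sum(reciprocal_rank(r, rel) for r, rel in runs) / len(runs)
print(f"recall@3={mean_recall:.2f}  MRR={mrr:.2f}")  # recall@3=0.75  MRR=0.42
```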

What are the advantages of GraphRAG?

GraphRAG offers unique strengths that go beyond simple similarity search. Explicit relationships between entities make outputs easier to verify and trust, especially in complex scenarios.

- Reason across chains, not chunks: Graph structures allow models to traverse multiple connected nodes, which is why they can answer multi-hop questions that vector-only systems often miss.

- Show your work with explainable paths: Transparency is key. Since knowledge graphs store information as nodes and edges, the reasoning trail can be visualized and audited. This transparency makes it easier to debug and build trust in the system’s answers.

- Keep context alive longer: Graphs preserve relationships over time, maintaining richer context compared to isolated vector embeddings. This leads to better handling of long or interconnected information without losing important details.

- Blend structured and unstructured data like a pro: GraphRAG works well with both relational data and unstructured text. It can connect business entities, taxonomies, or user data with vector-based search results to provide more comprehensive answers.
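To illustrate the explainable-paths point, a breadth-first search can return the exact chain of edges connecting two entities. The legal-style entities below are hypothetical, and a graph database would normally handle this with its own path-finding features rather than application code.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

Edge = Tuple[str, str, str]  # (subject, relation, object)

# Hypothetical legal knowledge graph.
EDGES: List[Edge] = [
    ("Case A", "CITES", "Statute 12"),
    ("Statute 12", "AMENDED_BY", "Act 2019"),
    ("Case B", "OVERRULES", "Case A"),
]

def explain_path(edges: List[Edge], start: str, goal: str) -> Optional[List[Edge]]:
    # Index each edge under both endpoints so the search can walk it in either direction.
    adj: Dict[str, List[Tuple[str, Edge]]] = {}
    for edge in edges:
        subject, _, obj = edge
        adj.setdefault(subject, []).append((obj, edge))
        adj.setdefault(obj, []).append((subject, edge))
    # Breadth-first search, remembering which node and edge each node was reached from.
    parent: Dict[str, Tuple[str, Edge]] = {}
    visited = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            # Walk parent pointers back to the start to recover the evidence chain.
            path: List[Edge] = []
            while node != start:
                prev, via = parent[node]
                path.append(via)
                node = prev
            return path[::-1]
        for neighbor, edge in adj.get(node, []):
            if neighbor not in visited:
                visited.add(neighbor)
                parent[neighbor] = (node, edge)
                queue.append(neighbor)
    return None  # no connection between the two entities

path = explain_path(EDGES, "Case B", "Act 2019")
for subject, relation, obj in path or []:
    print(f"{subject} -[{relation}]-> {obj}")
```

The printed chain (Case B overrules Case A, which cites Statute 12, which was amended by Act 2019) is exactly the kind of audit trail a reviewer can check edge by edge.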

These advantages make GraphRAG a strong choice for applications that rely on complex relationships, explainability, or long-term contextual understanding.

What are the advantages of Vector RAG?

If you want speed along with straightforward implementation, Vector RAG is the way to go. It excels at fast retrieval in large, unstructured datasets:

- Blazing-fast lookups at scale: Since vector search engines are optimized for rapid similarity matching, you’ll find them ideal for low-latency use cases like chatbots, search bars, or even real-time assistants.

- Scale without breaking a sweat: Vector RAG scales well because vector databases are built to handle millions of embeddings. You can grow your dataset while keeping response times consistent, which is crucial for production workloads that serve large user bases.

- Plug-and-play setup: Vector RAG pipelines are relatively simple to implement. You embed your documents, store them, and query by similarity. This ease of setup lowers the barrier for teams to get started and iterate quickly.

- Flexible with messy data: Unlike graph structures that need well-defined relationships, vector approaches work well with unstructured or loosely structured text. This makes them a strong fit for knowledge bases and FAQs.

These strengths make Vector RAG a go-to choice when simplicity and scalability are more important than complex reasoning.

What are the limitations of GraphRAG?

We know GraphRAG is powerful, but it comes with certain trade-offs. These include:

- Up-front costs: Designing a knowledge graph requires careful schema planning and relationship modeling. This makes the initial implementation slower compared to vector pipelines, especially if your data isn’t already structured.

- Ongoing maintenance load: High-powered reasoning depends on an accurate graph, so graphs need continuous curation. As your data changes, relationships must be updated, new entities added, and outdated links removed.

- Scaling isn’t always smooth: Large graphs with dense relationships can become expensive to query at scale. Traversing many nodes and edges quickly hits performance bottlenecks, especially under real-time latency requirements.

- Not everyone can do it: Working effectively with graph databases often requires understanding graph theory, query languages like Cypher, and entity-relationship modeling, which not every team is equipped to handle.

While these challenges don’t rule out GraphRAG, it’s better suited for teams ready to invest in more complex infrastructure and specialized expertise.

What are the limitations of Vector RAG?

Right off the bat, Vector RAG can lack the retrieval accuracy and reasoning depth that complex, relationship-heavy use cases need. Other limitations include:

- Context slips through the cracks: Vector search focuses on similarity between embeddings, which works well for semantic matching but can miss relationships or context that aren’t reflected in surface-level similarity.

- Weak at multi-step reasoning: Vector RAG doesn’t natively understand entity relationships or graph structures. To answer multi-hop questions or follow reasoning chains, you need to orchestrate additional layers of retrieval.

- Harder to explain decisions: Because retrieval is based on mathematical similarity rather than explicit relationships, it’s more difficult to trace why a particular document was selected or how the final answer was formed.

- Embedding drift over time: Embeddings can become stale over time as models or data keep changing. This requires periodic re-embedding and updates, adding operational overhead.
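One way to keep embedding drift manageable is to record, for every stored vector, which model produced it and a hash of the text it was computed from. The sketch below flags chunks that need re-embedding; `CURRENT_MODEL`, the model names, and the `StoredChunk` record are hypothetical.

```python
import hashlib
from dataclasses import dataclass
from typing import Dict, List

CURRENT_MODEL = "embedder-v2"  # hypothetical identifier of the embedding model in use

@dataclass
class StoredChunk:
    chunk_id: str
    content_hash: str   # hash of the text that was embedded
    model_version: str  # embedding model that produced the stored vector

def hash_text(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()

def needs_reembedding(chunk: StoredChunk, latest_text: str, current_model: str = CURRENT_MODEL) -> bool:
    # Stale if the vector came from another model or the source text has changed since.
    return chunk.model_version != current_model or chunk.content_hash != hash_text(latest_text)

stored = [
    StoredChunk("a", hash_text("Refund policy v1"), "embedder-v2"),  # text edited since
    StoredChunk("b", hash_text("Shipping times"), "embedder-v1"),    # older model
    StoredChunk("c", hash_text("Store hours"), "embedder-v2"),       # up to date
]
latest: Dict[str, str] = {"a": "Refund policy v2", "b": "Shipping times", "c": "Store hours"}
to_refresh: List[str] = [c.chunk_id for c in stored if needs_reembedding(c, latest[c.chunk_id])]
print(to_refresh)  # ['a', 'b']
```

Only the flagged chunks are re-embedded, so a data edit or a model upgrade does not force rebuilding the whole index at once.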

While these limitations don’t make Vector RAG automatically unsuitable, they highlight why some teams explore hybrid or graph-augmented approaches for more complex reasoning tasks.

What are common use cases for GraphRAG?

GraphRAG is the go-to in domains where understanding complex relationships and multi-hop reasoning is essential.

- Medical diagnosis: GraphRAG can link symptoms, conditions, treatments, and patient histories to support diagnostic reasoning. It helps uncover relationships, such as drug interactions or rare comorbidity patterns, that pure vector search might miss.

- Legal research: Legal datasets often contain layered relationships between cases, statutes, and precedents. GraphRAG enables structured exploration across these references, allowing legal teams to uncover relevant but non-obvious connections.

- Recommendation systems: Recommendation engines benefit from GraphRAG by mapping user behavior to entities and relationships, like users, products, or categories.

- Scientific research: In fields such as genomics, materials science, and scholarly publishing, where knowledge is inherently relational, GraphRAG is extremely useful.

What are common use cases for Vector RAG?

Vector RAG shines where speed and scalability are the main goals. Here are the use cases where Vector RAG is already a routine part of the process:

- Customer support: Vector RAG anchors intelligent support systems that quickly surface relevant documentation, FAQs, or ticket histories based on the user's query intent.

- Content summarization: For organizations handling large volumes of unstructured data, Vector RAG can retrieve semantically similar passages, cluster them, and feed them into summarization models. This is especially useful for news aggregation, research archives, or internal reports.

- Search engines: Modern search experiences rely on semantic retrieval to understand natural language queries. Vector RAG enhances recall and precision, returning results that align with user intent rather than just keywords.

- Chatbots and virtual assistants: Conversational agents get a considerable boost from Vector RAG as well. It grounds their responses in a searchable knowledge base, making them more accurate and context-aware.

Next, let’s look at the tools that make GraphRAG and Vector RAG work in practice.

What are the top tools for building GraphRAG?

With GraphRAG, you need fast retrieval, structured reasoning, and orchestration. These tools cover different layers of that stack:

- Meilisearch: Meilisearch can serve as the first retrieval layer before graph traversal. It surfaces relevant documents quickly using keyword or hybrid search, so the graph only operates on a focused set of entities. Narrowing the candidate set this way speeds up traversal and makes retrieval more precise.

- Neo4j: Neo4j handles modeling and querying relationships. It excels at storing complex graph structures and answering Cypher queries, whose results language models can use to trace relationships across data.

- LangChain: LangChain is useful for orchestrating the different moving parts in a GraphRAG pipeline. It helps structure workflows where Meilisearch handles initial recall, Neo4j provides structured context, and an LLM completes generation.

- Weaviate: Weaviate is designed for teams that want to integrate semantic search and graph reasoning in a single platform. It can store embeddings and graph structures together, making room for hybrid queries.

- TigerGraph: A strong choice for large-scale, distributed graph workloads. It’s built for speed on massive graphs, making it suitable for enterprise knowledge graphs that need to support complex queries at low latency.

Together, these tools provide the building blocks for retrieval pipelines that are both context-aware and performant, rather than relying solely on vector search.

What are the top tools for building Vector RAG?

What are the tools that enable Vector RAG to be so fast and scalable? Let’s look at the stack below:

- Meilisearch: Provides a hybrid retrieval layer that pairs fast keyword search with vector search and faceted filtering. It’s ideal for teams that want semantic retrieval without having to manage complex infrastructure.

- Qdrant: You may think of Qdrant as the speedy workhorse. It’s open source, built to handle millions of vectors without breaking a sweat. If you need real-time search with filters, Qdrant’s got your back.

- FAISS: FAISS is the ‘build it yourself’ kit from Meta: a library for efficient similarity search rather than a full database. It’s very fast and is often used for local or offline indexing when developers need maximum control and minimal dependencies.

- Weaviate: Weaviate combines vector search with intelligent filtering, allowing you to set up schemas and use various embedding models. Perfect for teams that want everything in one neat place.

- Pinecone: Pinecone is the cloud wizard that takes care of the heavy lifting so your searches stay quick, even when your data grows huge. A top pick when you want smooth performance at scale.

Put them together, and you’ve got options for every kind of vector search dream. From small tests to big production setups, there’s a tool for it.

How does database choice affect RAG performance?

The database you choose determines your RAG system's speed, growth potential, and effectiveness across different types of information. Let's see what that actually means.

Vector databases are the speed champions here. They use approximate nearest-neighbor search to find relevant content in milliseconds, even when searching across millions of documents.

Graph databases take longer because they need to traverse relationships and follow connection paths, like tracing a web of linked ideas rather than doing a quick lookup.

Vector RAG scales more smoothly. Vector databases distribute embeddings across multiple servers without much fuss.

GraphRAG is trickier. As graphs grow with more nodes and connections, queries slow down, and spreading a graph across servers while keeping all those relationships intact requires serious architectural planning.
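A back-of-the-envelope calculation shows why deep traversals get expensive: if every node has about d new neighbors, a k-hop expansion from a single entity can touch up to d + d^2 + ... + d^k nodes. The average degree of 10 below is an illustrative assumption; real graphs have overlapping neighborhoods and hub nodes, so actual counts differ.

```python
def max_nodes_within_k_hops(avg_degree: int, k: int) -> int:
    # Upper bound: assumes every node adds avg_degree new neighbors with no overlap.
    return sum(avg_degree ** hop for hop in range(1, k + 1))

for k in range(1, 5):
    print(k, max_nodes_within_k_hops(avg_degree=10, k=k))
# 1 10
# 2 110
# 3 1110
# 4 11110
```

Each extra hop multiplies the worst-case work by roughly the average degree, which is why GraphRAG pipelines cap hop counts and constrain which relationship types a traversal may follow.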

In practice, the best RAG systems often combine both: vector databases handle initial semantic retrieval for speed and scale, while graph databases provide relationship context for more complex queries.

Which is better: GraphRAG or Vector RAG?

Neither GraphRAG nor Vector RAG is inherently ‘better.’ The right choice depends on your data structure, query complexity, and application goals.

GraphRAG excels when relationships between entities matter. It’s ideal for scenarios that require multi-hop reasoning, explainable outputs, and maintaining long-term context.

This makes it a strong fit for domains such as legal research, healthcare analysis, and scientific discovery, where accuracy and traceability are crucial. The trade-off is higher implementation complexity and potentially slower query times at scale.

Vector RAG, on the other hand, shines in speed, scalability, and ease of setup. It’s ideal for unstructured data, fast semantic search, and user-facing applications such as chatbots, search engines, and support tools. It struggles, however, with complex relational reasoning since embeddings alone don’t encode explicit entity relationships.

In many production systems, teams don’t pick one over the other; they prefer a hybrid model. They combine both approaches, using vector search for broad recall and graph traversal for structured reasoning.

This hybrid setup strikes a balance between latency, depth, and accuracy, making it the most flexible option for handling complex real-world data.

Can you combine GraphRAG and Vector RAG?

Yes, you can combine both approaches to build hybrid RAG systems. These systems use vector search for fast, broad recall and graph traversal for structured reasoning, letting you get the best of both worlds.

A common strategy is to start with vector retrieval to quickly pull relevant chunks, then pass those results to a graph layer to enrich the context with relationships.

Another option is to use graph queries first to define a focused set of entities, then run a vector search within that subset to improve relevance.
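Both hybrid strategies can be sketched on a toy dataset. Everything here is hypothetical: the chunks, the entity graph, and the word-count `embed` stand-in for a real embedding model. `vector_then_graph` expands vector hits with their graph neighbors; `graph_then_vector` uses the graph to restrict which chunks vector search may rank.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Set

# Hypothetical corpus: each chunk is tagged with the graph entity it describes.
CHUNKS: Dict[str, Dict[str, str]] = {
    "c1": {"entity": "SupplierA", "text": "SupplierA ships brake pads from Lyon."},
    "c2": {"entity": "Part-42", "text": "Part-42 brake pads are used in Model-Z."},
    "c3": {"entity": "Model-Z", "text": "Model-Z is sold in the EU market."},
    "c4": {"entity": "SupplierB", "text": "SupplierB ships tires from Osaka."},
}
# Hypothetical undirected graph over the same entities (adjacency sets).
GRAPH: Dict[str, Set[str]] = {
    "SupplierA": {"Part-42"},
    "Part-42": {"SupplierA", "Model-Z"},
    "Model-Z": {"Part-42"},
    "SupplierB": set(),
}

def embed(text: str) -> Counter:
    # Word-count stand-in for a real embedding model (an assumption).
    return Counter(re.findall(r"\w+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(n * b[t] for t, n in a.items())
    norms = math.sqrt(sum(n * n for n in a.values())) * math.sqrt(sum(n * n for n in b.values()))
    return dot / norms if norms else 0.0

def vector_search(query: str, chunk_ids: List[str], k: int) -> List[str]:
    q = embed(query)
    ranked = sorted(chunk_ids, key=lambda cid: cosine(q, embed(CHUNKS[cid]["text"])), reverse=True)
    return ranked[:k]

def vector_then_graph(query: str, k: int = 1) -> List[str]:
    # Strategy 1: broad vector recall, then add chunks about the hits' graph neighbors.
    hits = vector_search(query, list(CHUNKS), k)
    neighbors: Set[str] = set()
    for cid in hits:
        neighbors |= GRAPH[CHUNKS[cid]["entity"]]
    extra = [cid for cid, c in CHUNKS.items() if c["entity"] in neighbors and cid not in hits]
    return hits + extra

def graph_then_vector(query: str, seed: str, k: int = 1) -> List[str]:
    # Strategy 2: restrict candidates to the seed entity and its neighbors, then rank.
    allowed = {seed} | GRAPH[seed]
    candidates = [cid for cid, c in CHUNKS.items() if c["entity"] in allowed]
    return vector_search(query, candidates, k)

print(vector_then_graph("Who ships brake pads?"))                   # ['c1', 'c2']
print(vector_search("Who ships from where?", list(CHUNKS), k=1))   # ['c4']
print(graph_then_vector("Who ships from where?", seed="Part-42"))  # ['c1']
```

In the last two calls, the same query ranks SupplierB's chunk first when searching the whole corpus, but restricting candidates to Part-42's neighborhood returns the SupplierA chunk instead, which is the focusing effect of running graph queries first.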

This combination works especially well in large or heterogeneous datasets where speed and depth are both important, such as enterprise knowledge bases or research platforms.

By layering the two RAG techniques, teams can reduce hallucinations, improve explainability, and maintain performance at scale.

Choose the RAG your data deserves

Both GraphRAG and Vector RAG have clear strengths, but the right choice depends on your data, complexity, and performance goals.

Some teams thrive with the speed and scalability of vector retrieval, while others require the structured reasoning and explainability of graph-based approaches.

Many will benefit from a hybrid model that blends both.