What is GraphRAG: Complete guide [2026]

Discover how GraphRAG improves traditional RAG by using graph-based reasoning to deliver more accurate, explainable, and context-rich AI responses.

![What is GraphRAG: Complete guide [2026]](/_next/image?url=https%3A%2F%2Funable-actionable-car.media.strapiapp.com%2FWhat_is_Graph_RAG_Complete_guide_cbd31baee5.png&w=3840&q=75)


Traditional retrieval-augmented generation (RAG) is powerful, but it often treats information as isolated chunks, missing the connections between entities that give answers depth and context.

GraphRAG changes that by combining knowledge graphs with large language models, allowing AI to reason across linked data, trace relationships, and produce richer, more accurate responses.

Whether you’re building advanced chatbots, analyzing legal documents, or exploring healthcare research, GraphRAG offers explainable and scalable results.

In this article, we explain how GraphRAG works, why it matters, and the tools and steps needed to implement it effectively.

What is GraphRAG?

GraphRAG stands for Graph Retrieval-Augmented Generation. It follows the basic principles of RAG systems but adds a knowledge graph to the retrieval step, so the large language model receives connected, context-rich information and can produce more accurate responses.

Instead of retrieving isolated text chunks, GraphRAG uses the structure of the graph to map the relationships between entities or documents. This lets the model reason across those connections and give more in-depth answers.

Let’s look at how GraphRAG works in practice.

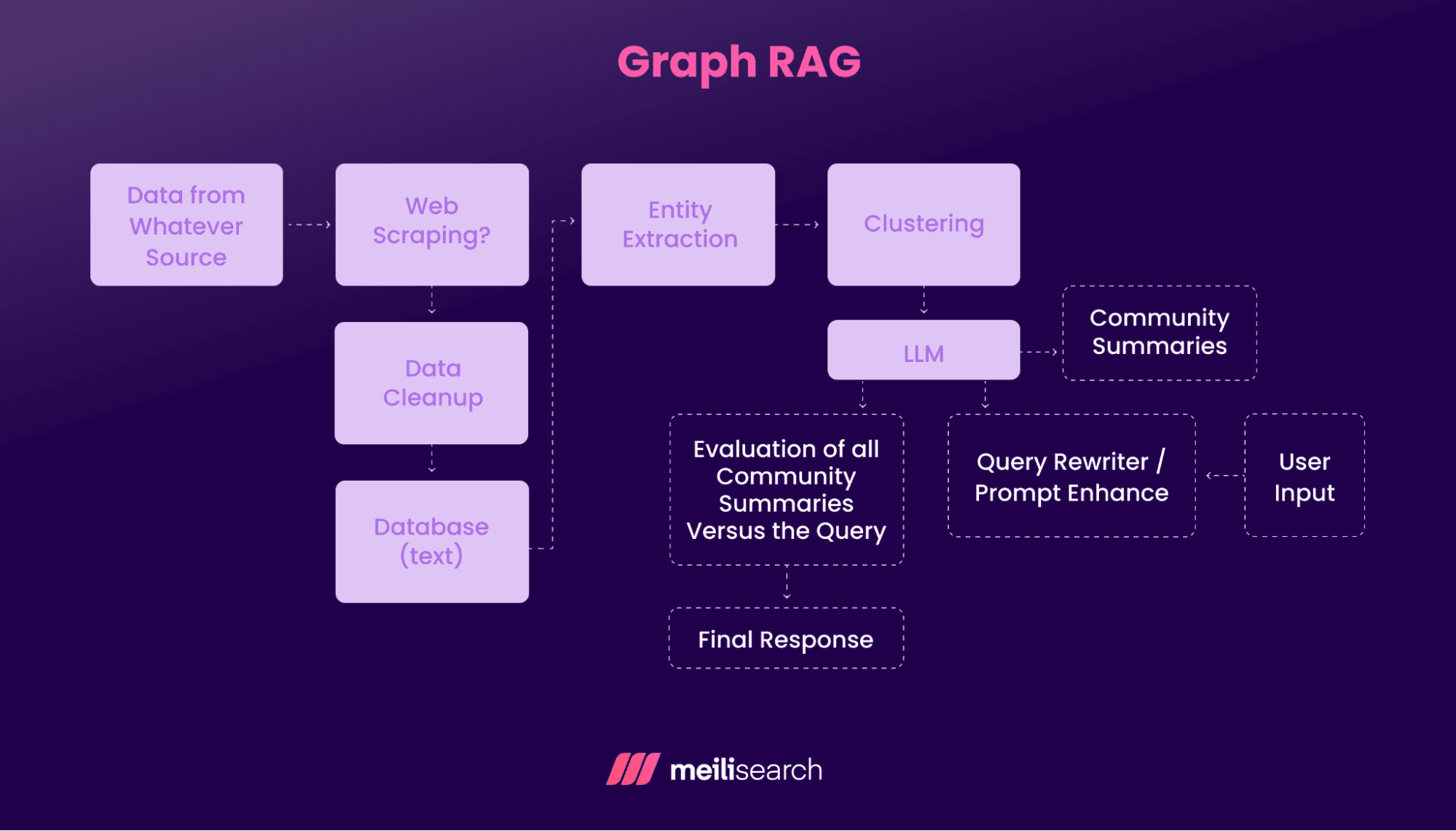

How does GraphRAG work?

GraphRAG works by blending structured data from a graph with language model reasoning.

The process starts with indexing and retrieval steps similar to those in a traditional RAG retrieval workflow, but extends them with a graph layer for connected reasoning and contextual information that reflects real-world relationships.

Let’s look at the sequence of steps in a typical GraphRAG workflow:

- Build the knowledge graph: Start by collecting your source material – documents, datasets, or domain-specific content. From this, you extract entities (such as people, concepts, or places) and the relationships between them, either with natural language processing tools or manual curation. These entities and connections are then stored in a graph database or graph data structure.

- Retrieve relevant context: When a user submits a query, the system first searches for relevant nodes and edges in the graph. A search engine like Meilisearch can quickly handle text or metadata retrieval before moving on to graph traversal. The result is a set of related entities and their connections, forming a structured context for the query.

- Generate with LLMs: Finally, the retrieved subgraph, supporting documents, and the user’s query are passed to the LLM. The model combines the textual content with the graph’s relationships to produce a context-rich answer that responds directly to the query and provides supporting details tied to the graph’s structure.

In practice, this setup helps the LLM understand how different pieces of information fit together and improves overall explainability.
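The three steps can be sketched in plain Python. This is a minimal in-memory illustration, not a production design: it assumes relationships have already been extracted as `(source, relation, target)` triples, the helper names `build_graph`, `retrieve_subgraph`, and `build_prompt` are hypothetical, entity lookup is simple substring matching instead of a search engine, and the LLM call itself is left out.

```python
from collections import defaultdict

# A toy, in-memory stand-in for a graph database; all names are hypothetical
Triple = tuple[str, str, str]  # (source, relation, target)


def build_graph(triples: list[Triple]) -> dict[str, list[Triple]]:
    """Build the knowledge graph: index each triple under both endpoints."""
    graph: dict[str, list[Triple]] = defaultdict(list)
    for src, rel, dst in triples:
        graph[src].append((src, rel, dst))
        graph[dst].append((src, rel, dst))
    return graph


def retrieve_subgraph(graph: dict[str, list[Triple]], query: str, hops: int = 1) -> set[Triple]:
    """Retrieve context: seed on entities named in the query, then expand `hops` steps."""
    frontier = {name for name in graph if name.lower() in query.lower()}
    visited = set(frontier)
    edges: set[Triple] = set()
    for _ in range(hops):
        next_frontier = set()
        for node in frontier:
            for src, rel, dst in graph[node]:
                edges.add((src, rel, dst))
                for neighbor in (src, dst):
                    if neighbor not in visited:
                        visited.add(neighbor)
                        next_frontier.add(neighbor)
        frontier = next_frontier
    return edges


def build_prompt(query: str, edges: set[Triple]) -> str:
    """Generate: hand the query plus the subgraph's relationships to the LLM."""
    facts = "\n".join(f"- {s} -[{r}]-> {d}" for s, r, d in sorted(edges))
    return f"Question: {query}\nGraph facts:\n{facts}\nAnswer using only these facts."


triples = [
    ("Acme Ltd", "ACQUIRED", "Nova Bank"),
    ("Nova Bank", "OPERATES_IN", "New York"),
    ("Zeta Corp", "OPERATES_IN", "Boston"),
]
graph = build_graph(triples)
question = "Who owns Nova Bank?"
print(build_prompt(question, retrieve_subgraph(graph, question)))
```

The unrelated Zeta Corp edge never reaches the prompt. Raising `hops` widens the neighborhood the LLM sees; keeping it small is what keeps the context focused.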

What are the benefits of using GraphRAG?

The key benefits of GraphRAG include:

- Improved accuracy: Graph traversal narrows retrieval to the nodes and documents connected to the query, which reduces noise in the retrieved context.

- Stronger reasoning: Structured connections in a graph let the LLM follow logical paths, which can make its answers more coherent and less prone to hallucination than reasoning over unstructured chunks alone.

- Explainability: GraphRAG makes it easier to trace how an answer was formed. Developers can inspect and explain the nodes and relationships that support each output.

- Scalability: Graph structures make it easier to expand knowledge bases while keeping retrieval efficient, even as the volume of data grows.

These benefits make GraphRAG especially valuable in domains that rely on precision and trust, such as healthcare, finance, and law.

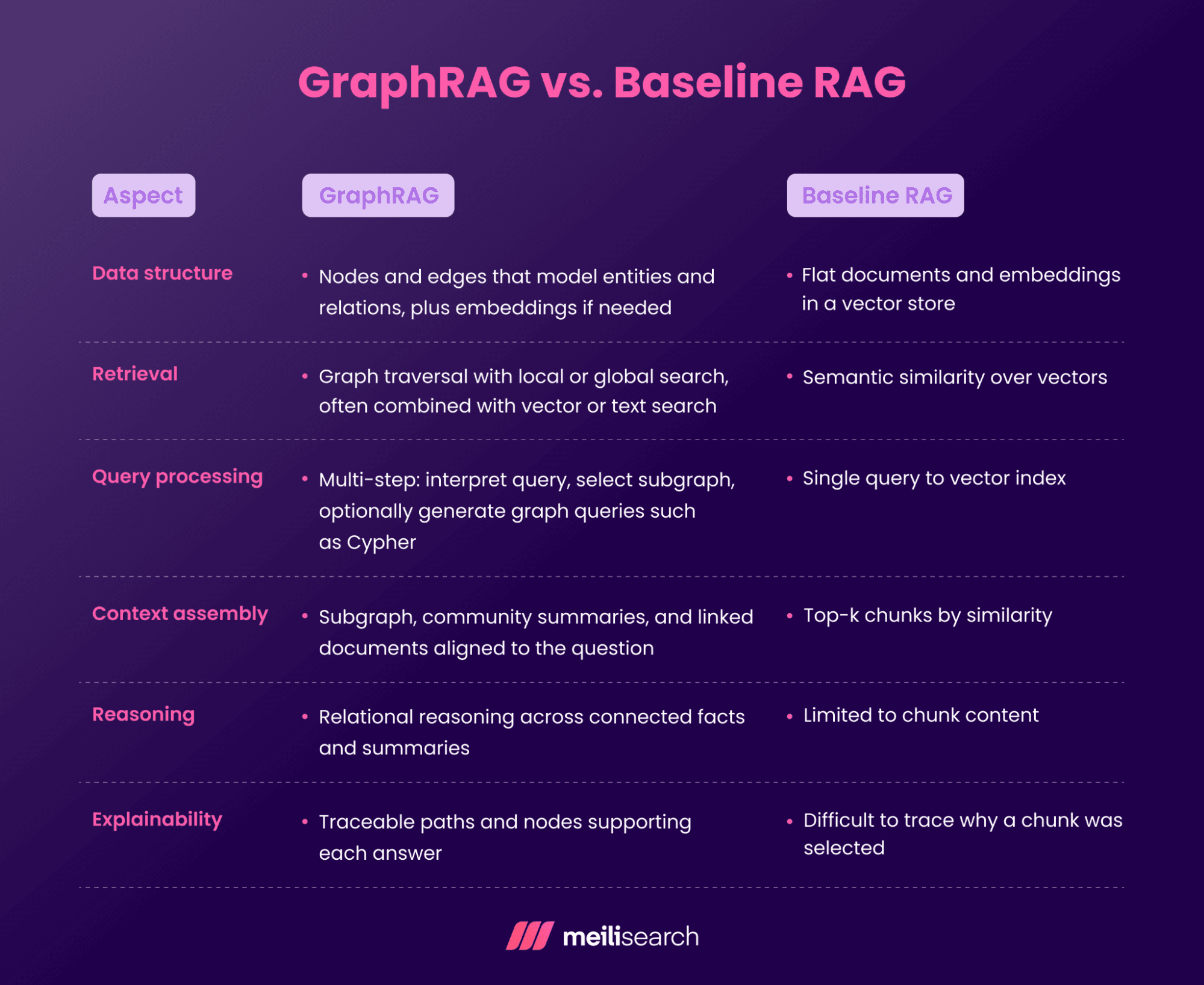

How is GraphRAG different from baseline RAG?

GraphRAG is one of several types of RAG, and its retrieval process differs from that of baseline RAG.

Baseline RAG is built on vector search. GraphRAG can still use vector and full-text search, but structured relationships drive what gets retrieved.

Key differences between baseline RAG and GraphRAG include:

- Data structure: Baseline RAG stores documents as embeddings in a vector database, while GraphRAG models entities and relationships as nodes and edges, optionally combined with embeddings.

- Retrieval: Baseline RAG relies on semantic similarity over vectors, whereas GraphRAG uses graph traversal with local or global search, often combined with vector or text search.

- Query processing: Baseline RAG sends a single query to the vector index, while GraphRAG follows a multi-step process that interprets the query, selects a subgraph, and may generate graph queries such as Cypher.

- Context assembly: Baseline RAG provides top-k chunks by similarity, while GraphRAG assembles a subgraph, community summaries, and linked documents aligned to the question.

- Reasoning: Baseline RAG is limited to reasoning within the retrieved chunk, whereas GraphRAG enables relational reasoning across connected facts and summaries.

- Explainability: Baseline RAG makes it difficult to trace why a chunk was retrieved, while GraphRAG offers traceable paths and nodes that support each answer.
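To see the retrieval and reasoning differences on a multi-hop question, here is a toy comparison. It assumes word overlap as a stand-in for embedding similarity and a hand-written triple list as the graph; all names are hypothetical, and the target relation is chosen by hand rather than inferred from the query. Baseline retrieval returns the most similar chunk, while the graph search follows relationships outward from the query's entity and returns the path it took.

```python
from collections import deque

# Toy corpus and graph; word overlap stands in for embedding similarity
chunks = [
    "Acme Ltd acquired Nova Bank in 2021.",
    "Nova Bank operates in New York.",
    "Acme Ltd is headquartered in London.",
]
triples = [
    ("Acme Ltd", "ACQUIRED", "Nova Bank"),
    ("Nova Bank", "OPERATES_IN", "New York"),
    ("Acme Ltd", "HEADQUARTERED_IN", "London"),
]


def words(text: str) -> set[str]:
    return {w.strip(".,?").lower() for w in text.split()}


def baseline_top_k(query: str, k: int = 1) -> list[str]:
    """Baseline RAG: rank chunks by similarity to the query, keep the top k."""
    q = words(query)
    return sorted(chunks, key=lambda c: len(q & words(c)), reverse=True)[:k]


def graph_path(start: str, goal_relation: str) -> list[tuple[str, str, str]]:
    """GraphRAG-style: breadth-first search over outgoing edges until goal_relation is reached."""
    queue = deque([(start, [])])
    visited = {start}
    while queue:
        node, path = queue.popleft()
        for src, rel, dst in triples:
            if src != node:
                continue
            step = path + [(src, rel, dst)]
            if rel == goal_relation:
                return step
            if dst not in visited:
                visited.add(dst)
                queue.append((dst, step))
    return []


question = "Where does the company Acme Ltd acquired operate?"
print(baseline_top_k(question))  # ['Acme Ltd acquired Nova Bank in 2021.']
for src, rel, dst in graph_path("Acme Ltd", "OPERATES_IN"):
    print(f"{src} -[{rel}]-> {dst}")
# Acme Ltd -[ACQUIRED]-> Nova Bank
# Nova Bank -[OPERATES_IN]-> New York
```

The most similar chunk mentions the acquisition but not where Nova Bank operates, so a small top-k misses the answer. The graph search reaches New York in two hops, and the returned path shows exactly which facts support the answer.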

What is a knowledge graph in GraphRAG?

A knowledge graph is a structured representation of information in which entities are stored as nodes and the connections between them are stored as edges, or relationships.

Nodes don’t all represent the same kind of thing: one node might be a document, another a concept, another a data point. Relationships describe how these nodes are linked, and together they give a generative AI model better context for its answers.
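One minimal way to represent this, assuming a simple in-memory model (the `Node` and `Edge` classes, the node kinds, and the relationship names are all hypothetical), is to give each node a kind and each edge a label, so a single graph can hold documents, concepts, and data points side by side.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    id: str
    kind: str  # hypothetical kinds: "document", "concept", "entity", "data_point"


@dataclass(frozen=True)
class Edge:
    src: Node
    rel: str  # the relationship label, e.g. "DESCRIBES"
    dst: Node


report = Node("AML report 2024", "document")
acquisition = Node("Acquisition", "concept")
nova = Node("Nova Bank", "entity")
year = Node("2021", "data_point")

edges = [
    Edge(report, "DESCRIBES", acquisition),
    Edge(acquisition, "TARGET", nova),
    Edge(acquisition, "YEAR", year),
]


def linked(node: Node, edges: list[Edge]) -> list[tuple[str, Node]]:
    """Return (relationship, neighbor) pairs for a node's outgoing edges."""
    return [(e.rel, e.dst) for e in edges if e.src == node]


for rel, neighbor in linked(acquisition, edges):
    print(f"{acquisition.id} -[{rel}]-> {neighbor.id} ({neighbor.kind})")
```

The relationship labels carry the meaning here: the same "Acquisition" concept points to an entity and a data point through different edges.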

Next, we will look at common use cases where GraphRAG shines.

What are common use cases for GraphRAG?

GraphRAG is ideal in domains where the answers must be based on structured relationships and context.

Let’s see some examples:

- Advanced chatbots: GraphRAG helps chatbots deliver more relevant responses by grounding them in context drawn from linked sources.

- Answering complex queries: GraphRAG is well suited to queries that span multiple topics, such as linking research findings across disciplines.

- Financial services: Finance is a particularly strong fit for GraphRAG, which can connect company data, market trends, and regulatory filings to support investment analysis.

- Healthcare research: In healthcare, GraphRAG contributes to research by linking patient records, studies, and treatment outcomes for more informed recommendations.

- Legal document analysis: In the legal domain, GraphRAG helps map case law, statutes, and prior rulings to answer legal questions with supporting references.

- Scientific knowledge exploration: GraphRAG can connect datasets, experiments, and literature to help researchers build hypotheses.

These use cases highlight the variety and potential influence of GraphRAG when applied correctly.

Moving on, we will review tools that can help you implement it.

What tools are available for implementing GraphRAG?

GraphRAG tools manage knowledge graphs, retrieve relevant nodes and documents, and integrate with LLMs for generation.

Depending on your goal, you may need a graph database, a fast search engine, or an orchestration framework.

Tools commonly used in GraphRAG applications include:

- Meilisearch: Our fast, developer-friendly search engine that can serve as the initial retrieval layer before graph traversal.

- LangChain: A framework for chaining retrieval and generation components, with support for graph-based workflows.

- Weaviate: An open-source vector database with built-in support for knowledge graphs, allowing you to combine semantic search with graph-based retrieval.

- FAISS: A high-performance library for efficient similarity search over large-scale embeddings, often used alongside graphs to speed up retrieval of relevant information.

- Haystack: A flexible framework for building end-to-end RAG pipelines, including integration with graph databases and LLMs.

- Neo4j: A widely used graph database and a common choice for GraphRAG implementations.

- GitHub repositories: Many GraphRAG examples are available on GitHub, shared by independent developers and by enterprise providers like Microsoft or OpenAI.

The right combination of tools depends on the format of your data sources, your scale, and your reasoning needs.

Now, it’s time to go through the steps to implement GraphRAG.

How to implement GraphRAG

Implementing GraphRAG follows a relatively simple pattern: index text for fast search, build a graph of entities and links, retrieve a focused subgraph, and let the LLM use that structure during generation.

Here’s a practical path you can build:

1. Prepare data and set up Meilisearch

The first step ingests documents, normalizes fields, and sets up fast full-text and filtered search.

Fast recall ensures the graph only has to operate over a smaller, high-relevance subset of documents instead of the entire dataset, which keeps queries efficient.

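```python
# Install the Meilisearch Python client first: pip install meilisearch
from meilisearch import Client

# Connect to a local Meilisearch instance
client = Client("http://127.0.0.1:7700", "MASTER_KEY")

# Get a handle to the "docs" index (Meilisearch creates it on first write)
idx = client.index("docs")

docs = [
    {"id": "r1", "title": "AML report 2024", "body": "Acme Ltd acquired Nova Bank in 2021."},
    {"id": "r2", "title": "Filing 10-K", "body": "Nova Bank operates in New York."},
]

# Add docs and make title filterable; both calls return an asynchronous task
add_task = idx.add_documents(docs)
settings_task = idx.update_settings({"filterableAttributes": ["title"]})

# Wait for both tasks so the documents and settings are in place before searching
client.wait_for_task(add_task.task_uid)
client.wait_for_task(settings_task.task_uid)
```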

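Note: add_documents and update_settings are both asynchronous. Each returns a task, and the documents or settings may not be applied until that task finishes, so wait for the tasks (for example, with client.wait_for_task) before searching. Otherwise, results may not be immediately available.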

Next, use these docs to build the graph.

2. Build the knowledge graph

Step two extracts entities and relationships, then stores them as nodes and edges in a graph database or an in-memory graph.

Unlike flat text, a knowledge graph preserves the relationships between entities (who did what, where, and when), giving the LLM structured context for reasoning.

```python
import re

import spacy
from neo4j import GraphDatabase

# Load a small English pipeline (install with: python -m spacy download en_core_web_sm)
nlp = spacy.load("en_core_web_sm")

text = "Acme Ltd acquired Nova Bank in 2021. Nova Bank operates in New York."
doc = nlp(text)

# Extract entities: surface text plus spaCy label (ORG, GPE, DATE, ...)
entities = [{"id": ent.text, "type": ent.label_} for ent in doc.ents]

# For simplicity, define relations manually
relations = [
    ("Acme Ltd", "ACQUIRED", "Nova Bank"),
    ("Nova Bank", "OPERATES_IN", "New York"),
]

# Connect to Neo4j
driver = GraphDatabase.driver("bolt://localhost:7687", auth=("neo4j", "password"))

# Relationship types can't be query parameters, so validate them before
# interpolating them into the Cypher string
SAFE_REL = re.compile(r"[A-Z][A-Z0-9_]*")


def add_entities_and_relations(entities, relations):
    """Insert entities as nodes and relations as edges (MERGE avoids duplicates)."""
    with driver.session() as session:
        for e in entities:
            session.run(
                "MERGE (n:Entity {name: $name, type: $type})",
                name=e["id"], type=e["type"],
            )
        for src, rel, dst in relations:
            if not SAFE_REL.fullmatch(rel):
                raise ValueError(f"Unsafe relationship type: {rel!r}")
            session.run(
                f"""
                MATCH (a:Entity {{name: $src}}), (b:Entity {{name: $dst}})
                MERGE (a)-[r:`{rel}`]->(b)
                """,
                src=src, dst=dst,
            )


add_entities_and_relations(entities, relations)
```

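Note: In practice, you’d extract relations automatically (e.g., using dependency parsing or an information extraction model). Here they’re hard-coded for clarity. The MATCH clause also assumes spaCy tagged "Acme Ltd", "Nova Bank", and "New York" as entities; if a name wasn’t extracted, its relation is silently skipped.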
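Before reaching for dependency parsing or a trained extraction model, a rule-based pass can cover a few high-value relation types. The sketch below swaps in regular-expression patterns, a simpler technique than either option mentioned above; the `PATTERNS` table and `extract_relations` function are hypothetical and only handle the two sentence shapes in this example. Its output has the same `(source, RELATION, target)` shape as the hand-written `relations` list, so it could feed `add_entities_and_relations` directly.

```python
import re

# Hypothetical rule set: a capitalized (multi-word) name on each side of a fixed phrase
NAME = r"[A-Z]\w*(?: [A-Z]\w*)*"
PATTERNS = {
    "ACQUIRED": re.compile(rf"(?P<src>{NAME}) acquired (?P<dst>{NAME})"),
    "OPERATES_IN": re.compile(rf"(?P<src>{NAME}) operates in (?P<dst>{NAME})"),
}


def extract_relations(text: str) -> list[tuple[str, str, str]]:
    """Return (source, RELATION, target) triples for every pattern match in the text."""
    relations = []
    for rel, pattern in PATTERNS.items():
        for match in pattern.finditer(text):
            relations.append((match.group("src"), rel, match.group("dst")))
    return relations


text = "Acme Ltd acquired Nova Bank in 2021. Nova Bank operates in New York."
print(extract_relations(text))
```

Rules like these are precise but brittle: they miss paraphrases such as "Nova Bank was bought by Acme Ltd", which is where dependency parsing or a learned model earns its cost.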

3. Connect retrieval to the graph

This step takes the user query, does fast recall in Meilisearch, maps results to entities, and expands the subgraph.

This step ensures the LLM sees only the relevant neighborhood of connected entities, reducing noise and making the answer more precise.

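```python
q = "Who owns Nova Bank and where do they operate?"

# Fast recall in Meilisearch: search parameters are passed as a dict
hits = idx.search(q, {"limit": 5})["hits"]

# Map results to seed entities (here done manually, but usually via NER/metadata)
seed_entities = ["Nova Bank"]

edges = []
with driver.session() as session:
    for name in seed_entities:
        # Expand one hop in either direction, reporting each edge's true direction
        result = session.run(
            """
            MATCH (n:Entity {name: $name})-[r]-(m)
            RETURN startNode(r).name AS source, type(r) AS relation,
                   endNode(r).name AS target
            LIMIT 50
            """,
            name=name,
        )
        # Consume the result while the session is still open
        edges.extend(record.data() for record in result)

print("Retrieved edges:", edges)
```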
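In the code above, the seed entities are hard-coded. One simple way to derive them from the Meilisearch hits, assuming you already have a list of entity names from the graph (for example from the Cypher query `MATCH (n:Entity) RETURN n.name`), is case-insensitive name matching. `seed_entities_from_hits` is a hypothetical helper that stands in for NER or metadata lookup, and the sample hits are shaped like the documents indexed in step 1.

```python
def seed_entities_from_hits(query, hits, known_entities, field="body"):
    """Hypothetical helper: map search hits to graph entities by name matching.

    Keeps the known entities mentioned in the hits; if the query itself names
    some of them, only those are returned so expansion starts where the user asked.
    """
    hit_text = " ".join(str(hit.get(field, "")) for hit in hits).lower()
    in_hits = [name for name in known_entities if name.lower() in hit_text]
    in_query = [name for name in in_hits if name.lower() in query.lower()]
    return in_query or in_hits


# Sample hits shaped like the documents indexed in step 1
hits = [
    {"id": "r1", "title": "AML report 2024", "body": "Acme Ltd acquired Nova Bank in 2021."},
    {"id": "r2", "title": "Filing 10-K", "body": "Nova Bank operates in New York."},
]
known_entities = ["Acme Ltd", "Nova Bank", "New York"]
print(seed_entities_from_hits("Who owns Nova Bank and where do they operate?", hits, known_entities))
```

Preferring entities the user named keeps the traversal anchored to the question; falling back to everything the hits mention helps when the query uses different wording than the graph.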

Next, pack the right context for generation.

4. Assemble context and generate with the LLM

This step summarizes nodes, edges, and linked passages. It builds a compact prompt that shows content and relationships.

The assembled context presents both the facts and their links, so the LLM can generate an answer that reflects not just content but relationships.

```python
from openai import OpenAI

# Keep only the nodes that appear in the retrieved subgraph
nodes = sorted({e["source"] for e in edges} | {e["target"] for e in edges})

# Render edges as readable triples and hits as supporting passages
node_list = ", ".join(nodes)
edge_lines = "\n".join(f"- {e['source']} -[{e['relation']}]-> {e['target']}" for e in edges)
text_lines = "\n".join(f"- {hit['body']}" for hit in hits)

prompt = f"""Question: {q}

Graph nodes: {node_list}

Graph edges:
{edge_lines}

Supporting text:
{text_lines}

Answer with cited nodes and edges."""

# Use a separate name so the Meilisearch client isn't overwritten
llm = OpenAI()  # expects OPENAI_API_KEY in the environment
response = llm.chat.completions.create(
    model="gpt-4o",
    messages=[{"role": "user", "content": prompt}],
)
print(response.choices[0].message.content)
```
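As the subgraph grows, the prompt needs a size cap to stay compact. This sketch assumes a character budget as a rough proxy for tokens (a real pipeline would count tokens with the model's tokenizer) and gives graph edges priority over raw passages; `pack_context` and its default budget are hypothetical. The inputs use the same `source`/`relation`/`target` edge keys as step 3 and the hit bodies as passages.

```python
def pack_context(edges, passages, budget=600):
    """Hypothetical packer: fill a character budget with edges first, then passages.

    Stops at the first item that doesn't fit, so lower-priority text is dropped first.
    The budget counts item lines only, not section headers.
    """
    candidates = [
        ("Graph edges", f"- {e['source']} -[{e['relation']}]-> {e['target']}") for e in edges
    ] + [("Supporting text", f"- {p}") for p in passages]

    sections = {"Graph edges": [], "Supporting text": []}
    used = 0
    for section, line in candidates:
        if used + len(line) > budget:
            break
        sections[section].append(line)
        used += len(line)

    # Only emit headers for sections that kept at least one line
    return "\n\n".join(
        f"{name}:\n" + "\n".join(lines) for name, lines in sections.items() if lines
    )


edges = [
    {"source": "Acme Ltd", "relation": "ACQUIRED", "target": "Nova Bank"},
    {"source": "Nova Bank", "relation": "OPERATES_IN", "target": "New York"},
]
passages = ["Acme Ltd acquired Nova Bank in 2021.", "Nova Bank operates in New York."]
print(pack_context(edges, passages, budget=80))
```

Dropping passages before edges reflects the idea that the relationships carry the structure the LLM needs; whether that priority suits your data is a judgment call.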

Moving to the final step, we will measure quality and tighten the loop.

5. Evaluate and improve

The final step checks answers for grounding, novelty, and path coverage, and adds caching, reranking, and guardrails.

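```python
# Feedback storage
feedback_log = []
CONFIDENCE_THRESHOLD = 0.7


def log_query_feedback(query, answer, confidence, missing_entities=None):
    """Record how well a query was answered and which entities were missing."""
    feedback_log.append({
        "query": query,
        "answer": answer,
        "confidence": confidence,
        "missing_entities": missing_entities or [],
    })


def needs_expansion(entry):
    """Flag low-confidence answers and answers with known entity gaps."""
    return (
        entry["confidence"] < CONFIDENCE_THRESHOLD
        or len(entry["missing_entities"]) > 0
    )


# Placeholders: implement these for your own stack
def search_index(entity):
    raise NotImplementedError("Query Meilisearch for documents mentioning the entity")


def extract_entities_relations(text):
    raise NotImplementedError("Run NER/RE and return (entities, relations)")


def upsert_graph(entities, relations):
    raise NotImplementedError("MERGE entities and relations into Neo4j")


def auto_expand_graph(missing_entities):
    """Fetch documents for missing entities and add what they contain to the graph."""
    for entity in missing_entities:
        for doc in search_index(entity):
            entities, relations = extract_entities_relations(doc["body"])
            upsert_graph(entities, relations)


def run_feedback_loop():
    """Expand the graph for every logged query that needs it."""
    for entry in feedback_log:
        if needs_expansion(entry):
            auto_expand_graph(entry["missing_entities"])
```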

Evaluation closes the loop: it helps detect missing entities, reduce latency, and keep answers grounded and reliable.

Note: search_index, extract_entities_relations, and upsert_graph are placeholders. In a real pipeline, you’d implement these functions to query Meilisearch, run NER/RE, and insert results into Neo4j.
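The feedback loop relies on a confidence score. One cheap way to approximate grounding and path coverage, assuming the answer cites entities by name, is to check how many retrieved edges have both endpoints mentioned in the answer. `coverage_report` is a hypothetical helper, and plain string matching is a crude stand-in for more rigorous evaluation.

```python
def coverage_report(answer, edges):
    """Hypothetical check: which retrieved edges does the answer actually reflect?

    An edge counts as covered when both its source and target names appear in
    the answer (case-insensitive). Returns (coverage score, uncovered edges).
    """
    text = answer.lower()

    def mentioned(edge):
        return edge["source"].lower() in text and edge["target"].lower() in text

    missed = [e for e in edges if not mentioned(e)]
    score = (len(edges) - len(missed)) / len(edges) if edges else 0.0
    return score, missed


edges = [
    {"source": "Acme Ltd", "relation": "ACQUIRED", "target": "Nova Bank"},
    {"source": "Nova Bank", "relation": "OPERATES_IN", "target": "New York"},
]
score, missed = coverage_report("Acme Ltd owns Nova Bank.", edges)
print(score, [e["relation"] for e in missed])  # 0.5 ['OPERATES_IN']
```

A score like this could be passed as the `confidence` argument to `log_query_feedback`, so answers that ignore part of the retrieved path fall below `CONFIDENCE_THRESHOLD` and get flagged.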

Key takeaways on building with GraphRAG

GraphRAG brings structure and reasoning to retrieval-augmented generation, improving accuracy, context, and explainability. Combining a knowledge graph with a fast search layer gives LLMs the context they need to deliver better answers – a crucial step toward mastering RAG.