RAG evaluation: Metrics, methodologies, best practices & more

Discover what RAG evaluation is, what methodologies, frameworks and best practices are used, how to implement it and more.


RAG (retrieval-augmented generation) evaluation is the process of measuring how well a system retrieves relevant information and uses it to generate accurate answers for users. It helps improve both the retrieval logic and the quality of responses.

RAG evaluation is crucial for identifying gaps in the system’s performance and reliability across various use cases.

The most common methods associated with RAG evaluation are human evaluation, reference answer scoring, and retrieval relevance checks.

In addition to this, the most widely used metrics are Precision and Recall for retrieval, and Exact Match, F1 score, BLEU, and ROUGE for the generated answers. The tools you’ll see used most often in this domain include Meilisearch, LangChain, RAGatouille, and Haystack.

Now that the stage is set, let’s have a deeper look at what RAG evaluation is and the methods involved.

What is RAG evaluation?

RAG evaluation is the process that determines how effectively a RAG system retrieves information and then uses that information to generate accurate answers for users.

You might have noticed that RAG evaluation covers both retrieval and generation. Covering both gives a clear view of the system's overall correctness.

Once you have that, it becomes easier to identify weaknesses, test improvements, and benchmark different models or approaches.

Why is RAG evaluation important?

RAG evaluation is important because it helps determine whether your system is actually delivering responses that are relevant and helpful to users.

Without this kind of evaluation, it's difficult to know if your pipeline is improving or drifting off course.

RAG evaluation can help teams identify and address issues early, including hallucinations, irrelevant retrieval, and overly complex or inaccurate answers:

- Reduces hallucination risk: Evaluation flags answers generated from weak retrieval, so you can correct them quickly.

- Improves user trust: This evaluation helps make your RAG system fact-based and transparent, improving user confidence.

- Enables faster iteration: With regular evaluation, you can measure impact across changes and optimize performance quickly.

Next, let’s look at the methods used in RAG evaluation.

What methods are used in RAG evaluation?

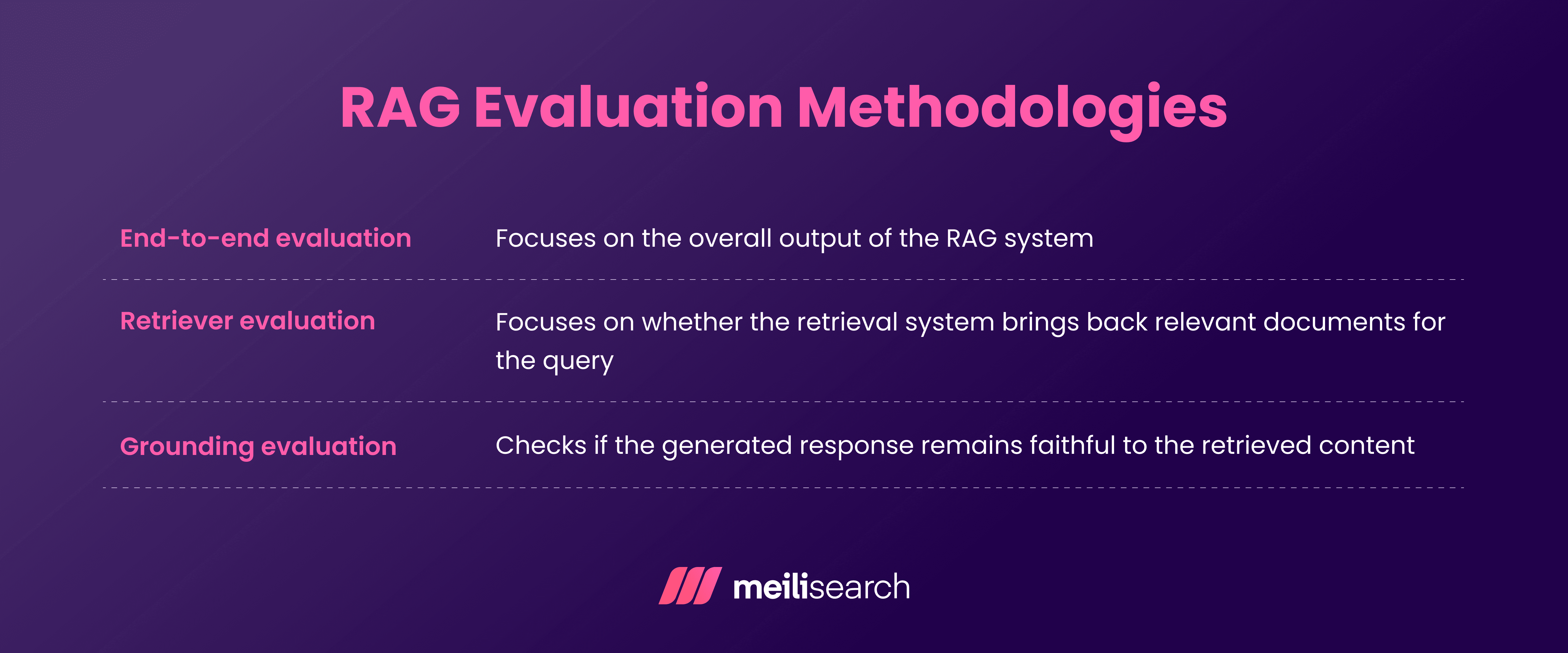

The different methods used in RAG evaluation are shown in the image below:

Together, these approaches evaluate both the retriever and the generator, so output quality is checked at every step.

End-to-end evaluation

First up is the end-to-end evaluation. It focuses on the overall output of the RAG system.

The factors under consideration are the usefulness, accuracy, and fluency of the generated response without breaking down the pipeline components.

While it provides a quick way to compare systems, it doesn’t reveal which part of the pipeline – if any – actually failed.
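Reference answer scoring is one common way to run an end-to-end check: compare each generated answer with a hand-written reference. The sketch below computes Exact Match and token-level F1 after normalizing text (lowercasing, stripping punctuation and English articles), a convention popularized by the SQuAD benchmark. The function names and the example answers are our own illustration, not any specific library's API.

```python
import re
import string
from collections import Counter


def normalize(text: str) -> list:
    """Lowercase, strip punctuation and English articles, then split on whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return text.split()


def exact_match(prediction: str, reference: str) -> float:
    """1.0 if the normalized answers are identical token for token, else 0.0."""
    return float(normalize(prediction) == normalize(reference))


def token_f1(prediction: str, reference: str) -> float:
    """Harmonic mean of token-level precision and recall against the reference."""
    pred, ref = normalize(prediction), normalize(reference)
    if not pred or not ref:
        # Two empty answers count as a match; one empty answer is a miss.
        return float(pred == ref)
    # Multiset intersection: a shared token counts at most min(count_pred, count_ref) times.
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


reference = "The capital of France is Paris."
print(exact_match("Paris is the capital of France", reference))
print(token_f1("Paris is the capital of France", reference))
print(round(token_f1("It is Paris", reference), 2))
```

Here the reordered answer gets an Exact Match of 0.0 but a token F1 of 1.0, and the partial answer scores 0.5. F1 rewards token overlap regardless of word order, which is why it is often reported alongside Exact Match.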

Retriever evaluation

Retrieval evaluation, as its name suggests, focuses on whether the retrieval system brings back relevant documents for the query.

The evaluators can be human or automated. Either way, their function is to judge the quality of the retrieved context using a relevance score.

Because it isolates the retriever, this lets you pinpoint whether poor answers downstream stem from weak retrieval.
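One way to automate retriever evaluation is to label, for each test query, which document IDs are relevant, then score the ranked results your search engine returns. The sketch below computes hit rate at k and mean reciprocal rank (MRR). The query IDs, document IDs, and labels are invented for illustration.

```python
def hit_rate_at_k(results: dict, relevant: dict, k: int) -> float:
    """Fraction of queries with at least one relevant document in the top k results."""
    hits = sum(1 for query, ranked in results.items() if relevant[query] & set(ranked[:k]))
    return hits / len(results)


def mean_reciprocal_rank(results: dict, relevant: dict) -> float:
    """Mean of 1/rank of the first relevant document per query (0 when none is retrieved)."""
    total = 0.0
    for query, ranked in results.items():
        for rank, doc_id in enumerate(ranked, start=1):
            if doc_id in relevant[query]:
                total += 1.0 / rank
                break
    return total / len(results)


# Hypothetical ranked results (query -> document IDs, best first) and relevance labels.
results = {
    "q1": ["d3", "d1", "d7"],
    "q2": ["d2", "d5", "d9"],
    "q3": ["d8", "d4", "d6"],
}
relevant = {"q1": {"d1"}, "q2": {"d2"}, "q3": {"d0"}}

print(f"hit@1 = {hit_rate_at_k(results, relevant, 1):.2f}")
print(f"hit@3 = {hit_rate_at_k(results, relevant, 3):.2f}")
print(f"MRR   = {mean_reciprocal_rank(results, relevant):.2f}")
```

With these labels, the relevant document for q3 is never retrieved, so hit@3 is 0.67 and MRR is 0.50. A low score here points at the retriever rather than the generator.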

Grounding evaluation

Grounding evaluation checks whether the generated response remains faithful to the retrieved content.

How do we find that out? The output is compared with the supporting context to check factual accuracy. Naturally, this helps you spot and fix potential hallucinations.
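Evaluation frameworks typically use an LLM or a natural-language-inference model to judge grounding, but the comparison idea can be shown with a deliberately crude lexical heuristic: split the answer into sentences and flag any sentence whose content words mostly do not appear in the retrieved context. The 0.5 threshold and the tiny stopword list are arbitrary assumptions, and word overlap will miss paraphrases and changed numbers, so treat this as an illustration only.

```python
import re

# Deliberately tiny stopword list; an assumption for illustration only.
STOPWORDS = {"a", "an", "the", "is", "are", "was", "were", "of", "in", "on", "to", "and", "it", "its"}


def content_words(text: str) -> set:
    """Lowercased alphanumeric tokens, minus stopwords."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def split_sentences(text: str) -> list:
    """Naive split on whitespace that follows '.', '!' or '?'."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def grounding_report(answer: str, context: str, threshold: float = 0.5):
    """Return (share of supported sentences, list of unsupported sentences)."""
    ctx = content_words(context)
    sentences = split_sentences(answer)
    flagged = []
    for sentence in sentences:
        words = content_words(sentence)
        # Share of the sentence's content words that also appear in the context.
        support = len(words & ctx) / len(words) if words else 1.0
        if support < threshold:
            flagged.append(sentence)
    score = 1 - len(flagged) / len(sentences) if sentences else 1.0
    return score, flagged


context = "The warranty covers parts and labor for two years."
answer = "The warranty lasts two years. It also includes free shipping worldwide."
score, flagged = grounding_report(answer, context)
print(score, flagged)
```

The first sentence passes (three of its four content words appear in the context), while the unsupported shipping claim is flagged, giving a score of 0.5.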

What are the key RAG evaluation metrics?

RAG evaluation metrics measure the quality of the retrieved documents and the accuracy of the generated response. Developers can use this information to identify where the pipeline is failing and make the necessary corrections.

Some of the key RAG evaluation metrics to know are the following:

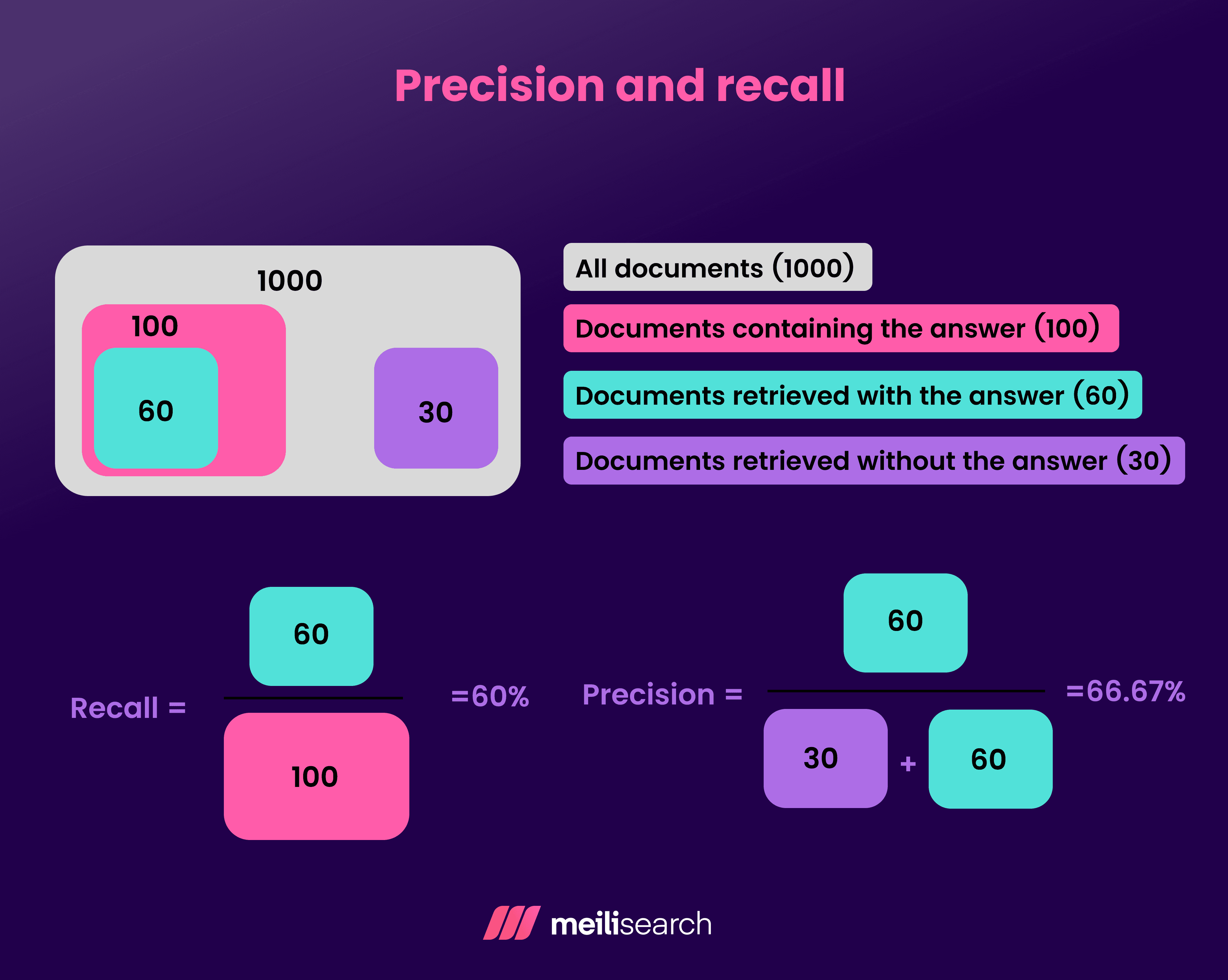

- Precision and recall: Precision measures the percentage of retrieved documents that are actually relevant, while recall measures the percentage of relevant documents that were retrieved. You need both to balance accuracy and coverage in information retrieval.

- Groundedness: This metric evaluates whether the generated output is factually consistent with the retrieved documents. A high groundedness score means the model is less likely to hallucinate.

- Faithfulness: Faithfulness measures how well the generated text sticks to the facts in the source material. Unlike groundedness, faithfulness seeks to identify distortions or unsupported claims.

- Answer relevance: Is the final answer even relevant to the user’s query? Answer relevance measures this, using either human ratings or automated scoring methods.

- Fluency: Fluency assesses how natural and readable the generated response is. After all, the target user is human. While secondary to accuracy, fluency impacts user trust and the overall experience.
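Precision and recall are usually computed over the top k retrieved documents for each query. The sketch below assumes a ranked list of document IDs and a hand-labeled set of relevant IDs for a single query (both invented here), and shows how raising k tends to trade precision for recall.

```python
def precision_at_k(ranked: list, relevant: set, k: int) -> float:
    """Relevant documents among the top k, divided by k."""
    if k <= 0:
        raise ValueError("k must be positive")
    return sum(1 for doc_id in ranked[:k] if doc_id in relevant) / k


def recall_at_k(ranked: list, relevant: set, k: int) -> float:
    """Relevant documents among the top k, divided by the total number of relevant documents."""
    if not relevant:
        return 0.0
    return sum(1 for doc_id in ranked[:k] if doc_id in relevant) / len(relevant)


# Hypothetical ranking for one query, plus its hand-labeled relevant documents.
ranked = ["d1", "d4", "d2", "d9", "d3"]
relevant = {"d1", "d2", "d3"}

for k in (1, 3, 5):
    p = precision_at_k(ranked, relevant, k)
    r = recall_at_k(ranked, relevant, k)
    print(f"k={k}: precision={p:.2f}, recall={r:.2f}")
```

With this sample data, precision falls from 1.00 at k=1 to 0.60 at k=5 while recall rises from 0.33 to 1.00, which is the balance between accuracy and coverage described above.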

Together, these metrics serve as essential benchmarks for any RAG application, providing a complete and honest picture of the RAG system.

What are the challenges in RAG evaluation?

RAG evaluation has a few unique challenges that we’ll list here:

- No universal benchmarks: Unlike classic machine learning, there is no universal benchmark for RAG evaluation that applies to every type of RAG system, as they all differ in architecture.

- Human evaluation costs too much: Groundedness and relevance often require human judgment, which is expensive and hard to scale.

- Dynamic data and drift: Because RAG pipelines interact frequently with evolving knowledge bases, evaluation results can quickly become outdated.

- Trade-offs between metrics: Metrics often pull against each other. For instance, optimizing for fluency might reduce faithfulness, and improving recall could harm precision.

How is RAG evaluation implemented?

RAG evaluation is usually implemented with a mix of tools, frameworks, and services. The process typically combines human review, automated evaluation, and continuous monitoring.

Most teams prefer to conduct offline testing first and then move on to live monitoring once the pipeline is deployed.

Popular RAG evaluation frameworks

Popular open-source frameworks, such as Ragas, TruLens, and DeepEval, are a major help when it comes to automated testing.

These frameworks run automated tests that measure retrieval precision and factual consistency and monitor hallucination rates. With the LLM-as-a-judge grading they provide, you can identify weaknesses in the system and correct them.
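The LLM-as-a-judge pattern can be sketched without any particular library: build a grading prompt from the question, retrieved context, and answer, send it to a model, and parse a numeric score from the reply. In this sketch, `call_llm` is a hypothetical placeholder for whatever model client you use, `fake_llm` is a canned stand-in so the code runs offline, and the prompt wording and 1 to 5 scale are assumptions. Ragas, TruLens, and DeepEval each define their own prompts and metrics.

```python
import re
from typing import Callable, Optional

# Illustrative grading prompt; real frameworks ship their own, more detailed prompts.
JUDGE_TEMPLATE = """You are grading an answer produced by a retrieval-augmented system.
Question: {question}
Retrieved context: {context}
Answer: {answer}

Is every claim in the answer supported by the context?
Reply with a line of the form SCORE: N, where N is 1 (unsupported) to 5 (fully supported)."""


def build_judge_prompt(question: str, context: str, answer: str) -> str:
    return JUDGE_TEMPLATE.format(question=question, context=context, answer=answer)


def parse_score(reply: str) -> Optional[int]:
    """Extract the 1-5 score from the judge's reply; None if it is missing or malformed."""
    match = re.search(r"SCORE:\s*([1-5])\b", reply)
    return int(match.group(1)) if match else None


def judge(question: str, context: str, answer: str,
          call_llm: Callable[[str], str]) -> Optional[int]:
    """Send the grading prompt to `call_llm` (any prompt -> text function) and parse the score."""
    return parse_score(call_llm(build_judge_prompt(question, context, answer)))


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model client, so the sketch runs offline."""
    return "The answer restates the context accurately.\nSCORE: 4"


print(judge("How long is the warranty?", "The warranty covers two years.", "Two years.", fake_llm))
```

Returning `None` for malformed replies, rather than guessing a score, keeps unparseable judgments out of your averages.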

For an easily digestible overview of retrieval layers and orchestration platforms, feel free to check out our guide on the top RAG tools.

Synthetic test datasets

Another common method is creating synthetic evaluation datasets that replicate real-world query patterns.

How does this help? It allows developers to benchmark retrieval quality and groundedness under a controlled set of conditions. You get to identify coverage gaps before the pipeline enters the production stage.
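A synthetic dataset pairs generated queries with the document they were derived from, so that document becomes the known-relevant answer. Teams often ask an LLM to write the questions; to keep this sketch self-contained, a hypothetical template-based generator stands in for that step, and the document IDs and topics are invented.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class EvalCase:
    query: str
    relevant_doc_id: str  # the document the query was generated from


# Hypothetical query templates standing in for an LLM-based question generator.
TEMPLATES = [
    "What does the documentation say about {topic}?",
    "How do I set up {topic}?",
    "Can you explain {topic}?",
]


def make_synthetic_cases(docs: dict, per_doc: int = 2, seed: int = 0) -> list:
    """Create `per_doc` queries for each document, labeled with that document's ID."""
    rng = random.Random(seed)  # fixed seed so the test set is reproducible
    cases = []
    for doc_id, topic in docs.items():
        for template in rng.sample(TEMPLATES, k=per_doc):
            cases.append(EvalCase(template.format(topic=topic), doc_id))
    return cases


docs = {"doc-1": "API keys", "doc-2": "typo tolerance", "doc-3": "faceted search"}
for case in make_synthetic_cases(docs):
    print(case.relevant_doc_id, "|", case.query)
```

Because each query carries its source document's ID, the cases can feed retrieval metrics such as hit rate or recall directly, and documents whose queries consistently miss point to coverage gaps.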

Human-in-the-loop validation

There’s no alternative to human insight. Even with strong automation, human evaluation remains critical.

Subject matter experts review sampled queries to verify that the retrieved content is relevant and the generated answers are accurate. This provides a nuanced view of the system that metrics alone cannot capture.
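Experts cannot read every interaction, so review batches are usually sampled. A minimal sketch, assuming each logged interaction carries a `topic` label (a hypothetical field): stratified sampling draws a few items per topic so low-traffic areas are not crowded out by popular ones, and a fixed seed makes the batch reproducible.

```python
import random
from collections import defaultdict


def stratified_sample(logs: list, per_topic: int, seed: int = 42) -> list:
    """Draw up to `per_topic` interactions per topic for expert review."""
    by_topic = defaultdict(list)
    for item in logs:
        by_topic[item["topic"]].append(item)
    rng = random.Random(seed)  # fixed seed makes the review batch reproducible
    batch = []
    for topic in sorted(by_topic):  # fixed topic order so the same seed gives the same batch
        items = by_topic[topic]
        batch.extend(rng.sample(items, k=min(per_topic, len(items))))
    return batch


# Hypothetical log records: 50 billing questions and only 2 about returns.
logs = [{"id": i, "topic": "billing"} for i in range(50)]
logs += [{"id": 100 + i, "topic": "returns"} for i in range(2)]

batch = stratified_sample(logs, per_topic=3)
print([(item["topic"], item["id"]) for item in batch])
```

A plain random sample of five from these logs would often contain no returns questions at all; the stratified batch always includes both of them.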

Continuous monitoring in production

As a developer, you already know that evaluation does not stop once a system is live. Production monitoring tracks key metrics, such as latency and error rates, along with user feedback.

A reliable retrieval layer, such as Meilisearch, supports this process by consistently delivering fast, high-quality context that evaluation frameworks depend on.
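Production monitoring of answer quality can be sketched as a rolling window with an alert threshold. This assumes each answered query yields a score between 0 and 1 (for example, a thumbs-up signal or an automated groundedness score); the window size, baseline, and tolerance are placeholders to tune for your own traffic.

```python
from collections import deque


class RollingMonitor:
    """Alerts when the mean of the last `window` scores falls below baseline - tolerance."""

    def __init__(self, baseline: float, tolerance: float = 0.05, window: int = 100):
        self.baseline = baseline
        self.tolerance = tolerance
        self.scores = deque(maxlen=window)  # oldest scores drop out automatically

    def record(self, score: float) -> bool:
        """Add one score in [0, 1]; return True if the full window has drifted too low."""
        self.scores.append(score)
        if len(self.scores) < self.scores.maxlen:
            return False  # not enough data yet to judge
        mean = sum(self.scores) / len(self.scores)
        return mean < self.baseline - self.tolerance


monitor = RollingMonitor(baseline=0.9, tolerance=0.05, window=4)
for score in [1.0, 1.0, 1.0, 1.0, 0.5, 0.5]:
    if monitor.record(score):
        print("alert: quality below baseline")
```

Waiting for a full window before alerting avoids false alarms from the first few requests; a larger window reacts more slowly but is less noisy.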

Together, these approaches form a cycle of pre-deployment testing, human review, and real-time monitoring. As a result, RAG pipelines remain accurate, efficient, and trustworthy over time.

What are the best practices in RAG evaluation?

Best practices give you the most efficient way to ensure that pipelines are tested thoroughly and fairly. Early weakness detection, hallucination spotting, and maintaining reliability are all part of these best practices.

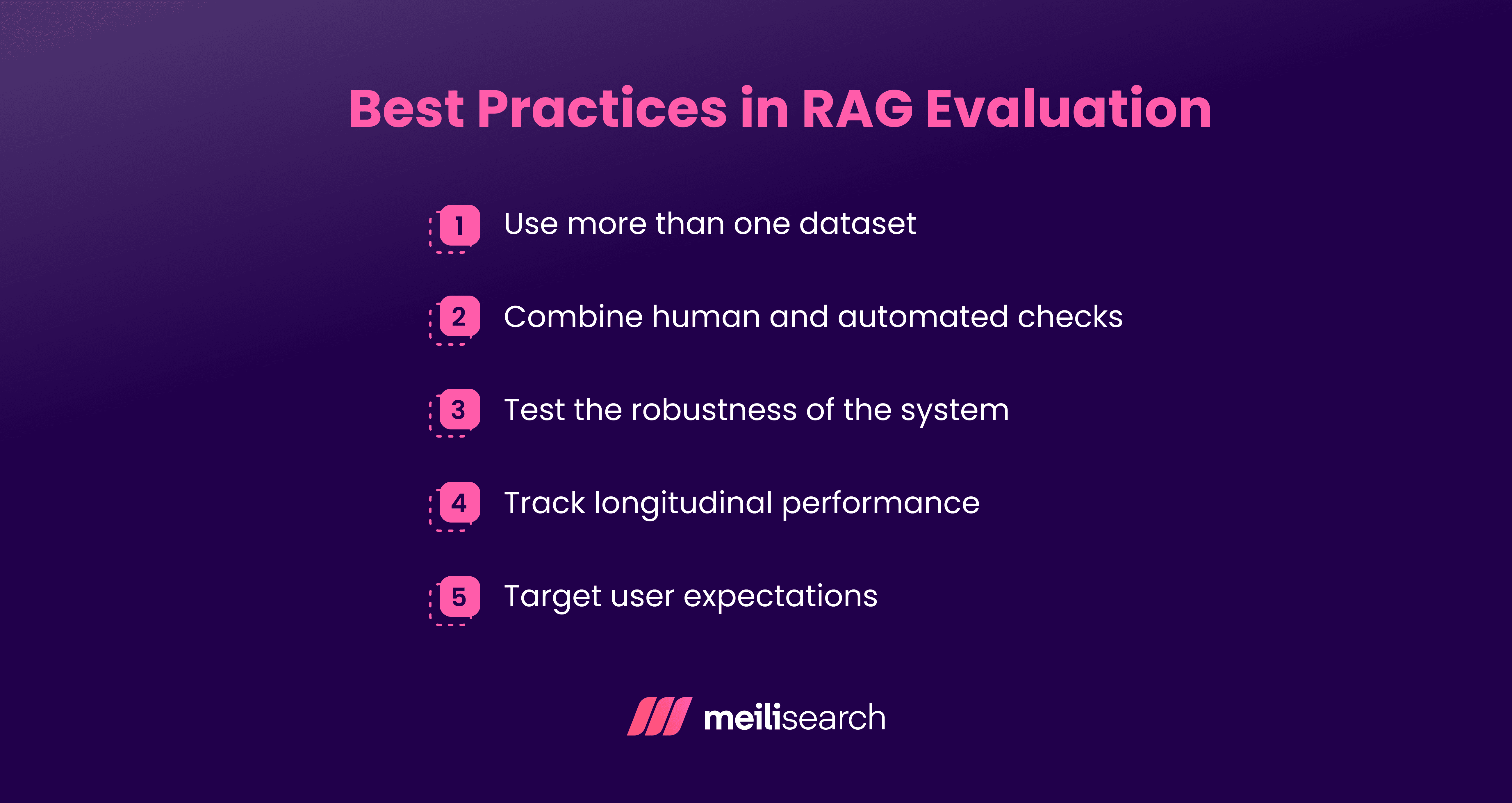

Some of the key best practices include:

- Use more than one test set: Evaluate your system with multiple data types and domains, rather than relying solely on a single benchmark. This exposes weaknesses that would otherwise go unnoticed.

- Combine human and automated checks: Humans provide context-sensitive judgments, while automated metrics offer speed and scale. A balance between both is your best bet.

- Test the robustness of the system: Introduce adversarial or noisy inputs to see how well the system maintains accuracy under less-than-ideal conditions.

- Track longitudinal performance: Don’t treat evaluation as a one-off. Repeat it at regular intervals over time to identify model drift and measure whether improvements stick.

- Target user expectations: Design evaluations that mirror how end-users will interact with the system. Metrics should reflect usefulness, not just technical accuracy.
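For robustness testing, one simple approach is to perturb existing test queries and check how far your metrics fall. The sketch below injects character-level typos (adjacent swaps, dropped letters, and doubled letters) using a seeded random generator; the noise rate and the example query are arbitrary assumptions.

```python
import random


def add_typos(query: str, rate: float = 0.1, seed: int = 0) -> str:
    """Return a copy of `query` where letters are perturbed with probability about `rate`."""
    rng = random.Random(seed)  # seeded so noisy test sets are reproducible
    out = []
    i = 0
    while i < len(query):
        ch = query[i]
        if ch.isalpha() and rng.random() < rate:
            op = rng.choice(("swap", "drop", "double"))
            if op == "swap" and i + 1 < len(query):
                out.append(query[i + 1])  # swap with the next character
                out.append(ch)
                i += 2
                continue
            if op == "drop":
                i += 1  # skip this letter entirely
                continue
            if op == "double":
                out.append(ch)  # first copy; the second is appended below
        out.append(ch)
        i += 1
    return "".join(out)


clean = "how do I rotate my api key"
for seed in range(3):
    print(add_typos(clean, rate=0.15, seed=seed))
```

Running the same test set through the pipeline in clean and noisy form, and comparing metrics such as hit rate, shows how much accuracy the system gives up under imperfect input.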

These practices make RAG evaluation a reliable part of development, ensuring systems remain stable and trustworthy at scale. To explore techniques that can further improve pipeline performance, take a look at our article on advanced RAG techniques.

How long does it take to evaluate a RAG pipeline?

It depends on several variables. Smaller prototypes can be evaluated within hours, while production-scale pipelines can require days of rigorous evaluation.

The duration also depends on factors such as dataset chunk size, evaluation methods, and the number of metrics being tracked.

For example, using human annotators for quality checks is slower but provides better, more nuanced insights than automated benchmarks.

The complexity of the pipeline also affects the timeline.

Are there any papers on RAG evaluation?

Yes, there are several notable papers on RAG evaluation, and most of them can be found on arXiv. Here are some recent ones from 2024 and 2025:

- ‘Evaluation of Retrieval-Augmented Generation: A Survey’ (2024) by Hao Yu et al.

- ‘Evaluating Retrieval Quality in Retrieval-Augmented Generation’ (2024) by Alireza Salemi and Hamed Zamani

- ‘VERA: Validation and Evaluation of Retrieval-Augmented Systems’ (August 2024) by Tianyu Ding et al.

- ‘Unanswerability Evaluation for Retrieval-Augmented Generation’ (December 2024) by Xiangyu Peng et al.

- ‘Retrieval Augmented Generation Evaluation in the Era of Large Language Models’ (April 2025) by Aoran Gan et al.

- ‘The Viability of Crowdsourcing for RAG Evaluation’ (April 2025) by Lukas Gienapp et al.

These papers provide a rich foundation for anyone wanting to understand or benchmark RAG evaluation methods, metrics, and emerging best practices.

The future belongs to teams that master RAG evaluation

RAG evaluation is not just a technical exercise – it is the feedback loop that keeps RAG systems honest, reliable, and ultimately useful.

In practice, evaluation becomes the difference between a system that only looks impressive in demos and one that consistently delivers value in production.

As data sources shift, user expectations evolve, and generative models grow more complex, the ability to adapt evaluation strategies will separate cutting-edge teams from those left chasing errors downstream.