Retrieve and gain: Three RAG use-case patterns you can ship today

Building real RAG systems isn’t about flashy demos – it’s about shipping features that stay fast, accurate, and reliable as your product grows.

This is the third in our series on practical RAG with Meilisearch. In post #1, we explained how hybrid retrieval (lexical + semantic) boosts both recall and precision so your results are actually the right ones. In post #2, we walked through the full RAG pipeline: query refinement → retrieval → generation, and why keeping those pieces integrated keeps latency low and the system simple.

In this post, we focus on how teams actually ship. We’ll look at three patterns that show up over and over in real products: Retrieval, Answers, and Growth, and illustrate each with concrete use cases from teams using Meilisearch today.

(R)etrieval: making the search bar fast, precise, and dependable

Shoppers don’t want an essay about sneakers; they want the right product card with a clean 'Add to cart' button. In a commercial flow, generation is optional, but retrieval quality is everything. That’s the heart of the revenue pattern: make rank #1 correct, obvious, and fast, so the next click is a purchase rather than a string of revised queries.

Retrieve: Find results fast and accurately


You can see this play out at Bookshop.org, where better retrieval translated directly into more purchases from search: the share of searches leading to a purchase rose from 14% to 20%, a 43% relative lift. That’s the kind of metric you feel in the revenue graph, and it came from relevance, not more copy.
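The 43% figure is a relative lift, not a percentage-point change: (20 - 14) / 14 ≈ 0.43, while the absolute gain is 6 points. A minimal Python sketch of the two numbers worth tracking; the function names are hypothetical, and the counts you would pass to `conversion_rate` are assumed to come from your own search and order logs.

```python
def conversion_rate(searches: int, purchases: int) -> float:
    """Share of searches that ended in a purchase (0.0 when there were no searches)."""
    if searches == 0:
        return 0.0
    return purchases / searches


def relative_lift(before: float, after: float) -> float:
    """Relative change against the baseline rate: (after - before) / before."""
    return (after - before) / before


# Rates from the Bookshop.org example: 14% of searches converted before, 20% after.
lift = relative_lift(0.14, 0.20)
print(f"relative lift: {lift:.0%}")  # relative lift: 43%
```

Reporting the relative lift alongside the absolute rates keeps a 6-point gain from being misread as a 43-point one.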

Minipouce showcases the “great on day one” angle: solid defaults, typo tolerance, and intuitive facets produce results that feel right without weeks of tuning.
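Typo tolerance is what lets “snekers” still find sneakers. A minimal sketch, assuming plain Levenshtein distance and a length-based typo budget modeled on the `minWordSizeForTypos` defaults Meilisearch documents (one typo from 5 characters, two from 9). Meilisearch’s real matcher does more (prefix matching, ranking by typo count), so treat this as an illustration of the idea, not its implementation.

```python
def levenshtein(a: str, b: str) -> int:
    """Classic edit distance: insertions, deletions, and substitutions each cost 1."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # delete ca
                curr[j - 1] + 1,           # insert cb
                prev[j - 1] + (ca != cb),  # substitute (free if equal)
            ))
        prev = curr
    return prev[-1]


def allowed_typos(word: str, one_typo: int = 5, two_typos: int = 9) -> int:
    """Typo budget by query-word length; short words must match exactly."""
    if len(word) >= two_typos:
        return 2
    if len(word) >= one_typo:
        return 1
    return 0


def typo_match(query_word: str, indexed_word: str) -> bool:
    """True when the indexed word is within the query word's typo budget."""
    q, w = query_word.lower(), indexed_word.lower()
    return levenshtein(q, w) <= allowed_typos(q)


print(typo_match("snekers", "sneakers"))  # True: 7 letters, 1 edit allowed
print(typo_match("nik", "nike"))          # False: too short for any typo
```

Scaling the budget with word length is the design choice that keeps short queries precise while still forgiving slips in longer ones.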

Outside classic retail, Qogita (a B2B marketplace) and HitPay (fintech/commerce) show the same retrieval-first pattern on very different surfaces: fast to set up, easy to operate, and friendly to lean teams.

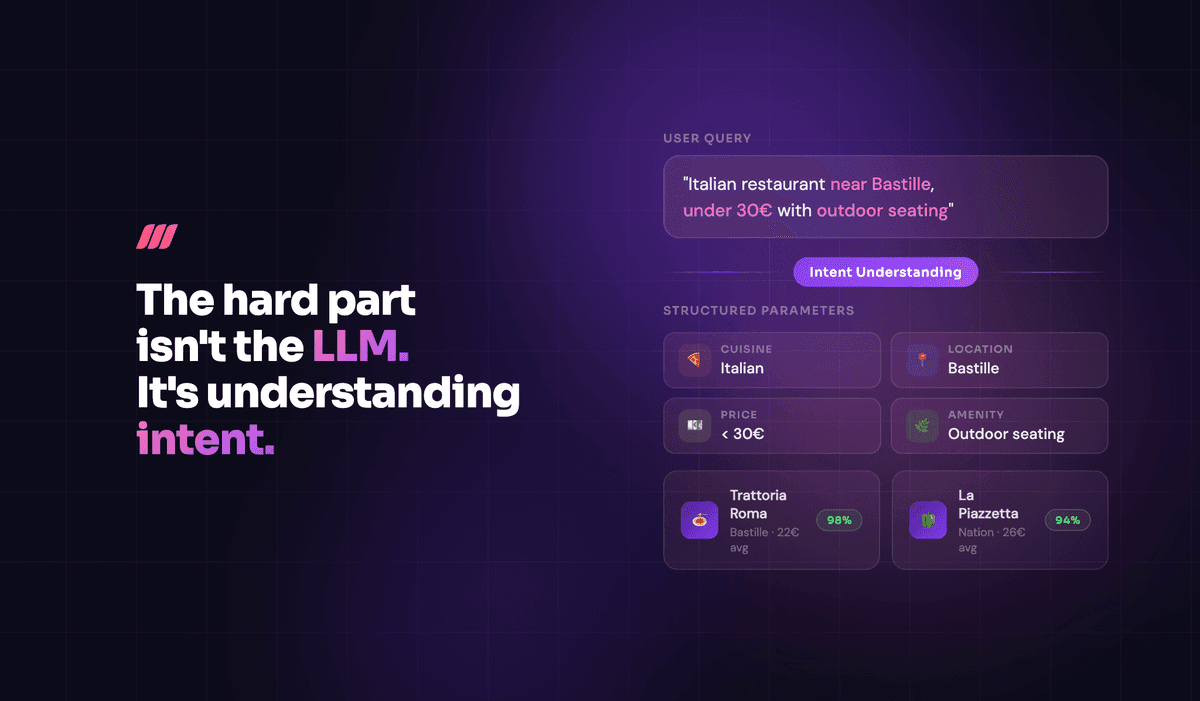

What makes this work is a short, disciplined loop: hybrid ranking to catch both exact matches (“Air Max 270 black 42”) and intent phrasing (“black Nike runners size 9”), facets that narrow results instantly, and a UI that keeps the purchase action front and center. Keep an eye on zero-results queries, measure search→purchase conversion, and treat synonyms and typos as first-class relevance features. When intent is commercial, R > G: retrieval first, retrieval last.
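To make “hybrid ranking” concrete, here is a generic score-blending sketch, not Meilisearch’s internal algorithm: each retriever’s scores are min-max normalized, then mixed with a `semantic_ratio` knob loosely analogous to Meilisearch’s `semanticRatio` search parameter. The document IDs and scores are made up for illustration.

```python
from typing import Dict, List


def normalize(scores: Dict[str, float]) -> Dict[str, float]:
    """Min-max normalize to [0, 1] so keyword and vector scores are comparable."""
    if not scores:
        return {}
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        return {doc: 1.0 for doc in scores}
    return {doc: (s - lo) / (hi - lo) for doc, s in scores.items()}


def hybrid_rank(lexical: Dict[str, float], semantic: Dict[str, float],
                semantic_ratio: float = 0.5) -> List[str]:
    """Blend both score sets: 0.0 is pure keyword, 1.0 is pure semantic."""
    lex, sem = normalize(lexical), normalize(semantic)
    docs = set(lex) | set(sem)
    # A document missing from one retriever simply scores 0 on that side.
    blended = {
        doc: (1 - semantic_ratio) * lex.get(doc, 0.0) + semantic_ratio * sem.get(doc, 0.0)
        for doc in docs
    }
    # Highest blended score first; ties broken by doc ID for a stable order.
    return sorted(docs, key=lambda d: (-blended[d], d))


lexical = {"air-max-270-black": 12.0, "air-max-90-white": 7.0}  # exact-token hits
semantic = {"pegasus-black": 0.82, "air-max-270-black": 0.80, "air-max-90-white": 0.40}
print(hybrid_rank(lexical, semantic, 0.5))
# ['air-max-270-black', 'pegasus-black', 'air-max-90-white']
```

The exact match wins because it scores well on both sides, while the keyword-invisible “pegasus-black” still surfaces thanks to the semantic half.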

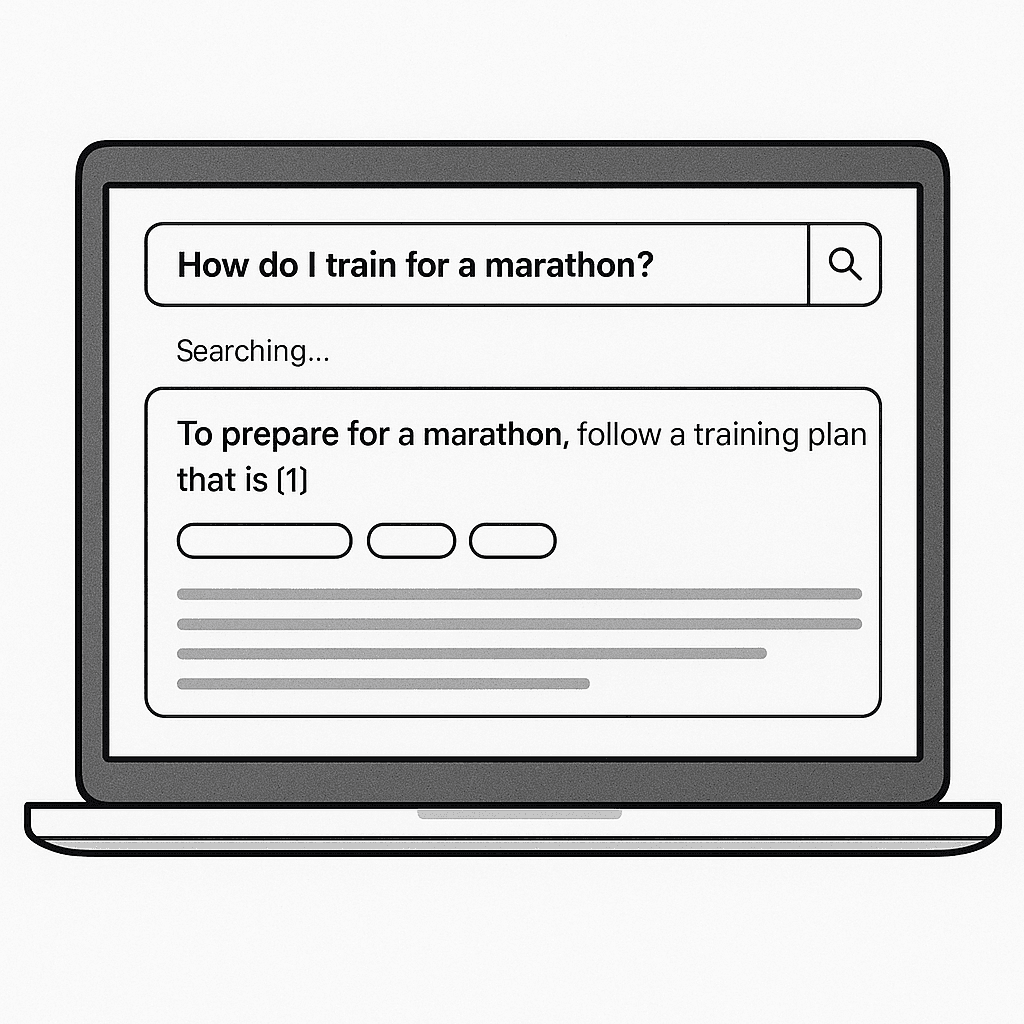

(A)nswers (retrieval + generation): one reliable answer beats ten links

Internal questions are scavenger hunts across policies, procedures, docs, tickets, and code. Your teammate doesn’t want ten blue links; they want a short, sourced answer they can act on. That’s where retrieval + generation shines: retrieval gathers the right building blocks, and generation assembles them into a concise, trustworthy answer.

Answer: Produce accurate answers by synthesizing the retrieved results.


In practice, Louis Vuitton is a clear example: store associates get consistent, fast answers to policy and product questions across locations. The value isn’t that an LLM can chat – it’s that retrieval surfaces current, canonical guidance, and generation condenses it into steps a person can follow.

For developer productivity, Symfony shows what low-latency, well-scoped search looks like at scale: sub-10 ms responses over 85,000+ docs across 30 versions help engineers land on the exact class, command, or procedure they need. At OCTO Technology, the same approach operates as a dependable, production-ready solution in client environments where reliability and maintainability are crucial.

The craft is in the details: make retrieval source-aware (favor canonical and current pages, down-rank duplicates and stale ones), retrieve snippets instead of whole pages so the model stays on a short leash, and use a prompt that’s opinionated about behavior: synthesize briefly, cite every claim, include steps or a code block when useful, and say “no evidence found” instead of guessing. A tiny feedback loop (“useful / missing / outdated”) helps you fix the source, not just the surface.
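A minimal sketch of those details, under stated assumptions: the `Snippet` fields, the 1.5× canonical boost, the 0.5× stale penalty, and the one-year staleness cutoff are all hypothetical values, and duplicates are detected by normalized snippet text. It only builds the prompt; which LLM you send it to is left open.

```python
from dataclasses import dataclass
from datetime import date
from typing import List


@dataclass
class Snippet:
    doc_id: str      # source document the snippet was cut from
    text: str        # a short passage, not the whole page
    score: float     # retrieval relevance, higher is better
    canonical: bool  # official source vs. a copy, forum post, etc.
    updated: date    # last time the source changed


def source_aware(snippets: List[Snippet], today: date, k: int = 3,
                 max_age_days: int = 365) -> List[Snippet]:
    """Boost canonical sources, down-rank stale ones, and drop duplicate text."""
    def weight(s: Snippet) -> float:
        w = s.score
        if s.canonical:
            w *= 1.5  # hypothetical boost for canonical sources
        if (today - s.updated).days > max_age_days:
            w *= 0.5  # hypothetical penalty for stale pages
        return w

    seen, picked = set(), []
    for s in sorted(snippets, key=weight, reverse=True):
        key = " ".join(s.text.lower().split())  # whitespace/case-insensitive text
        if key not in seen:  # keep only the best-weighted copy of each passage
            seen.add(key)
            picked.append(s)
    return picked[:k]


def build_prompt(question: str, snippets: List[Snippet]) -> str:
    """Opinionated prompt: brief, cited, and allowed to say it found nothing."""
    sources = "\n".join(
        f"[{i}] ({s.doc_id}) {s.text}" for i, s in enumerate(snippets, start=1)
    ) or "(none)"
    return (
        "Answer briefly using only the sources below.\n"
        "Cite every claim with its source number, e.g. [1].\n"
        "Include numbered steps or a code block when useful.\n"
        'If the sources do not answer the question, reply exactly "no evidence found".\n\n'
        f"Sources:\n{sources}\n\nQuestion: {question}"
    )


snips = [
    Snippet("returns-policy", "Refunds within 30 days with a receipt.", 0.70, True, date(2024, 5, 1)),
    Snippet("returns-policy-copy", "Refunds within 30 days with a receipt.", 0.72, False, date(2024, 5, 1)),
    Snippet("old-faq", "Refunds within 14 days.", 0.90, False, date(2021, 1, 1)),
    Snippet("gift-cards", "Gift cards are non-refundable.", 0.60, True, date(2024, 3, 1)),
]
picked = source_aware(snips, today=date(2024, 6, 1))
print([s.doc_id for s in picked])  # ['returns-policy', 'gift-cards', 'old-faq']
print(build_prompt("What is the refund window?", picked))
```

Without the boost and penalty, the stale FAQ and the non-canonical copy would outrank the current policy page; with them, the current canonical answer leads and its duplicate is dropped.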

(G)rowth (infrastructure): scale you don’t have to think about

Most RAG stories focus on search and answers. That’s great, until the demo grows up. The catalog doubles, traffic spikes on a Tuesday, someone adds three new locales, and suddenly your tidy prototype is juggling a keyword engine, a vector store, and a re-ranker stitched together with custom middleware. Queries get slow, dashboards get noisy, and your on-call starts carrying two laptops.

Grow: Scale effortlessly as the data and customer base grow


Some things are meant to be boring, and scaling should be one of them. Instead of an ever-changing stack where you tweak three services every time the user base grows, Meilisearch offers one hybrid path that stays fast as you add data, users, and languages. One engine and one request handle both lexical precision and semantic recall. No service hopping, fewer moving parts, and fewer surprises. As the client, you focus on content and UX, and we focus on the plumbing.
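Here is what “one request” can look like against Meilisearch’s search endpoint (`POST /indexes/{index_uid}/search`) with the `hybrid` parameter. This is a sketch using only the Python standard library: the instance URL, the `products` index, the `YOUR_SEARCH_KEY` placeholder, the embedder name `default`, and the `brand`/`color`/`size` attributes are all hypothetical, and it assumes an embedder is configured on the index and those attributes are declared filterable. Check the parameters against the docs for your Meilisearch version.

```python
import json
import urllib.request
from typing import List, Optional


def hybrid_search_request(base_url: str, index_uid: str, api_key: str, query: str,
                          semantic_ratio: float = 0.5, embedder: str = "default",
                          filter_expr: Optional[str] = None,
                          facets: Optional[List[str]] = None,
                          limit: int = 10) -> urllib.request.Request:
    """Build a single search request that asks for hybrid (keyword + semantic) ranking."""
    body = {
        "q": query,
        # 0.0 leans fully on keyword ranking, 1.0 fully on semantic ranking.
        "hybrid": {"semanticRatio": semantic_ratio, "embedder": embedder},
        "limit": limit,
    }
    if filter_expr:
        body["filter"] = filter_expr  # attributes must be filterable in index settings
    if facets:
        body["facets"] = facets       # asks for facet counts alongside the hits
    return urllib.request.Request(
        url=f"{base_url}/indexes/{index_uid}/search",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Authorization": f"Bearer {api_key}"},
        method="POST",
    )


req = hybrid_search_request(
    "http://localhost:7700", "products", "YOUR_SEARCH_KEY",
    "black Nike runners size 9",
    filter_expr='brand = "Nike"',
    facets=["color", "size"],
)
# Sending it requires a running instance:
# hits = json.load(urllib.request.urlopen(req))["hits"]
```

Keyword precision, semantic recall, filters, and facet counts all travel in one round trip, which is the “no service hopping” point in practice.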

That’s the experience we aim for with Meilisearch. Index growth feels like adding shelves, not rebuilding the warehouse. Versioned indexes and safe schema changes turn “we need a new attribute” into a routine deployment. Language-aware analyzers, combined with embeddings, handle phrasing differences without a meeting. Monitoring covers what actually matters, latency and quality, so you can spot recall/precision drift before users do. And vectors don’t have to eat your budget: compact embeddings, ANN indexes, and storing only what moves relevance keep costs sensible.
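Two small helpers make the monitoring and cost points concrete. This is a sketch under assumptions: the golden set, the stand-in search function, the 0.9 baseline, the 0.05 tolerance, and the 1536- vs 384-dimension comparison are all hypothetical, and the byte estimate covers raw float32 vectors only, not ANN index overhead.

```python
from typing import Callable, Dict, List, Set


def recall_at_k(results: List[str], relevant: Set[str], k: int = 10) -> float:
    """Fraction of the known-relevant documents that appear in the top k results."""
    if not relevant:
        return 0.0
    return len(set(results[:k]) & relevant) / len(relevant)


def mean_recall(golden: Dict[str, Set[str]], search: Callable[[str], List[str]],
                k: int = 10) -> float:
    """Average recall@k over a hand-labeled golden set of queries."""
    scores = [recall_at_k(search(query), relevant, k) for query, relevant in golden.items()]
    return sum(scores) / len(scores) if scores else 0.0


def has_drifted(current: float, baseline: float, tolerance: float = 0.05) -> bool:
    """Flag drift when quality falls more than `tolerance` below the baseline."""
    return current < baseline - tolerance


def raw_vector_bytes(n_vectors: int, dims: int, bytes_per_dim: int = 4) -> int:
    """Raw embedding payload only (float32 by default); ANN index overhead is extra."""
    return n_vectors * dims * bytes_per_dim


# Hypothetical golden set and a stand-in search function for illustration.
golden = {"black nike runners": {"air-max-270-black", "pegasus-black"}}


def fake_search(query: str) -> List[str]:
    return ["air-max-270-black", "air-max-90-white"]


current = mean_recall(golden, fake_search, k=10)
print(current, has_drifted(current, baseline=0.9))  # 0.5 True

# One million items: 1536-dim vs 384-dim float32 embeddings.
print(raw_vector_bytes(1_000_000, 1536), raw_vector_bytes(1_000_000, 384))
# 6144000000 1536000000
```

Running the golden-set check on a schedule turns “spot drift before users do” into an alert, and the byte estimate shows why a 4× smaller embedding is a 4× smaller vector bill before any index overhead.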

You can see this pattern in the wild. CarbonGraph moved from a standalone vector setup and found that adding embeddings inside a hybrid engine was straightforward, with no extra choreography required.

Hugging Face is the stress test: discovery keeps working across 2.2M model cards, 500k datasets, and 60k demos without turning every query into a science project.

And for “hard-mode” content, TutKit serves 15,000+ items in 26 languages, while Bildhistoria keeps 90,000+ richly annotated historical images findable and fast. Different shapes of data, the same quiet promise: it scales, and you barely notice.

Ready, Aim, Go!

RAG isn’t one architecture; it’s a set of patterns you can plug in and ship. With Meilisearch, you get three practical pillars to build on: Retrieve, Answer, and Grow. Retrieve for quick discovery. Answer for concise, sourced guidance. Grow for speed and stability at scale. Start where you are, keep one path, and keep iterating.