RAG vs. long-context LLMs: A side-by-side comparison

Compare the key differences between RAG and long-context LLMs. See which approach best suits your needs, where to apply them, and more.

Large language models (LLMs) are becoming better at handling larger context windows. But does that mean retrieval-augmented generation (RAG) is obsolete? Not quite.

Here’s a quick look at what this guide covers:

- How RAG delivers grounded and user-friendly responses through retrieval and generation.

- The hype surrounding long-context LLMs like Gemini 1.5 Pro or GPT-4.1, which can process hundreds of thousands of tokens or more in a single prompt.

- The key differences between RAG and long-context LLMs.

- When to use RAG and long-context LLMs, and what makes one better than the other in different situations.

- A look at the pros and cons of each, and whether there is a need to use both together.

- How both RAG and long-context LLMs perform in real-world use cases, from AI agents to enterprise knowledge bases.

Let’s find out which approach gives you better, more grounded responses while incurring minimal costs.

What is retrieval-augmented generation (RAG)?

Retrieval-augmented generation connects information retrieval with LLMs to generate accurate responses. How? By searching external knowledge bases or vector databases for relevant documents.

RAG passes the retrieved chunks of external data to the LLM as context, which helps it produce the response the user is looking for.

As a result, RAG reduces the likelihood of AI hallucinations. It’s used in AI agents and real-time workflows that depend on external data.
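
The retrieve-then-generate flow described above can be sketched in a few lines. This is a minimal illustration, not a production pipeline: the `embed` function is a toy bag-of-words stand-in for a real embedding model, and the function names, sample chunks, and prompt wording are all assumptions made for the example.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def embed(text):
    """Toy 'embedding': a bag-of-words count vector (stand-in for a real model)."""
    return Counter(tokenize(text))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query, chunks, k=2):
    """Return the k chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:k]


def build_prompt(query, retrieved):
    """Number each retrieved chunk so an answer can point back to its source."""
    context = "\n".join(f"[{i + 1}] {c}" for i, c in enumerate(retrieved))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


# Hypothetical knowledge base, already split into chunks.
chunks = [
    "Refunds are processed within 5 business days.",
    "Our office is closed on public holidays.",
    "Shipping is free for orders over 50 dollars.",
]
question = "How long do refunds take?"
top = retrieve(question, chunks, k=1)
prompt = build_prompt(question, top)  # this string is what gets sent to the LLM
```

Only the best-matching chunk reaches the model, which is what keeps the prompt short and the answer traceable to a numbered source.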

Next, let’s take a look at how long-context LLMs handle larger context windows without retrieval.

What is a long-context LLM?

A long-context LLM, as the name suggests, is a large language model specifically designed to handle a much larger context window size than the standard models. The difference is quite staggering, as it can sometimes process even millions of tokens without relying on retrieval.

Modern examples include GPT-4.1, Gemini 2.5 Pro, Claude Opus 4, and Llama 4 Maverick. They can read entire reports, research papers, or knowledge bases at once, making them ideal for summarization, deep reasoning tasks, and extended document analysis.

Next, let’s compare how these long-context models differ from RAG.

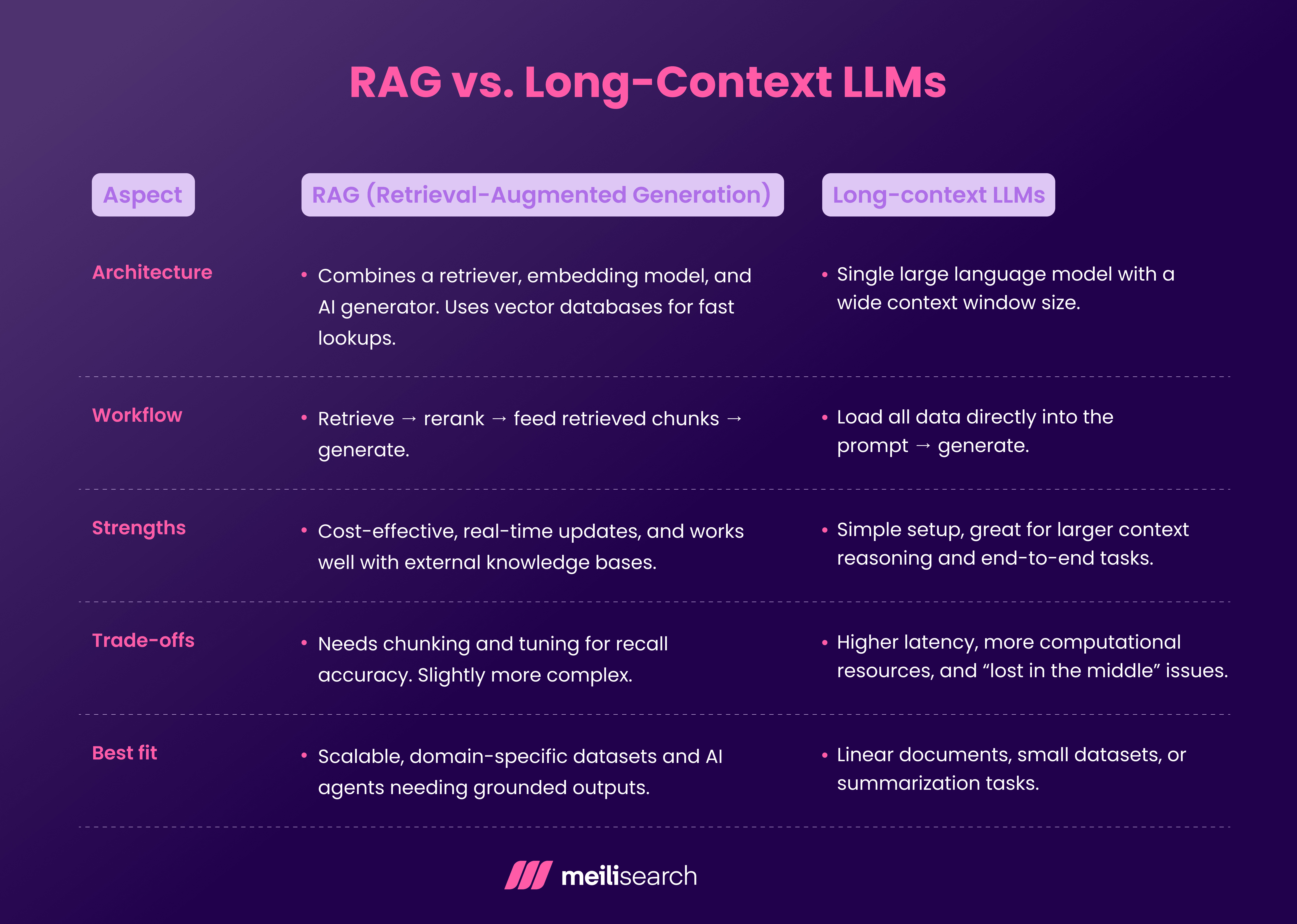

How does RAG differ from long-context LLMs?

The basic difference you need to understand between RAG and long-context LLMs is that they handle context differently.

RAG retrieves relevant information from external sources before generation. Long-context LLMs, on the other hand, process all input within a single, long-context window.

While accuracy and scalability are goals for both approaches, the methods used to achieve them differ.

If you’re exploring different architectures, our post on RAG types breaks down each variant with examples.

Now, let’s see when using one of these is better than the other.

When should you use RAG over long-context LLMs?

Retrieval-augmented generation is the perfect match when your app relies on external knowledge or dynamic datasets. If you need real-time retrieval and scalability, you can’t go wrong with RAG.

RAG handles changing knowledge bases and keeps latency predictable, minimizing the required number of tokens per query.

It’s cost-effective and enables domain-specific optimization as well as granular access control for transparent audit trails.

When are long-context LLMs a better choice?

The best time to use long-context LLMs is when your data fits inside a single large context window and doesn’t change often. They handle summarization, reasoning, technical reports, and transcript analysis well.

The newer models, such as GPT-4.1, Gemini 2.5 Pro, Claude Opus 4, and Llama 4 Maverick, push context window sizes into the hundreds of thousands of tokens, and in some cases to a million or more, which lets them handle complex cross-document reasoning. When the whole dataset fits in the window, these models can process it directly, removing the need for a retrieval pipeline.

As amazing as that sounds, it comes with a significant computational cost. The latency also goes up for larger inputs.

In short, for static knowledge bases, more extended context exploration, or quick experimentation, long-context LLMs are often the more practical path.
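
A quick way to check the "fits inside a single context window" condition is to estimate the token count of your documents. The sketch below assumes roughly four characters per token, which is only a rule of thumb for English text; a real check would use the tokenizer of the model you plan to call. The function names and the reserved output budget are illustrative assumptions.

```python
import math


def estimate_tokens(text, chars_per_token=4.0):
    """Rough token estimate (assumption: about 4 characters per token)."""
    return math.ceil(len(text) / chars_per_token)


def fits_in_window(documents, window_tokens, reserved_for_output=4_000):
    """Return (fits, total_tokens) for a list of document strings.

    Part of the window is reserved for the model's answer, so the
    documents must fit into what is left.
    """
    total = sum(estimate_tokens(d) for d in documents)
    budget = window_tokens - reserved_for_output
    return total <= budget, total


# Two placeholder documents of 400,000 and 40,000 characters.
docs = ["a" * 400_000, "b" * 40_000]
fits_large, total = fits_in_window(docs, window_tokens=128_000)
fits_small, _ = fits_in_window(docs, window_tokens=100_000)
```

If the estimate lands close to the limit, treat the result as "does not fit" until you have measured it with the actual tokenizer.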

What are the main advantages of RAG?

RAG gives you all the control and flexibility you need to build faster and minimize costs when developing your system.

Let’s see what makes RAG special:

- Efficient use of resources: By retrieving only relevant documents, RAG keeps the number of tokens small, reduces costs, and speeds up each query.

- Speed: Thanks to vector databases and embedding models, the retrieval step is fast, and the short prompts it produces keep generation quick.

- Flexibility and adaptability: RAG easily integrates with various knowledge bases, requiring no fine-tuning.

- Full transparency: Every answer can point back to its retrieved chunks, so it can easily be traced while maintaining user trust.

Want to see how teams optimize performance? Check out our official guide on RAG tools.

What are the main advantages of long-context LLMs?

Long-context LLMs are by far the best option when you need the system to handle large inputs while retaining the context.

Let’s take a deeper look at their pros:

- Thorough understanding: These models process entire datasets or documents in a single pass, ensuring minimal fragmentation and preserving the meaning.

- Exhaustive reasoning: Long-context LLMs shine in reasoning tasks and can connect ideas spread across long sequences for stronger logical flow.

- Simplicity: There’s no need for external RAG pipelines or vector databases. Just input your text and generate.

Now, it’s time to see the limitations of both RAG and long-context LLMs.

What are the limitations of RAG?

RAG is the go-to option for accuracy and control, but that doesn’t mean it comes without challenges. Let’s look at some below:

- Retrieval is not 100% accurate: You may sometimes receive poor output quality due to inadequate chunking and embedding models.

- Costly maintenance: Keeping indexes fresh requires ongoing updates to datasets and managing data pipelines. None of this is free or easy.

- Prompt engineering skill required: RAG depends on well-structured prompts to merge retrieved chunks without confusing the LLM.

- Added latency: Each retrieval step adds milliseconds to the response time. At scale, those milliseconds add up to a significant amount of latency.

These factors make RAG more complex to deploy and maintain than long-context LLMs, which can make it a harder fit for small teams.
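
Chunking, named in the first limitation above, is one of the easier pieces to reason about in code. The sketch below splits text into fixed-size word windows with overlap, so a sentence cut at a boundary still appears whole in a neighboring chunk. It is a simplified illustration: real pipelines often chunk by tokens, sentences, or document structure, and the sizes used here are arbitrary assumptions.

```python
def chunk_words(text, chunk_size=50, overlap=10):
    """Split text into chunks of chunk_size words, each sharing
    `overlap` words with the previous chunk."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")

    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last window already reached the end of the text
    return chunks


# Twelve numbered "words" make the overlap easy to see.
sample = " ".join(str(i) for i in range(12))
pieces = chunk_words(sample, chunk_size=5, overlap=2)
```

Larger overlap reduces the chance of splitting a relevant passage, at the cost of a bigger index and more duplicated text in the retrieved results.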

What are the limitations of long-context LLMs?

Let’s now explore the key drawbacks to long-context LLMs:

- Excessive resource use: When you want to process millions of tokens, you need more computational resources.

- Scaling isn’t easy: It’s not straightforward to scale long-context LLMs, as latency and response times increase with added context length.

- Lost context: You can lose important details, especially in extremely long inputs or mixed datasets.

- Data security: The larger the prompt, the greater the risk of prompt injection, which can lead to data leakage and compromise enterprise security.

Even advanced models face these issues when pushed to their context window size limits.

Next, we’ll examine how both methods perform using real-world benchmarks.

How do RAG and long-context LLMs perform on benchmarks?

Several recent studies offer nuanced insights into how each approach performs.

Let’s look at some of the most recent papers that discuss this in detail.

‘Retrieval Augmented Generation or Long-Context LLMs? A Comprehensive Study and Hybrid Approach’ (Li et al., 2024) found that long-context LLMs consistently outperformed RAG when ample resources were available, but RAG was significantly more cost-efficient.

Another study, ‘LaRA: Benchmarking Retrieval-Augmented Generation and Long-Context LLMs – No Silver Bullet for LC or RAG Routing’ (Li et al., 2025), observed that there was no one-size-fits-all solution. The choice ultimately depends on the model size, task type, and other factors surrounding context length and retrieval quality.

Long-context models shine for long, static documents. On the other hand, RAG performs better when datasets are dynamic or diverse.

How do RAG and long-context LLMs impact costs?

RAG generally offers lower running costs, while long-context LLMs incur higher token and infrastructure costs at scale:

- Setup costs: With RAG, the spend goes into indexing, embedding, and retrieval. For long-context LLMs, it goes into a premium API or GPUs that support large context windows.

- Inference costs: The inference cost for RAG is much lower since it only sends relevant chunks to the LLM, which minimizes token usage. Long-context LLMs that process 200k+ tokens per request pay for every one of those tokens on every call, so the per-request cost can be many times higher, depending on the provider’s pricing.

- Scaling and maintenance costs: RAG pipelines require ongoing index upkeep and retrieval tuning, but base LLM token consumption stays low. Long-context solutions scale poorly with more users or larger documents because each call incurs increased costs and latency.

- Operational charges to consider: Enterprises with strict cost or latency targets often choose RAG since it better suits their needs. For long-context LLMs, hidden costs like GPU usage, memory capacity, and slower responses tend to surface only once the system is under load.
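
The inference-cost gap is simple arithmetic, sketched below. The price per million input tokens, the request volume, and the prompt sizes are placeholder assumptions chosen for illustration, not figures from any provider. The calculation also ignores output tokens and the cost of running the retrieval infrastructure.

```python
def input_cost_usd(tokens_per_request, requests, price_per_million_tokens):
    """Input-token cost in USD for a batch of requests."""
    return tokens_per_request * requests * price_per_million_tokens / 1_000_000


# Hypothetical numbers: substitute your provider's pricing and your own traffic.
PRICE_PER_MILLION = 2.00   # USD per 1M input tokens (placeholder)
REQUESTS = 10_000          # requests per month (placeholder)

long_context_cost = input_cost_usd(200_000, REQUESTS, PRICE_PER_MILLION)
rag_cost = input_cost_usd(4_000, REQUESTS, PRICE_PER_MILLION)
ratio = long_context_cost / rag_cost
```

Under these assumptions the long-context setup spends 50 times more on input tokens, because it sends 50 times more tokens per request. The ratio depends only on the prompt sizes, not on the price.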

What are real-world use cases for RAG?

RAG is ideal for domains that deal with rapidly changing contexts. These include:

- Enterprise knowledge systems: Companies use RAG to consolidate all their internal data from wikis, emails, and CRMs into a unified knowledge base. AI assistants can then use this knowledge base to deliver relevant answers.

- Intelligent search engines: RAG serves as the backbone for intelligent search engines. Thanks to vector databases and hybrid retrieval, manual lookup time can be significantly reduced.

- Dynamic document management: In fast-moving domains such as finance or healthcare, RAG enables real-time updates without requiring retraining of the AI model. This way, the output always reflects the latest updates.

What are real-world use cases for long-context LLMs?

Long-context LLMs excel at handling tasks that require large inputs:

- Summarization and analysis: Models can process entire reports, transcripts, or books and return what you need from them, whether that is a summary, extracted details, or answers to questions that require reasoning.

- Legal and compliance review: Long-context LLMs are a go-to choice for enterprises that need to review legal contracts in their entirety. They also help in flagging inaccurate info and comparing clauses without losing context.

- Academic and technical research: Long-context systems can explore long datasets, scientific papers, or arXiv collections to identify relevant information and trends across different fields.

It’s now time to explain why LLMs’ growing capabilities are starting to challenge traditional RAG pipelines.

Why are long-context LLMs challenging traditional RAG pipelines?

All these recent advances in context window size are reshaping how developers think about retrieval.

Models that can handle over a million tokens reduce reliance on RAG pipelines and vector databases. For smaller or static datasets, long-context LLMs provide faster and simpler results without requiring any retrieval infrastructure.

However, RAG remains undefeated when it comes to dynamic or real-time data retrieval, offering both data freshness and scalability.

Let’s see how to choose between these two approaches effectively.

How to choose between RAG and long-context LLMs?

Choosing between RAG and long-context LLMs depends on your data volume, update frequency, and performance goals. Each approach can meet a distinct need:

- Project scope: If the project is small or static, such as a policy chat or document summary, long-context models are much easier to deploy. For larger projects that draw on live, continually growing data, RAG is the better fit.

- Data type and freshness: If your system relies on constantly changing or real-time content (such as support tickets, logs, or news), RAG ensures the results remain current.

- Scalability and cost: RAG minimizes token usage, so it’s more cost-effective for enterprise-scale workflows. Long-context LLMs consume more tokens as the context length increases, which raises latency and compute requirements.

- Compliance and control: When there can be no compromise with audit trails and explainability, such as in healthcare or finance, RAG’s indexed architecture provides audit-ready outputs.

In short, RAG suits high-volume, dynamic ecosystems, while long-context LLMs excel in reasoning-intensive domains.
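
The criteria above can be turned into a rough first-pass check. The sketch below is a heuristic under stated assumptions, not a decision rule from any study: the `Workload` fields, the default window size, and the update threshold are all hypothetical and should be tuned to your own system.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    corpus_tokens: int        # estimated size of the data the model must see
    updates_per_day: float    # how often the underlying data changes
    needs_audit_trail: bool   # whether answers must be traceable to sources


def suggest_approach(w, window_tokens=1_000_000, max_updates_per_day=1.0):
    """Return 'rag' or 'long-context' based on simple, hypothetical thresholds."""
    if w.needs_audit_trail:
        return "rag"           # compliance and control
    if w.corpus_tokens > window_tokens:
        return "rag"           # data does not fit in one window
    if w.updates_per_day > max_updates_per_day:
        return "rag"           # data type and freshness
    return "long-context"      # small, static, no audit requirement


policy_bot = Workload(corpus_tokens=80_000, updates_per_day=0.1, needs_audit_trail=False)
support_desk = Workload(corpus_tokens=5_000_000, updates_per_day=500, needs_audit_trail=False)
clinic_records = Workload(corpus_tokens=50_000, updates_per_day=0.0, needs_audit_trail=True)

choices = [suggest_approach(w) for w in (policy_bot, support_desk, clinic_records)]
```

A check like this is a starting point for discussion; cost and latency measurements on real queries should make the final call.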

How does retrieval work in RAG vs. long-context LLMs?

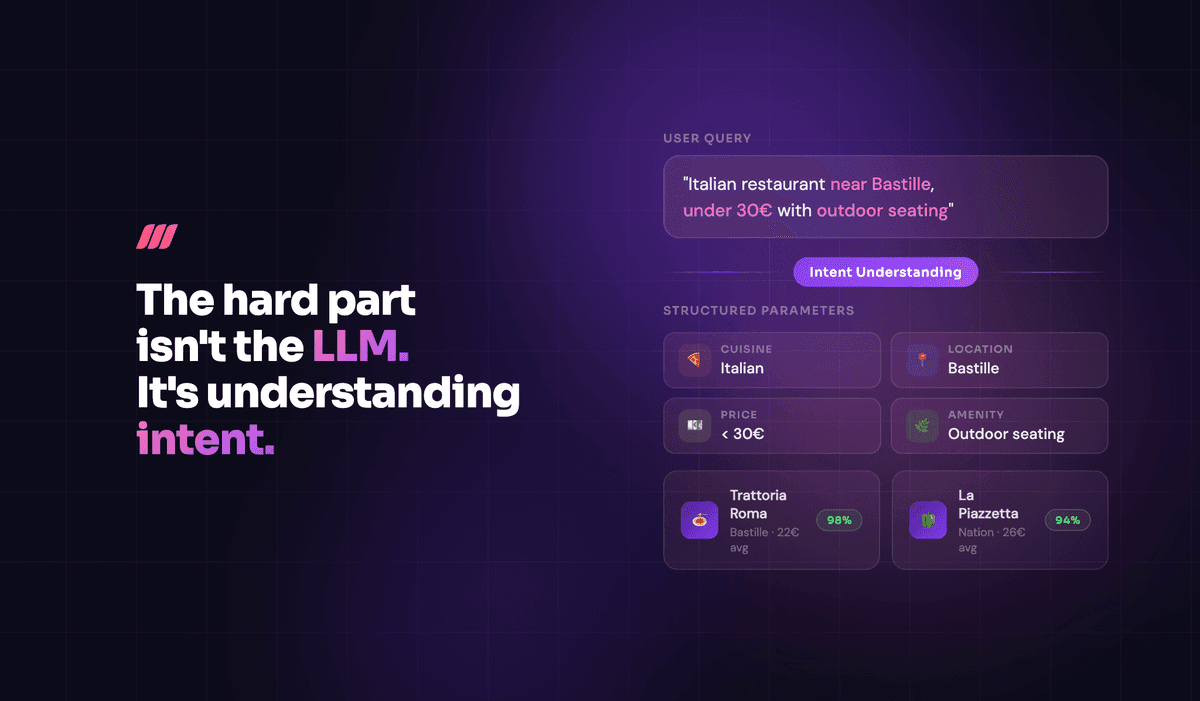

In RAG, retrieval happens before the model generates a reply. A retriever searches your knowledge base to find the most relevant documents, then passes the retrieved information to the large language model for response generation.

Tools like Meilisearch make this step even faster and production-ready. Meilisearch indexes content and performs hybrid retrieval (keyword + vector) with built-in filters, scoring, and reranking. This gives grounded and citable snippets that aren’t just accurate but also easy to track. It’s transparent and quick to update or scale through Meilisearch’s simple /chat API.
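
To make "hybrid retrieval (keyword + vector)" concrete, the sketch below merges a keyword ranking and a vector ranking with reciprocal rank fusion (RRF), a common generic technique for combining ranked lists. It is not a description of how Meilisearch computes its scores internally; the document IDs and the two input rankings are made up for the example.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of document IDs into one ranking.

    Each document scores 1 / (k + rank) in every list it appears in;
    k = 60 is a commonly used default. Ties are broken by document ID
    so the output is deterministic.
    """
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=lambda d: (-scores[d], d))


# Hypothetical results from a keyword search and a vector search.
keyword_ranking = ["doc_a", "doc_b", "doc_c"]
vector_ranking = ["doc_b", "doc_d", "doc_a"]

fused = reciprocal_rank_fusion([keyword_ranking, vector_ranking])
```

Documents that rank well in both lists rise to the top, which is why hybrid retrieval tends to be more robust than either signal alone.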

Long-context LLMs have no retrieval step at all. They rely entirely on what’s packed inside the prompt.

While this works for short, static data, it becomes costly as prompts grow, and the content goes stale unless someone rebuilds the prompt. Additionally, there’s no automatic refresh or audit trail, so it is much harder to trace where a given response came from.

Can RAG and long-context LLMs be combined?

Yes, and it’s often the best of both worlds. Hybrid setups use RAG to fetch relevant documents from a knowledge base, then feed them into a long-context LLM, which reasons across the combined input.

This hybrid approach strikes the perfect balance between recall and reasoning. RAG ensures the prompts are lean and cost-effective, while the long-context LLMs handle the analysis and reasoning the user requires.
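
One way to picture the hybrid setup is as a packing step: take the retriever's ranked documents and add whole documents to the prompt until a token budget is reached. The sketch below is an illustrative assumption about how such a step could look; the function names are hypothetical, and the word count stands in for a real tokenizer.

```python
def count_words(text):
    """Stand-in for a tokenizer: counts whitespace-separated words."""
    return len(text.split())


def pack_context(ranked_docs, token_budget, count_tokens=count_words):
    """Greedily select documents in rank order without exceeding the budget.

    A document that does not fit is skipped, and smaller lower-ranked
    documents can still be added afterwards.
    """
    selected, used = [], 0
    for doc in ranked_docs:
        cost = count_tokens(doc)
        if used + cost > token_budget:
            continue
        selected.append(doc)
        used += cost
    return selected, used


# Hypothetical retriever output, best match first.
ranked = ["a b c", "d e f g h", "i j"]
selected, used = pack_context(ranked, token_budget=5)
```

With a long-context model, the budget can be large enough to pass whole documents instead of small chunks, while retrieval still keeps irrelevant material out of the prompt.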

Meilisearch handles the retrieval layer in such systems: indexing, filtering, and returning grounded, high-quality snippets before the model processes them.

If you’re into experimenting with model correction or hybrid routing, explore our guide on corrective RAG for practical strategies.

Bringing it together: choosing between RAG and long-context LLMs

Both RAG and long-context LLMs push the limits of how large language models use data, but their goals differ.

RAG is all about accuracy and efficiency, while long-context LLMs excel in reasoning and retaining context.

The best foundation you can build for any system is to combine both of them.