Mastering RAG: unleashing precision and recall with Meilisearch's hybrid search

Learn how to enhance LLM accuracy using Retrieval Augmented Generation (RAG) with Meilisearch's hybrid search capabilities. Reduce hallucinations and improve search relevance.

We've all marveled at the capabilities of large language models (LLMs) like ChatGPT, Gemini, or DeepSeek, witnessing their ability to generate coherent and often insightful text. However, alongside this power, we've also encountered their limitations, particularly their tendency to "hallucinate" or generate incorrect information. This happens because they aren't designed to store facts: they're trained to predict the next word based on patterns in vast amounts of text, relying on a kind of "muscle memory" rather than a factual knowledge base.

The problem of hallucination and limited context

Well-documented cases show why this problem needs to be addressed. In one real-world example, lawyers who relied solely on an LLM presented fabricated case citations in court, only to find out that the information the LLM had given them was false. This highlights the critical need for LLMs to access and accurately cite external, reliable information.

The recurring question then becomes: how do we leverage the power of LLMs with our own domain-specific or business-specific data?

One approach to connect LLMs with specific data is to provide them with context directly within the prompt. This is a good approach, although it can only go so far. While models like Google's Gemini are increasingly capable of handling larger contexts, there's a limit to how much information an LLM can effectively process and utilize within a single prompt.

Another method is fine-tuning, where you further train an LLM on your specific dataset. This is quite useful too, but unfortunately, fine-tuning is resource-intensive, requiring significant time and data, making it impractical for frequently updated information.

The solution: retrieval-augmented generation (RAG)

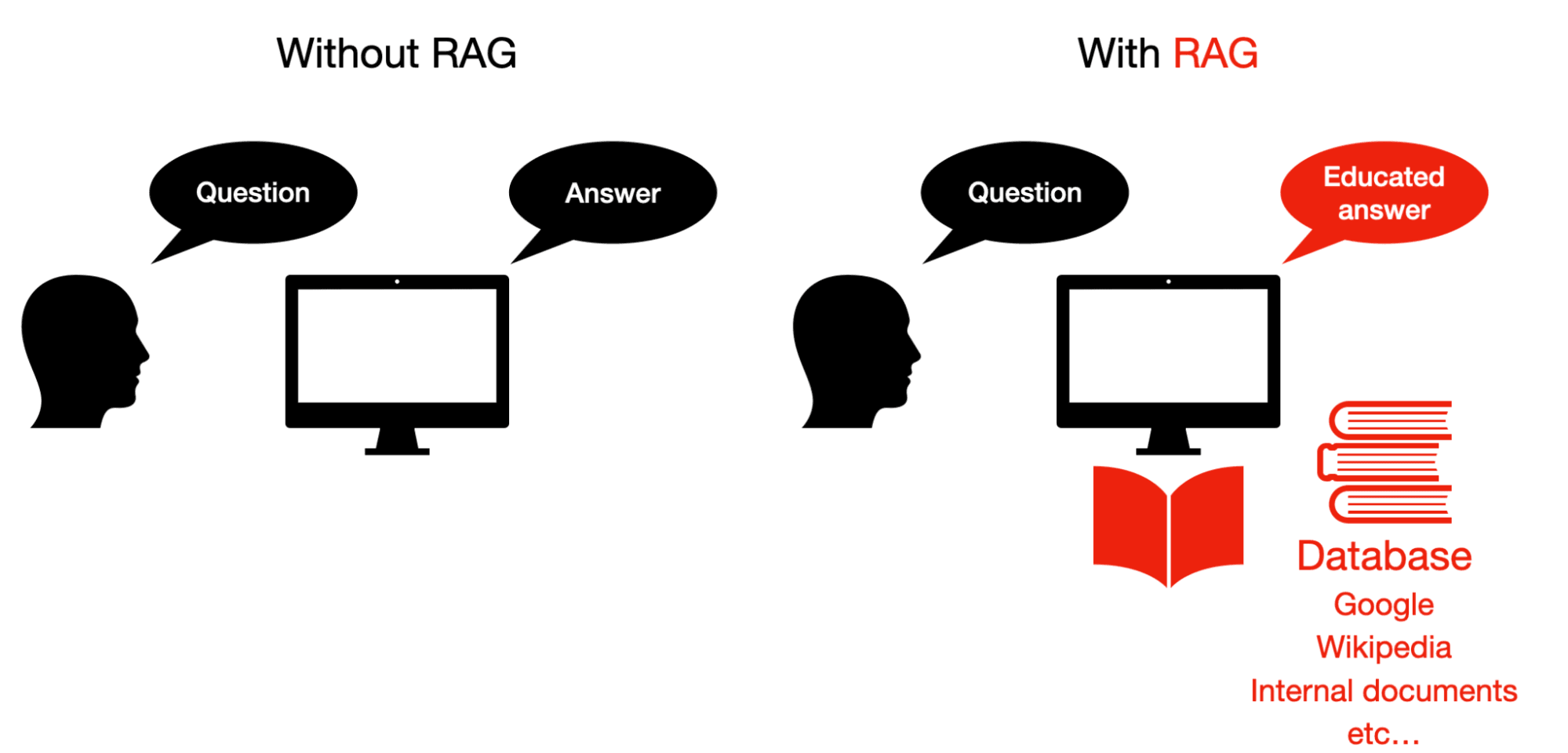

The most effective solution to these challenges is Retrieval Augmented Generation (RAG). In short, RAG tells the model “before you start talking, search for the answer among these documents, and then talk”. Think of RAG as equipping your LLM with the skills of a librarian and providing it access to a comprehensive library (your knowledge base or database). Instead of generating responses purely from its training data, the LLM first "looks up" relevant information from your provided sources and then uses that information to formulate an educated answer. This process not only improves the accuracy of responses but also allows the LLM to provide sources, directly combating the issue of hallucination.

Figure 1: With RAG, the model will first search for an answer to the user’s query in a database, and then it formulates a response using the query and the retrieved documents.

The RAG workflow typically involves three key steps:

- Query Transformation: The user's query is optimized into an effective search query.

- Information Retrieval: The transformed query is used to search a database for relevant information.

- Response Generation: The retrieved results, along with the original prompt, are fed to the LLM to generate an informed response.

As an example, if the query from the user is “Hmm, I’m trying to find out the best places to eat in Paris, any ideas?”, a good query transformation model (step 1) would turn it into “Restaurants in Paris, highest quality”. The information retrieval system (step 2) would return a list of documents or links containing names and ratings of several restaurants in Paris. The LLM would then formulate a verbal response (step 3) using the modified query and the list of restaurants. Think of it this way: imagine your friend calls you on the phone and asks you for good restaurants in Paris. You first turn their question into a Google query, search for it on Google, look at the first 4 or 5 results, and then respond to them with a sentence or two, giving them recommendations based on what you found. That is exactly the RAG process.
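The three steps can be sketched in a few lines of Python. This is only a toy illustration under stated assumptions: the document list, the stop-word list, and the function names `transform_query`, `retrieve`, and `build_prompt` are all hypothetical, a real query transformation step would typically use a model rather than a stop-word filter, and the final call to an LLM is left out.

```python
import re

# Hypothetical mini knowledge base; a real system would query a search engine.
DOCUMENTS = [
    "Bistro Example: restaurant in Paris, rated 4.7",
    "Cafe Sample: restaurant in Paris, rated 4.5",
    "City museum: opening hours and tickets",
]

# Toy stop-word list standing in for a real query transformation model.
STOP_WORDS = {"hmm", "i'm", "trying", "to", "find", "out", "the", "in", "any", "ideas"}


def words(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def transform_query(user_query):
    """Step 1: strip conversational filler to get a compact search query."""
    return " ".join(w for w in words(user_query) if w not in STOP_WORDS)


def retrieve(search_query, documents, top_k=2):
    """Step 2: rank documents by how many query words they contain."""
    terms = set(words(search_query))
    scored = [(len(terms & set(words(doc))), doc) for doc in documents]
    matches = [item for item in scored if item[0] > 0]
    matches.sort(key=lambda item: item[0], reverse=True)
    return [doc for _, doc in matches[:top_k]]


def build_prompt(user_query, retrieved):
    """Step 3: combine the retrieved context and the question for the LLM."""
    context = "\n".join(f"- {doc}" for doc in retrieved)
    return f"Answer using only these sources:\n{context}\n\nQuestion: {user_query}"


user_query = "Hmm, I'm trying to find out the best places to eat in Paris, any ideas?"
search_query = transform_query(user_query)
retrieved = retrieve(search_query, DOCUMENTS)
prompt = build_prompt(user_query, retrieved)
print(prompt)
```

The prompt that comes out of step 3 is what would be handed to the LLM, so the quality of the answer depends directly on what step 2 managed to retrieve.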

While many associate RAG with vector databases and a separate re-ranking step after retrieval, solutions like Meilisearch simplify this process by integrating the entire workflow and stack. This blog post will focus on the crucial second step (how to search properly), while the next blog post will delve deeper into the entire RAG pipeline and explore how Meilisearch simplifies its deployment and maintenance.

The tools: full-text and semantic search

Effective searching within a text database is paramount for successful RAG. Two prominent methods stand out:

- Full-text search: This method focuses on matching keywords present in your query with those found in the search results.

- Semantic search: This approach retrieves documents that are conceptually closest to your query, understanding the meaning behind the words, not just the words themselves.

The best of both worlds is hybrid search, which, as the name suggests, combines the strengths of both full-text and semantic search. Let's examine when each method excels before diving into how they can be combined.

When is semantic search better?

Semantic search shines when the combined meaning of words is more important than individual keyword matches. Consider the query "Book a trip".

- An article titled "Books about trips" might be highly ranked by full-text search due to keyword overlap, but it's likely not what the user intends.

- An article stating "Schedule your travels with us" is semantically more relevant, even if it shares fewer exact keywords. Semantic search would prioritize the latter, providing a much better result.
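To see why pure keyword matching is misled here, consider a deliberately naive overlap score. This is a sketch for illustration only: the `tokens` and `keyword_overlap` helpers are hypothetical, the plural stripping is crude, and no real full-text engine ranks this simply.

```python
import re


def tokens(text):
    """Lowercase, split into words, and crudely strip a trailing plural 's'."""
    found = re.findall(r"[a-z]+", text.lower())
    return {w[:-1] if w.endswith("s") and len(w) > 3 else w for w in found}


def keyword_overlap(query, document):
    """Fraction of query words that also appear in the document."""
    query_terms = tokens(query)
    return len(query_terms & tokens(document)) / len(query_terms)


query = "Book a trip"
for title in ["Books about trips", "Schedule your travels with us"]:
    print(f"{title!r}: {keyword_overlap(query, title):.2f}")
```

The first title shares two of the three query words, while the second shares none, even though the second is the one the user actually wants. Closing that gap is the job of semantic search.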

When is full-text search better?

Full-text search is superior when you need precise keyword matches, especially for technical terms or specific identifiers. For instance, if your query is "ISO 9001 certification process":

- An article titled "ISO 9001:2015 Certification Checklist" is a perfect match due to the specific terminology.

- An article like "How to achieve quality management compliance" might be semantically similar, but lacks the direct keyword specificity.

Full-text search is also valuable in cases where semantic similarity doesn't equate to the correct answer. For example, if the question is "What color is an apple?", semantic search might return "What color is an orange" as the most similar sentence. While similar, it doesn't provide the direct answer to the original question.

How to combine the results: hybrid search scoring

Once we have results from both full-text and semantic searches, the challenge lies in effectively combining them into a unified, fair ranking. Meilisearch addresses this by assigning and combining scores based on their relevance.

For semantic search, the score is directly based on similarity. Similarity is a measure that's high for closely related pieces of text and low for disparate ones, directly reflecting how well the results conceptually match the query. For example, the similarity between the sentences “hello, how are you” and “hi, how’s it going” is high. The similarity between the sentences “Hello, how are you?” and “I like apples” is low.
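One common way to measure this kind of similarity is the cosine similarity between embedding vectors. The article does not say which measure is used, so treat the choice of cosine as an assumption. The three-dimensional vectors below are made up purely for illustration; real embeddings come from a model and have hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: close to 1 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up "embeddings" (assumption: not produced by any real model).
hello_how_are_you = [0.9, 0.1, 0.2]
hi_hows_it_going = [0.8, 0.2, 0.1]
i_like_apples = [0.1, 0.9, 0.3]

similar = cosine_similarity(hello_how_are_you, hi_hows_it_going)
different = cosine_similarity(hello_how_are_you, i_like_apples)
print(f"greetings: {similar:.2f}, greeting vs apples: {different:.2f}")
```

With these toy vectors, the two greetings point in nearly the same direction and score high, while the greeting and the apples sentence score low.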

For full-text search, RAG methods often employ traditional algorithms like BM25 or TF-IDF to calculate a relevance score for each document, reflecting how well its keywords match the query. Meilisearch, on the other hand, employs another method, an algorithm called bucket sorting.

This process involves organizing documents into progressively smaller "buckets" based on a set of defined ranking rules (such as the number of matched query words, the number of typos, or word proximity), with each subsequent rule acting as a tie-breaker until a precise order is achieved.
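The idea of successive tie-breaking can be sketched as a small recursive function. This is not Meilisearch's implementation: the per-document statistics, the three simplified rules, and the `bucket_sort` helper are assumptions chosen to keep the example short.

```python
from itertools import groupby

# Hypothetical match statistics for four documents.
docs = [
    {"id": "a", "matched_words": 3, "typos": 1, "proximity": 2},
    {"id": "b", "matched_words": 3, "typos": 0, "proximity": 5},
    {"id": "c", "matched_words": 2, "typos": 0, "proximity": 1},
    {"id": "d", "matched_words": 3, "typos": 0, "proximity": 2},
]

# Each rule maps a document to a key where a smaller value ranks higher.
RULES = [
    lambda d: -d["matched_words"],  # more matched words first
    lambda d: d["typos"],           # then fewer typos
    lambda d: d["proximity"],       # then query words closer together
]


def bucket_sort(bucket, rules):
    """Split a bucket with the first rule, then break ties with the rest."""
    if len(bucket) <= 1 or not rules:
        return list(bucket)
    first, rest = rules[0], rules[1:]
    ordered = []
    for _, group in groupby(sorted(bucket, key=first), key=first):
        ordered.extend(bucket_sort(list(group), rest))
    return ordered


ranking = [d["id"] for d in bucket_sort(docs, RULES)]
print(ranking)  # ['d', 'b', 'a', 'c']
```

Documents a, b, and d tie on the first rule, so the typo rule separates a from the other two, and proximity finally decides between b and d. Document c never competes with them because it falls into a lower bucket at the first rule.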

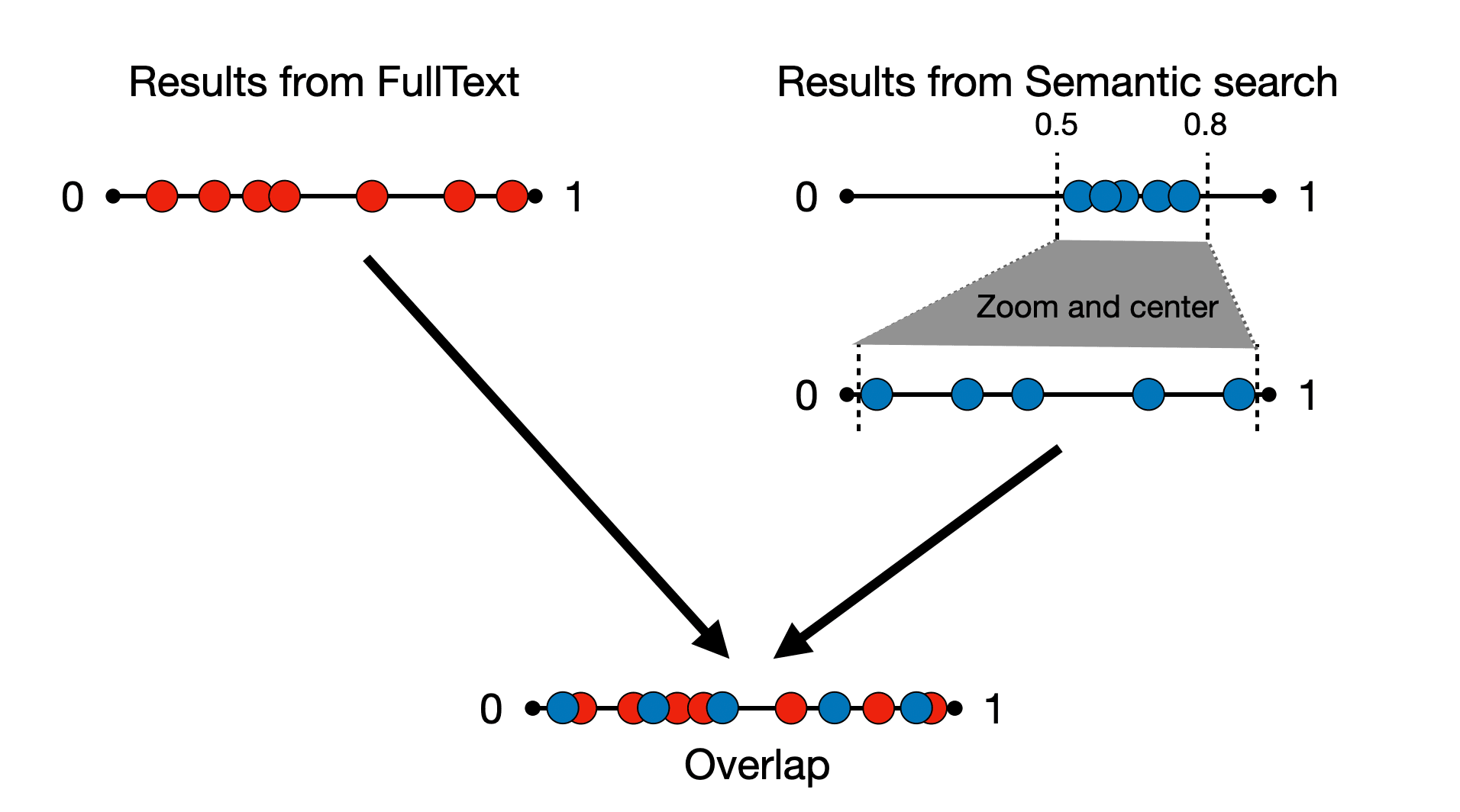

A key aspect of Meilisearch's approach is normalizing these scores. Initial scores from full-text and semantic search often have different ranges. For example, full-text scores might span the whole range from 0 to 1, while semantic similarity scores could be concentrated within a narrower band like 0.5 to 0.8. To ensure comparability, semantic search scores are stretched and centered (a process called an affine transformation), bringing them into the same 0-1 range as full-text scores (Figure 2). This normalization ensures consistency across both types of search results, allowing for a unified relevance score. In other words, this allows the results from both search methods to compete fairly.

Figure 2: The results from Semantic search are stretched (zoomed and centered) in order to combine them with the results of FullText search.
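As a rough sketch of what such a stretch looks like, the function below maps an assumed band of semantic scores onto the 0-1 range. The exact transformation and parameters Meilisearch uses are not described in this article, so the `low` and `high` bounds and the clamping are assumptions made for illustration.

```python
def affine_stretch(scores, low, high):
    """Map the band [low, high] onto [0, 1].

    This is the affine map y = a * x + b with a = 1 / (high - low)
    and b = -low / (high - low). Values outside the band are clamped.
    """
    return [min(1.0, max(0.0, (s - low) / (high - low))) for s in scores]


# Semantic scores concentrated between 0.5 and 0.8, as in the example above.
semantic_scores = [0.5, 0.65, 0.8]
stretched = affine_stretch(semantic_scores, low=0.5, high=0.8)
print([round(s, 2) for s in stretched])
```

After the stretch, a semantic score in the middle of its band lands near 0.5, which makes it directly comparable with a mid-range full-text score.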

This consistent scoring across both search types is crucial. It enables you to decide, at query time, the "semantic ratio", meaning how much weight you want to give to semantic results versus full-text results when combining them.
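In practice, the semantic ratio is passed along with the search request. The sketch below builds such a request using only the Python standard library, without sending it. It reflects our understanding of the `hybrid` search parameter (with its `semanticRatio` and `embedder` fields), so check the API reference for your Meilisearch version. The URL, the index name `docs`, and the embedder name `default` are assumptions, and a secured instance would also need an `Authorization` header.

```python
import json
import urllib.request


def build_hybrid_search_request(base_url, index_uid, query, semantic_ratio, embedder):
    """Build (but do not send) a POST request for a hybrid search."""
    payload = {
        "q": query,
        # 0.0 means full-text only, 1.0 means semantic only.
        "hybrid": {"semanticRatio": semantic_ratio, "embedder": embedder},
    }
    return urllib.request.Request(
        url=f"{base_url}/indexes/{index_uid}/search",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_hybrid_search_request(
    base_url="http://localhost:7700",  # assumed local instance
    index_uid="docs",                  # hypothetical index name
    query="ISO 9001 certification process",
    semantic_ratio=0.3,                # lean towards keyword matches
    embedder="default",                # hypothetical embedder name
)
print(request.full_url)
print(request.data.decode("utf-8"))
# urllib.request.urlopen(request) would send it to a running instance.
```

A keyword-heavy query like this one is a natural candidate for a low ratio, while a conversational query such as "Book a trip" would benefit from a higher one.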

Benefits of hybrid search: precision and recall

To understand the power of hybrid search, let's briefly review the concepts of precision and recall, two fundamental metrics in information retrieval. The importance of each metric varies depending on the specific problem.

Imagine you have an email spam filter:

- Recall measures the percentage of all truly spam emails that your filter successfully identified as spam (high recall means that few actual spam emails still landed in your inbox).

- Precision measures, among the emails your filter flagged as spam, what percentage were actually spam (high precision means that few legitimate emails were mistakenly sent to your spam folder).

In the context of searching for answers to a query in a database:

- Recall measures the percentage of all relevant articles (those containing the answer) that we successfully retrieved.

- Precision measures the percentage of retrieved articles that actually contain the answer.

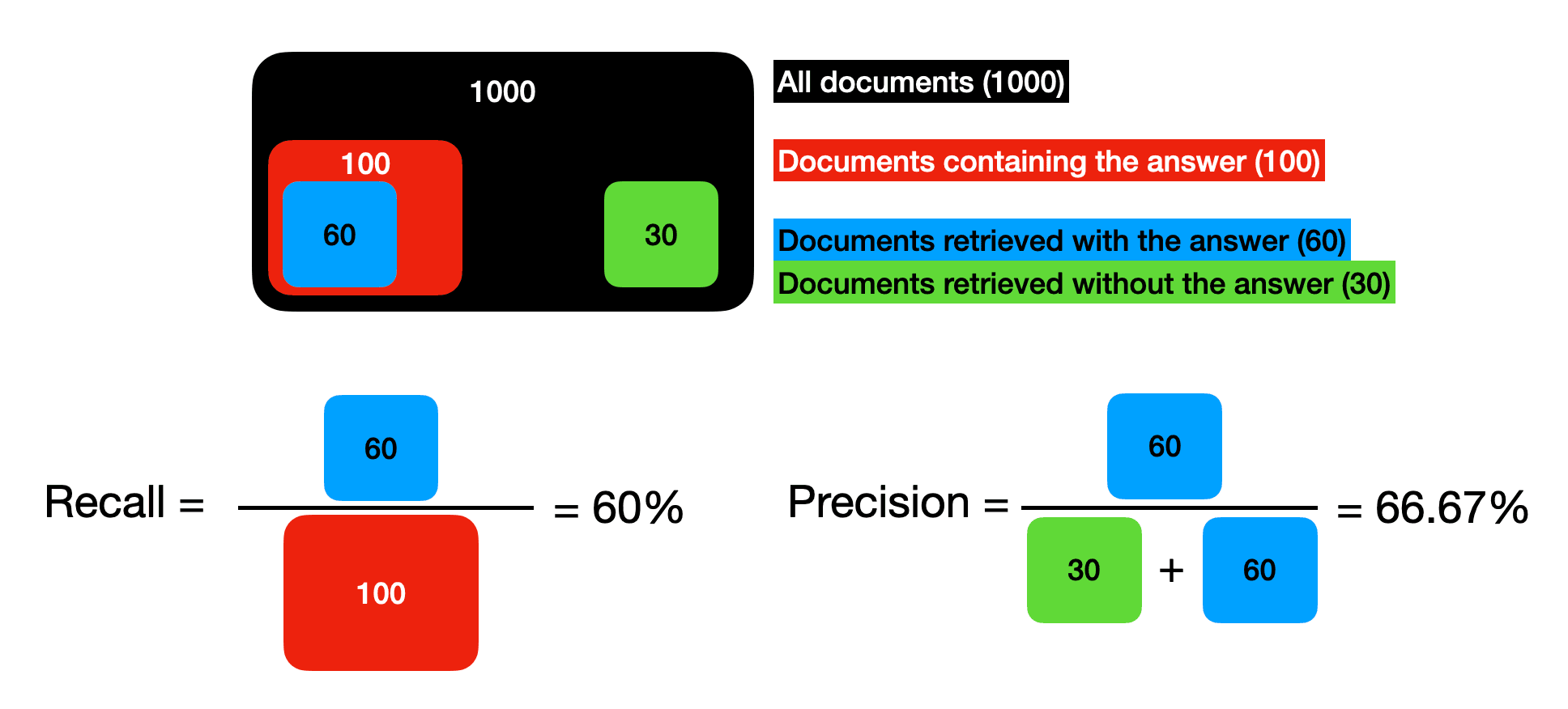

Let's illustrate with a numerical example: Suppose we have 1000 articles, and 100 of them answer the query "What documents do I need to open a company?". Our search model retrieves 90 articles. And out of those 90 articles, 60 contained the answer to the query.

- If, out of the 100 relevant articles, our model successfully retrieved 60, then the recall is 60/100=60%.

- If, out of the 90 retrieved articles, 60 contained the answer and the other 30 did not, then the precision is 60/90≈66.67%.

Figure 3: Example of precision and recall.
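The same arithmetic in code, using the numbers from the example above. The function name `precision_recall` is just an illustrative choice.

```python
def precision_recall(retrieved_relevant, retrieved_total, relevant_total):
    """Return (precision, recall) from three counts."""
    precision = retrieved_relevant / retrieved_total
    recall = retrieved_relevant / relevant_total
    return precision, recall


# 100 relevant articles exist, 90 were retrieved, 60 of those are relevant.
precision, recall = precision_recall(
    retrieved_relevant=60, retrieved_total=90, relevant_total=100
)
print(f"precision = {precision:.2%}, recall = {recall:.2%}")
# precision = 66.67%, recall = 60.00%
```

Note that the 1000 total articles never enter the calculation: both metrics only care about what was retrieved and what was relevant.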

Hybrid search and precision-recall

Recall: Hybrid search significantly enhances recall. If your initial search is poor, a re-ranking step cannot magically fix it; it can only reorder the results it's given. I like to think of this as "rearranging deck chairs on the Titanic": no matter how well you reorder the results, the outcome won’t be good if the relevant documents were never retrieved. Hybrid search, by combining the strengths of full-text and semantic approaches, effectively expands the initial pool of relevant documents, building a much stronger ship.

Precision: Hybrid search also bolsters precision. Full-text search naturally promotes high precision for queries requiring specific keyword matches, as it's difficult for a document with many exact word overlaps to be irrelevant. For those trickier edge cases where a document might share many words with the query but be on a different topic, semantic search steps in, ensuring that only truly related articles are prioritized.

By intelligently combining the strengths of both full-text and semantic search, hybrid search offers a powerful approach to information retrieval, ensuring that RAG systems have access to the most relevant and accurate information for generating insightful and reliable responses. In our next blog post, we'll delve deeper into the entire RAG pipeline and explore how Meilisearch simplifies its deployment and maintenance.

Optimizing performance: binary quantization and arroy

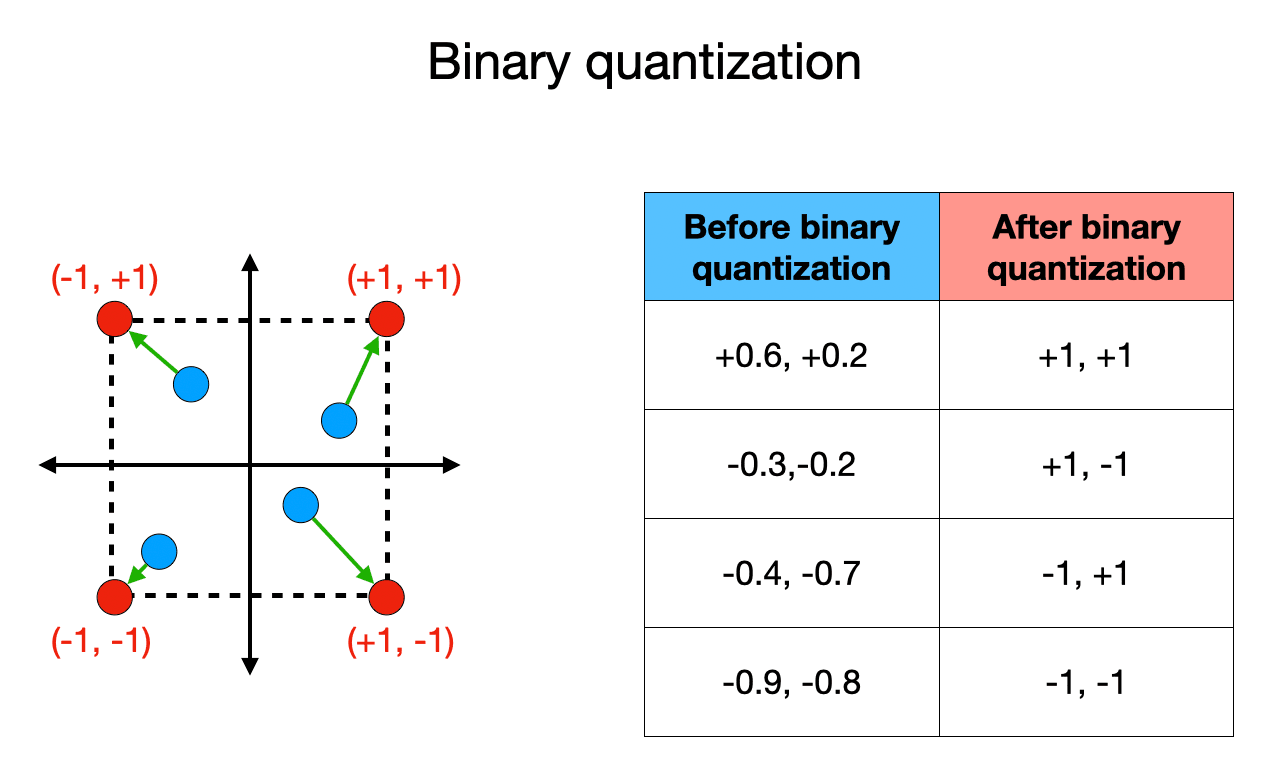

Beyond the core logic of hybrid search, efficient vector management is crucial for large-scale RAG systems. This is where Binary Quantization (BQ) comes in. BQ dramatically shrinks vector embeddings by converting 32-bit floats to compact 1-bit representations, significantly reducing disk space and memory usage. In short, binary quantization simplifies a number by only taking its sign. For example, the numbers 0.4, -0.5, and 0.6 will be turned into +1, -1, and +1. While this looks like a broad oversimplification, when we’re dealing with really long vectors of hundreds or thousands of entries, BQ really speeds things up in similarity calculations, while keeping most of the information.

Figure 4: Binary quantization turns numbers into their sign, effectively pushing them to the corners of a square. Notice that the distance between points changes slightly, but the calculations become much simpler.
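Here is a minimal sketch of the idea, using the three numbers from the example above. Storing each sign as one bit of an integer (1 for positive, 0 for negative) and comparing vectors by counting differing bits (Hamming distance) are assumptions made for illustration; the article does not state how the quantized vectors are stored or compared internally.

```python
def binarize(vector):
    """Keep only the sign of each component, packed into an integer.

    A bit of 1 stands for +1 (non-negative) and 0 stands for -1.
    """
    bits = 0
    for value in vector:
        bits = (bits << 1) | (1 if value >= 0 else 0)
    return bits


def hamming_distance(a_bits, b_bits):
    """Number of positions where the two sign patterns differ."""
    return bin(a_bits ^ b_bits).count("1")


v1 = [0.4, -0.5, 0.6]  # signs: +1, -1, +1
v2 = [0.3, 0.2, 0.7]   # signs: +1, +1, +1

b1 = binarize(v1)
b2 = binarize(v2)
print(f"{b1:03b} vs {b2:03b}: distance {hamming_distance(b1, b2)}")
# 101 vs 111: distance 1
```

Each component now takes one bit instead of 32, and comparing two vectors reduces to an XOR and a bit count, which is where the speed-up comes from.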

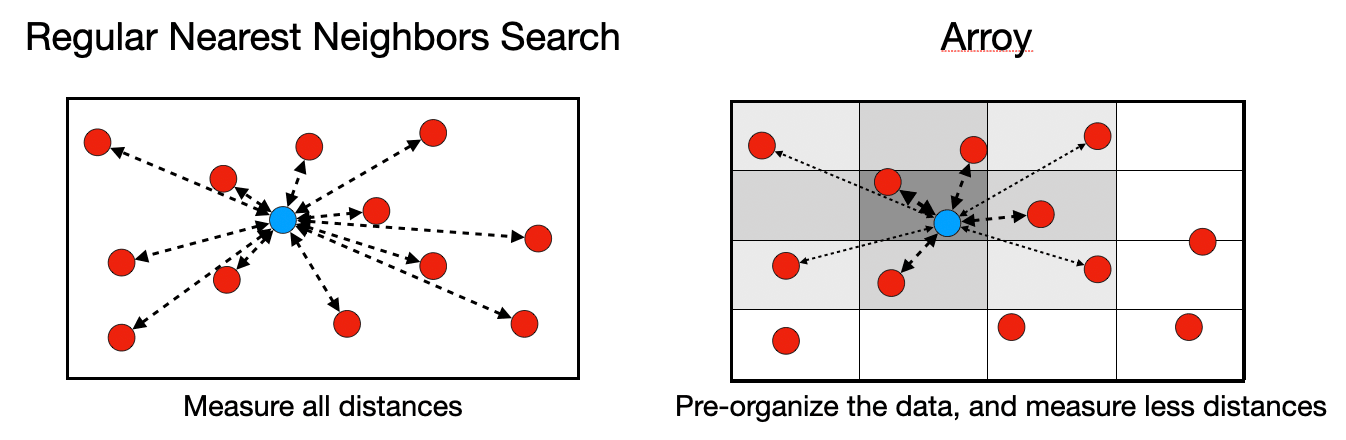

Meilisearch also leverages Arroy, its Rust-based vector store designed for efficient Approximate Nearest Neighbors (ANN) search. Exact nearest neighbors search is very effective, but it can be really slow, because the distance from the query to every piece of text in the embedding space has to be computed and compared. Arroy provides several speed-up techniques that help the search ‘guess’ where the closest articles are in the embedding space.

Figure 5: Regular nearest neighbors search is effective, but too many distances need to be calculated. Arroy uses different techniques to organize data, so that far fewer distances need to be calculated. In this example, a grid is used, and only the neighboring cells are searched, but in general, many other techniques, including tree-based techniques, are used to organize the data for effective and fast nearest neighbors search.
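The grid idea from the figure can be sketched in two dimensions. This illustrates the general principle only and is not how Arroy is implemented: the `build_grid` and `approximate_nearest` helpers, the points, and the cell size are all hypothetical.

```python
import math
from collections import defaultdict


def cell_of(point, cell_size):
    """Grid cell that contains a 2D point."""
    return (math.floor(point[0] / cell_size), math.floor(point[1] / cell_size))


def build_grid(points, cell_size):
    """Index every point by the cell it falls into."""
    grid = defaultdict(list)
    for point in points:
        grid[cell_of(point, cell_size)].append(point)
    return grid


def approximate_nearest(grid, query, cell_size):
    """Compare distances only within the query's cell and its 8 neighbors."""
    cx, cy = cell_of(query, cell_size)
    candidates = [
        point
        for dx in (-1, 0, 1)
        for dy in (-1, 0, 1)
        for point in grid.get((cx + dx, cy + dy), [])
    ]
    if not candidates:
        return None  # the true nearest point lies outside the searched cells
    return min(candidates, key=lambda point: math.dist(point, query))


points = [(0.2, 0.3), (1.1, 1.2), (5.0, 5.0), (9.5, 0.5)]
grid = build_grid(points, cell_size=1.0)
nearest = approximate_nearest(grid, (1.0, 1.0), cell_size=1.0)
print(nearest)  # (1.1, 1.2)
```

Only two of the four distances are computed for this query. The trade-off is the "approximate" in ANN: a query whose true nearest neighbor sits outside the searched cells, such as `(7.0, 7.0)` here, gets no result from this simple version.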

This powerful combination of Binary Quantization and Arroy allows Meilisearch to handle vast knowledge bases with remarkable indexing speed and performance, ensuring your RAG system delivers fast, accurate answers even for high-dimensional embeddings.

Explore Meilisearch’s capabilities today!

The power of RAG lies not just in the LLM's ability to generate text, but in its foundation of accurate and relevant information retrieval. As we've seen, embracing hybrid search, which skillfully blends the specificity of full-text search with the contextual understanding of semantic search, is key to overcoming the challenges of hallucination and delivering precise answers. With Meilisearch, implementing this crucial search layer into your RAG pipeline becomes remarkably straightforward, allowing you to focus on building truly intelligent applications.

Ready to supercharge your RAG system with unparalleled search relevance?

Explore Meilisearch's capabilities today and stay tuned for our next article, where we'll delve into building the complete RAG pipeline with Meilisearch, simplifying its deployment and maintenance.