From RAG to riches: Building a practical workflow with Meilisearch’s all-in-one tool

Walk through a practical RAG workflow with Meilisearch: query rewriting, hybrid retrieval, and LLM response generation, all simplified by a single, low-latency platform.

In our previous post, we dove into the complexities of hybrid search, a powerful technique that combines the precision of keyword-based searching with the contextual understanding of vector-based searching. We learned that this dual approach is essential for retrieving relevant information from your data.

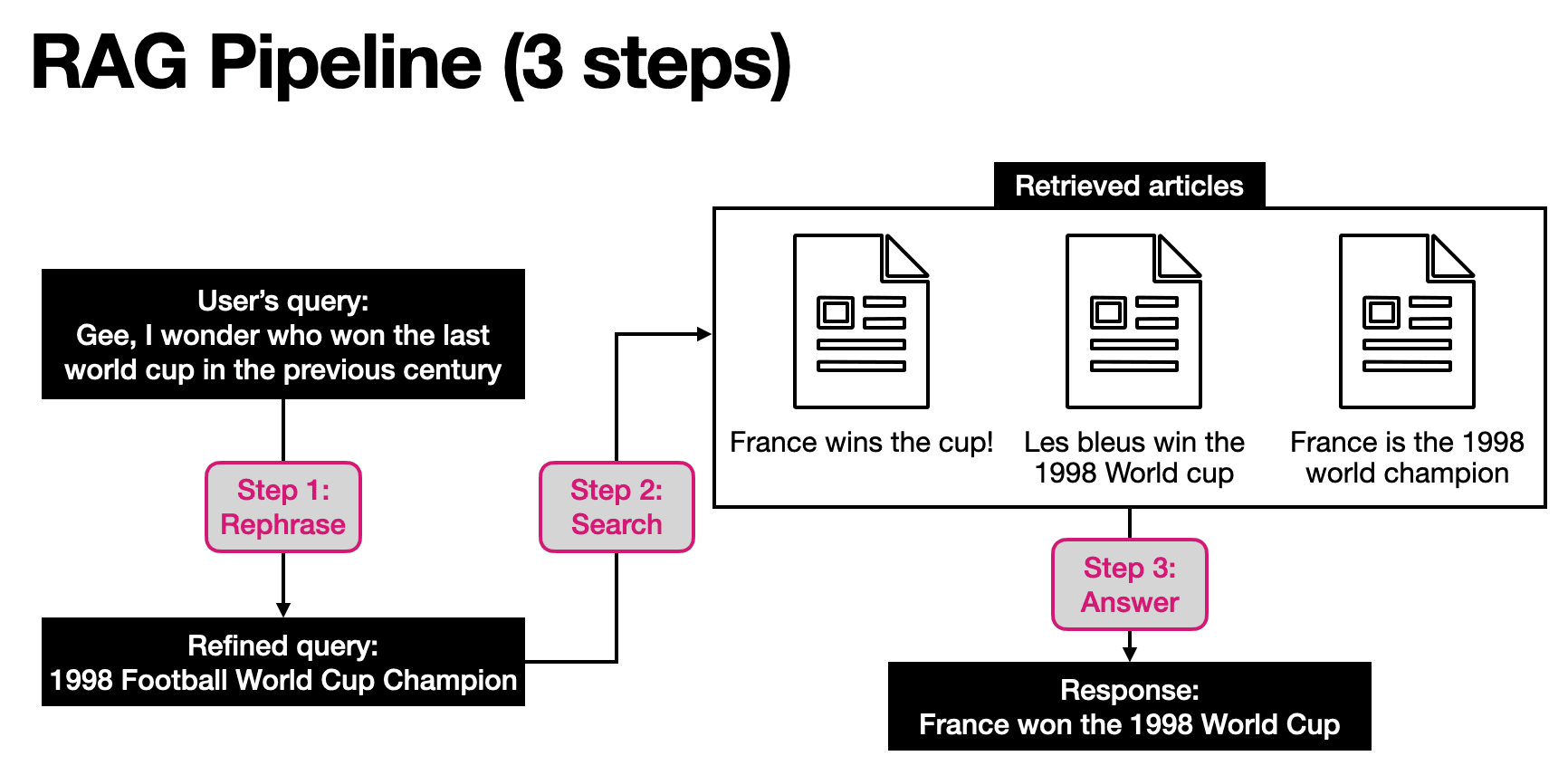

But a great search is only part of the story. Today, we'll zoom out and explore the complete Retrieval Augmented Generation (RAG) workflow, showing how Meilisearch simplifies the entire process. In short, RAG has three steps: query rewriting (reformulating the user's question), retrieval (searching a database for documents relevant to the question), and generation (using the question and the search results to formulate an answer). While our last discussion focused on step 2 of the RAG pipeline (the retrieval step), we'll now cover the whole system, from preparing the initial query to generating the final, informed response.
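The three steps compose into a simple pipeline. The sketch below is a hypothetical skeleton, not Meilisearch's API: `rag_answer`, `toy_rewrite`, `toy_retrieve`, and `toy_generate` are illustrative names, and the toy functions stand in for an LLM rewriter, a search engine, and an LLM generator so the code runs on its own.

```python
from typing import Callable, List

# Hypothetical type aliases for the three pluggable stages.
Rewriter = Callable[[str], str]
Retriever = Callable[[str], List[str]]
Generator = Callable[[str, List[str]], str]


def rag_answer(question: str, rewrite: Rewriter, retrieve: Retriever, generate: Generator) -> str:
    """Run the three RAG steps in order."""
    search_query = rewrite(question)      # Step 1: query rewriting
    documents = retrieve(search_query)    # Step 2: retrieval
    return generate(question, documents)  # Step 3: generation uses the ORIGINAL question


# Toy stand-ins so the sketch runs without any external service.
def toy_rewrite(question: str) -> str:
    return "1998 World Cup winner"


def toy_retrieve(query: str) -> List[str]:
    corpus = {"1998 World Cup winner": ["France was the 1998 World Cup champion."]}
    return corpus.get(query, [])


def toy_generate(question: str, docs: List[str]) -> str:
    # A real generator would be an LLM call; this one just echoes the top document.
    return docs[0] if docs else "I couldn't find any information on that topic."


print(rag_answer("Who won the last World Cup of the 20th century?",
                 toy_rewrite, toy_retrieve, toy_generate))
# France was the 1998 World Cup champion.
```

Keeping each stage behind a plain function signature makes it easy to swap one implementation (say, a different LLM for rewriting) without touching the others.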

The Motivation for RAG

Before we get into the nuts and bolts, let's briefly recap why RAG is a game-changer. Large Language Models (LLMs) are remarkable at generating human-like text, but they have two significant limitations:

- Limited Knowledge: LLMs are trained on vast amounts of public data, but their knowledge is static. They can't access real-time information (e.g., the results of yesterday’s game) or specific, private data (e.g., your company's internal documents).

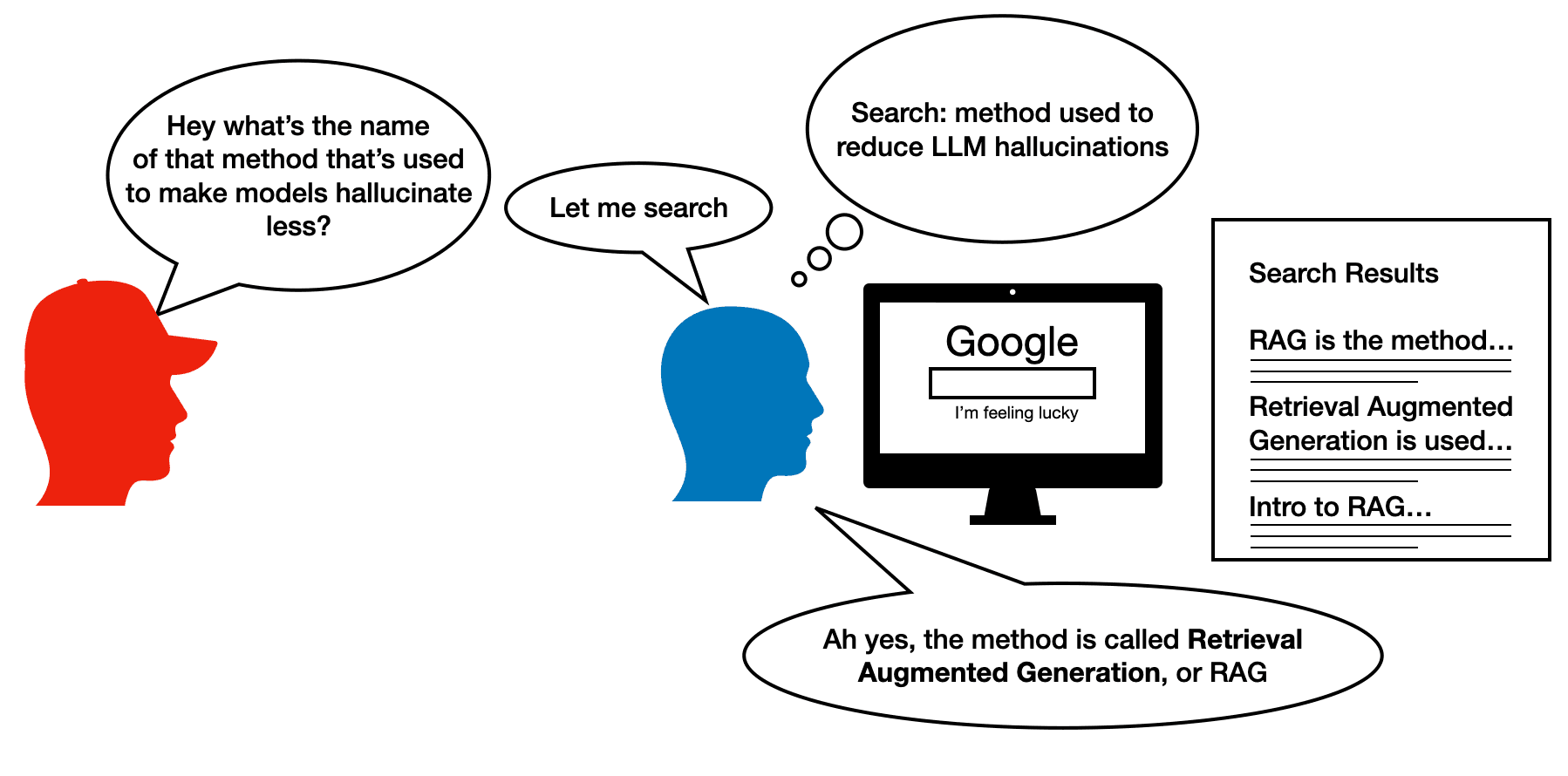

- Hallucinations: When faced with a question they don't have a definitive answer for, LLMs will sometimes "hallucinate": they make up plausible-sounding but factually incorrect information. This is because they are designed to predict the next word in a sequence, not to serve as a factual knowledge base. In short, the LLM doesn't even know it is lying; it has no concept of truth, only a kind of muscle memory for which word is most likely to follow the current text.

RAG solves these problems by giving the LLM a new superpower: the ability to "look up" information before answering. It’s like giving an LLM a personal librarian who can find and summarize relevant documents from your private data, and help the LLM formulate the best possible response.

An Analogy: You've Been Doing RAG Your Whole Life

To understand the RAG workflow, let's use a simple, everyday example. Imagine a friend asks you, "Hey, I'm trying to remember who won the last World Cup of the 20th century, but for the life of me I can’t recall, do you know?"

You wouldn't just type their exact question into Google. Instead, your brain performs a series of RAG-like steps:

- Query Rephrasing: You rephrase their question into a more effective search query, like "1998 World Cup winner" or "football world cup champion late 90s." You know that a good search query is precise and uses keywords that are likely to appear in the documents you want to find.

- Information Retrieval: You type your refined query into Google, which then retrieves a list of web pages, articles, and other sources that are likely to contain the answer. This is the retrieval step.

- Synthesis and Generation: You quickly scan the top few search results, read the key information (e.g., several articles with titles such as "France was the 1998 World Cup champion", “Les Bleus have won the cup!”, or “Zidane and his team have won the 1998 World Cup”), and then, based on the articles you skimmed, formulate a clear, concise response for your friend: "France won the 1998 World Cup." This is the generation step.

This is exactly what RAG does. It's a three-step process that allows an LLM to answer questions using external, up-to-date, or private data.

The RAG Workflow in Detail

The RAG process can be broken down into three main stages.

Step 1: Generating a Better Search Query

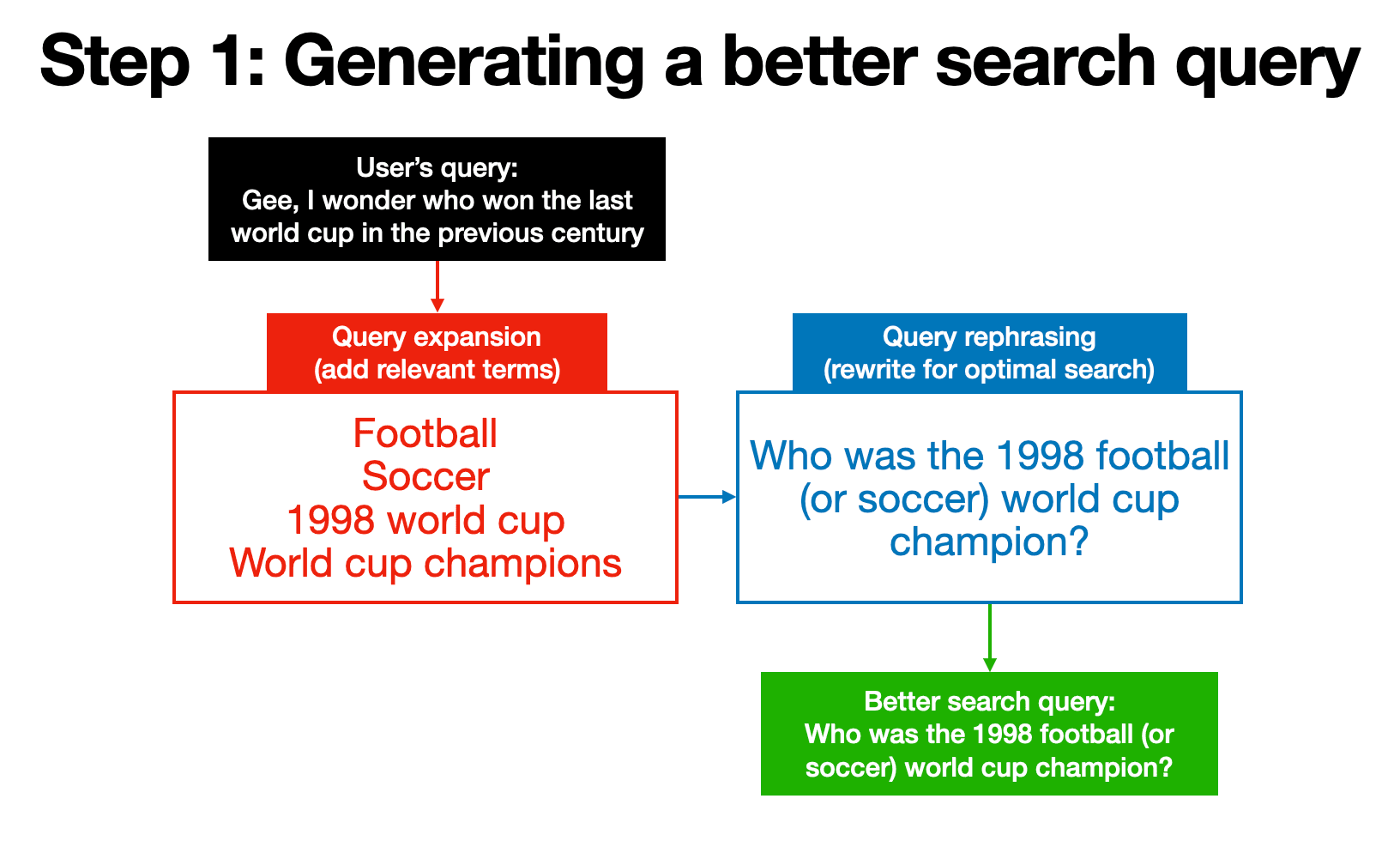

The user's initial question is rarely the best search query. A well-crafted search query is crucial for retrieving the most relevant documents. This step involves techniques to improve the initial user query:

- Query Expansion: Adding synonyms or related terms to broaden the search. For example, if a user asks about "Meilisearch's documentation," a query expansion model might also include terms like "Meilisearch docs" or "Meilisearch reference." If the query is “Who won the world cup at the end of the last century”, a query expansion model may include terms like “1998 world cup”, “soccer world champions”, etc.

- Query Rephrasing: Rewording the query to make it more direct and concise, helping the search engine find a better match. A user query like "What are some of the features of Meilisearch's hosted solution?" could be rephrased to "Meilisearch Cloud features." If the query is something poorly written like “Gee, I wonder who won the last world cup in the previous century”, the model can rephrase it as “1998 football world cup champion.”

These methods ensure that even if the user's phrasing is a bit clunky or non-technical, the search engine receives an optimal query.
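Both techniques can be sketched in a few lines. This is a minimal illustration under stated assumptions: `SYNONYMS`, `expand_query`, and `build_rephrase_prompt` are hypothetical names, the synonym table is hand-written for the example (in practice an LLM or a dedicated expansion model would generate the variants), and the rephrasing prompt is just one possible wording to send to whichever LLM you use.

```python
from typing import Dict, List

# Hypothetical, hand-written synonym table; a real system would usually
# ask an LLM or an expansion model to produce these variants.
SYNONYMS: Dict[str, List[str]] = {
    "documentation": ["docs", "reference"],
}


def expand_query(query: str, synonyms: Dict[str, List[str]]) -> List[str]:
    """Query expansion: return the original query plus one variant per known synonym."""
    variants = [query]
    words = query.split()
    for i, word in enumerate(words):
        for alt in synonyms.get(word.lower(), []):
            # Swap in the synonym at the same position, keeping the other words.
            variants.append(" ".join(words[:i] + [alt] + words[i + 1:]))
    return variants


# Query rephrasing: one possible instruction for an LLM (wording is an assumption).
REPHRASE_PROMPT = (
    "Rewrite the user's question as a short keyword search query. "
    "Return only the query.\n\nQuestion: {question}\nQuery:"
)


def build_rephrase_prompt(question: str) -> str:
    """Fill the rephrasing template with the user's raw question."""
    return REPHRASE_PROMPT.format(question=question)


print(expand_query("Meilisearch documentation", SYNONYMS))
# ['Meilisearch documentation', 'Meilisearch docs', 'Meilisearch reference']
```

Sending `build_rephrase_prompt("Gee, I wonder who won the last world cup in the previous century")` to an LLM would ideally come back with something like "1998 football world cup champion".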

Step 2: The Search for an Answer

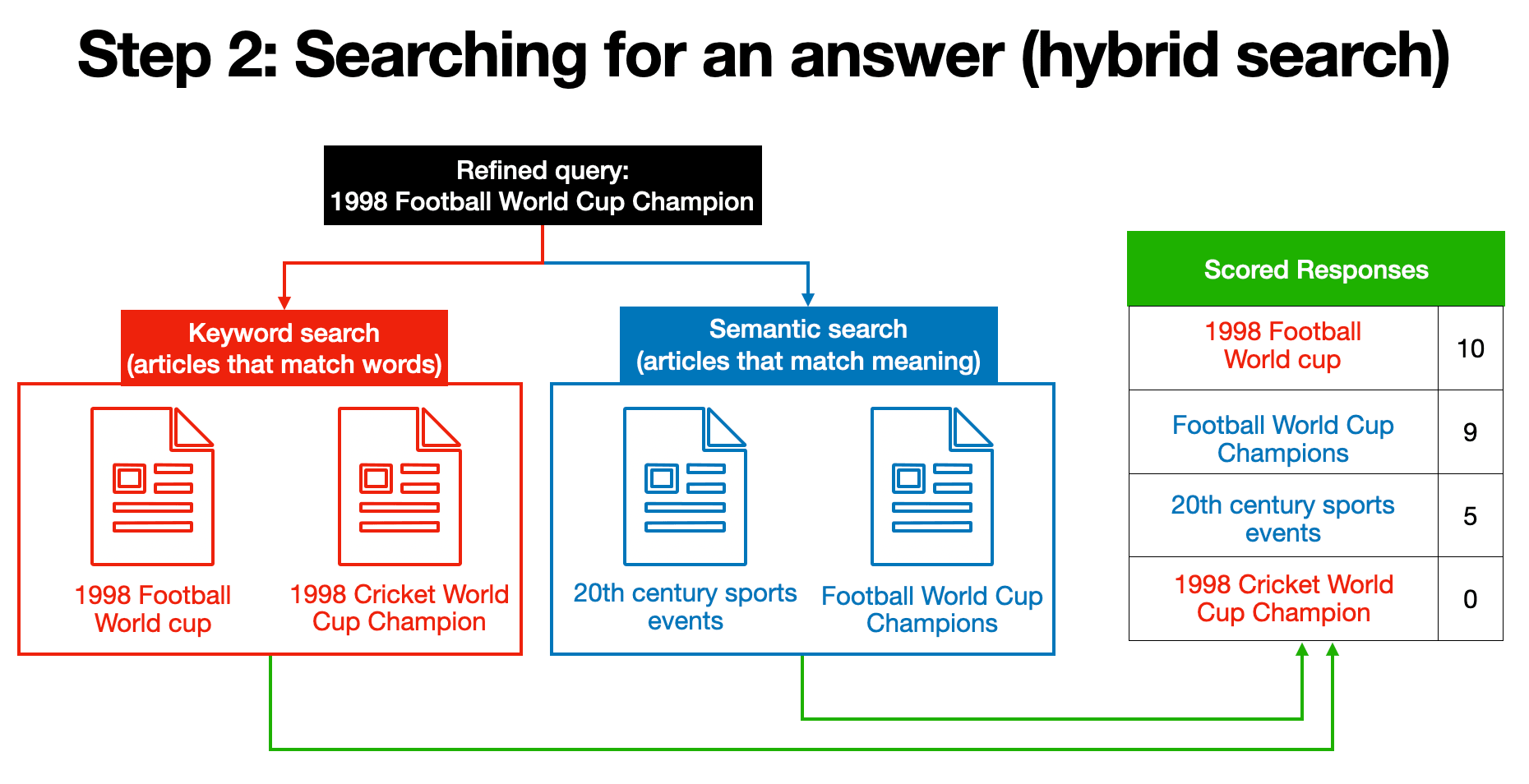

This is where the magic of retrieval happens. As we discussed in our previous post, the most effective way to search is through a hybrid approach. This method combines:

- Full-Text Search: Identifies documents containing the exact keywords from your query. This is great for factual recall and precision.

- Semantic Search: Finds documents that are contextually or semantically similar to your query, even if they don't contain the exact keywords. This is powered by vector embeddings, which represent the meaning of text.
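To make the idea of combining the two signals concrete, here is a toy hybrid scorer. It is a generic illustration, not how Meilisearch merges results internally: it blends a naive keyword-overlap score with the cosine similarity between hand-made three-dimensional vectors (stand-ins for real embeddings), weighted by a hypothetical `semantic_ratio` where 0 means keyword-only and 1 means semantic-only.

```python
import math
from typing import List, Tuple


def keyword_score(query: str, text: str) -> float:
    """Full-text signal: fraction of query terms that appear verbatim in the text."""
    terms = query.lower().split()
    words = set(text.lower().split())
    return sum(t in words for t in terms) / len(terms) if terms else 0.0


def cosine(a: List[float], b: List[float]) -> float:
    """Semantic signal: cosine similarity between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def hybrid_rank(query: str, query_vec: List[float],
                docs: List[Tuple[str, List[float]]], semantic_ratio: float = 0.5) -> List[str]:
    """Blend both signals linearly and return document texts, best first."""
    scored = []
    for text, vec in docs:
        score = (1 - semantic_ratio) * keyword_score(query, text) + semantic_ratio * cosine(query_vec, vec)
        scored.append((score, text))
    return [text for _, text in sorted(scored, key=lambda pair: pair[0], reverse=True)]


# Hand-made toy vectors, NOT output from a real embedding model.
QUERY = "1998 world cup winner"
QUERY_VEC = [0.9, 0.15, 0.0]
DOCS = [
    ("France won the 1998 World Cup", [0.9, 0.1, 0.0]),
    ("Les Bleus lift the trophy", [0.8, 0.2, 0.1]),
    ("Meilisearch Cloud features", [0.0, 0.1, 0.9]),
]

print(hybrid_rank(QUERY, QUERY_VEC, DOCS))
# ['France won the 1998 World Cup', 'Les Bleus lift the trophy', 'Meilisearch Cloud features']
```

The "Les Bleus" document contains none of the query's keywords, so its keyword score is 0; its vector, however, is close to the query's, which is what lets it clearly outrank the unrelated document.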

Meilisearch excels at this step by providing a unified, integrated hybrid search engine. Unlike systems that require you to manage a separate vector database, a keyword search engine, and a re-ranking model, Meilisearch handles all of this out of the box, merging keyword and semantic results into a single ranked list with its built-in relevancy scoring. This reduces complexity and operational overhead.
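With Meilisearch, that hybrid retrieval is a single HTTP call to the search endpoint with a `hybrid` parameter. The sketch below builds such a request with Python's standard library. It assumes a local instance at Meilisearch's default address, a hypothetical index named `articles`, an embedder already configured in the index settings under the hypothetical name `default`, and a placeholder API key; check the Meilisearch API reference for your version before relying on the exact parameters.

```python
import json
import urllib.request

MEILI_URL = "http://localhost:7700"  # Meilisearch's default local address
API_KEY = "YOUR_SEARCH_API_KEY"      # placeholder, replace with a real search key
INDEX_UID = "articles"               # hypothetical index name
EMBEDDER = "default"                 # hypothetical; must match an embedder in the index settings


def build_hybrid_search_request(query: str, semantic_ratio: float = 0.5,
                                limit: int = 5) -> urllib.request.Request:
    """Build (but do not send) a hybrid search request for one index."""
    body = {
        "q": query,
        "hybrid": {"embedder": EMBEDDER, "semanticRatio": semantic_ratio},
        "limit": limit,
        "showRankingScore": True,  # include each hit's relevancy score in the response
    }
    return urllib.request.Request(
        f"{MEILI_URL}/indexes/{INDEX_UID}/search",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {API_KEY}"},
        method="POST",
    )


def search(query: str) -> list:
    """Send the request to a running Meilisearch instance and return the hits."""
    with urllib.request.urlopen(build_hybrid_search_request(query)) as resp:
        return json.load(resp)["hits"]
```

`semanticRatio` sets the balance between keyword and semantic results (0.0 is keyword-only, 1.0 is semantic-only). Against a running instance, `search("1998 World Cup winner")` returns the merged hits, ready to hand to the generation step.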

Step 3: Generating a Good Response

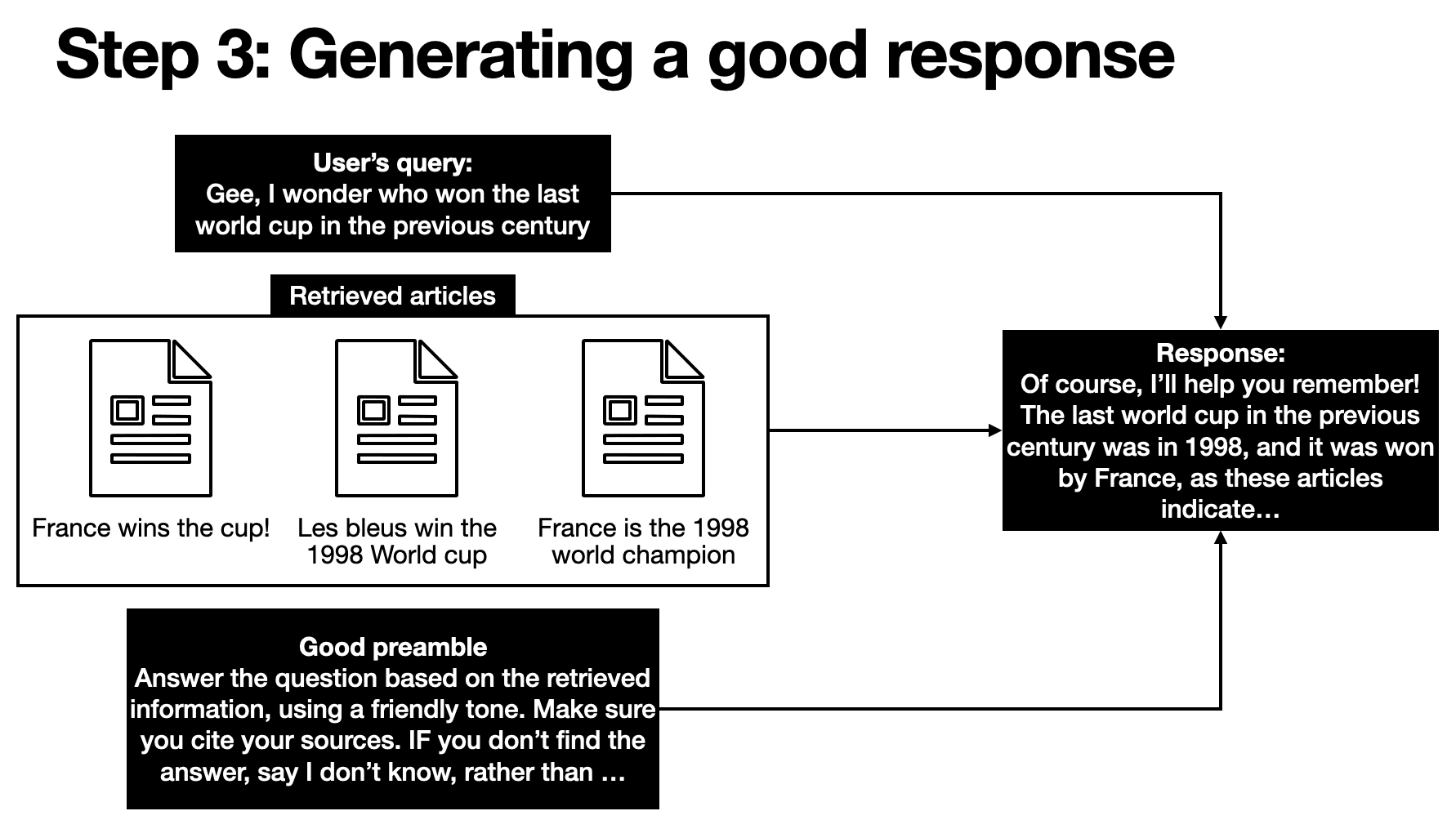

Once the most relevant documents are retrieved, the LLM is given two key pieces of information:

- The Original User Query: The question the user asked.

- The Retrieved Documents: The "context" or "ground truth" information found in step 2.

These are combined with a carefully crafted preamble or system prompt, which provides the LLM with specific instructions. This is a critical step for preventing hallucinations. A good preamble does the following:

- Instructs Synthesis: Tells the LLM to synthesize information from the provided documents rather than just repeating them.

- Requires Citations: Instructs the LLM to cite the sources it used, reinforcing accuracy and giving the user confidence in the answer.

- Handles Ambiguity: Instructs the LLM to explicitly state when it cannot find an answer in the provided documents (e.g., "I couldn't find any information on that topic"), preventing it from generating a fabricated response.
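A preamble implementing those three rules might look like the sketch below. The wording of `PREAMBLE` is only an example, `build_messages` is a hypothetical helper, and the list of `{"role", "content"}` dictionaries follows the chat-message shape many LLM APIs accept; adapt it to your provider's client library.

```python
from typing import Dict, List

# Example wording only: one rule per requirement (synthesis, citations, ambiguity).
PREAMBLE = (
    "You are a helpful assistant. Answer the user's question using ONLY the documents below.\n"
    "- Synthesize the relevant information in your own words instead of copying the documents.\n"
    "- Cite the documents you used with their bracketed numbers, e.g. [1].\n"
    "- If the documents do not contain the answer, reply exactly: "
    "\"I couldn't find any information on that topic.\""
)


def build_messages(question: str, documents: List[str]) -> List[Dict[str, str]]:
    """Combine the preamble, the retrieved documents, and the original question."""
    # Number each document so the model has something concrete to cite.
    context = "\n".join(f"[{i}] {doc}" for i, doc in enumerate(documents, start=1))
    return [
        {"role": "system", "content": PREAMBLE},
        {"role": "user", "content": f"Documents:\n{context}\n\nQuestion: {question}"},
    ]


messages = build_messages(
    "Who won the last World Cup of the 20th century?",
    ["France was the 1998 World Cup champion.", "Zidane and his team have won the 1998 World Cup."],
)
```

Keeping the rules in the system message and the documents in the user message separates fixed instructions from per-query context.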

Meilisearch Advantages: Streamlining, Latency, and More

A Streamlined RAG System

Building a RAG pipeline from scratch can be a complex and brittle process. It often involves integrating multiple services: a traditional search engine, a vector database, an embedding model, and a re-ranking model. Each of these components needs to be deployed, configured, and maintained independently.

This is where Meilisearch's approach stands out.

Meilisearch simplifies the RAG workflow by providing a single, powerful platform that integrates hybrid search natively. Instead of managing a complex web of interconnected services, developers can leverage Meilisearch's unified solution. This dramatically reduces operational overhead and the number of moving parts that can break or fall out of sync.

- Simplified Deployment: Meilisearch's all-in-one architecture means you can get your RAG system up and running faster. There's no need to spend time configuring separate APIs or dealing with compatibility issues between different tools.

- Easier Maintenance: When new embedding models are released or your data schema changes, updating a multi-component system can be a nightmare. Meilisearch's integrated approach means that updates and fine-tuning are managed from a single point, making your system more robust and easier to evolve.

Latency Improvements

One of the most significant performance advantages of Meilisearch's unified approach is latency. In a multi-component RAG system, the user query might have to go through several network hops and API calls:

1. Query to an embedding model (API call).

2. Embeddings sent to a vector database (API call).

3. Query sent to a full-text search engine (API call).

4. Results from both are sent to a re-ranking model (another API call).

5. Finally, the re-ranked results are sent to the LLM (API call).

Each of these steps adds milliseconds of latency. Meilisearch performs the retrieval steps (embedding the query, running keyword and semantic search, and merging the results) within a single search request, drastically reducing the total time from query to retrieved documents. This leads to a much faster and more responsive user experience.
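The latency argument is simple arithmetic: in a sequential pipeline, every extra network hop adds its overhead to the total. The hypothetical helper below models that under a deliberately simplified assumption (a fixed overhead per hop on top of each stage's processing time). The inputs are placeholder numbers, not benchmarks; substitute timings measured in your own environment. Note that if Meilisearch is configured with a remote embedder, it still calls that provider, so count that hop as well.

```python
from typing import List


def retrieval_latency_ms(processing_ms: List[float], network_hops: int,
                         per_hop_overhead_ms: float) -> float:
    """Sequential pipeline: total processing time plus a fixed network cost per hop."""
    return sum(processing_ms) + network_hops * per_hop_overhead_ms


# Placeholder inputs, NOT measurements: the same four processing stages
# (embed, vector search, keyword search, re-rank) in both setups.
stages = [30.0, 10.0, 5.0, 20.0]
overhead = 15.0

separate_services = retrieval_latency_ms(stages, network_hops=4, per_hop_overhead_ms=overhead)
single_request = retrieval_latency_ms(stages, network_hops=1, per_hop_overhead_ms=overhead)
print(separate_services - single_request)  # 45.0, i.e. three avoided hops x 15 ms
```

Even when the processing work is identical, collapsing four client-visible hops into one removes three rounds of network overhead; the real savings depend on your deployment.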

In essence, Meilisearch's unified solution allows you to focus on what matters most: building an amazing user experience powered by your data. It provides the performance, ease of use, and flexibility needed to build and scale a state-of-the-art RAG application without the headaches of managing a complex infrastructure. If you’d like a hands-on tutorial on implementing RAG with Meilisearch, check out this blog post.