Knowledge graph vs. vector database for RAG: which is best?

Learn the key differences between knowledge graphs and vector databases for RAG, when to use each, and how to combine them for optimal results.


The durability and potential of your retrieval-augmented generation (RAG) system depend on the database you choose for it. In RAG information retrieval, vector databases and knowledge graphs serve distinct roles: the former focuses on similarities between vector representations, while the latter focuses on relationships between entities.

In this guide, we will cover:

- What exactly knowledge graphs and vector databases are, and how they work within a RAG system.

- The process through which RAG retrieves and augments information to generate user-friendly responses.

- When you should go for a knowledge graph, and when you should use vector search.

- An in-depth comparison of their data models, along with their scalability and retrieval performance.

- A look into the advantages and limitations of both, and whether or not they can be combined for a better yield.

- How you can use real-world tools and frameworks to build RAG pipelines that are strong and scalable.

By the end, you will have a solid idea of which setup will work best for your goals, data, and production requirements.

Let’s get into it.

What is a knowledge graph?

A knowledge graph is, well, a graph of knowledge. It’s a structured data model that represents entities such as people, places, and products, along with the relationships between them. Entities are stored as nodes and relationships as edges, so a query can follow the connections between data points to answer questions that depend on more than one fact.

Because those connections are explicit, the system can reason over them directly instead of inferring them from raw text.

In AI applications and RAG, knowledge graphs are primarily used for contextual reasoning and compliance monitoring, where the depth and explainability of answers are paramount.
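To make the node-and-edge idea concrete, here is a minimal sketch of a knowledge graph stored as subject, relation, object triples in plain Python. The entities and relation names are invented for illustration, and a real graph database would add indexing, a query language, and persistence on top of this.

```python
from collections import defaultdict

# Hypothetical facts, invented for illustration only.
TRIPLES = [
    ("Alice", "WORKS_AT", "Acme"),
    ("Acme", "LOCATED_IN", "Berlin"),
    ("Alice", "MANAGES", "Bob"),
]


def build_graph(triples):
    """Index triples by subject so each node maps to its outgoing edges."""
    graph = defaultdict(list)
    for subject, relation, obj in triples:
        graph[subject].append((relation, obj))
    return graph


def neighbors(graph, node, relation=None):
    """Return nodes reachable from `node`, optionally filtered by relation."""
    return [obj for rel, obj in graph.get(node, []) if relation in (None, rel)]


graph = build_graph(TRIPLES)
print(neighbors(graph, "Alice"))              # ['Acme', 'Bob']
print(neighbors(graph, "Alice", "WORKS_AT"))  # ['Acme']
```

Each answer here comes from an explicit edge, which is what makes graph results easy to audit.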

What is a vector database?

A vector database runs on vector embeddings. These are more easily understood as numerical representations of text, images, and even audio that capture meaning rather than exact keywords. A query is compared with the stored vectors using a similarity metric, such as cosine similarity, and an approximate nearest neighbor index, such as HNSW, keeps that comparison fast on large collections.

The most popular vector databases, such as Pinecone and Weaviate, power RAG apps by allowing fast retrieval across massive datasets. Most modern semantic and generative AI systems use vector retrieval as their backbone.
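The core operation is easier to see in code. The sketch below ranks a handful of documents by cosine similarity to a query vector. The three-dimensional vectors are made up for illustration (real embeddings come from a model and have hundreds or thousands of dimensions), and the search is brute force, whereas a production vector database would use an index such as HNSW.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up toy "embeddings"; a real system would get these from an embedding model.
DOCS = {
    "employee handbook": [0.9, 0.1, 0.0],
    "quarterly revenue report": [0.1, 0.9, 0.1],
    "office seating chart": [0.4, 0.2, 0.8],
}


def top_k(query_vector, docs, k=2):
    """Brute-force search: score every document, keep the k most similar."""
    scored = [(cosine_similarity(query_vector, vec), name) for name, vec in docs.items()]
    scored.sort(reverse=True)
    return [name for _, name in scored[:k]]


query = [0.8, 0.2, 0.1]  # pretend this is the embedding of "HR policy"
print(top_k(query, DOCS))  # ['employee handbook', 'office seating chart']
```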

How does RAG work?

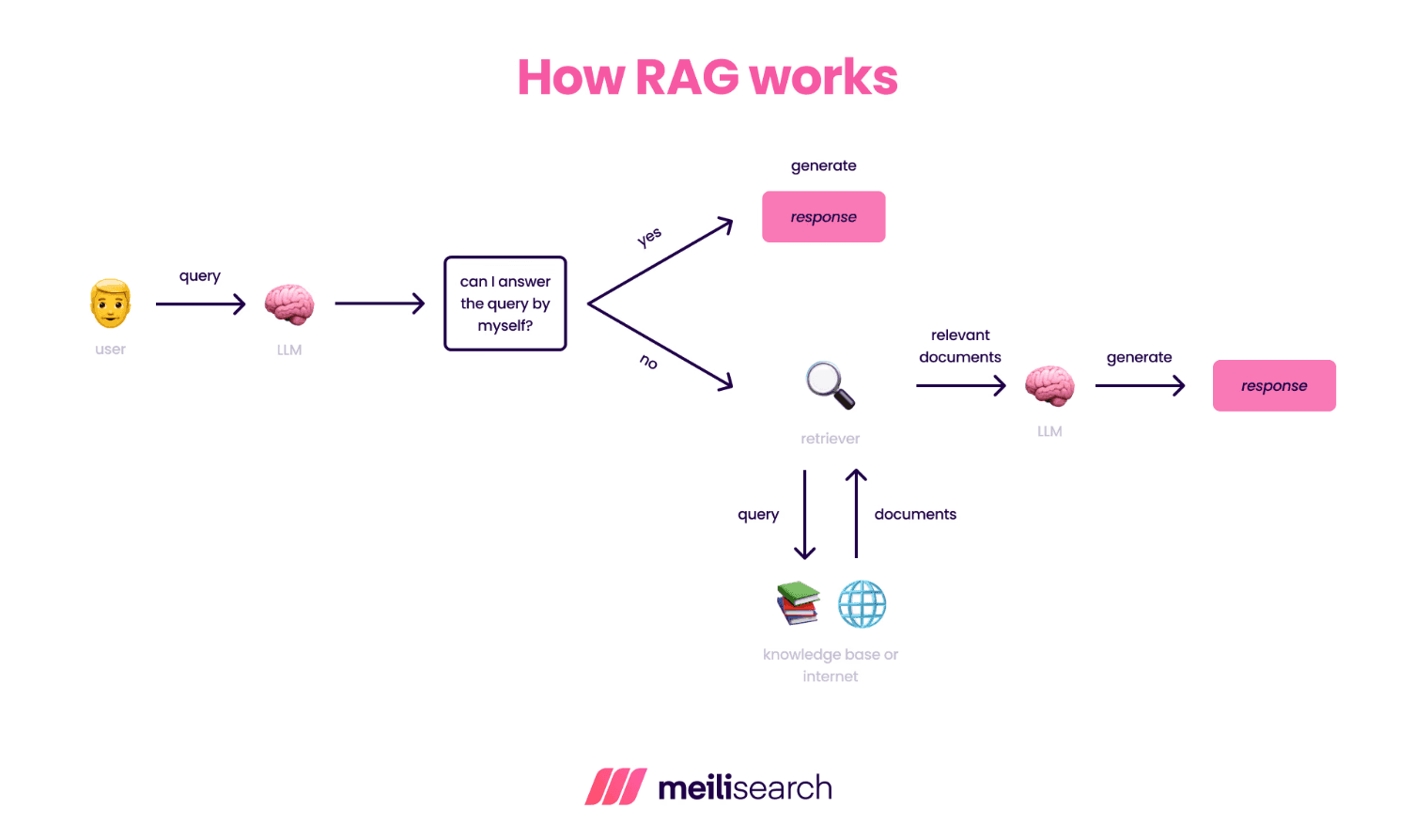

Retrieval-augmented generation is a power-up for large language models (LLMs). It grounds their outputs in external data and adds a smarter, context-aware layer. It works in three steps that are seemingly very simple:

- A retriever searches the knowledge base or vector database for documents relevant to the user’s query.

- The retrieved documents and their context are handed off to the LLM.

- The LLM generates an answer that’s supported by the context and addresses the user’s query.

With this externally fetched context, the likelihood of AI hallucinations or generic answers is significantly reduced.
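The three steps above can be sketched in a few lines of Python. This is a toy under stated assumptions: the retriever ranks by shared words rather than embeddings, the documents are invented, and `generate` is a placeholder that stands in for a real LLM call.

```python
import re


def tokenize(text):
    """Lowercase a string and split it into a set of words."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(query, documents, k=2):
    """Step 1: rank documents by how many words they share with the query."""
    query_words = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query, context_docs):
    """Step 2: hand the retrieved context to the model alongside the question."""
    context = "\n".join(f"- {doc}" for doc in context_docs)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


def generate(prompt):
    """Step 3: placeholder for an LLM call; it only reports the prompt size."""
    return f"[LLM answer based on a prompt of {len(prompt)} characters]"


# Invented documents for illustration.
DOCUMENTS = [
    "Refunds are issued within 14 days of purchase.",
    "Our office is closed on public holidays.",
    "Refunds require the original receipt.",
]

query = "How do refunds work?"
context_docs = retrieve(query, DOCUMENTS)
prompt = build_prompt(query, context_docs)
print(prompt)
print(generate(prompt))
```

Only the two refund documents reach the prompt, which is the grounding effect the pipeline is after.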

Do you need a vector database for RAG?

A dedicated vector database can significantly improve retrieval precision, but it isn’t always a prerequisite for a functional RAG pipeline.

Plenty of teams use hybrid search engines that combine full-text search, keyword extraction, and vector embeddings within a single layer. The benefit of doing this is that they don’t need to rely on multiple services or heavy infrastructure.

For example, Meilisearch offers a simple way to set up your workflow through a single /chat endpoint. It can perform retrieval and generation in a single API call, unlike the traditional multi-step RAG approach.
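To show what combining keyword and vector results in one layer can look like, here is a minimal sketch of reciprocal rank fusion, one common way to merge ranked lists. It is a generic illustration and not a description of how Meilisearch or any other engine ranks results internally. The document IDs are hypothetical, and the constant `k=60` is a conventional default rather than a tuned value.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Merge several ranked lists: each list adds 1 / (k + rank) to a document's score."""
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)


# Hypothetical result lists from a keyword search and a vector search.
keyword_ranking = ["doc_a", "doc_b", "doc_c"]
vector_ranking = ["doc_b", "doc_d", "doc_a"]

fused = reciprocal_rank_fusion([keyword_ranking, vector_ranking])
print(fused)  # ['doc_b', 'doc_a', 'doc_d', 'doc_c']
```

Documents that appear near the top of both lists rise to the top of the fused list, while documents found by only one method still stay in the results.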

When should you use a knowledge graph for RAG?

A knowledge graph is ideal for RAG systems that have structured or interconnected data. It excels in scenarios where the ‘how’ is just as important as the ‘what,’ such as in medical research, financial compliance, analytics, and business data.

A knowledge graph enables multi-hop reasoning by modeling entities such as people, organizations, roles, and products, together with the relationships between them. Because every hop follows an explicit edge, you can trace how an answer was retrieved, not just what was retrieved.
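Multi-hop reasoning can be illustrated with a breadth-first search that returns the chain of edges linking two entities. This is a simplified sketch: the entities and relations are invented, and a graph database would express the same idea through its own query language.

```python
from collections import deque

# Hypothetical edges, invented for illustration.
EDGES = [
    ("Alice", "WORKS_AT", "Acme"),
    ("Acme", "SUPPLIES", "Globex"),
    ("Globex", "REGULATED_BY", "FinAuthority"),
]


def find_path(edges, start, goal):
    """Breadth-first search returning the list of edges from start to goal, or None."""
    adjacency = {}
    for subject, relation, obj in edges:
        adjacency.setdefault(subject, []).append((relation, obj))

    queue = deque([(start, [])])
    visited = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        for relation, neighbor in adjacency.get(node, []):
            if neighbor not in visited:
                visited.add(neighbor)
                queue.append((neighbor, path + [(node, relation, neighbor)]))
    return None


path = find_path(EDGES, "Alice", "FinAuthority")
for subject, relation, obj in path:
    print(f"{subject} -{relation}-> {obj}")
```

The returned path doubles as the explanation: it lists each hop that connects the question to the answer.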

When is a vector database better for RAG?

A vector database is a good option when you’re dealing with unstructured or high-volume semantic data. This can include documents, support tickets, research papers, and so on.

In all situations where meaning matters more than the relationships, you want to use a vector database for RAG.

Since it stores the data in the form of vector embeddings, the retriever can use semantic similarity and deliver the relevant information you’re looking for.

All of this makes vector databases the go-to option for search and summarization. They also tend to scale more easily than knowledge graphs and typically return results in milliseconds.

What are the key differences between knowledge graphs and vector databases?

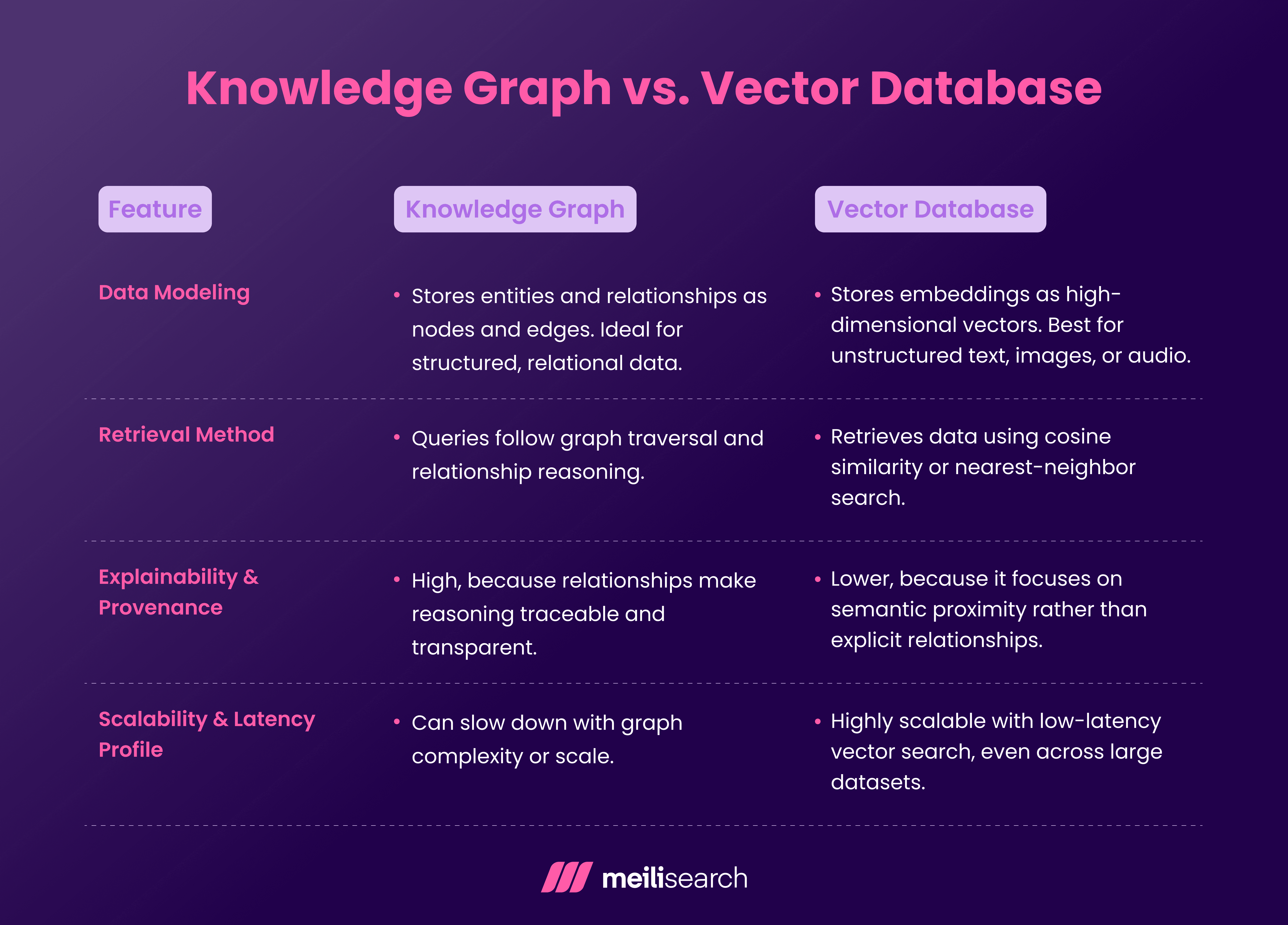

If you’re looking for the main difference between knowledge graphs and vector databases, you’ll find it in how they model and retrieve information.

Let’s take a look at some specifics for both.

Need reasoning and traceability? Knowledge graphs are your best bet.

On the other hand, if you want semantic matching and scalability, go with vector databases.

That said, modern RAG systems often combine the best of both worlds for quick recall and solid reasoning.

What are the advantages of using knowledge graphs in RAG?

Knowledge graphs provide the structure and reasoning that enable RAG systems to understand how data points are connected, rather than treating each point in isolation.

The benefits of employing knowledge graphs in RAG include:

- Relational reasoning on point: Knowledge graphs capture relationships between entities and are helpful in domain-specific contexts such as legal or medical research.

- Easy to explain: Developers enjoy the traceability of knowledge graphs through nodes and edges because it gives results that can be audited.

- Upholding the context: Data linked through knowledge graphs helps the LLM keep context coherent across later prompts or context windows.

- Semantic precision: Because retrieval follows explicit relationships, knowledge graphs return fewer loosely related results, which reduces noise and improves retrieval quality.

What are the advantages of using vector databases in RAG?

Here’s why RAG pipelines rely heavily on vector databases:

- Effortless scalability: Vector databases can index millions of embeddings, and approximate nearest neighbor indexes keep latency low as the dataset grows.

- High speed and performance: Vector databases typically deliver results within milliseconds. Approximate indexes trade a small amount of recall for that speed, and the trade-off is usually tunable.

- Easy handling of unstructured data: They’re optimized to work smoothly with text, audio, and even image embeddings. This is particularly useful in chatbots.

- Flexible and low-cost: Their architecture supports easy scaling without complex infrastructure.

Modern retrieval systems, such as Meilisearch, combine vector search, full-text search, and reranking in a single engine. This hybrid approach targets both speed and reasoning for retrieval.

What are the limitations of knowledge graphs in RAG?

Even though they are helpful in specific cases, knowledge graphs have limitations that you need to keep in mind before putting all your retrieval eggs in this basket:

- Hard to develop: As effective as knowledge graphs are, building and maintaining them takes significant time. Development teams need to work on schema design, ontology mapping, and, of course, manual relationship curation.

- Can’t scale as easily: As nodes and edges multiply, multi-hop queries have to visit more of the graph, and these traversals slow retrieval.

- Don’t handle unstructured data well: When the content is text-heavy, it doesn’t fit well into node-edge structures. This narrows recall and can leave responses incomplete.

- Maintenance is expensive: Frequent schema updates and data versioning increase overhead.

What are the limitations of vector databases in RAG?

Vector databases aren’t without drawbacks either. Let’s look at a few:

- No transparency: A similarity score tells you how close two embeddings are, not why. That makes it tough to trace why certain documents were retrieved, and just as tough to justify the outputs built on them.

- Not for complex relationships: Vector databases don’t do well with structured relationships because embeddings don’t explicitly encode entity hierarchies or the relationships between entities.

- Operational complexity: Ever heard of ‘glue code’? That’s what you get when integrating vector databases with retrievers and generators. It leads to a high infrastructure overhead that nobody wants. The best modern hybrid systems have already simplified this. For example, Meilisearch does so by bundling retrieval and generation into a single endpoint, reducing the number of orchestration layers and the number of LLM calls.

How can you combine knowledge graphs and vector databases in RAG?

Combining a knowledge graph and vector database in a single RAG pipeline enables teams to capture both semantic similarity and structured relationships. As a result, you get stronger reasoning and pinpoint precision.

Let’s take the example of Meilisearch. It handles high-recall retrieval and grounding by running a hybrid search. In addition, a knowledge graph layer can be set up to trace entity relationships and perform multi-hop reasoning.

This architecture is useful for enterprise-scale data where both speed and explainability are a must. Meilisearch’s /chat endpoint handles both retrieval and generation in a single call, while the knowledge graph adds a traceability layer on top.
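One way to picture this architecture is as a two-stage lookup: a search step returns relevant passages, and a graph step attaches the known relationships of the entities those passages mention. The sketch below assumes the search hits already come tagged with entity names. The data, the `VECTOR_HITS` structure, and the `expand_with_graph` helper are all hypothetical.

```python
# Hypothetical output of a vector or hybrid search step:
# each hit is (passage text, entities mentioned in the passage).
VECTOR_HITS = [
    ("Acme signed a supply contract in March.", ["Acme"]),
    ("Globex reported record earnings.", ["Globex"]),
]

# Hypothetical knowledge graph: entity -> list of (relation, target) edges.
GRAPH = {
    "Acme": [("SUPPLIES", "Globex"), ("LOCATED_IN", "Berlin")],
    "Globex": [("REGULATED_BY", "FinAuthority")],
}


def expand_with_graph(hits, graph):
    """Attach one-hop graph facts to each retrieved passage."""
    context = []
    for text, entities in hits:
        facts = [
            f"{entity} -{relation}-> {target}"
            for entity in entities
            for relation, target in graph.get(entity, [])
        ]
        context.append({"text": text, "facts": facts})
    return context


context = expand_with_graph(VECTOR_HITS, GRAPH)
for item in context:
    print(item["text"])
    for fact in item["facts"]:
        print("   ", fact)
```

The passages give the LLM broad, fast recall, while the attached facts give it, and anyone auditing the answer, an explicit trail of relationships.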

How does semantic search differ in knowledge graphs and vector databases?

Semantic search behaves differently across these two systems because of how each one represents meaning.

In knowledge graphs, meaning revolves around entities and their relationships. The retrieval then depends on these logical connections and relationships. You’ll find it most useful in domains such as law or healthcare, where there’s no room for error.

In vector databases, semantic search is tied to embeddings, and results are retrieved by comparing how similar those embeddings are. It’s fast and efficient. For example, if a user searches for ‘HR policy’, it can surface relevant documents such as ‘employee handbook’ even though the two share no keywords. You won’t even need to define this relationship.

What are popular vector databases used for RAG?

Let’s explore the most commonly used vector DB tools:

- Meilisearch: The perfect modern hybrid blend. Meilisearch is a search engine with a built-in vector store and hybrid retrieval. It also provides the /chat API, which unifies understanding, reranking, and generation into a single layer. Here’s a not-so-secret fact: it often replaces a standalone vector database in production-grade RAG pipelines.

- Pinecone: A staple in the industry when it comes to managed vector DBs. It’s known for its low latency and high reliability.

- Weaviate: An open-source option that supports hybrid search and schema-based reasoning. Plus, it integrates with most major embedding models.

- Qdrant: Qdrant is popular because it is lightweight and developer-friendly. It’s been optimized primarily for real-time similarity search.

- Milvus: This one has been designed specifically for enterprise workloads with a distributed architecture and high-throughput performance.

Examples of knowledge graph platforms for RAG

Let’s now look at some examples of knowledge graph platforms that have been popular with development teams.

- Neo4j: One of the most widely used graph databases. It stores entities and relationships as a property graph and is queried with Cypher, which makes it a strong option for relationship-heavy reasoning.

- Amazon Neptune: Amazon Neptune is a fully managed graph service, optimized for RDF and property graph models.

- Ontotext GraphDB: As the name suggests, this one was designed for ontology-driven graphs and semantic search. It is widely used in sectors such as publishing and life sciences.

- ArangoDB: A multi-model database supporting both graph and document queries for flexible, hybrid RAG applications.

How do you choose between a knowledge graph and a vector database for RAG?

The perfect foundation for your RAG system depends on your data structure, reasoning needs, and operational goals. Here are the factors you must consider before finalizing this decision:

- Data type: The data you’re working with is the main factor that determines the foundation you need. If your data is structured, go for a knowledge graph. If it’s unstructured, go with a vector DB.

- Explainability: Choose a knowledge graph when you need traceability and reasoning paths. Vector databases excel when you only need similarity-based retrieval.

- Scale and performance: Vector databases handle massive datasets and low-latency retrieval better. Knowledge graphs give up retrieval speed but make up for it with deeper reasoning and richer context.

- Maintenance and integration: Knowledge graphs require schema definition and maintenance, whereas vector databases integrate easily into modern AI pipelines.

If you want a deeper understanding of RAG, here are the different types of RAG to know, along with the RAG techniques and tools that drive the modern AI wave.

Choosing the right path: knowledge graph vs. vector database for RAG

Modern RAG systems are as effective as they are because of the foundations on which they are built. Both knowledge graphs and vector databases are a part of this foundation.

The choice between them will always depend on your needs, and most teams today lean toward a hybrid approach to better prepare for future needs.