Introducing Meilisearch Chat: conversational search for your data

Turn your Meilisearch index into a conversational AI experience with a single /chat endpoint. Faster launches, lower costs, and direct answers your users actually want.

We're kicking off Launch Week with something we've been excited to share: Meilisearch Chat, a new way to make your data conversational.

For the first time, you can turn your existing Meilisearch index into an AI-powered chat experience – without complex infrastructure, separate vector databases, or months of custom development.

Search is becoming a conversation

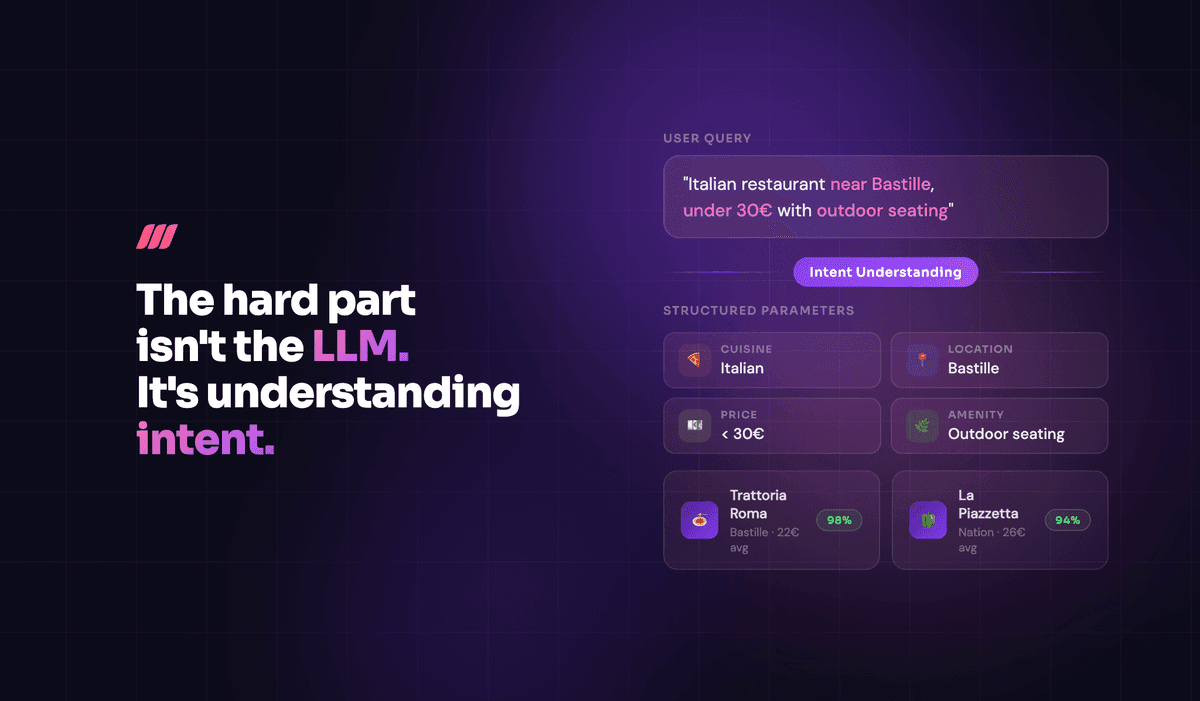

Your users and buyers don't want to search anymore – they want to ask. They expect apps, documentation, and internal tools to understand natural language and give them direct answers, not endless lists of results.

Until now, that's been really hard to deliver. Traditional conversational AI required juggling multiple LLM calls, vector databases, reranking pipelines, and endless tuning. The complexity alone made it expensive and slow, often taking months just to get a working prototype.

Meilisearch Chat changes all of that.

One API call, complete conversational AI

The new /chat endpoint brings AI-native search to your Meilisearch data through a single API call. It automatically handles the entire Retrieval-Augmented Generation (RAG) workflow, from understanding the query to generating the response.

Here's what you get:

Conversational AI for search – Users ask natural-language questions and get precise, contextual answers from your Meilisearch index.

Built-in RAG capabilities – Retrieves relevant documents from your index, uses them as factual context, and generates responses grounded in your data. No extra vector database, reranker, or separate AI service required.

Native integration – Runs directly on your Meilisearch instance with a single, OpenAI-compatible endpoint.
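To make the RAG steps above concrete, here is a purely conceptual Python sketch of "retrieve, add context, generate". It is not Meilisearch's implementation: the `Doc` type, the toy word-overlap ranking in `retrieve`, and the prompt wording in `build_messages` are all illustrative assumptions, and the final step (sending the messages to an LLM) is left out.

```python
import re
from dataclasses import dataclass


@dataclass
class Doc:
    """Hypothetical document shape, used only for this illustration."""
    title: str
    body: str


def tokenize(text: str) -> set[str]:
    """Lowercase a string and split it into a set of word tokens."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(query: str, docs: list[Doc], limit: int = 2) -> list[Doc]:
    """Toy retrieval step: rank documents by how many query words they share.

    A real search engine uses far better relevance ranking; this stand-in
    only shows where retrieval sits in the RAG flow.
    """
    terms = tokenize(query)
    scored = [(len(terms & tokenize(d.title + " " + d.body)), d) for d in docs]
    matching = [pair for pair in scored if pair[0] > 0]
    matching.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in matching[:limit]]


def build_messages(query: str, hits: list[Doc]) -> list[dict]:
    """Context step: place the retrieved documents in front of the question."""
    context = "\n".join(f"- {d.title}: {d.body}" for d in hits)
    return [
        {"role": "system", "content": "Answer using only this context:\n" + context},
        {"role": "user", "content": query},
    ]


docs = [
    Doc("Return policy", "Items can be sent back within 30 days."),
    Doc("Shipping", "Orders ship within 2 days."),
]
question = "What is the return policy?"
hits = retrieve(question, docs)
messages = build_messages(question, hits)  # a generation step would send these to an LLM
```

With /chat, these steps happen behind the endpoint, so none of this glue code needs to live in your application.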

Less infrastructure. Faster implementation. No compromise on quality or accuracy.

Why this matters for your business

Get to market faster

Meilisearch Chat turns months of AI integration work into days. You can prototype conversational experiences immediately, test them with real users, and iterate quickly without needing a dedicated AI team.

Lower costs, less complexity

We've streamlined the entire RAG pipeline, so you don't need separate LLM orchestration, reranking logic, or vector storage. Fewer moving parts means lower maintenance costs and fewer things that can break.

Better user experiences

Whether it's customer support or internal knowledge bases, your users get direct, reliable answers in context – not just a list of links. That means faster resolution times, higher engagement, and happier users.

Built for real-world use cases

Retail & e-commerce – Help shoppers find exactly what they need through natural conversation.

Customer support – Let users ask questions in plain language and get answers directly from your documentation or help center.

Internal knowledge bases – Turn your company documentation into a chat assistant that answers questions instantly.

Developer docs – Add conversational search to your API or SDK documentation so developers can find what they need faster.

Simple to implement, easy to scale

If you're already using Meilisearch, adding conversational capabilities is straightforward. The /chat endpoint is OpenAI-compatible, which means it works with tools and client libraries built for the OpenAI chat format and fits right into your current workflows.

You can start with your existing indexes – no need to rebuild or re-embed your data.
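As a rough illustration of what "OpenAI-compatible" can mean in practice, the sketch below builds an OpenAI-style chat request using only the Python standard library. The URL, API key, model name, and the `stream` flag are placeholder assumptions, not values confirmed by this post; check the docs for the exact route and settings your instance expects.

```python
import json
import urllib.request


def build_chat_request(
    url: str,
    api_key: str,
    question: str,
    model: str = "gpt-4o-mini",  # assumption: placeholder model name
    stream: bool = True,         # assumption: OpenAI-style streaming flag
) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": question}],
        "stream": stream,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


# Hypothetical values for illustration only.
CHAT_URL = "http://localhost:7700/chat"
request = build_chat_request(CHAT_URL, "YOUR_API_KEY", "What is your return policy?")

# To actually send it against a running instance:
# with urllib.request.urlopen(request) as response:
#     for line in response:
#         print(line.decode("utf-8"), end="")
```

Because the payload follows the familiar `messages` format, an existing OpenAI client library can typically be pointed at the same URL instead of hand-building requests like this.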

A new approach to AI-powered search

With Meilisearch Chat, we're making it simpler to bring conversational AI to your data. Instead of managing multiple services and complex pipelines, you get a complete conversational search experience through one endpoint.

This isn't just another feature – it's a new foundation for more natural, human-like interactions with your data.

Ready to make your search conversational? Explore the docs to learn how to get started, and try /chat today.