Why traditional hybrid search falls short (and how we fixed it)

Meilisearch's innovative scoring system revolutionizes hybrid search by properly combining full-text and semantic search, delivering more relevant results than traditional rank fusion methods.

In Meilisearch v1.6, we introduced hybrid search, which combines the precision of full-text search with the intelligence of AI. This approach is more than another feature: it rethinks how search should work. Today, we'll pull back the curtain on our implementation and show why Meilisearch's approach to hybrid search delivers more relevant results.

Join us as we explore the mechanics of hybrid search, examine conventional implementation methods, and reveal how Meilisearch's innovative solution raises the bar for search relevance.

What is hybrid search?

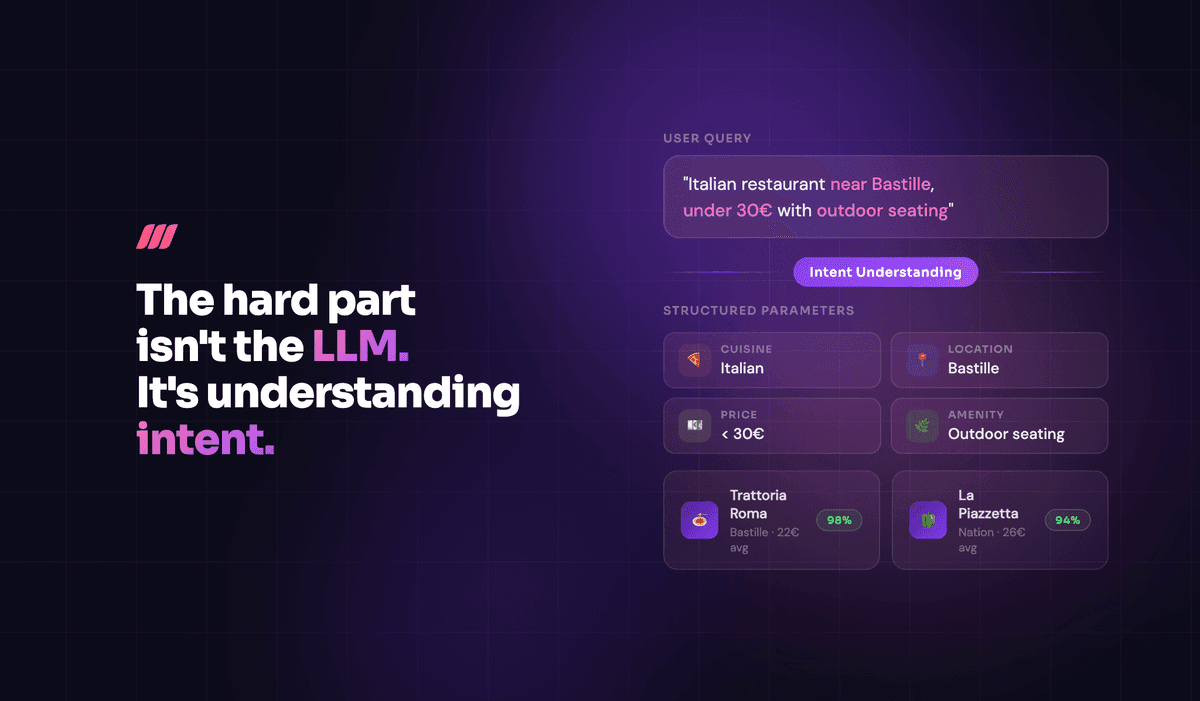

Search is always about finding the documents that are the most relevant to a user’s query.

Hybrid search is about combining the results of two complementary approaches to search: full-text search and semantic search.

Full-text search analyzes the words that appear in a query in a lexical manner, excelling at correcting typos and completing incomplete queries. It is also fast, and its results are easy to understand in retrospect.

Semantic search leverages Large Language Model (LLM) technology to understand the meaning of words in the query. It excels at finding documents that contain synonyms of the words in the user’s query, or concepts similar to them.

Both search approaches build a list of relevant documents according to their respective methods. Hybrid search combines these lists to produce a final list of results.

The key question is: how should we combine them?

The traditional way of mixing results is flawed

The traditional method of mixing the results from both lists is called Reciprocal Rank Fusion (RRF), because it considers the rank of the documents in each list of results, and uses that as a basis to compute the final list of results.

In this context, the rank of the document refers to its position in the list of results, indicating how close it appears to the top.

For example, in a list of 20 documents, the result ranked first can be assigned 20 points, the second 19 points, and so on. RRF assigns points to the documents in each list this way, sums the points for each document, and reorders the documents accordingly. Here’s a detailed explanation of an implementation of RRF on Azure AI Search.
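The point scheme above is a simplification. A commonly used formulation of RRF gives each document `1 / (k + rank)` per list and sums those contributions. The following is a minimal sketch under that assumption; the function name, the document ids, and the constant `k = 60` (a conventional choice) are illustrative and not taken from any particular engine.

```python
from collections import defaultdict


def reciprocal_rank_fusion(result_lists, k=60):
    """Fuse ranked lists of document ids using only their positions."""
    fused = defaultdict(float)
    for results in result_lists:
        for rank, doc_id in enumerate(results, start=1):
            # A document earns more the closer it is to the top of a list.
            fused[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties are broken by document id.
    return sorted(fused, key=lambda doc_id: (-fused[doc_id], doc_id))


# Hypothetical result lists: "c" appears in both, so its points add up.
full_text = ["a", "b", "c"]
semantic = ["c", "d"]
fused_ranking = reciprocal_rank_fusion([full_text, semantic])
print(fused_ranking)
```

Note that the function never looks at how good a match is, only at where it sits in its list.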

However, this method of mixing results is flawed, because to function properly, it assumes that documents from all the lists of results are of comparable relevance: that is, all the documents in all lists are “good documents” that we want to return.

But, like Pokémon, each search method is vulnerable to different types of search queries. Covering for the weakness of the other method is the whole point of mixing them!

So let’s say you’re using a query that is fine for semantic search, but gives underwhelming results for keyword search. Using RRF, you might get some variant of the following:

Reciprocal Rank Fusion is like wasting fine chocolate by mixing it with spoiled milk!

To address this issue, we require additional information about the documents listed in our results.

Ranking is not enough: Scoring documents

The main idea is that you can score how relevant a document is from 0 (not relevant at all) to 1 (“perfect” match), independently of its rank in the list.

Naturally, documents closer to the top of a list are more relevant than those below them, but the score of the first document might be anywhere between 0 and 1. You then compare the scores in both lists and rank the documents across both lists accordingly.
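As a rough illustration of ranking by score rather than by position, here is a small sketch. The function name, the document ids, and the scores are made up, and keeping the best score for a document found by both methods is an assumption of this sketch, not a description of Meilisearch's internals.

```python
def merge_by_score(full_text_hits, semantic_hits, limit=5):
    """Merge two lists of (doc_id, score) pairs, best score first."""
    best = {}
    for doc_id, score in full_text_hits + semantic_hits:
        # Assumption: a document found by both methods keeps its best score.
        if score > best.get(doc_id, -1.0):
            best[doc_id] = score
    ranked = sorted(best.items(), key=lambda item: (-item[1], item[0]))
    return ranked[:limit]


# Hypothetical query that suits semantic search but not keyword search.
full_text_hits = [("kw_1", 0.35), ("kw_2", 0.30), ("kw_3", 0.10)]
semantic_hits = [("sem_1", 0.92), ("sem_2", 0.88), ("kw_1", 0.60)]
merged = merge_by_score(full_text_hits, semantic_hits, limit=4)
print(merged)
```

Here the weak keyword hits sink below the strong semantic hits instead of being interleaved with them, which only works if the two kinds of score are comparable.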

Fortunately, Meilisearch already scores documents for full-text search (for more details about this, refer to our previous article about scoring), and semantic search natively ranks documents depending on how similar they are to the query (although the details are complicated).

So we have a score for both full-text search and semantic search, but can we compare them?

Making the scores comparable

When you have two scores ranging from 0.0 to 1.0, it can be tempting to compare them, but for this to work, similarly relevant documents must be scored similarly with both methods.

One difficulty that arises when performing this comparison is that the models used in semantic search tend to use only a portion of the full vector space they could use, resulting in vectors that have a similarity of at least 0.5 even for texts that appear entirely dissimilar. Furthermore, even very similar texts might be capped in similarity around 0.7, which will cause them to rank lower than good full-text results.

Fortunately, Meilisearch provides the tools to solve that particular problem. Meilisearch allows you to apply an affine transformation to the semantic search scores: the scores are offset by the observed mean similarity between vectors, and the results that are packed around that mean are spread more widely across the range from 0.0 to 1.0. For more details, refer to the documentation on the distribution field.
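To make the idea concrete, here is a minimal sketch of such an affine transformation. It assumes the observed similarities are described by a mean and a standard deviation (`mean` and `sigma`) and retargets them to a distribution centered on 0.5. The target values, the function name, and the sample numbers are illustrative assumptions, not a statement of Meilisearch's exact implementation.

```python
def shift_similarity(score, mean, sigma, target_mean=0.5, target_sigma=0.4):
    """Rescale a raw similarity so that scores spread over [0.0, 1.0]."""
    # Affine map a * score + b that sends (mean, sigma) to the target values.
    factor = target_sigma / sigma
    offset = target_mean - factor * mean
    shifted = factor * score + offset
    # Clamp so the result stays a valid score.
    return min(1.0, max(0.0, shifted))


# Hypothetical model whose similarities cluster between 0.5 and 0.7.
observed_mean, observed_sigma = 0.6, 0.1
for raw in (0.5, 0.6, 0.7):
    print(raw, round(shift_similarity(raw, observed_mean, observed_sigma), 3))
```

With these sample values, a raw similarity at the observed mean lands in the middle of the range, while the "dissimilar" and "very similar" ends of the cluster are pushed toward 0 and 1.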

For models known to Meilisearch (such as OpenAI models), a suitable default distribution is already applied to configured embedders, so users don’t have to configure it themselves!

With this operation, our scores are comparable, and depending on the query, hybrid search will favor whichever method produces the most relevant documents.

What else goes into making hybrid search right?

Scoring documents is a decisive relevancy advantage of Meilisearch over the traditional approach based on Reciprocal Rank Fusion, but there are a lot more aspects involved in making hybrid search right:

- We developed our bespoke vector store, arroy, to leverage our database backend (LMDB) and meet our performance target. Arroy supports Approximate Nearest Neighbor search, multiple distances, native document filters, and binary quantization. Arroy doesn’t block a reader while writing or a writer while reading, and allows for incremental updates.

- We kept flexibility in mind with the mantra of making easy things simple, and hard things possible: users who are new to hybrid search can quickly set up an embedder with our default provider and configuration, while advanced users can carefully optimize their embedding process with multiple supported providers (or even provide their own with REST embedders and user-provided embeddings) and with document templates that customize how documents are turned into text to embed.

- We integrated our best-in-class full-text search engine seamlessly with semantic search, with support for efficient and expressive sorting and filtering of documents.

Search is foundational; it needs to be rock solid to reliably serve the needs of your application. Meilisearch’s hybrid search implementation scores documents to merge results while preserving their relevancy. Scores also power federated search, a generalized version of hybrid search that unifies results from multiple lists of intermediate hits.

Our users use federated search to perform hybrid searches across multiple vector stores, frequently combining image and text search requests.

Why Meilisearch's hybrid search stands apart

Meilisearch's innovative approach to hybrid search goes beyond simply combining full-text and semantic search results.

By implementing a sophisticated scoring system instead of traditional rank fusion, carefully calibrating score comparability, and building a robust technical foundation with arroy, we've created a hybrid search solution that consistently delivers more relevant results.

This thoughtful implementation ensures that the strengths of each search method are preserved while compensating for their weaknesses, setting a new standard for search relevance in modern applications.