LlamaIndex RAG tutorial: step-by-step implementation

Build smarter AI search with LlamaIndex RAG. Learn step-by-step how to create, optimize, and scale reliable retrieval-augmented generation systems.

Most developers spend weeks building retrieval systems from scratch, wrestling with vector databases and embedding models, only to discover their LLM still hallucinates answers.

The irony? While everyone talks about RAG as the solution to grounding AI responses in real data, the implementation complexity often defeats the purpose entirely.

LlamaIndex changes this equation by collapsing what used to be a month-long engineering project into a few dozen lines of code, without sacrificing the sophistication that makes retrieval-augmented generation actually work. Instead of building infrastructure, you can focus on what matters: turning your company's knowledge into intelligent, accurate responses that your users can trust.

Understanding LlamaIndex and RAG for advanced search applications

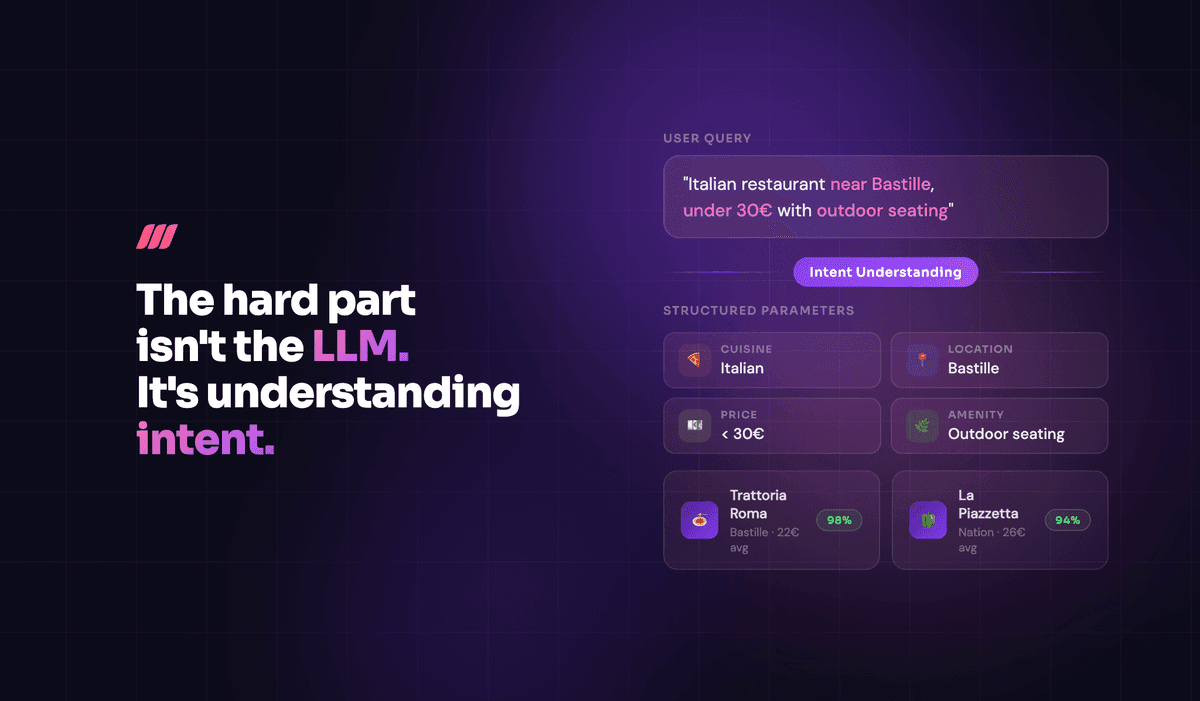

LLMs generate fluent text, but they can state things that are wrong or rely on outdated training data. RAG reduces this risk by retrieving up-to-date, relevant data and supplying it to the model at query time.

Introducing LlamaIndex: your toolkit for building RAG pipelines

If RAG is the strategy for smarter LLM applications, LlamaIndex is the toolkit that makes it happen. Building a RAG system is like preparing a gourmet meal: you need quality ingredients (your data), the right tools (to process and understand that data), and a recipe (the workflow). LlamaIndex provides all of this.

Formerly GPT Index, LlamaIndex is an open-source framework that simplifies building RAG systems. It offers components that manage your data’s journey, from ingestion to indexing to integration with LLMs for answer generation.

Core components of a LlamaIndex RAG system: from raw data to smart answers

Turning a raw document into a context-aware answer with LlamaIndex follows five clear stages:

- Loading: Bring your data into the system. LlamaIndex supports many document loaders, making it easy to import PDFs, text files, websites, APIs, and more.

- Indexing: Convert loaded documents into vector embeddings, numerical representations capturing the meaning of your text. This helps find relevant information even if queries use different words.

- Storing: Save your index and metadata to avoid reprocessing large datasets every time you start your application.

- Querying: When a user asks a question, LlamaIndex transforms the query into an embedding and searches the indexed data for the most relevant text chunks. It can also apply advanced search strategies to improve results.

- Generation: The retrieved context and user query go to an LLM, which crafts a human-like answer grounded in your specific data.

This workflow gives the LLM precise, relevant information to draw on for each query.
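To make the indexing and querying stages more concrete, here is a minimal sketch of the similarity comparison that vector search relies on. The three-dimensional vectors are made-up stand-ins for real embeddings, and `cosineSimilarity` is an illustrative helper, not a LlamaIndex API.

```typescript
// Toy illustration of comparing embeddings. Real embeddings come from an
// embedding model and have hundreds or thousands of dimensions.
function cosineSimilarity(a: number[], b: number[]): number {
  if (a.length !== b.length) throw new Error("Vectors must have equal length");
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) return 0; // avoid dividing by zero
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Hypothetical vectors: the query points in nearly the same direction as
// the first chunk, so that chunk scores higher even without shared keywords.
const queryVector = [0.9, 0.1, 0.0];
const chunkAboutSearch = [0.8, 0.2, 0.1];
const chunkAboutBilling = [0.0, 0.1, 0.9];

const searchScore = cosineSimilarity(queryVector, chunkAboutSearch);
const billingScore = cosineSimilarity(queryVector, chunkAboutBilling);
console.log({ searchScore, billingScore });
```

The chunk whose vector points in the same direction as the query gets the higher score, which is why semantically related text can match even when the wording differs.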

Key benefits of using LlamaIndex for RAG development

Using LlamaIndex for RAG projects streamlines development. It simplifies complexity by abstracting data connection, transformation, and retrieval details. This lets developers build sophisticated RAG applications with less boilerplate code.

LlamaIndex’s modular design lets you swap components—embedding models, vector stores, or LLMs—to fit your needs and budget. This flexibility is crucial as AI technology evolves.

For tech leaders and developers, this means faster iteration, more reliable applications, and the ability to deliver intelligent, data-driven experiences.

Building your foundational LlamaIndex RAG pipeline: a step-by-step guide

Let’s build a LlamaIndex RAG system that answers questions using your own documents. We’ll go step by step, from project setup to asking your first queries. This guide uses Meilisearch documentation files as an example, but you can apply these steps to any documents.

Setting up your development environment for a LlamaIndex RAG project

Start by preparing a Node.js environment. Make sure you have Node.js version 18 or higher (20+ recommended). Check your version with:

node -v

Create a new project directory and initialize it:

    mkdir rag-tutorial
    cd rag-tutorial
    npm init -y

This creates a folder named rag-tutorial, moves you into it, and sets up a basic Node.js project.

Update your package.json to use modern JavaScript modules and TypeScript. It should include:

- "type": "module" for ES module syntax

- Scripts to run TypeScript files with tsx

Example:

    {
      "name": "rag-tutorial",
      "version": "1.0.0",
      "type": "module",
      "main": "rag-llamaindex.ts",
      "scripts": {
        "start": "tsx rag-llamaindex.ts",
        "interactive": "tsx interactive-rag.ts",
        "test-setup": "tsx test-setup.ts"
      }
    }

Install the necessary npm packages:

npm install llamaindex @llamaindex/openai @llamaindex/readers dotenv tsx

Note: tsx is optional on recent Node.js releases. Node.js v23.6.0 and later enable built-in type stripping by default, so they can run most TypeScript files directly without manual transpilation.

Each package serves a purpose:

- llamaindex: Core LlamaIndex.TS library

- @llamaindex/openai: Connects to OpenAI models for text generation and embeddings

- @llamaindex/readers: Tools for reading data from various sources, including SimpleDirectoryReader

- dotenv: Loads environment variables from a .env file to keep API keys secure

- tsx: Runs TypeScript files directly in Node.js

Set up your OpenAI API key by creating a .env file in your project root:

OPENAI_API_KEY=your_actual_openai_key_here

Replace the placeholder with your real key, and keep the .env file out of version control so your credentials stay private.
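A missing or empty key usually surfaces later as a confusing API error, so it can help to check for it at startup. The helper below is a hypothetical sketch (`requireEnv` is not part of dotenv or LlamaIndex); it takes the environment as a plain object so it stays easy to test.

```typescript
// Hypothetical helper: fail fast with a clear message when a variable is unset.
type Env = Record<string, string | undefined>;

function requireEnv(env: Env, name: string): string {
  const value = env[name];
  if (value === undefined || value.trim() === "") {
    throw new Error(`Missing environment variable: ${name}`);
  }
  return value;
}

// In the tutorial project, after `import "dotenv/config"`, this would be
// called as:
//   const apiKey = requireEnv(process.env, "OPENAI_API_KEY");
```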

Create folders for your data and index storage:

mkdir data storage

- data will hold your documents

- storage will save the processed index

Add a tsconfig.json file for TypeScript configuration:

    {
      "compilerOptions": {
        "target": "ES2022",
        "module": "ESNext",
        "moduleResolution": "node",
        "allowSyntheticDefaultImports": true,
        "esModuleInterop": true,
        "strict": true,
        "skipLibCheck": true
      }
    }

With this setup, your environment is ready. Next, bring in your data.

Ingesting and loading diverse data sources effectively with LlamaIndex

Now, feed your LlamaIndex RAG system some knowledge. For this tutorial, we use Markdown (.mdx) files from Meilisearch documentation, but you can use PDFs, web pages, or database entries.

Copy your .mdx files into the data directory. For example:

    # Copy documentation files to the data directory
    find ../documentation -name "*.mdx" -exec cp {} ./data/ \;

Ensure your data/ folder contains the files you want to index.

Use SimpleDirectoryReader from @llamaindex/readers/directory to read these files. In your main TypeScript file (e.g., rag-llamaindex.ts), import and use it like this:

    import "dotenv/config";
    import { SimpleDirectoryReader } from "@llamaindex/readers/directory";

    const reader = new SimpleDirectoryReader();
    const documents = await reader.loadData("./data");
    console.log(`📄 Loaded ${documents.length} documents.`);

Each file becomes a Document object containing its content and metadata.

LlamaIndex supports many data formats. You can combine multiple sources into a single index to create a comprehensive knowledge base.

With your documents loaded, you’re ready to index them.

Indexing data for optimal retrieval: creating your first LlamaIndex vector store

Make your documents searchable by meaning, not just keywords. Vector indexing organizes text using numerical vectors that capture semantic meaning.

Set it up like this:

    import { VectorStoreIndex, storageContextFromDefaults, Settings } from "llamaindex";
    import { openai, OpenAIEmbedding } from "@llamaindex/openai";

- VectorStoreIndex: Manages the vector index

- storageContextFromDefaults: Handles where your index is saved

- Settings: Configures language and embedding models

- openai and OpenAIEmbedding: Set up OpenAI’s LLM and embedding models

Configure your models:

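    Settings.llm = openai({
      model: "gpt-4o",
      temperature: 0.0,
      maxTokens: 1024
    });
    Settings.embedModel = new OpenAIEmbedding({
      model: "text-embedding-ada-002",
    });

A temperature of 0.0 makes answers more deterministic and keeps them close to the retrieved context, maxTokens caps the response length, and text-embedding-ada-002 produces the embeddings used for retrieval.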

Build your index with:

    console.log("🔄 Creating vector embeddings... (this may take a few minutes)");
    const storageContext = await storageContextFromDefaults({ persistDir: './storage' });
    const index = await VectorStoreIndex.fromDocuments(documents, { storageContext });
    console.log("💾 Index built and saved to storage.");

This process:

- Splits documents into smaller chunks for better retrieval

- Converts each chunk into a vector using the embedding model

- Stores vectors and text chunks in the index

- Saves the index to the ./storage directory for future use
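The first step in the list above, splitting documents into chunks, can be sketched as follows. This is a simplified word-based chunker written for illustration: `chunkWords` is a hypothetical helper, and LlamaIndex's own splitters work on tokens and sentence boundaries rather than raw words.

```typescript
// Simplified word-based chunker with overlap between neighbouring chunks.
// Assumption: words are a rough stand-in for tokens.
function chunkWords(text: string, chunkSize: number, overlap: number): string[] {
  if (chunkSize <= 0) throw new Error("chunkSize must be positive");
  if (overlap < 0 || overlap >= chunkSize) {
    throw new Error("overlap must be >= 0 and smaller than chunkSize");
  }
  const words = text.split(/\s+/).filter((word) => word.length > 0);
  const chunks: string[] = [];
  const step = chunkSize - overlap; // how far the window advances each time
  for (let start = 0; start < words.length; start += step) {
    chunks.push(words.slice(start, start + chunkSize).join(" "));
    if (start + chunkSize >= words.length) break; // the window reached the end
  }
  return chunks;
}

const sampleChunks = chunkWords("a b c d e f g h", 4, 1);
console.log(sampleChunks); // ["a b c d", "d e f g", "g h"]
```

The overlap repeats the last word of one chunk at the start of the next, so a sentence that straddles a boundary is less likely to lose its context.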

Depending on your data size, this may take a few minutes. Once done, your system can answer questions.

Implementing the query engine and generating responses with your basic LlamaIndex RAG

With your data indexed, ask questions and get intelligent answers. LlamaIndex’s query engine handles this smoothly.

Get your VectorStoreIndex and create a query engine:

const queryEngine = index.asQueryEngine({ similarityTopK: 5 });

The similarityTopK parameter controls how many relevant chunks the engine retrieves per query.
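Conceptually, a top-K cutoff means "sort candidates by similarity and keep the best K". A minimal sketch under that assumption follows; `ScoredChunk` and `topK` are illustrative names, and the scores are invented.

```typescript
interface ScoredChunk {
  text: string;
  score: number; // similarity to the query; higher means more similar
}

// Keep the k highest-scoring chunks without mutating the input array.
function topK(chunks: ScoredChunk[], k: number): ScoredChunk[] {
  return [...chunks]
    .sort((a, b) => b.score - a.score)
    .slice(0, Math.max(0, k));
}

// Hypothetical scores for four chunks.
const candidates: ScoredChunk[] = [
  { text: "Installation guide", score: 0.41 },
  { text: "What is Meilisearch", score: 0.93 },
  { text: "Feature overview", score: 0.88 },
  { text: "Changelog", score: 0.12 },
];

const best = topK(candidates, 2);
console.log(best.map((chunk) => chunk.text)); // ["What is Meilisearch", "Feature overview"]
```

A larger K gives the LLM more context to work with but also increases prompt size and cost.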

Ask a question like this:

    const question = "What is Meilisearch and what are its main features?";
    const result = await queryEngine.query({ query: question });
    console.log("📖 Answer:");
    console.log(result.toString());

Behind the scenes:

- Your question converts into a vector

- The engine finds the most relevant document chunks

- These chunks form the context

- LlamaIndex builds a prompt including your question and context

- The LLM generates an answer grounded in your data

- The answer is returned and displayed

[Screenshot omitted: example of the final result against the Meilisearch documentation]

Because the prompt contains the retrieved chunks, the answer is grounded in your documents rather than only in the LLM’s pre-trained knowledge.
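The prompt-building step in the list above can be sketched as plain string assembly. The template wording here is an assumption made for illustration; LlamaIndex ships its own default prompts, which differ from this one.

```typescript
// Illustrative template only, not LlamaIndex's built-in prompt.
function buildPrompt(question: string, contextChunks: string[]): string {
  const context = contextChunks
    .map((chunk, i) => `[${i + 1}] ${chunk}`) // number chunks so they can be referenced
    .join("\n");
  return [
    "Answer the question using only the context below.",
    "If the context does not contain the answer, say so.",
    "",
    "Context:",
    context,
    "",
    `Question: ${question}`,
  ].join("\n");
}

const prompt = buildPrompt("What is Meilisearch?", [
  "Meilisearch is an open-source search engine.",
  "It offers typo tolerance and filtering.",
]);
console.log(prompt);
```

Instructing the model to rely only on the supplied context is what ties the generated answer to the retrieved chunks.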

Now you have a working LlamaIndex RAG pipeline. Next, let’s improve efficiency for repeated use.

Persisting and efficiently reusing your LlamaIndex for subsequent RAG operations

LlamaIndex can persist the index, saving time and cost. Indexing involves chunking documents and making API calls for embeddings, which can be resource-intensive. You don’t want to repeat this every time you run your app.

Persistence relies on the storageContext, typically wrapped in a helper such as a loadOrBuildIndex function that loads a saved index when one exists and builds it otherwise. When building the index:

    const storageContext = await storageContextFromDefaults({ persistDir: './storage' });
    const index = await VectorStoreIndex.fromDocuments(documents, { storageContext });

This saves the index to ./storage.

On later runs, try loading the saved index first:

    try {
      const storageContext = await storageContextFromDefaults({ persistDir: './storage' });
      const index = await VectorStoreIndex.init({ storageContext });
      console.log("✅ Loaded existing index from storage.");
    } catch (error) {
      console.log("📚 Building new index from documentation files...");
      // ...build logic
    }

If a saved index exists, it loads in a fraction of the time a rebuild takes, with no need to reprocess documents or recompute embeddings.

Benefits include:

- Cost savings by avoiding repeated embedding API calls

- Time savings since loading is faster than rebuilding

- Efficiency as heavy processing happens only once

- Automatic management with minimal effort

This persistence makes your LlamaIndex RAG system practical and efficient, ensuring your initial processing investment pays off over time.
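The tutorial mentions a loadOrBuildIndex function without showing it. The sketch below captures the general load-or-build pattern, not the author's implementation. The two callbacks are placeholders: in this project `load` would wrap `VectorStoreIndex.init` and `build` would wrap `VectorStoreIndex.fromDocuments`, and it assumes `load` throws when nothing has been persisted yet.

```typescript
// Generic load-or-build helper. Returning the value from the function also
// avoids the scoping problem of declaring `index` inside a try block.
async function loadOrBuild<T>(
  load: () => Promise<T>,
  build: () => Promise<T>,
): Promise<{ value: T; source: "loaded" | "built" }> {
  try {
    return { value: await load(), source: "loaded" };
  } catch {
    // Loading failed, for example because nothing has been persisted yet.
    return { value: await build(), source: "built" };
  }
}

// Usage with stand-in callbacks instead of real index operations.
loadOrBuild(
  async () => "index from ./storage",
  async () => "freshly built index",
).then((result) => console.log(result.source, result.value));
```

Keeping the fallback in one helper means the rest of the application only ever sees a ready index, regardless of how it was obtained.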

With these steps, you’ve built a foundational LlamaIndex RAG pipeline: from setup and data ingestion to indexing, querying, and efficient reuse. This base is ready for further enhancements as your needs grow.

Optimizing your LlamaIndex RAG for production-level performance and relevance

You’ve built your foundational LlamaIndex RAG pipeline. Now, refine every step—from data preparation to delivering insights—to maximize performance, relevance, and efficiency. This process requires understanding how all components work together to create a system that is not only smart but truly effective.

Master advanced data chunking strategies for superior LlamaIndex RAG context

Think of your documents as vast libraries and chunks as the pages or paragraphs your LLM reads. Giving the LLM an entire book for one question is overwhelming, but a single, out-of-context sentence isn’t enough. Chunking finds the “just right” snippet for effective understanding.

Summary retrieval

Default chunking (a fixed size, 1024 tokens in LlamaIndex unless you change it) is a good start, but production systems need more nuance. One effective method is to decouple the chunks used for retrieval from the chunks used for synthesis:

- Embed concise summaries of each feature section for broad retrieval.

- When a query matches a summary, pull detailed, sentence-level chunks from that section for the LLM to synthesize the answer.

This approach is like using a table of contents to find the right chapter, then reading specific paragraphs within it.
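A minimal sketch of this two-stage idea: match the query against section summaries, then hand back that section's detailed chunks. The `Section` shape is hypothetical, and word overlap stands in for the embedding comparison a real system would use.

```typescript
interface Section {
  title: string;
  summary: string;        // short text used for the first, broad match
  detailChunks: string[]; // fine-grained text passed on for synthesis
}

function tokenize(text: string): string[] {
  return text.toLowerCase().match(/[a-z0-9]+/g) ?? [];
}

// Stand-in scorer: number of distinct query words found in the summary.
function wordOverlap(query: string, summary: string): number {
  const summaryWords = new Set(tokenize(summary));
  const queryWords = Array.from(new Set(tokenize(query)));
  return queryWords.filter((word) => summaryWords.has(word)).length;
}

// Stage 1 picks the best-matching summary; stage 2 returns its detail chunks.
function retrieveDetailChunks(query: string, sections: Section[]): string[] {
  let best: Section | undefined;
  let bestScore = 0;
  for (const section of sections) {
    const score = wordOverlap(query, section.summary);
    if (score > bestScore) {
      best = section;
      bestScore = score;
    }
  }
  return best ? best.detailChunks : [];
}

const docs: Section[] = [
  {
    title: "Typo tolerance",
    summary: "How typo tolerance handles misspelled search queries",
    detailChunks: ["Typo tolerance is enabled by default.", "It can be tuned per index."],
  },
  {
    title: "Filtering",
    summary: "Filtering and faceted search on document attributes",
    detailChunks: ["Filters narrow results by attribute values."],
  },
];

console.log(retrieveDetailChunks("How does typo tolerance work?", docs));
```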

Structured retrieval

Structured retrieval adds another layer. If your data has metadata—such as product categories, version numbers, or author tags—use it to narrow the search space before semantic search begins. For example, if a user asks about a feature in “version 3.0,” filter to only v3.0 documents. This pre-filtering makes vector search faster and more focused.
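The pre-filtering step can be sketched as a plain metadata match that runs before any similarity scoring. The `DocChunk` shape and `filterByMetadata` helper are assumptions for illustration, not LlamaIndex types.

```typescript
interface DocChunk {
  text: string;
  metadata: Record<string, string>;
}

// Keep only chunks whose metadata matches every required key/value pair.
function filterByMetadata(
  chunks: DocChunk[],
  required: Record<string, string>,
): DocChunk[] {
  return chunks.filter((chunk) =>
    Object.entries(required).every(([key, value]) => chunk.metadata[key] === value),
  );
}

// Hypothetical corpus with a version tag on each chunk.
const corpus: DocChunk[] = [
  { text: "Feature X works like this in 3.0", metadata: { version: "3.0", category: "features" } },
  { text: "Feature X worked differently in 2.0", metadata: { version: "2.0", category: "features" } },
  { text: "Upgrade notes for 3.0", metadata: { version: "3.0", category: "guides" } },
];

// Only the v3.0 feature chunk would go on to vector search.
const v3Features = filterByMetadata(corpus, { version: "3.0", category: "features" });
console.log(v3Features.map((chunk) => chunk.text));
```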

Also, use dynamic chunk retrieval. Different questions require different amounts of context:

- Simple factual queries may need only a few small chunks.

- Complex comparative questions benefit from more or larger chunks.

Adjusting the number and size of retrieved chunks based on query complexity ensures the LLM gets the optimal context, improving answers and reducing token usage.
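One simple way to approximate this is a heuristic that picks the number of chunks from the shape of the query. Everything in this sketch is an assumption to be tuned against real traffic: the word list, the 20-word threshold, and the values 3 and 8. The result would be passed as similarityTopK when creating the query engine.

```typescript
// Hypothetical heuristic for choosing how many chunks to retrieve.
const COMPARATIVE_WORDS = new Set([
  "compare", "comparison", "difference", "differences", "versus", "vs",
]);

function chooseTopK(query: string): number {
  const words = query.toLowerCase().match(/[a-z0-9]+/g) ?? [];
  const looksComparative = words.some((word) => COMPARATIVE_WORDS.has(word));
  const isLong = words.length > 20;
  return looksComparative || isLong ? 8 : 3;
}

console.log(chooseTopK("What is Meilisearch?"));              // 3
console.log(chooseTopK("Compare filtering versus faceting")); // 8
```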

Enhance LlamaIndex RAG retrieval with reranking and hybrid search techniques

After retrieving candidate chunks using vector similarity (often controlled by similarityTopK), the process isn’t complete. Vector search finds semantically similar content, but “similar” doesn’t always mean “most relevant.”

Hybrid search combines semantic search with keyword-based search. For example, if a user looks for documentation on a specific API error code like “ERR_CONN_RESET_005,” pure vector search might find general connection error documents. A hybrid approach uses the exact error code as a keyword to pinpoint documents containing that string, then applies semantic similarity to understand context. This blend often yields more precise results, especially for queries with specific entities or codes.
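A weighted blend of a keyword score and a vector score is one common way to implement this, and it is the variant sketched here. The `Candidate` shape, the `alpha` weight, and the example scores are all illustrative assumptions.

```typescript
interface Candidate {
  text: string;
  vectorScore: number; // assumed to be normalized to the range 0..1
}

// Keep underscores so identifiers such as error codes stay one token.
function tokenize(text: string): string[] {
  return text.toLowerCase().match(/[a-z0-9_]+/g) ?? [];
}

// Fraction of distinct query tokens that appear verbatim in the text.
function keywordScore(query: string, text: string): number {
  const queryTokens = Array.from(new Set(tokenize(query)));
  if (queryTokens.length === 0) return 0;
  const textTokens = new Set(tokenize(text));
  const matched = queryTokens.filter((token) => textTokens.has(token)).length;
  return matched / queryTokens.length;
}

// alpha weights the semantic score; (1 - alpha) weights the keyword score.
function hybridRank(query: string, candidates: Candidate[], alpha = 0.5): Candidate[] {
  const score = (c: Candidate) =>
    alpha * c.vectorScore + (1 - alpha) * keywordScore(query, c.text);
  return [...candidates].sort((a, b) => score(b) - score(a));
}

const ranked = hybridRank("ERR_CONN_RESET_005", [
  { text: "General guide to connection errors and timeouts", vectorScore: 0.82 },
  { text: "ERR_CONN_RESET_005: the server closed the connection", vectorScore: 0.78 },
]);
console.log(ranked[0].text);
```

The general guide has the higher vector score, but the exact match on the error code lifts the second document to the top once the keyword score is blended in.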

Refining the initial set of retrieved chunks is also important. After fetching the top K chunks, you can rerank them based on:

- Direct keyword overlaps.

- Scores from a smaller, faster model assessing relevance to the question.

This ensures the LLM receives the best possible context.
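Reranking can be sketched as a second scoring pass over the retrieved chunks. The scorer is passed in as a function so that it could be keyword overlap, as in this example, or a call to a smaller relevance model. The names `rerank` and `keywordOverlapScorer` are hypothetical.

```typescript
type RelevanceScorer = (question: string, chunk: string) => number;

// Re-score retrieved chunks and keep the best `keep` of them.
function rerank(
  question: string,
  chunks: string[],
  scorer: RelevanceScorer,
  keep: number,
): string[] {
  return chunks
    .map((chunk, index) => ({ chunk, index, score: scorer(question, chunk) }))
    .sort((a, b) => b.score - a.score || a.index - b.index) // ties keep original order
    .slice(0, Math.max(0, keep))
    .map((entry) => entry.chunk);
}

// Example scorer: number of distinct question words that appear in the chunk.
const keywordOverlapScorer: RelevanceScorer = (question, chunk) => {
  const words = (text: string) => text.toLowerCase().match(/[a-z0-9]+/g) ?? [];
  const chunkWords = new Set(words(chunk));
  return Array.from(new Set(words(question))).filter((w) => chunkWords.has(w)).length;
};

const reranked = rerank(
  "reset API key",
  ["Pricing overview", "How to reset an API key", "API reference index"],
  keywordOverlapScorer,
  2,
);
console.log(reranked); // ["How to reset an API key", "API reference index"]
```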

“Multi-step searches” further improve retrieval. An initial query identifies a broad topic or document set, then a focused follow-up query (possibly generated by the LLM) dives deeper into that subset. This coarse-to-fine approach enhances final context quality.

Select optimal embedding models and LLMs for your specific LlamaIndex RAG use case

Choosing the right embedding model and LLM is like selecting the engine and transmission for a car—it affects performance, cost, and suitability. There is no universal best; it depends on your needs.

Experiment with different embedding models and dimensionality. The text-embedding-ada-002 model from OpenAI balances performance and cost well.

However, if your data is specialized—such as legal or medical texts—a domain-specific fine-tuned model may perform better. Higher-dimensional embeddings capture more nuance but increase computational and storage costs, so balance is key.

For the generative LLM, gpt-4o offers a strong mix of cost and quality. For high-volume, low-latency chatbots, smaller, faster, and cheaper LLMs may be more suitable, even if they sacrifice some response nuance. For complex research or analysis, investing in a top-tier model can be worthwhile. Adjust settings like temperature (e.g., 0.0 for factual Q&A) and maxTokens to fine-tune behavior and control costs.

Consider an ecommerce example:

- Use a fast, affordable LLM for simple product questions.

- Use a more powerful model and higher similarityTopK for complex comparisons.

Conditional model selection optimizes both user experience and operational costs.
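A minimal sketch of such routing follows. The classifier is a deliberately naive keyword check, "gpt-4o-mini" is assumed here as the cheaper model (the tutorial itself only uses gpt-4o), and the similarityTopK values are placeholders.

```typescript
type QueryKind = "simple" | "complex";

interface Route {
  model: string;
  similarityTopK: number;
}

// Assumed routing table; adjust models and values to your own budget.
const ROUTES: Record<QueryKind, Route> = {
  simple: { model: "gpt-4o-mini", similarityTopK: 3 },
  complex: { model: "gpt-4o", similarityTopK: 8 },
};

// Naive classifier: treat comparison-style questions as complex.
function classifyQuery(query: string): QueryKind {
  return /\b(compare|versus|vs|difference)\b/i.test(query) ? "complex" : "simple";
}

function selectRoute(query: string): Route {
  return ROUTES[classifyQuery(query)];
}

console.log(selectRoute("Is this jacket waterproof?"));
console.log(selectRoute("Compare the trail shoe versus the road shoe"));
```

In practice the classification step could itself be a small model call, but a rule-based version is easier to reason about when starting out.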

Evaluate your LlamaIndex RAG pipeline with key metrics and benchmarking for success

After refining chunking, retrieval, and model selection, evaluate your system regularly to ensure it performs well.

Focus on these key areas:

- Retrieval quality: Are retrieved chunks relevant? Use human evaluation to review top K documents for sample queries and check if they are on-topic and informative.

- Generation quality: Are LLM answers accurate, coherent, and faithful to context? Avoid hallucinations. Human judgment is crucial early on.

- End-to-end performance: How fast does the system respond? Include embedding, retrieval, and generation time. Latency matters for user-facing apps.

- Cost-effectiveness: Track API calls for embeddings and generation. Check if optimizations like chunking or similarityTopK tuning reduce costs without hurting quality.

For example, a RAG system helping developers navigate a codebase might succeed if:

- Developers spend less time searching for information.

- Answers to technical questions are more accurate.

- Users provide positive feedback.

- Fewer follow-up questions go to human colleagues.

Benchmark against a “golden dataset” of human-verified question-answer pairs to measure progress. Tweaking components like embedding models, chunk size, or prompts should improve these benchmarks.
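A golden dataset can drive a simple retrieval metric such as hit rate: the share of questions for which the expected source appears among the retrieved chunks. In this sketch the `GoldenExample` shape, the source identifiers, and the stand-in retriever are all hypothetical, and the retriever is synchronous for brevity where a real one would be async.

```typescript
interface GoldenExample {
  question: string;
  expectedSource: string; // id of the document that should be retrieved
}

// Returns the source ids of the retrieved chunks for a question.
type Retriever = (question: string) => string[];

// Fraction of examples whose expected source appears in the results.
function hitRate(examples: GoldenExample[], retrieve: Retriever): number {
  if (examples.length === 0) return 0;
  let hits = 0;
  for (const example of examples) {
    if (retrieve(example.question).includes(example.expectedSource)) hits++;
  }
  return hits / examples.length;
}

const golden: GoldenExample[] = [
  { question: "How do I add documents?", expectedSource: "documents.mdx" },
  { question: "How do I configure typo tolerance?", expectedSource: "typo_tolerance.mdx" },
];

// Stand-in retriever that always returns the same two sources.
const fakeRetriever: Retriever = () => ["documents.mdx", "indexes.mdx"];

console.log(hitRate(golden, fakeRetriever)); // 0.5
```

Re-running the same measurement after each change to chunk size, embedding model, or prompts shows whether the change actually helped.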

Your next steps in the RAG revolution

Building a production-ready LlamaIndex RAG system transforms how your applications handle knowledge retrieval and generation, moving them beyond static responses to dynamic, context-aware interactions with your data.

The combination of LlamaIndex's streamlined development experience with robust production patterns creates a foundation that scales from prototype to enterprise deployment.