How to Build RAG Applications Using Ruby: A Developer’s Guide

Discover how to build RAG applications in Ruby with this developer’s guide, covering essentials, tools, step-by-step setup, and how Ruby compares to Python.

Retrieval-augmented generation (RAG) has rapidly become one of the most effective ways to ground AI systems in your own data. While most RAG apps lean on Python, Ruby offers a lightweight path to implementing RAG pipelines without the need for large frameworks.

This guide will walk you through the essentials, from understanding what RAG means to building a working Ruby app.

You’ll discover:

- What RAG is and how it fits into Ruby projects without heavy frameworks.

- Why developers pick Ruby for flexible, lightweight RAG setups.

- Libraries and services that make retrieval and generation work smoothly.

- Clear steps to build a working RAG app using plain Ruby.

- How Ruby’s ecosystem and performance compare with Python’s for RAG development.

- How tools like Meilisearch help you build fast and reliable retrieval layers.

By the end, you’ll have everything you need to develop a functional RAG pipeline in Ruby from scratch.

What is a RAG application in Ruby?

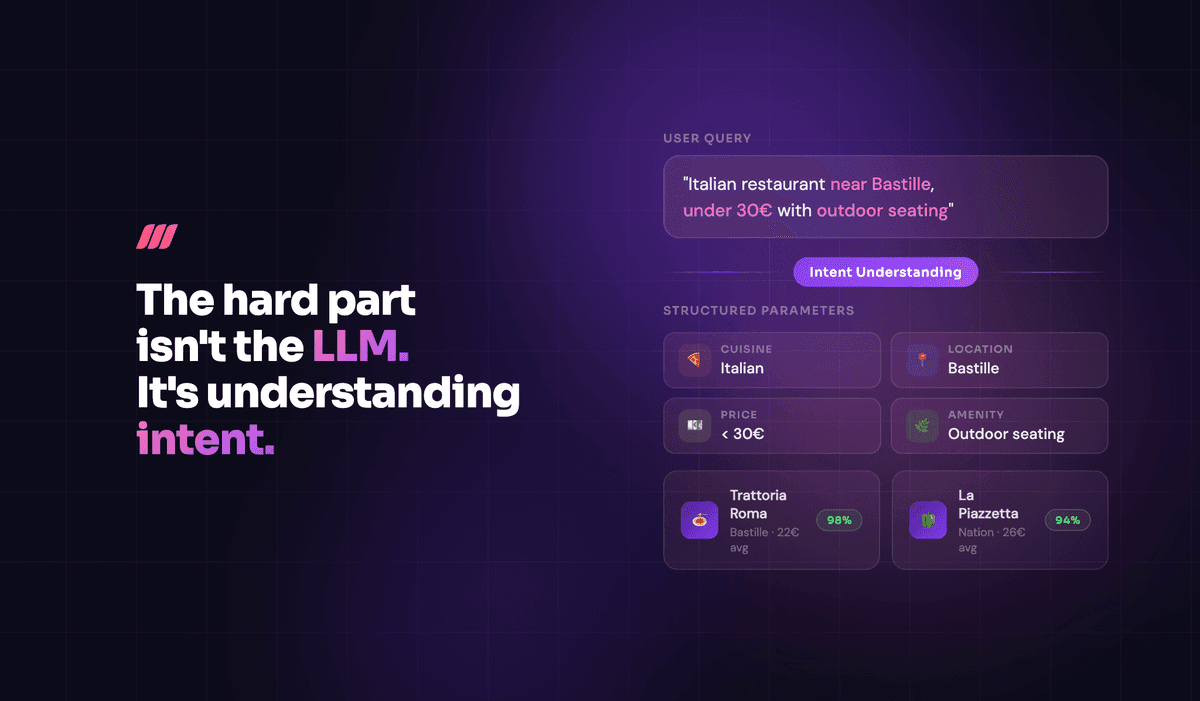

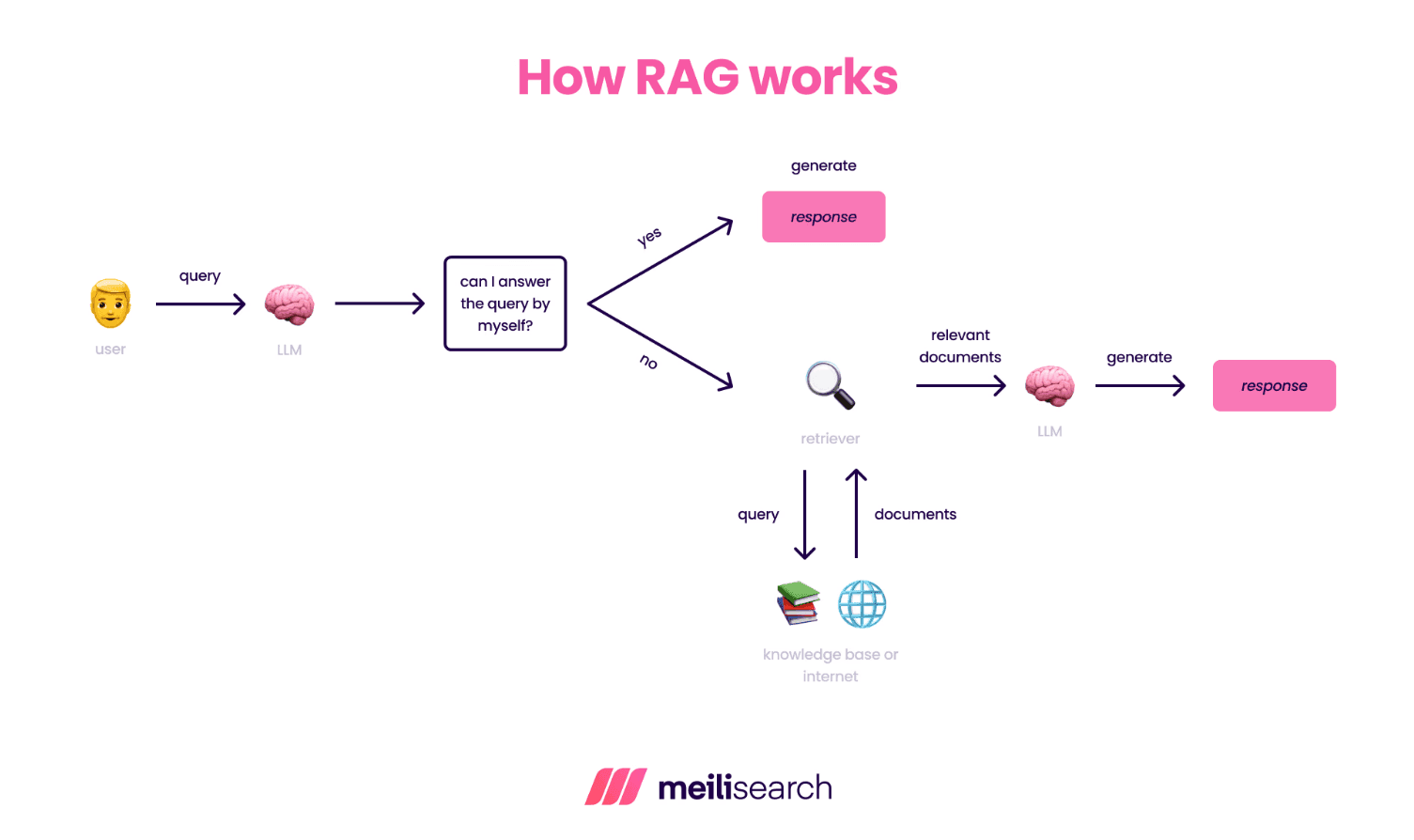

A RAG application in Ruby combines retrieval, augmentation, and generation to create smart, context-aware LLM responses. For details on how it works, you can take a peek at our guide on what RAG is.

In essence, RAG retrieves relevant information from a vector knowledge base, augments a prompt with that data, and then sends it to a large language model (LLM) to generate accurate answers.
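To make the three stages concrete, here is a minimal sketch of that flow in plain Ruby. The method name `rag_answer`, the keyword-overlap retriever, and the echoing generator are all illustrative assumptions; in a real app the retriever would query a search index and the generator would call an LLM API.

```ruby
# Hypothetical three-stage RAG flow. The retriever and generator are injected
# as callables so the sketch runs without any network access.
def rag_answer(query, retriever:, generator:, top_k: 3)
  hits = retriever.call(query).first(top_k)                          # 1. retrieve
  context = hits.map.with_index(1) { |h, i| "[#{i}] #{h}" }.join("\n")
  prompt = "Context:\n#{context}\n\nQuestion: #{query}"              # 2. augment
  generator.call(prompt)                                             # 3. generate
end

# Stand-ins for a real knowledge base, search index, and LLM client.
KNOWLEDGE = [
  "Ruby was created by Yukihiro Matsumoto.",
  "Meilisearch is a search engine."
].freeze

# Naive retriever: keep documents that share at least one word with the query.
retriever = lambda do |q|
  words = q.downcase.split(/\W+/)
  KNOWLEDGE.select { |doc| (doc.downcase.split(/\W+/) & words).any? }
end

# Fake generator: echoes the prompt instead of calling a model.
generator = ->(prompt) { "PROMPT SENT TO LLM:\n#{prompt}" }

puts rag_answer("Who created Ruby?", retriever: retriever, generator: generator)
```

Because both collaborators are passed in, you can swap the toy lambdas for real clients later without touching the flow itself.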

A frequent question is whether you can implement RAG without relying on heavy frameworks. Yes, you can.

Ruby developers can combine embeddings, a search engine, and plain HTTP calls to LLM services. The resulting tools stay lightweight because they need little beyond a few gems and API keys.

The following section explains why Ruby is a suitable option for using RAG.

Why use RAG with Ruby?

In a RAG system, Ruby’s clean, expressive syntax makes retrieval and embedding management relatively easy. Plus, you can connect to LLM APIs without any heavy frameworks.

This guide doesn’t focus on Rails, but many teams already run production systems on Ruby or Rails. Adding RAG capabilities on top of that lets them integrate vector search and generation directly into existing apps without migrating their stack.

Ruby’s ecosystem, although not as mature as Python's, simplifies API calls and background job handling, allowing developers to build lightweight scripts that optimize retrieval, augmentation, and generation.

Next, let’s look at the essential tools you’ll need to build a RAG application in Ruby.

What tools are needed for RAG in Ruby?

To build a RAG application in Ruby, you need a combination of libraries and APIs that cover retrieval, embedding generation, and LLM integration.

While the Ruby ecosystem is still growing, several reliable options are available to set up a complete RAG pipeline, many of which overlap with those discussed in our RAG tools comparison.

Let’s look at the best ones below:

- Langchainrb: A Ruby library that provides building blocks for retrieval and generation workflows. It supports document loading, embedding generation, and basic orchestration.

- Qdrant-ruby: A Ruby client for Qdrant, a popular open-source vector database. It allows you to store and search embeddings efficiently from Ruby code.

- Hugging Face or Transformer APIs: These APIs can be called from Ruby to generate embeddings or run models remotely. They’re useful when you want to avoid maintaining your own ML infrastructure.

- Other vector store gems: Some developers use custom wrappers for databases, like PostgreSQL with pgvector, or they integrate with Meilisearch for hybrid keyword and vector search.

- LLM API gems: These are gems for OpenAI, Anthropic, or other model providers that allow you to generate responses directly from Ruby.

There you have it: a set of tools associated with Ruby that provide the foundation for handling data retrieval and connecting to powerful language models.

Step-by-step guide to building a RAG app in Ruby

This section is your walkthrough of a five-step process that covers data preparation, embedding generation, retrieval setup with Meilisearch, connecting to an LLM, and building a minimal interface to tie it all together.

1. Load and prepare your data

The first step is to bring text into your Ruby environment in a structured way. This could be from local files, APIs, or databases.

You will need to clean the text, split it into logical chunks, and attach metadata (such as titles or URLs) so that retrieval later on is meaningful.

```ruby
# Example: loading and cleaning a text file
file_path = "docs/guide.txt"
content = File.read(file_path).strip

# Split into paragraphs on blank lines and drop empty chunks
chunks = content.split(/\n{2,}/).map(&:strip).reject(&:empty?)

docs = chunks.map.with_index do |chunk, i|
  { id: i, text: chunk, metadata: { source: file_path } }
end
```

This preparation is the foundation that ensures your retriever can quickly find relevant information without having to parse messy inputs later.
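Paragraphs vary a lot in length, so you may also want an upper bound on chunk size. The sketch below is one way to do that; `chunk_text` and its `max_chars` default are hypothetical names and values chosen for illustration, not part of any library.

```ruby
# Hypothetical helper: split text into paragraph-based chunks of at most
# max_chars characters, breaking oversized paragraphs on whitespace.
# A single word longer than max_chars is kept whole in its own chunk.
def chunk_text(text, max_chars: 500)
  paragraphs = text.split(/\n{2,}/).map(&:strip).reject(&:empty?)

  paragraphs.flat_map do |para|
    next [para] if para.size <= max_chars

    pieces = [+""]
    para.split(/\s+/).each do |word|
      # Start a new piece when adding this word (plus a space) would overflow
      if !pieces.last.empty? && pieces.last.size + word.size + 1 > max_chars
        pieces << +""
      end
      pieces.last << " " unless pieces.last.empty?
      pieces.last << word
    end
    pieces
  end
end

sample = "Short intro.\n\n" + ("word " * 30)
chunk_text(sample, max_chars: 40).each { |c| puts "#{c.size} chars: #{c}" }
```

Splitting on whitespace keeps words intact, at the cost of occasionally cutting a sentence in two. If that hurts retrieval quality, splitting on sentence boundaries is a reasonable next step.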

2. Generate embeddings and store them

Once the documents are ready, you’re going to convert each chunk into a numerical embedding. You can use an API like OpenAI or Hugging Face for this purpose.

Next, you will store the resulting vectors alongside the original text in a lightweight database or pair them with Meilisearch for hybrid retrieval.

```ruby
require 'net/http'
require 'json'

# Request an embedding vector for a piece of text from the OpenAI API
def embed(text)
  uri = URI("https://api.openai.com/v1/embeddings")
  headers = {
    "Content-Type" => "application/json",
    "Authorization" => "Bearer #{ENV['OPENAI_API_KEY']}"
  }
  body = { input: text, model: "text-embedding-3-small" }.to_json

  response = Net::HTTP.post(uri, body, headers)
  JSON.parse(response.body)["data"].first["embedding"]
end

# Attach an embedding to every prepared document
embeddings = docs.map { |d| d.merge(embedding: embed(d[:text])) }
```

This gives your pipeline a semantic backbone for accurate retrieval.
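If you keep the vectors in memory or in a small database, semantic retrieval comes down to comparing the query’s embedding with each stored one. The following sketch uses cosine similarity over toy three-dimensional vectors; the `cosine_similarity` and `nearest` helpers are illustrative assumptions, and real embeddings have hundreds or thousands of dimensions.

```ruby
# Cosine similarity between two equal-length numeric vectors.
def cosine_similarity(a, b)
  dot = a.zip(b).sum { |x, y| x * y }
  norm = Math.sqrt(a.sum { |x| x * x }) * Math.sqrt(b.sum { |y| y * y })
  norm.zero? ? 0.0 : dot / norm
end

# Return the top_k records whose :embedding is closest to query_vector,
# each annotated with its similarity :score.
def nearest(records, query_vector, top_k: 3)
  records
    .map { |r| r.merge(score: cosine_similarity(r[:embedding], query_vector)) }
    .sort_by { |r| -r[:score] }
    .first(top_k)
end

# Toy vectors standing in for real embeddings.
records = [
  { id: 0, text: "Install the gem",     embedding: [1.0, 0.0, 0.0] },
  { id: 1, text: "Configure the index", embedding: [0.0, 1.0, 0.0] },
  { id: 2, text: "Install from source", embedding: [0.9, 0.1, 0.0] }
]

nearest(records, [1.0, 0.0, 0.0], top_k: 2).each do |r|
  puts format("%.3f  %s", r[:score], r[:text])
end
```

A linear scan like this is fine for a few thousand chunks. Beyond that, a dedicated vector store or search engine is usually the better choice.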

3. Implement a retriever with Meilisearch

Now, it’s time to index your documents in Meilisearch. Why? To enable fast keyword and hybrid search. Feel free to store metadata alongside text for filtering as well. This gives you typo tolerance, relevance ranking, and blazing-fast queries.

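```ruby
require 'meilisearch'

# Note: newer releases of the gem may prefer the module name Meilisearch
client = MeiliSearch::Client.new('http://127.0.0.1:7700', 'masterKey')

# create_index enqueues an asynchronous task, so fetch the index handle separately
client.create_index('documents', primary_key: 'id')
index = client.index('documents')

# Indexing is asynchronous too: let the task finish before you search
index.add_documents(docs)

# Query example
results = index.search('embedding generation')["hits"]
```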

Developers often underestimate how significantly Meilisearch outperforms manual filtering or SQL full-text search in text retrieval. A clean index makes every later step more reliable.

4. Pass retrieved context to an LLM

Now that you have the retrieved context, let’s move it on to the LLM so the answers are actually grounded in data. You’ll build a prompt that includes the essentials, such as user query, brief instructions, and a trimmed context window.

You can avoid the bloat by focusing on only the most relevant snippets. If you really want to show where the facts came from, just use citation markers.

```ruby
require "openai"

class AnswerGenerator
  def initialize(model: ENV.fetch("OPENAI_MODEL", "gpt-4o-mini"))
    @client = OpenAI::Client.new(access_token: ENV.fetch("OPENAI_API_KEY"))
    @model = model
  end

  def answer(query:, hits:)
    context = build_context(hits, max_chars: 6_000) # keep under model limits
    system_prompt = "You are a helpful assistant. Answer using only the provided context. " \
                    "Cite sources like [doc:index]. If the answer is not in the context, say you do not know."
    messages = [
      { role: "system", content: system_prompt },
      { role: "user", content: "Query: #{query}\n\nContext:\n#{context}\nAnswer:" }
    ]

    @client.chat(parameters: { model: @model, messages: messages, temperature: 0.2 })
           .dig("choices", 0, "message", "content")
  end

  private

  # Concatenate numbered snippets until the character budget is used up
  def build_context(hits, max_chars:)
    buf = +""
    hits.each_with_index do |hit, i|
      # "text" is the field indexed in step 3; "content" and "snippet" are fallbacks
      snippet = hit.dig("_formatted", "text") || hit["text"] ||
                hit["content"] || hit["snippet"] || ""
      block = "[doc:#{i + 1}] #{snippet}\n"
      break if buf.size + block.size > max_chars
      buf << block
    end
    buf
  end
end
```

Several factors enhance the retrieval-to-generation step.

Keep the temperature low so the model sticks to facts. If the context doesn’t support an answer, it should say so clearly. Trim or rerank longer contexts to stay within token limits.
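As a rough illustration of trimming by relevance, the sketch below keeps the highest-scoring snippets that fit a token budget. The four-characters-per-token estimate is a common rule of thumb for English text rather than a real tokenizer, and the `:text` and `:score` keys are assumed field names for this example.

```ruby
# Rough token estimate: about 4 characters per token for English text.
# This is a heuristic assumption, not a real tokenizer.
def estimate_tokens(text)
  (text.length / 4.0).ceil
end

# Keep the highest-scoring snippets that fit within max_tokens.
# Each hit is a hash with hypothetical :text and :score keys.
def select_context(hits, max_tokens:)
  used = 0
  hits.sort_by { |h| -h[:score] }.each_with_object([]) do |hit, kept|
    cost = estimate_tokens(hit[:text])
    next if used + cost > max_tokens # skip snippets that would overflow

    used += cost
    kept << hit
  end
end

hits = [
  { text: "a" * 40,  score: 0.2 },
  { text: "b" * 40,  score: 0.9 },
  { text: "c" * 400, score: 0.5 }
]

select_context(hits, max_tokens: 20).each do |h|
  puts "#{h[:score]}: #{h[:text][0, 10]}..."
end
```

Skipping an oversized snippet instead of stopping at it lets smaller, lower-ranked snippets still use the remaining budget. Whether that trade-off is right depends on how much you trust the ranking.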

And don’t forget to log document IDs for easy traceability and later evaluation.
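One lightweight way to do that is to append a JSON line per answered query. This is a sketch under assumptions: `log_interaction` is a hypothetical helper, and it expects each hit to expose its document ID under the `"id"` key, as the Meilisearch hits in this guide do.

```ruby
require "json"
require "time"

# Append one JSON line per answered query so runs can be audited later.
# io can be any object that responds to puts, such as $stdout or a File.
def log_interaction(io, query:, hits:, answer:)
  entry = {
    at: Time.now.utc.iso8601,
    query: query,
    doc_ids: hits.map { |h| h["id"] },
    answer: answer
  }
  io.puts(JSON.generate(entry))
  entry
end

log_interaction(
  $stdout,
  query: "What is RAG?",
  hits: [{ "id" => 3 }, { "id" => 7 }],
  answer: "Retrieval-augmented generation [doc:1]."
)
```

In a script you might open a file in append mode, for example `File.open("rag.log", "a")`, and pass it in place of `$stdout`.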

5. Build a CLI or service interface

Finally, wrap retrieval and generation in a small interface so you and your teammates can try the flow quickly.

A CLI keeps things dependency-light and works well for scripts, cron jobs, and demos. Add retries and basic timeouts to handle flaky networks or rate limits.

```ruby
require "json"
require "timeout"
require_relative "indexer"           # your Meili client and search method
require_relative "answer_generator"  # the class above

class RagCli
  def initialize
    # Default matches the index name created in step 3
    @indexer = Indexer.new(index: ENV.fetch("MEILI_INDEX", "documents"))
    @llm = AnswerGenerator.new
  end

  def run
    puts "RAG on Ruby. Type a question, or 'exit'."
    loop do
      print "> "
      q = STDIN.gets&.strip
      break if q.nil? || q.downcase == "exit"
      next if q.empty?

      hits = safe { @indexer.search(q, limit: 5) } || []
      if hits.empty?
        puts "No context found."
        next
      end

      response = safe { @llm.answer(query: q, hits: hits) }
      puts "\n#{response}\n\n"
      puts "Sources:"
      hits.each_with_index do |h, i|
        id = h["id"] || h.dig("_formatted", "id") || "unknown"
        puts "  [doc:#{i + 1}] #{id}"
      end
      puts
    end
  end

  private

  # Run the block with a 30-second timeout, retrying with a growing delay
  def safe(max_retries: 3)
    tries = 0
    begin
      Timeout.timeout(30) { return yield }
    rescue => e
      tries += 1
      sleep(0.5 * tries)
      retry if tries < max_retries
      warn "Error: #{e.class} #{e.message}"
      nil
    end
  end
end

RagCli.new.run if $PROGRAM_NAME == __FILE__
```

A few production checks go a long way. Validate API keys at startup and fail with a clear error when one is missing. If your provider allows it, stream tokens so users see answers as they’re generated.
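A startup check for configuration can be as small as the sketch below. `validate_env!` and the `REQUIRED_ENV` list are hypothetical names; extend the list with whatever variables your own setup reads.

```ruby
# Environment variables the scripts in this guide read.
REQUIRED_ENV = %w[OPENAI_API_KEY].freeze

# Raise one clear error listing every missing or blank variable.
# env defaults to the process environment but accepts any hash-like object,
# which makes the check easy to exercise in tests.
def validate_env!(env = ENV, required: REQUIRED_ENV)
  missing = required.select { |key| env[key].to_s.strip.empty? }
  return true if missing.empty?

  raise ArgumentError, "Missing environment variables: #{missing.join(', ')}"
end
```

Calling `validate_env!` as the first line of your entry point means a misconfigured run stops immediately instead of failing halfway through a request.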

Log the essentials, such as queries, selected document IDs, answers, and citations, for easy debugging and evaluation later. Keep prompts within safe length limits and sanitize inputs to reduce the risk of prompt injection.
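Basic input hygiene might look like the following sketch. The `sanitize_query` helper and the 500-character cap are assumptions for illustration. Note that this only normalizes and bounds user input; it does not detect malicious instructions, so you still need a strict system prompt.

```ruby
MAX_QUERY_CHARS = 500 # hypothetical limit; tune it for your use case

# Normalize a user query before it is placed into a prompt.
def sanitize_query(raw, max_chars: MAX_QUERY_CHARS)
  raw.to_s
     .scrub("")                 # drop invalid byte sequences
     .gsub(/[[:cntrl:]]/, " ")  # control characters, including newlines, become spaces
     .squeeze(" ")              # collapse runs of spaces
     .strip[0, max_chars]       # trim the ends and cap the length
end

puts sanitize_query("  What is\n\tRAG?  ")
```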

And if you’re exposing the app over HTTP, run it through a small Rack or Sinatra service with sensible timeouts and rate limits to maintain stability.
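For reference, a Rack application is any object that responds to `call(env)` and returns a status, headers, and body. The sketch below follows that convention using only the standard library; `RagApp` and the `q` query parameter are hypothetical, and the answerer is injected so you can plug in the retrieval and generation code from the earlier steps. Timeouts and rate limits are not shown and would typically be added as middleware or at the proxy level.

```ruby
require "json"
require "uri"

# Minimal Rack-compatible app. The answerer is any callable that takes a
# question string and returns an answer string.
class RagApp
  def initialize(answerer)
    @answerer = answerer
  end

  def call(env)
    params = URI.decode_www_form(env["QUERY_STRING"].to_s).to_h
    question = params["q"].to_s.strip
    return json(400, error: "missing q parameter") if question.empty?

    json(200, question: question, answer: @answerer.call(question))
  rescue StandardError => e
    # Report only the error class so internal details are not leaked
    json(500, error: e.class.name)
  end

  private

  def json(status, payload)
    [status, { "content-type" => "application/json" }, [JSON.generate(payload)]]
  end
end

# Exercise the app directly with a fake environment and a stub answerer.
app = RagApp.new(->(q) { "You asked: #{q}" })
status, _headers, body = app.call("QUERY_STRING" => "q=what+is+rag")
puts status
puts body.first
```

To serve it, a `config.ru` file containing `run RagApp.new(...)` with your real answerer is enough for any Rack-compatible server.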

This completes the core loop: retrieve with Meilisearch, ground the answer with your context, and ship an interface that is easy to run and extend.

How is RAG in Ruby different from Python?

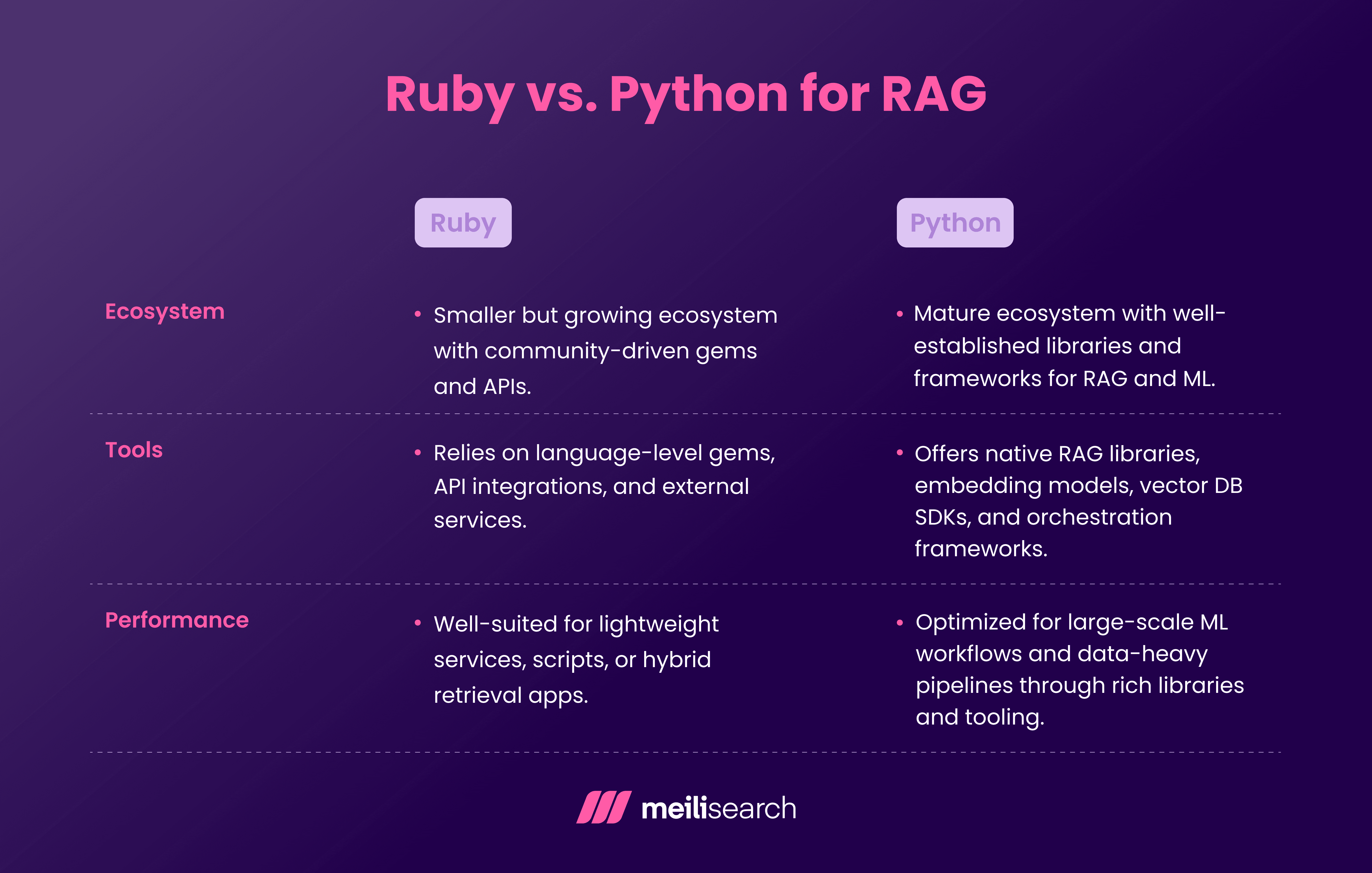

While Python dominates the RAG ecosystem, Ruby has its own strengths for developers who prefer a leaner, language-level approach.

The key differences lie in the ecosystem, available tools, and performance characteristics.

Ruby focuses on simplicity and direct integrations rather than building entire RAG stacks internally.

Python offers more built-in support for machine learning and orchestration, but can feel heavier for smaller apps.

The right choice depends on your goals, scale, and existing stack.

Unlocking the power of RAG with Ruby

RAG unlocks new possibilities for Ruby developers who want intelligent, context-aware applications without shifting their entire stack.

For those working with Rails, our guide to building RAG apps on Rails shows how to extend these ideas further.

By combining Ruby’s flexibility with the right tools, you can build retrieval and generation pipelines that are both lightweight and powerful.