What is text clustering? A complete guide

Learn what text clustering is, how it works, its benefits, use cases, how to perform text clustering in Python, and more.

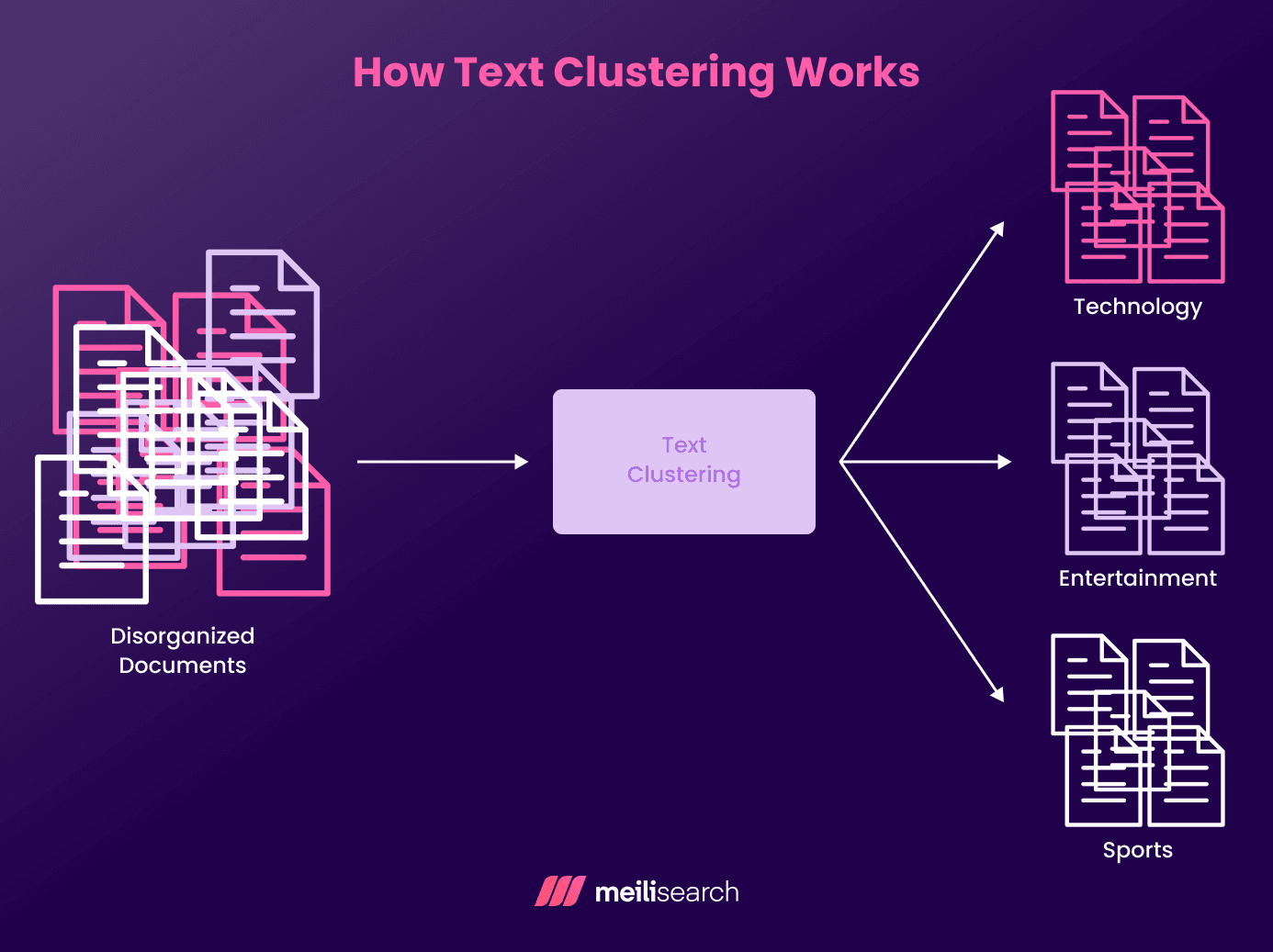

Text clustering organizes unstructured text by grouping similar documents without requiring labels. It surfaces the underlying themes in a collection, so you can explore and analyze it faster.

Here’s what this guide covers:

- What text clustering is and how it helps sort text into groups based on meaning.

- The mechanism behind text clustering, from preprocessing to algorithms.

- Popular methods for text clustering, such as K-means, DBSCAN, and hierarchical clustering.

- A brief look at the benefits and applications of text clustering and how you can take advantage of it.

- A step-by-step Python workflow for developers that builds, visualizes, and evaluates clusters.

- Most common text clustering mistakes and challenges for scaling businesses.

- How to use Meilisearch to make clusters searchable and useful.

Let’s get into it!

What is text clustering?

Text clustering is an unsupervised machine learning technique that groups similar text documents based on their content. Its primary purpose is to surface themes in large datasets without predefined labels, typically as one step in a broader natural language processing workflow.

It’s most commonly used to organize and analyze customer reviews, news articles, and research documents, among other types of content.

Let’s see how text clustering works behind the scenes.

How does text clustering work?

Text clustering consists of the following stages:

- Text preprocessing: Raw text is cleaned by removing unnecessary whitespace, special characters, and URLs. Then it is tokenized or broken apart into smaller units (words or phrases). Stop word removal (such as ‘a’ and ‘the’) and stemming and lemmatization (reducing words to their root form) can also be part of this stage.

- Embedding: Pre-processed text gets converted into numerical vectors. The techniques used here include term frequency-inverse document frequency (TF-IDF), Word2Vec, and transformer-based embeddings such as BERT.

- Clustering: In the third stage, clustering algorithms, such as K-means, DBSCAN, and hierarchical clustering, are applied. These algorithms assess the vector space created in stage two and group similar vectors into clusters.

- Evaluation: To assess how well documents fit their clusters, use the silhouette score, which needs no labels, or the Adjusted Rand Index (ARI) when ground-truth labels are available. Projecting the vectors to two dimensions with principal component analysis (PCA) also lets you inspect cluster separation visually.
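To make the preprocessing and embedding stages concrete, here is a minimal sketch that uses only the Python standard library. The toy corpus, the tiny stop word set, and the `tf * (log(N / df) + 1)` weighting are assumptions chosen for illustration; libraries such as scikit-learn use a slightly different smoothed formula.

```python
import math
import re
from collections import Counter

# Hypothetical toy corpus, used only for illustration.
docs = [
    "The battery drains fast and the battery gets hot",
    "Battery life is short and charging is slow",
    "Delivery was late and the package arrived damaged",
]
STOP_WORDS = {"the", "and", "is", "was", "a", "an", "of"}


def tokenize(text):
    """Lowercase, keep alphabetic runs, drop stop words."""
    return [t for t in re.findall(r"[a-z]+", text.lower()) if t not in STOP_WORDS]


def tfidf_vectors(texts):
    """Return (vocabulary, vectors) using a simplified tf * (log(N / df) + 1)."""
    tokenized = [tokenize(t) for t in texts]
    vocab = sorted({tok for toks in tokenized for tok in toks})
    n_docs = len(tokenized)
    # Document frequency: in how many documents each term appears.
    df = Counter(tok for toks in tokenized for tok in set(toks))
    vectors = []
    for toks in tokenized:
        counts = Counter(toks)
        vectors.append([counts[w] * (math.log(n_docs / df[w]) + 1.0) for w in vocab])
    return vocab, vectors


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


vocab, vectors = tfidf_vectors(docs)
sim_battery = cosine(vectors[0], vectors[1])    # two battery complaints
sim_unrelated = cosine(vectors[0], vectors[2])  # battery vs. delivery
print(f"battery vs battery:  {sim_battery:.3f}")
print(f"battery vs delivery: {sim_unrelated:.3f}")
```

The two battery reviews share a term and get a positive similarity, while the battery and delivery reviews share none and score zero. A clustering algorithm works on exactly this kind of geometry.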

Let’s take a closer look at the clustering algorithms that are most effective for different types of text data.

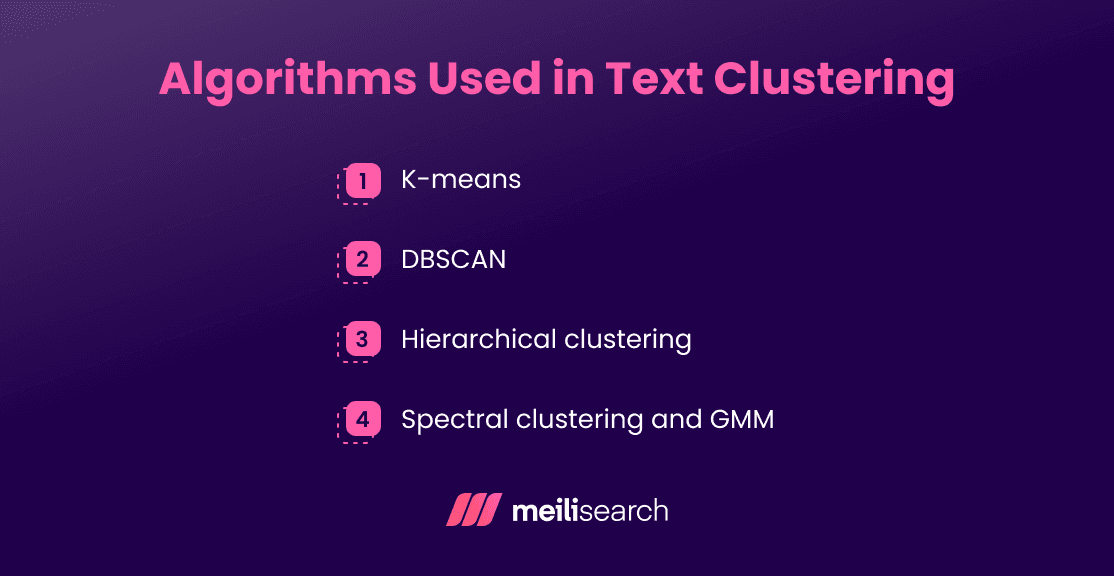

What algorithms are used in text clustering?

Every algorithm handles text data differently, based on the dataset’s size, shape, and noise.

The most commonly used clustering methods include K-means, DBSCAN, and hierarchical clustering, along with other approaches such as spectral clustering and Gaussian Mixture Models (GMMs).

Let’s break down the most widely used methods:

1. K-means

K-means divides the data into k clusters by assigning each data point to the cluster with the nearest mean (centroid). Each centroid is then recalculated as the mean of the points assigned to it, and the two steps repeat until the centroids stop moving.

This method scales well to large datasets. However, it can struggle with noisy or overlapping text data.
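The assign-then-update loop described above can be sketched in plain Python. This is a minimal illustration, not a replacement for a library implementation: the 2-D points stand in for document vectors, and the function name `kmeans`, the seed, and the random initialization from data points are assumptions made for the example.

```python
import random


def sq_dist(p, q):
    """Squared Euclidean distance between two equal-length tuples."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def kmeans(points, k, max_iter=100, seed=0):
    """Lloyd's algorithm on a list of equal-length numeric tuples."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # initialize from k distinct data points
    labels = [0] * len(points)
    for _ in range(max_iter):
        # Assignment step: attach each point to its nearest centroid.
        labels = [min(range(k), key=lambda c: sq_dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centroids.append(tuple(sum(dim) / len(members) for dim in zip(*members)))
            else:
                new_centroids.append(centroids[c])  # keep the centroid of an empty cluster
        if new_centroids == centroids:  # converged: centroids stopped moving
            break
        centroids = new_centroids
    return labels, centroids


# Hypothetical 2-D "document vectors": two tight groups.
points = [(0.0, 0.1), (0.2, 0.0), (0.1, 0.2), (5.0, 5.1), (5.2, 4.9), (4.9, 5.0)]
labels, centroids = kmeans(points, k=2)
print(labels)
print(centroids)
```

Which group receives label 0 depends on the random initialization, so only the grouping itself is meaningful, not the label values.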

2. DBSCAN

DBSCAN (Density-Based Spatial Clustering of Applications with Noise) groups data points that are closely packed and marks the distant ones as outliers.

It works well for irregularly shaped clusters. It also doesn’t require you to predefine the number of clusters, which makes it a good fit for grouping social media posts or product reviews.
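As a rough sketch of how density-based grouping works, the following standard-library implementation labels dense regions as clusters and isolated points as noise. The function name `dbscan`, the sample points, and the `eps` and `min_samples` values are assumptions chosen for illustration; it uses Euclidean distance and a brute-force neighbor search, so it is only suitable for tiny inputs.

```python
import math


def dbscan(points, eps, min_samples):
    """Return one label per point; -1 marks noise, 0..n-1 are cluster ids."""
    UNVISITED, NOISE = None, -1
    labels = [UNVISITED] * len(points)

    def neighbours(i):
        # All points within eps of point i (including i itself).
        return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]

    cluster_id = -1
    for i in range(len(points)):
        if labels[i] is not UNVISITED:
            continue
        seeds = neighbours(i)
        if len(seeds) < min_samples:
            labels[i] = NOISE  # may later be claimed as a border point
            continue
        cluster_id += 1
        labels[i] = cluster_id
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster_id  # border point: reachable but not core
            if labels[j] is not UNVISITED:
                continue
            labels[j] = cluster_id
            j_neighbours = neighbours(j)
            if len(j_neighbours) >= min_samples:  # j is a core point: keep expanding
                queue.extend(j_neighbours)
    return labels


# Two dense squares plus one isolated point (hypothetical data).
points = [
    (0, 0), (0, 0.5), (0.5, 0), (0.5, 0.5),
    (5, 5), (5, 5.5), (5.5, 5), (5.5, 5.5),
    (10, 0),
]
labels = dbscan(points, eps=0.8, min_samples=3)
print(labels)  # [0, 0, 0, 0, 1, 1, 1, 1, -1]
```

Note that the number of clusters is never passed in: it falls out of `eps` and `min_samples`, and the isolated point is reported as noise rather than forced into a cluster.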

3. Hierarchical clustering

Hierarchical clustering builds a tree-like structure that shows relationships among documents. You can cut this tree at different levels, forming clusters of varying granularity.

This comes in handy when dealing with smaller datasets where the relationships between entities are more important than processing speed.
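The bottom-up merging behind hierarchical clustering can be sketched as follows. This is a simplified, brute-force illustration that assumes average linkage and Euclidean distance; the function name `agglomerative` and the sample points are hypothetical. Stopping the merges at different cluster counts plays the role of cutting the tree at different levels.

```python
import math


def agglomerative(points, n_clusters):
    """Average-linkage merging until n_clusters groups remain."""
    clusters = [[i] for i in range(len(points))]  # start: every point is its own cluster

    def linkage(a, b):
        # Average pairwise distance between members of clusters a and b.
        return sum(math.dist(points[i], points[j]) for i in a for j in b) / (len(a) * len(b))

    while len(clusters) > n_clusters:
        # Find the closest pair of clusters and merge them.
        _, x, y = min(
            (linkage(clusters[x], clusters[y]), x, y)
            for x in range(len(clusters))
            for y in range(x + 1, len(clusters))
        )
        clusters[x] = clusters[x] + clusters[y]
        del clusters[y]
    return [sorted(c) for c in clusters]


# Hypothetical points: two close pairs and one distant point.
points = [(0, 0), (0, 1), (4, 0), (4, 1), (10, 0)]
print(agglomerative(points, 3))  # [[0, 1], [2, 3], [4]]
print(agglomerative(points, 2))  # [[0, 1, 2, 3], [4]]
```

The pairwise search in every round is what makes this family of methods expensive on large collections, which is why it suits smaller datasets.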

4. Spectral clustering and GMM

Spectral clustering relies on similarity matrices to cluster data. It is computationally more expensive, but the payoff is its effectiveness when dealing with non-linear structures.

Gaussian Mixture Models (GMMs) assume that the data points are generated from a mixture of Gaussian distributions. As a result, they allow soft cluster assignments. This is useful when you’re dealing with documents that span multiple topics.
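To illustrate what a soft assignment means, the sketch below computes the probability that a point belongs to each component of a mixture. It assumes a hypothetical, already-fitted one-dimensional mixture with two components; the fitting itself (usually done with expectation-maximization) is not shown, and the function names are made up for this example.

```python
import math


def gaussian_pdf(x, mean, std):
    """Density of a normal distribution at x."""
    return math.exp(-0.5 * ((x - mean) / std) ** 2) / (std * math.sqrt(2 * math.pi))


def responsibilities(x, components):
    """Posterior probability of each component for x.

    components: list of (weight, mean, std) tuples with weights summing to 1.
    """
    weighted = [w * gaussian_pdf(x, m, s) for w, m, s in components]
    total = sum(weighted)
    return [p / total for p in weighted]


# Hypothetical fitted mixture: two topics along a single axis.
components = [(0.5, 0.0, 1.0), (0.5, 4.0, 1.0)]
for x in (0.0, 2.0, 4.0):
    print(x, [round(r, 3) for r in responsibilities(x, components)])
```

A point halfway between the two means is split evenly between the components, which is how a document covering two topics would be represented, whereas K-means would force it into a single cluster.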

What are the benefits of text clustering?

The main function of text clustering is to help teams access large amounts of data in a structured, easily digestible manner. It eliminates manual labeling and complex arrangements.

Here are the benefits of text clustering:

- Automated organization: Text clustering eliminates the need for manual sorting and tagging.

- Discovery of hidden patterns: Clustering reveals themes across datasets, such as the general sentiment in customer reviews or customer support tickets.

- Scalable analysis: Clustering lets you analyze collections that are far too large to review by hand.

- Better search and recommendations: Text clustering groups related documents to help search engines surface results that share the same theme. For example, if a user searches for ‘running shoes,’ the system can also return documents from clusters focused on durability, fit, or trail performance.

- Data-driven insights: For instance, support tickets may cluster around billing issues, login problems, or feature requests, enabling teams to quickly see which issues users struggle with the most.

Now that you know how valuable it is, let’s explore where text clustering is most useful in practice.

What are the main applications of text clustering?

Text clustering is most helpful in industries with a continual, daily influx of unstructured data. It can be used for:

- Document organization: Enterprises regularly group similar documents, emails, or reports, making extensive archives searchable and easier to manage.

- Search result retrieval: Grouping related documents lets a search system surface results that share a theme with the query, which can improve relevance.

- Customer feedback analysis: When customer feedback is organized efficiently, businesses can analyze it to improve the customer experience.

- Academic and research use: Researchers can cluster papers, abstracts, or citations to identify overlapping topic areas and stay up to date with emerging trends.

Next up is why embeddings play a critical role in clustering quality.

Why use embeddings for text clustering?

Embedding converts text into numerical vectors, capturing its semantic meaning. Based on this, clustering algorithms can group documents by intent and context, rather than just by shared keywords.

Models such as Word2Vec and BERT are neural networks that generate dense embeddings capturing the nuance of words and their meanings. Dimensionality reduction techniques such as PCA can then compress the vector space, which often makes clusters easier to separate and to visualize.

How do you perform text clustering in Python?

The Python workflow we will outline here has four steps. With the help of modern libraries like spaCy and scikit-learn, you can easily move from messy text to production-ready clusters.

Once you have them, integrating with a search engine like Meilisearch is straightforward. Here are the four steps:

1. Pre-processing and tokenization

First things first, we need to normalize the text we have and remove any noise.

For this, you will convert the text to lowercase, remove URLs, punctuation, and stop words, and then apply lemmatization. Here’s what it looks like:

    import re

    import nltk
    import spacy
    from nltk.corpus import stopwords

    # Download once if needed
    # nltk.download('punkt'); nltk.download('stopwords')
    # python -m spacy download en_core_web_sm
    nlp = spacy.load("en_core_web_sm")
    stop_words = set(stopwords.words("english"))

    def preprocess(text):
        # Replace URLs and anything that is not a letter or whitespace with a space
        text = re.sub(r"http\S+|[^a-zA-Z\s]", " ", text.lower())
        doc = nlp(text)
        # Keep the lemma of every token that is not a stop word and is longer than two characters
        tokens = [
            token.lemma_
            for token in doc
            if token.text not in stop_words and len(token.text) > 2
        ]
        return " ".join(tokens)

    # raw_docs is assumed to be a list of strings loaded elsewhere
    docs_clean = [preprocess(doc) for doc in raw_docs]

The text is now standardized and tokenized, ready for feature extraction and semantic indexing.

2. Vectorization and embedding generation

You need to choose a vector representation that matches your goal and scale:

- TF-IDF: lightweight and interpretable.

- Word2Vec / GloVe: semantic similarity via word embeddings.

- BERT / Transformer models: contextual, sentence-level embeddings for highest accuracy.

    from sklearn.feature_extraction.text import TfidfVectorizer
    from sentence_transformers import SentenceTransformer

    # TF-IDF representation
    tfidf = TfidfVectorizer(max_features=50000, ngram_range=(1, 2))
    X_tfidf = tfidf.fit_transform(docs_clean)

    # Transformer-based embeddings
    model = SentenceTransformer("all-MiniLM-L6-v2")
    X_emb = model.encode(docs_clean, normalize_embeddings=True)

Another option is to store embeddings in Meilisearch to enable hybrid (keyword + vector) retrieval later.

3. Clustering algorithms in Python

After vectorization, you will need to select an algorithm suited to your data distribution:

- K-means for balanced, spherical clusters.

- DBSCAN for noisy or irregular data.

- Agglomerative (hierarchical) for dendrogram-based analysis.

    from sklearn.cluster import KMeans, DBSCAN, AgglomerativeClustering

    # K-means
    kmeans = KMeans(n_clusters=10, n_init=10, random_state=42)
    labels_km = kmeans.fit_predict(X_emb)

    # DBSCAN
    dbscan = DBSCAN(eps=0.3, min_samples=5, metric="cosine")
    labels_db = dbscan.fit_predict(X_emb)

    # Hierarchical
    agg = AgglomerativeClustering(n_clusters=10, linkage="ward")
    labels_ag = agg.fit_predict(X_emb)

Remember that each algorithm has its own set of trade-offs. Before you consider scaling, try benchmarking with small subsets first.

4. Evaluation of clusters and integration into search-oriented workflow

You will need to evaluate the quality of your clusters using both intrinsic and extrinsic metrics:

- Silhouette Score, Davies-Bouldin, or Calinski-Harabasz for structure quality.

- Adjusted Rand Index (ARI) if ground-truth labels exist.

Visualize using PCA or t-SNE for interpretability.

    from sklearn.metrics import silhouette_score, adjusted_rand_score
    from sklearn.decomposition import PCA
    import matplotlib.pyplot as plt

    sil_score = silhouette_score(X_emb, labels_km, metric="cosine")
    print(f"Silhouette score: {sil_score:.3f}")

    pca = PCA(n_components=2)
    coords = pca.fit_transform(X_emb)
    plt.scatter(coords[:, 0], coords[:, 1], c=labels_km, cmap="tab10", s=10)
    plt.show()

Store each document with its cluster_id and index it in Meilisearch. This gives you the benefits of Meilisearch on top of your clusters, including typo tolerance, filtering, and hybrid (keyword + vector) search.
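As a rough sketch of that last step, the snippet below builds one record per document with its cluster_id and sends the records to Meilisearch through the official Python client. The field names `id` and `text`, the index name `documents`, the local URL, and both helper function names are assumptions made for this example; adapt them to your own schema and deployment.

```python
def build_documents(texts, labels):
    """Pair each text with a stable id and its cluster label."""
    return [
        {"id": i, "text": text, "cluster_id": int(label)}
        for i, (text, label) in enumerate(zip(texts, labels))
    ]


def index_clusters(documents, url="http://localhost:7700", api_key=None, index_name="documents"):
    """Send documents to a running Meilisearch instance.

    Requires `pip install meilisearch` and a reachable server (both assumed here).
    """
    import meilisearch  # imported lazily so build_documents works without the client

    client = meilisearch.Client(url, api_key)
    index = client.index(index_name)
    index.add_documents(documents)
    # Make cluster_id usable in filters such as "cluster_id = 0".
    index.update_filterable_attributes(["cluster_id"])
    return index


# Hypothetical texts and cluster labels, e.g. taken from labels_km.
docs = build_documents(
    ["refund not received", "cannot log in", "charged twice"],
    [0, 1, 0],
)
print(docs[0])  # {'id': 0, 'text': 'refund not received', 'cluster_id': 0}

# With a server running, a cluster-restricted query could look like:
# index = index_clusters(docs)
# index.search("refund", {"filter": "cluster_id = 0"})
```

Meilisearch processes document additions and settings updates as asynchronous tasks, so a search issued immediately after indexing may not reflect them yet.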

Let’s address the key challenges you’ll face when applying text clustering in real-world projects.

What are the challenges in text clustering?

Useful as it is, text clustering comes with several practical challenges that can affect the accuracy and scalability of your projects:

- High dimensionality: Text data often produces thousands of features, especially with TF-IDF vectors. You can reduce dimensions with PCA before clustering, but any reduction discards information and can distort distances. t-SNE in particular is better kept for visualization.

- Ambiguous language: Algorithms have difficulty accurately grouping semantically similar documents when language elements vary in meaning, such as when sarcasm or slang is used.

- Hard to scale: Large text datasets demand memory-efficient clustering. Algorithms like K-means scale well, but methods like hierarchical clustering can be too computationally expensive.

- Choosing the correct number of clusters: Without labels, deciding how many clusters to create is subjective. Some metrics, such as silhouette score or the elbow method, can provide guidance, but this is still mainly a trial-and-error process.

Next, let’s look at common mistakes teams make when clustering text data.

What are common mistakes in text clustering?

The following are some of the most common mistakes in text clustering that you need to be aware of, because even minor oversights can become costly very quickly:

- Slacking on pre-processing: If you make it a habit to skip cleaning steps, such as stop word removal, you will end up with noisy, inconsistent clusters. Plus, irrelevant tokens will inflate your already high dimensionality, leading to poorer model performance.

- Choosing the wrong clustering algorithm: For instance, if you apply K-means to non-spherical data, it may split related documents across clusters and merge unrelated ones.

- Ignoring evaluation metrics: Without validation using the silhouette score, or ARI when labels exist, clusters may look convincing while being poorly separated.

- Overlooking embeddings: If you rely solely on TF-IDF, you might miss out on the contextual meaning. Transformer embeddings, such as BERT or MiniLM, often deliver better separation.

When should you use text clustering over classification?

You should use text clustering when there is a shortage of labeled data, and you want to discover its overarching themes. Text clustering is best when you want to explore new datasets or identify emerging topics with flexible clustering models.

On the other hand, text classification comes in handy when you’re dealing with predefined labels and the data categories are already known.

How do you choose the number of clusters?

Choosing the right number of clusters is part science, part best-guess.

The elbow method plots the within-cluster sum of squared Euclidean distances against the number of clusters to identify the point at which adding more clusters yields diminishing returns.

Silhouette analysis, in turn, measures how well each document fits its assigned cluster compared with the nearest neighboring cluster.

By combining these metrics with domain knowledge and reviewing your clustering results, you get the most meaningful outcomes.
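To show what the silhouette score actually measures, here is a small standard-library sketch. For each point it compares the mean distance to its own cluster (a) with the mean distance to the nearest other cluster (b) and computes (b - a) / max(a, b). The function name `mean_silhouette`, the sample points, and the choice to score singleton clusters as 0 are assumptions for this example.

```python
import math


def mean_silhouette(points, labels):
    """Mean silhouette coefficient over all points (Euclidean distance)."""
    scores = []
    for i, p in enumerate(points):
        # Group distances from p to every other point by cluster label.
        by_cluster = {}
        for j, q in enumerate(points):
            if i != j:
                by_cluster.setdefault(labels[j], []).append(math.dist(p, q))
        own = by_cluster.pop(labels[i], None)
        if not own or not by_cluster:
            scores.append(0.0)  # singleton or single-cluster case: 0 in this sketch
            continue
        a = sum(own) / len(own)                                # cohesion
        b = min(sum(d) / len(d) for d in by_cluster.values())  # separation
        scores.append((b - a) / max(a, b))
    return sum(scores) / len(scores)


# Hypothetical 2-D vectors forming two tight groups.
points = [(0.0, 0.1), (0.2, 0.0), (0.1, 0.2), (5.0, 5.1), (5.2, 4.9), (4.9, 5.0)]
good = mean_silhouette(points, [0, 0, 0, 1, 1, 1])  # labels match the groups
bad = mean_silhouette(points, [0, 1, 0, 1, 0, 1])   # labels ignore the groups
print(f"good labelling: {good:.3f}")
print(f"bad labelling:  {bad:.3f}")
```

In practice you would compute this score for several candidate values of k and prefer the values where it peaks, then sanity-check the resulting clusters by reading a sample of documents from each.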

What is the difference between text clustering and topic modeling?

Text clustering and topic modeling both organize large text datasets. However, the goals they serve are different.

Clustering assigns each document to a group of similar documents, which makes it easy to find related documents. This helps in building search systems or recommendation engines.

Topic modeling, on the other hand, uncovers latent themes within a collection. Methods such as latent Dirichlet allocation (LDA) or non-negative matrix factorization (NMF) assign multiple topics to each document, with probabilities indicating the prevalence of each topic in that document.

This is more useful in content analysis or summarizing large corpora.

In short, clustering focuses on grouping similar documents, while topic modeling focuses on interpreting what they’re about.

Bringing it all together: mastering text clustering for real-world insights

Text clustering gives structure to disorganized text documents. It ensures that text can be analyzed and searched for insights, especially when you deal with document clustering at scale.

Text clustering supports better information retrieval and workflows powered by modern language models and deep learning. All of this leads to smarter analytics and data-driven decision-making for a business.

Enhance your clustered data with Meilisearch for fast, intelligent search and discovery

Once you have your clusters ready, you can index them in Meilisearch using cluster_id or tags. With Meilisearch, you get hybrid search, typo-tolerant results, and the ability to quickly turn your clusters into real-world, production-ready intelligence.