Elasticsearch vs Pinecone (vs Meilisearch): Which search solution actually fits your needs in 2025?

Elasticsearch vs Pinecone comparison: complex enterprise search vs AI vector database. Or explore Meilisearch's hybrid approach for simplicity + performance.


If you're comparing Elasticsearch vs Pinecone for your search infrastructure, you're likely wrestling with a fundamental question about the future of search technology: should you invest in a powerful but complex full-text search engine, or bet on the AI-powered vector search revolution?

But here's what most comparisons miss: you might be creating a false dichotomy. While Elasticsearch and Pinecone represent two different approaches to search (keyword-based vs semantic), the real challenge isn't choosing between them; it's finding a solution that delivers both capabilities without the operational overhead or astronomical costs.

The key questions you should be asking are:

- Do you need traditional full-text search, AI-powered semantic search, or both?

- Are you willing to manage complex infrastructure for more control, or do you prefer a managed service?

- Is your team equipped to handle a steep learning curve, or do you need something that works out-of-the-box?

- Can you afford enterprise-level pricing, or do you need a more cost-effective solution?

- Do you want to be locked into a proprietary platform, or maintain flexibility with open-source?

In short, here's what we recommend:

👉 Elasticsearch is the established leader in full-text search and analytics, offering unmatched power and flexibility for large-scale deployments. With its comprehensive feature set covering everything from log analytics to security monitoring, it's the go-to choice for enterprises with dedicated teams. However, its complexity requires significant expertise to manage, its resource consumption can drive up costs, and getting started involves navigating a steep learning curve that can delay time-to-value.

👉 Pinecone pioneered the vector database category, making it the default choice for teams building AI-powered applications with semantic search and RAG capabilities. Its fully managed service abstracts away infrastructure complexity, and its focus on vector embeddings makes it ideal for modern AI use cases. The trade-offs include vendor lock-in with a proprietary platform, potential for usage-based pricing to escalate, and the need to carefully plan metadata structures upfront.

Both platforms excel in their domains but force you to make significant compromises. What if you could have the best of both worlds: powerful search capabilities, AI-ready features, and operational simplicity?

👉 Meilisearch delivers lightning-fast search (under 50ms) with both traditional and emerging AI-powered capabilities in a single, developer-friendly package. Its hybrid search combines keyword and semantic search seamlessly, while features like built-in typo tolerance and instant search work out-of-the-box. With both open-source self-hosting and affordable cloud options (starting at $30/month), Meilisearch provides enterprise-grade search without enterprise complexity, making it the pragmatic choice for teams that want powerful search without the operational burden.

If you're looking for a search solution that combines simplicity, performance, and modern AI capabilities at a fraction of the cost and complexity, see how Meilisearch can transform your search experience with a 14-day free trial.

Elasticsearch vs Pinecone vs Meilisearch at a glance

| | Elasticsearch | Pinecone | Meilisearch |
|---|---|---|---|
| Core Technology | ⭐⭐⭐⭐⭐ Full-text search on Apache Lucene | ⭐⭐⭐⭐ Vector database for embeddings | ⭐⭐⭐⭐⭐ Hybrid search (keyword + vector) |
| Setup Complexity | ⭐⭐ Complex cluster management | ⭐⭐⭐⭐ Fully managed service | ⭐⭐⭐⭐⭐ Works out-of-the-box |
| Time to First Query | ⭐⭐ Slower setup process | ⭐⭐⭐⭐ Quick to start | ⭐⭐⭐⭐⭐ Quick to start |
| Learning Curve | ⭐⭐ Steep (Query DSL complexity) | ⭐⭐⭐⭐ Moderate | ⭐⭐⭐⭐⭐ Gentle |
| Performance | ⭐⭐⭐⭐ Fast with proper tuning | ⭐⭐⭐⭐ Low latency at scale | ⭐⭐⭐⭐⭐ <50ms out-of-the-box |
| Resource Usage | ⭐⭐ Memory-intensive | ⭐⭐⭐⭐ Managed by provider | ⭐⭐⭐⭐⭐ Lightweight |
| Pricing | ⭐⭐ From $99/month | ⭐⭐⭐ Free tier, then $50/month | ⭐⭐⭐⭐⭐ Free OSS, cloud from $30/month |
| Open Source | ⭐⭐⭐ Dual license (some limitations) | ❌ Proprietary | ⭐⭐⭐⭐⭐ MIT license |
| AI/Vector Search | ⭐⭐⭐ Built-in capabilities | ⭐⭐⭐⭐⭐ Core capability | ⭐⭐⭐⭐ Native hybrid search (stabilizing) |
| Best For | Large enterprises with dedicated teams | AI-first applications | Teams wanting simplicity and performance |

The fundamental divide: Full-text vs vector vs hybrid search

The search technology landscape has fundamentally shifted. Where once the debate was about which full-text search engine to use, now it's about choosing between search paradigms entirely.

Elasticsearch represents the pinnacle of traditional full-text search evolution. Built on Apache Lucene, it excels at tokenizing text, creating inverted indexes, and finding exact or fuzzy keyword matches. Its Query DSL offers incredible power for complex queries, aggregations, and analytics. This makes it ideal for log analysis, security monitoring, and any scenario where you need precise control over how text is analyzed and searched.
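To make the inverted-index idea concrete, here is a toy Python sketch. It is not how Lucene works internally (real analyzers, postings formats, and relevance scoring are far more involved); the tokenizer, the sample documents, and the AND-only matching are simplifying assumptions for illustration.

```python
import re
from collections import defaultdict


def tokenize(text: str) -> list[str]:
    # Lowercase and split on non-word characters: a crude stand-in for a
    # real analyzer (no stemming, stop words, or synonyms).
    return [token for token in re.split(r"\W+", text.lower()) if token]


def build_inverted_index(docs: dict[int, str]) -> dict[str, set[int]]:
    # Map each term to the set of document IDs that contain it.
    index: dict[str, set[int]] = defaultdict(set)
    for doc_id, text in docs.items():
        for term in tokenize(text):
            index[term].add(doc_id)
    return dict(index)


def search_all_terms(index: dict[str, set[int]], query: str) -> set[int]:
    # AND semantics: intersect the posting sets of every query term.
    terms = tokenize(query)
    if not terms:
        return set()
    result = set(index.get(terms[0], set()))  # copy so the index is not mutated
    for term in terms[1:]:
        result &= index.get(term, set())
    return result


docs = {
    1: "Red running shoes for trail running",
    2: "Blue leather shoes",
    3: "Trail map of the Alps",
}
index = build_inverted_index(docs)
print(sorted(search_all_terms(index, "trail shoes")))  # [1]
```

The lookup cost depends on the number of query terms and the size of their posting sets, not on scanning every document, which is why inverted indexes stay fast for keyword queries.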

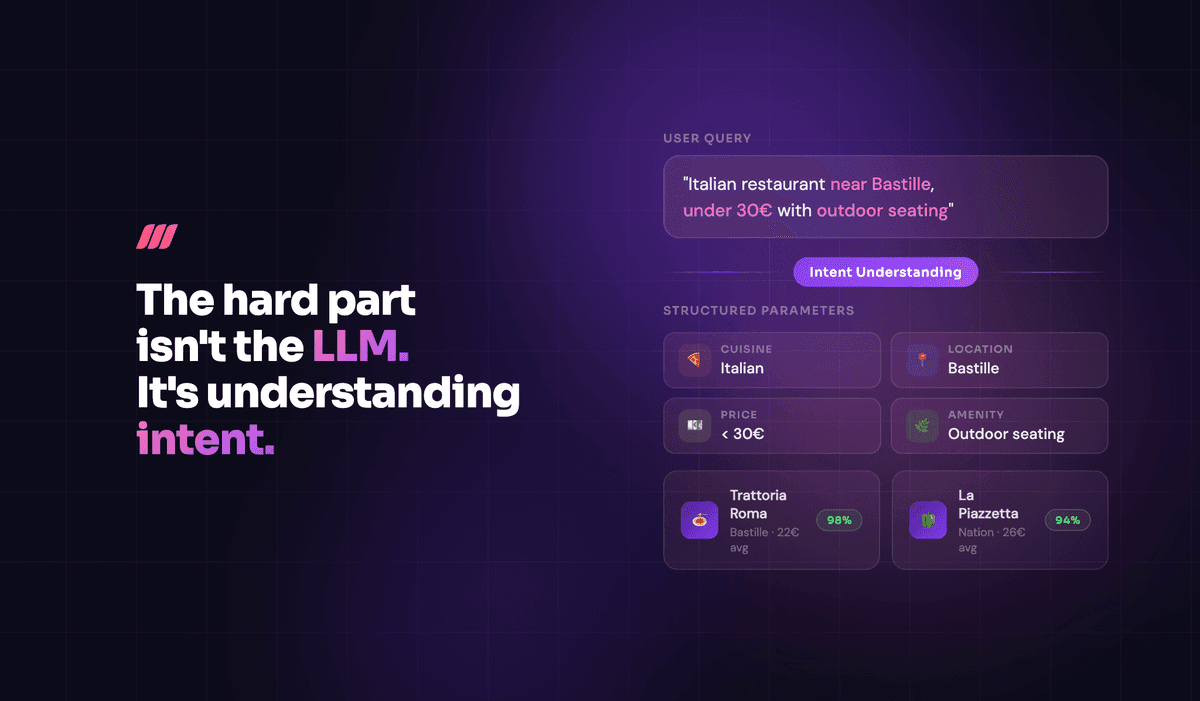

Pinecone took a completely different path, focusing primarily on vector search. Instead of analyzing text directly, it works with numerical representations (embeddings) that capture semantic meaning. This enables finding conceptually similar content even without matching keywords. For AI applications using Large Language Models, Pinecone acts as long-term memory, enabling Retrieval-Augmented Generation (RAG) and helping reduce hallucinations.
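The core operation behind vector search is ranking stored embeddings by their similarity to a query embedding. The sketch below uses exact cosine similarity over hand-made three-dimensional vectors (hypothetical values; real embedding models produce hundreds or thousands of dimensions). Production vector databases such as Pinecone typically rely on approximate nearest-neighbor indexes rather than this linear scan.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    # Cosine similarity: dot product divided by the product of the vector norms.
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query: list[float], vectors: dict[str, list[float]], k: int = 2) -> list[str]:
    # Exact (brute-force) nearest-neighbor search over every stored vector.
    ranked = sorted(vectors, key=lambda key: cosine(query, vectors[key]), reverse=True)
    return ranked[:k]


# Hypothetical "embeddings": similar concepts point in similar directions,
# even though "sneakers" and "running shoes" share no keywords.
vectors = {
    "sneakers": [0.9, 0.1, 0.0],
    "running shoes": [0.8, 0.2, 0.1],
    "cookbook": [0.0, 0.1, 0.9],
}
print(top_k([0.85, 0.15, 0.05], vectors))  # ['sneakers', 'running shoes']
```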

Meilisearch recognized that this isn't an either-or decision. Real-world search needs both precision and understanding. Its hybrid search capability combines traditional keyword matching with semantic vector search in a single query. Users get exact matches when they exist, and semantically relevant results when they don't. This unified approach means you don't have to choose your search paradigm upfront or maintain separate systems.
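One simple way to picture a hybrid ranking is a weighted blend of a keyword score and a semantic score. The sketch below is a generic linear fusion for illustration only; it is not Meilisearch's actual ranking algorithm, and the `semantic_ratio` name merely echoes the kind of balance parameter the article describes. The document IDs and scores are made up.

```python
def hybrid_rank(
    keyword_scores: dict[str, float],
    semantic_scores: dict[str, float],
    semantic_ratio: float = 0.5,
) -> list[str]:
    """Blend two score maps (each already normalized to [0, 1]) into one ranking.

    semantic_ratio = 0.0 ranks purely by keyword score,
    semantic_ratio = 1.0 ranks purely by semantic score.
    """
    if not 0.0 <= semantic_ratio <= 1.0:
        raise ValueError("semantic_ratio must be between 0 and 1")
    doc_ids = set(keyword_scores) | set(semantic_scores)
    # A document missing from one result list simply scores 0 on that side.
    combined = {
        doc: (1 - semantic_ratio) * keyword_scores.get(doc, 0.0)
        + semantic_ratio * semantic_scores.get(doc, 0.0)
        for doc in doc_ids
    }
    return sorted(combined, key=combined.get, reverse=True)


keyword = {"exact-title-match": 1.0, "partial-match": 0.4}
semantic = {"exact-title-match": 0.6, "synonym-only": 0.9}
print(hybrid_rank(keyword, semantic, semantic_ratio=0.5))
# ['exact-title-match', 'synonym-only', 'partial-match']
```

An exact match still wins when it exists, while a document that only matches by meaning ("synonym-only") can outrank a weak keyword hit.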

Elasticsearch dominates at scale (but at what cost?)

Elasticsearch's dominance in enterprise search comes from its incredible breadth of capabilities. The platform handles everything from website search to log analytics, from security monitoring to application performance management. With over 300 integrations and a mature ecosystem, it can ingest and analyze virtually any type of data.

The distributed architecture allows Elasticsearch to scale horizontally across hundreds of nodes, handling petabytes of data. Its aggregations framework enables complex analytics that go far beyond simple search, supporting everything from real-time dashboards to machine learning jobs. For organizations with massive datasets and complex requirements, this power is unmatched.

But this power comes with significant costs, both visible and hidden. The obvious cost is financial: cloud deployments start at $99/month and can easily reach thousands for production workloads. The hidden costs are operational. Managing an Elasticsearch cluster requires deep expertise in concepts like shards, replicas, and index lifecycle management. Performance tuning is an art form, requiring careful balance of memory allocation, cache sizing, and query optimization.
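To illustrate the kind of arithmetic shard planning involves, here is a rough sizing helper. Elastic's sizing guidance commonly cites shards of roughly 10 to 50 GB; the 30 GB target below is an assumed midpoint, not an official value. The primary shard count is set when an index is created, so changing it later means a split, shrink, or reindex.

```python
import math


def plan_shards(index_size_gb: float, replicas: int = 1, target_shard_gb: float = 30.0) -> dict:
    # target_shard_gb = 30 is an assumed midpoint of the commonly cited 10-50 GB range.
    if index_size_gb <= 0 or target_shard_gb <= 0 or replicas < 0:
        raise ValueError("sizes must be positive and replicas non-negative")
    primaries = math.ceil(index_size_gb / target_shard_gb)
    return {
        "primary_shards": primaries,
        # Each replica is a full copy of every primary shard.
        "total_shard_copies": primaries * (1 + replicas),
        "gb_per_shard": round(index_size_gb / primaries, 1),
        # Raw data footprint across primaries and replicas (no headroom for merges or growth).
        "total_storage_gb": index_size_gb * (1 + replicas),
    }


print(plan_shards(500, replicas=1))
# {'primary_shards': 17, 'total_shard_copies': 34, 'gb_per_shard': 29.4, 'total_storage_gb': 1000}
```

Even this simplified view shows how a single replica doubles storage and how shard counts multiply quickly across indexes.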

The complexity extends to basic operations. Getting log and metric data into Elasticsearch typically means choosing between several shippers (Filebeat, Metricbeat, Elastic Agent), each with different configuration requirements. Creating an effective mapping requires understanding analyzers, tokenizers, and field types. Even something as simple as updating a document can involve decisions about refresh intervals and versioning.
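As an example of those update-time decisions, the sketch below builds (but does not send) an Elasticsearch index request that uses optimistic concurrency control (`if_seq_no` / `if_primary_term`) and `refresh=wait_for`. The local cluster URL, the `products` index, and the sequence numbers are assumptions for illustration.

```python
import json
import urllib.parse
import urllib.request

ES_URL = "http://localhost:9200"  # assumed local cluster


def build_conditional_update(index: str, doc_id: str, doc: dict,
                             seq_no: int, primary_term: int) -> urllib.request.Request:
    params = urllib.parse.urlencode({
        # Optimistic concurrency: the write is rejected (409 Conflict) if the
        # document changed since we read it with this seq_no/primary_term.
        "if_seq_no": seq_no,
        "if_primary_term": primary_term,
        # Wait for the next scheduled refresh so the change is searchable on
        # return, instead of forcing an immediate and more expensive refresh.
        "refresh": "wait_for",
    })
    url = f"{ES_URL}/{urllib.parse.quote(index)}/_doc/{urllib.parse.quote(doc_id)}?{params}"
    return urllib.request.Request(
        url,
        data=json.dumps(doc).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


req = build_conditional_update("products", "42", {"name": "Trail shoe", "price": 89},
                               seq_no=7, primary_term=1)
print(req.get_method(), req.full_url)
# Sending it would be: urllib.request.urlopen(req)
```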

For organizations with dedicated search teams and complex requirements, these trade-offs are acceptable. But for teams that just want to add great search to their application, Elasticsearch can be overkill.

Pinecone owns AI search (but locks you in)

Pinecone deserves credit for making vector search accessible. Before Pinecone, implementing semantic search required building custom infrastructure with approximate nearest neighbor algorithms, managing embeddings, and scaling vector operations. Pinecone packaged all this complexity into a simple API.

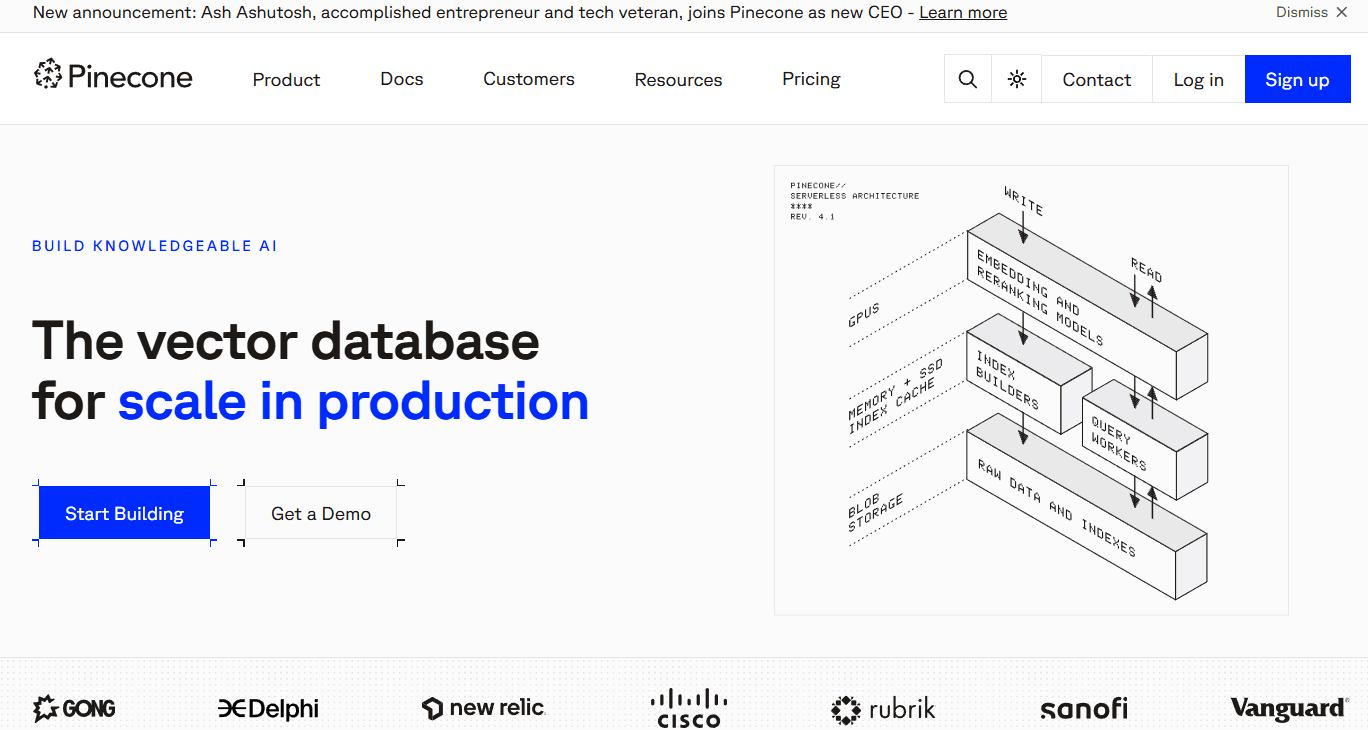

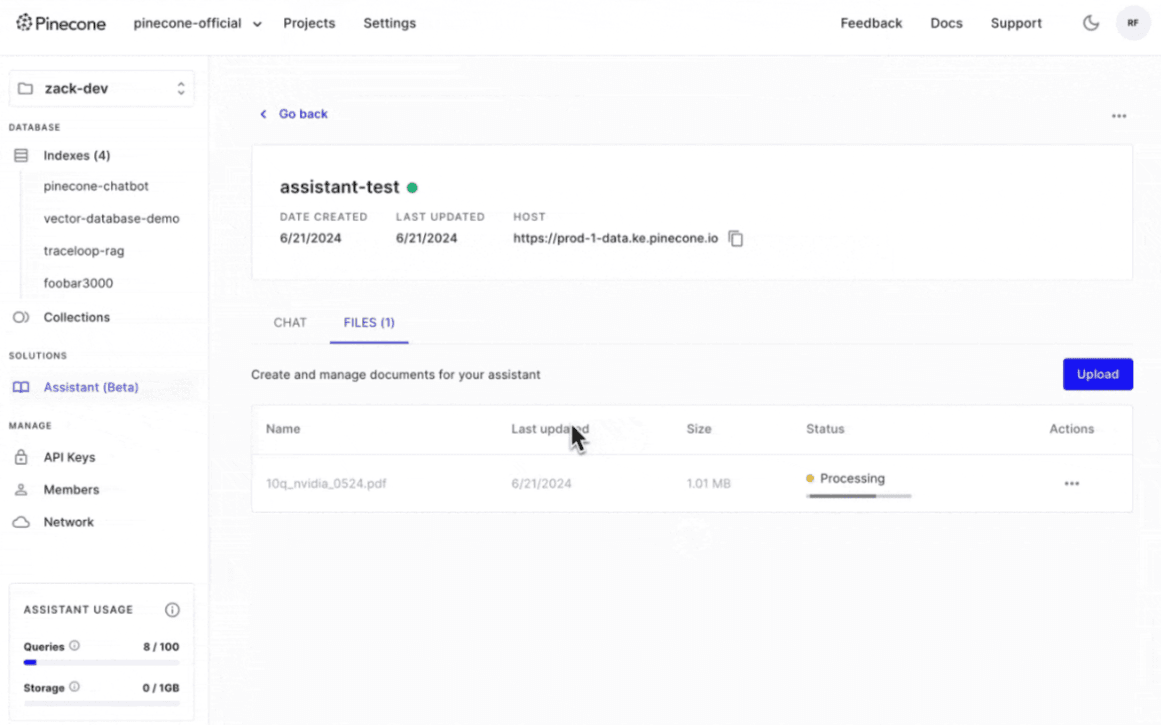

The platform excels at its core mission: storing and searching vector embeddings at scale. Its serverless architecture automatically handles scaling, its managed service eliminates infrastructure headaches, and its integrations with OpenAI, Cohere, and other embedding providers simplify the AI development workflow. For teams building RAG applications or semantic search, Pinecone provides a fast path to production.

Recent additions like the Inference API (providing managed embedding generation) and Pinecone Assistant (automating RAG pipelines) show the company's ambition to move beyond pure vector storage into a comprehensive AI infrastructure platform. These features can significantly accelerate AI application development. The platform also supports sparse vectors and hybrid search, combining traditional keyword capabilities with semantic search.

Source: Pinecone


However, Pinecone's approach creates significant lock-in risks. As a proprietary, closed-source platform, you're entirely dependent on their roadmap, pricing decisions, and platform availability. There's no option to self-host if your requirements change. The pricing model, while offering a free tier to start, can escalate quickly as your data and query volume grow. Some users report unexpected costs from bandwidth charges and operation fees.

The platform also has notable limitations. Index configuration needs careful planning upfront: settings such as the vector dimension and distance metric cannot be changed after index creation, and on pod-based indexes the metadata fields indexed for filtering are also fixed at creation. The eventual consistency model can cause delays in data availability. While Pinecone now offers keyword search through sparse vectors, teams needing complex traditional search operations may find the capabilities limited compared to dedicated full-text engines.

Meilisearch delivers both worlds without the pain

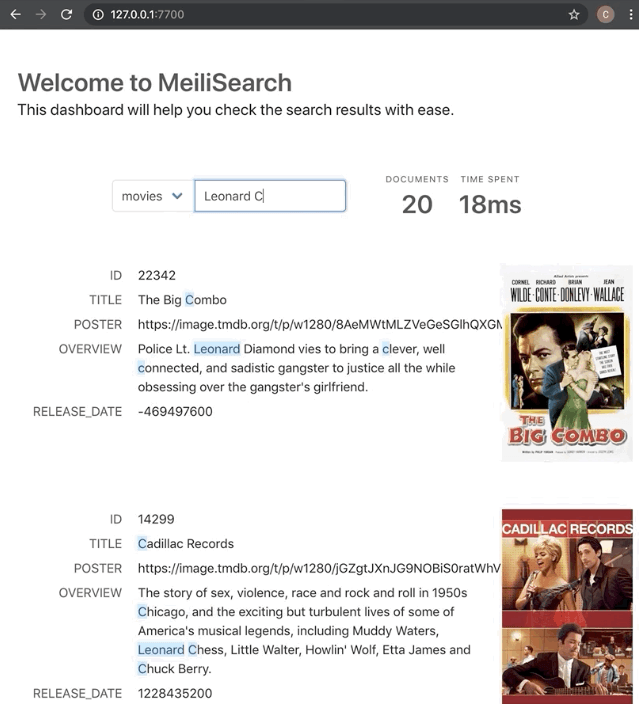

Meilisearch started with a simple observation: search shouldn't be this hard. While others were adding features and complexity, Meilisearch focused on making search that just works. This philosophy of simplicity doesn't mean sacrificing power; it means thoughtful design that provides sophisticated capabilities through intuitive interfaces.

The technical foundation reflects this philosophy. Written in Rust for performance and safety, Meilisearch delivers consistent sub-50ms search latency without any tuning. Typo tolerance is enabled by default. The ranking algorithm provides relevant results immediately. These aren't just convenience features; they're fundamental design decisions that eliminate entire categories of problems.
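Meilisearch's documented defaults allow one typo in query words of 5 or more characters and two typos from 9 characters (the `minWordSizeForTypos` setting). The sketch below approximates that rule with plain Levenshtein distance. Only the word-length thresholds come from Meilisearch's defaults; the matching logic is an illustration, not Meilisearch's actual engine.

```python
def levenshtein(a: str, b: str) -> int:
    # Classic dynamic-programming edit distance (insert, delete, substitute).
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # deletion
                curr[j - 1] + 1,           # insertion
                prev[j - 1] + (ca != cb),  # substitution (0 if characters match)
            ))
        prev = curr
    return prev[-1]


def allowed_typos(word: str, one_typo: int = 5, two_typos: int = 9) -> int:
    # Thresholds mirror Meilisearch's default minWordSizeForTypos values.
    if len(word) >= two_typos:
        return 2
    if len(word) >= one_typo:
        return 1
    return 0


def typo_match(query_word: str, candidate: str) -> bool:
    # Short words must match exactly; longer words tolerate more edits.
    q, c = query_word.lower(), candidate.lower()
    return levenshtein(q, c) <= allowed_typos(q)


print(typo_match("shoos", "shoes"))                  # True: 5 letters, 1 typo allowed
print(typo_match("cat", "car"))                      # False: too short for any typo
print(typo_match("elasticsaerch", "elasticsearch"))  # True: 13 letters, 2 typos allowed
```

Scaling tolerance with word length keeps short queries precise while still forgiving typos in longer words.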

The recent addition of hybrid search showcases Meilisearch's pragmatic approach to innovation. Rather than forcing users to choose between keyword and semantic search, Meilisearch seamlessly combines both. A single API endpoint handles both search types, automatically balancing between exact matches and semantic similarity. You can adjust this balance with a simple parameter, no infrastructure changes required. While this feature is still stabilizing and marked as experimental in some releases, it represents the future of unified search.
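Meilisearch exposes this balance through the `hybrid` object on its search endpoint, where `semanticRatio` ranges from 0 (keyword only) to 1 (semantic only). The sketch below only builds the request. The local URL, the placeholder key, the `movies` index, and the embedder name `default` are assumptions, and the index must already have an embedder configured in its settings.

```python
import json
import urllib.request

MEILI_URL = "http://localhost:7700"  # assumed local instance
API_KEY = "MEILI_SEARCH_KEY"         # hypothetical placeholder key


def build_hybrid_search(index_uid: str, query: str, semantic_ratio: float = 0.5,
                        embedder: str = "default") -> urllib.request.Request:
    body = {
        "q": query,
        "hybrid": {
            # 0.0 = keyword results only, 1.0 = semantic results only.
            "semanticRatio": semantic_ratio,
            # Must name an embedder already configured on this index (hypothetical name).
            "embedder": embedder,
        },
    }
    return urllib.request.Request(
        f"{MEILI_URL}/indexes/{index_uid}/search",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Authorization": f"Bearer {API_KEY}"},
        method="POST",
    )


req = build_hybrid_search("movies", "feel-good space adventure", semantic_ratio=0.7)
# Sending it would be: urllib.request.urlopen(req)
```

Shifting the balance is a per-query parameter change rather than a new system to deploy.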

This simplicity extends throughout the platform. Setting up Meilisearch takes minimal time and effort. The API is RESTful and intuitive. Document structure is flexible with no strict schemas required. Updates happen in near real-time without manual refresh commands. Security uses straightforward API keys without complex role hierarchies.
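To show what "no strict schemas" looks like in practice, the sketch below builds a request that adds two differently shaped documents to a Meilisearch index. Meilisearch replies with a task summary rather than the final result: indexing runs asynchronously, and the documents become searchable once that task succeeds. The URL, key, index name, and documents are hypothetical.

```python
import json
import urllib.parse
import urllib.request
from typing import Optional

MEILI_URL = "http://localhost:7700"  # assumed local instance (Meilisearch's default port)
API_KEY = "MEILI_ADMIN_KEY"          # hypothetical placeholder; needs permission to add documents


def build_add_documents(index_uid: str, documents: list[dict],
                        primary_key: Optional[str] = None) -> urllib.request.Request:
    url = f"{MEILI_URL}/indexes/{index_uid}/documents"
    if primary_key:
        # Optionally tell Meilisearch which field uniquely identifies documents.
        url += "?" + urllib.parse.urlencode({"primaryKey": primary_key})
    return urllib.request.Request(
        url,
        data=json.dumps(documents).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Authorization": f"Bearer {API_KEY}"},
        method="POST",
    )


# Documents do not need identical fields: no mapping is declared upfront.
docs = [
    {"id": 1, "title": "Alien", "genres": ["sci-fi", "horror"]},
    {"id": 2, "title": "Amélie", "year": 2001},
]
req = build_add_documents("movies", docs, primary_key="id")
# Sending it would be: urllib.request.urlopen(req)
```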

For developers, this means shipping search features faster. For businesses, it means lower operational costs and faster time-to-market. For users, it means consistently fast and relevant search results.

Developer experience reveals true operational costs

The true cost of a search solution isn't just the monthly bill; it's the ongoing effort required to build, maintain, and evolve your search functionality.

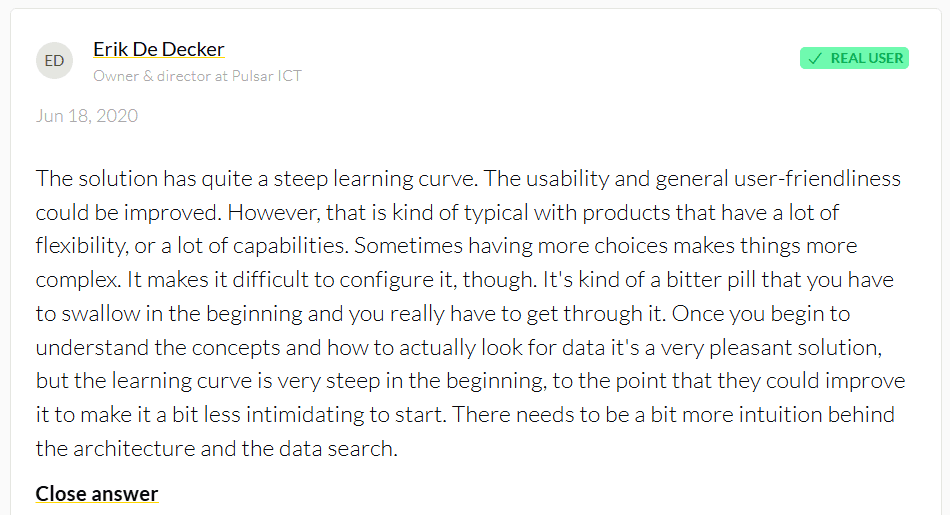

Elasticsearch has a steep learning curve. New developers often spend considerable time learning the Query DSL, understanding mapping concepts, and debugging relevance issues. The documentation, while comprehensive, reflects the platform's complexity with extensive pages covering hundreds of features.
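For a sense of the Query DSL's shape, here is a sketch of a typical product search body: a fuzzy full-text match combined with non-scoring filters in a `bool` query. The `name`, `price`, and `brand` fields are hypothetical, and `brand` is assumed to be mapped as a `keyword` field so the `term` query matches exact values.

```python
import json
from typing import Optional


def build_product_query(text: str, max_price: float, brand: Optional[str] = None) -> dict:
    # "filter" clauses narrow results without affecting relevance scores;
    # "must" clauses both match and contribute to the score.
    filters: list[dict] = [{"range": {"price": {"lte": max_price}}}]
    if brand is not None:
        # term queries compare exact values, so "brand" should be a keyword field.
        filters.append({"term": {"brand": brand}})
    return {
        "query": {
            "bool": {
                "must": [
                    # fuzziness "AUTO" scales the allowed edits with term length.
                    {"match": {"name": {"query": text, "fuzziness": "AUTO"}}}
                ],
                "filter": filters,
            }
        },
        "size": 10,
    }


# This body would be sent to POST /<index>/_search.
print(json.dumps(build_product_query("trail shoes", 100, brand="acme"), indent=2))
```

Each concern (scoring, filtering, fuzziness) is a separate nested clause, which is powerful but explains why newcomers need time with the DSL.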

Simple tasks often require understanding multiple interconnected systems. Adding a new field might require updating mappings, modifying ingest pipelines, adjusting analyzers, and potentially rebuilding indexes, though dynamic mapping can simplify some scenarios.
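As a minimal sketch of the "adding a new field" case, the request below adds an explicit mapping for a new field through Elasticsearch's put-mapping API. The cluster URL, index, and field names are assumptions. Adding a brand-new field works in place, but changing the type of an existing field does not and generally requires reindexing.

```python
import json
import urllib.request


def build_add_field(index: str, field: str, field_type: str,
                    es_url: str = "http://localhost:9200") -> urllib.request.Request:
    # New fields can be added to an existing mapping; existing field types
    # cannot be changed this way.
    body = {"properties": {field: {"type": field_type}}}
    return urllib.request.Request(
        f"{es_url}/{index}/_mapping",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


req = build_add_field("products", "brand", "keyword")
# Sending it would be: urllib.request.urlopen(req)
```

Documents indexed before the change are not re-analyzed automatically; backfilling the new field typically means an `_update_by_query` or a reindex.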

Source: Peerspot


Pinecone offers a better initial experience. The API is straightforward, and getting started with vector search is quick. However, developers quickly hit walls when they need features outside Pinecone's focus. Want to add filters? You need to plan your metadata carefully upfront, since filters can only target metadata stored with each record, and on pod-based indexes the set of filterable fields is fixed at index creation. Need complex traditional keyword search? While Pinecone offers sparse vectors and lexical search, the capabilities may be limited compared to dedicated full-text engines. The proprietary nature also means relying primarily on official documentation and support.
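Filtering in Pinecone happens at query time against each record's metadata, using MongoDB-style operators such as `$eq` and `$lte`. The sketch below builds (but does not send) a query request to an index's `/query` endpoint. The index host, API key, and metadata field names are hypothetical; the real host is specific to each index.

```python
import json
import urllib.request

INDEX_HOST = "https://example-index-abc123.svc.example.pinecone.io"  # hypothetical index host
API_KEY = "PINECONE_API_KEY"                                         # hypothetical placeholder


def build_filtered_query(vector: list[float], metadata_filter: dict,
                         top_k: int = 5, namespace: str = "") -> urllib.request.Request:
    body = {
        "vector": vector,
        "topK": top_k,
        "namespace": namespace,
        # Only records whose metadata satisfies the filter are considered.
        "filter": metadata_filter,
        "includeMetadata": True,
    }
    return urllib.request.Request(
        f"{INDEX_HOST}/query",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "Api-Key": API_KEY},
        method="POST",
    )


req = build_filtered_query(
    [0.1, 0.2, 0.3],
    {"category": {"$eq": "running"}, "price": {"$lte": 100}},  # hypothetical fields
)
# Sending it would be: urllib.request.urlopen(req)
```

If a field you later want to filter on was never written into record metadata, no query-time change can recover it, which is why metadata needs planning upfront.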

Meilisearch prioritizes developer happiness from the first interaction. The API is designed to be learned quickly and intuitively. Default configurations work well for most use cases. Error messages are clear and actionable. The documentation is concise but complete, with practical examples for common scenarios. Official SDKs for all major languages mean you're writing idiomatic code, not wrestling with HTTP requests.

The difference is dramatic in practice. A developer new to Meilisearch can implement production-ready search significantly faster than with Elasticsearch. The same task that might require extensive learning and experimentation in Elasticsearch becomes straightforward with Meilisearch. With Pinecone, you'd quickly have vector search working but may need additional time to handle comprehensive search requirements.

Pricing models expose different philosophies

How these platforms charge reveals their target markets and priorities.

Elasticsearch's pricing structure reflects its enterprise focus.

The cloud service starts at $99/month, but costs escalate quickly with data volume, query load, and feature requirements. The pricing calculator requires estimates for data nodes, machine learning nodes, master nodes, and coordinating nodes.

Many features like machine learning and advanced security require higher tiers. For self-hosted deployments, the infrastructure costs can be substantial given Elasticsearch's resource requirements. The single pricing model means you're locked into paying for provisioned capacity whether it fits your needs or not.

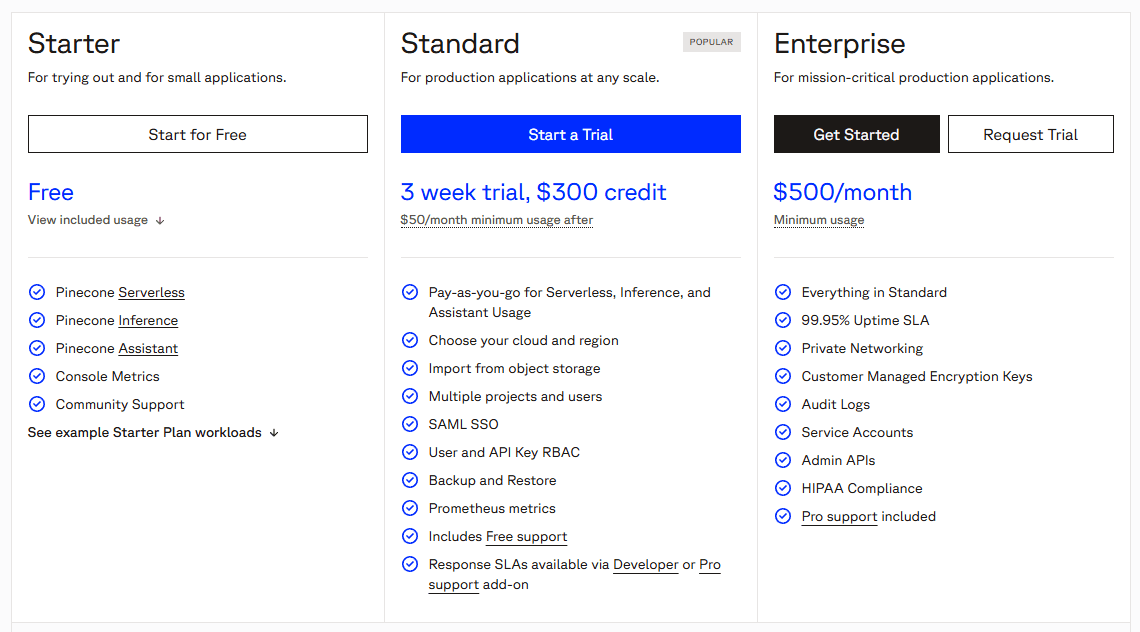

Pinecone uses a consumption-based model with both free and paid tiers.

The free Starter tier allows experimentation, while the Standard tier has a $50/month minimum. Beyond that, you pay for storage, reads, and writes. The serverless pricing promises to reduce costs by only charging for actual usage, but users report that costs can be unpredictable, with some experiencing unexpected charges from operations they didn't anticipate.
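The effect of a minimum commitment is easy to see with a little arithmetic. In the sketch below, the $50 figure comes from the text; treating it as a floor on metered usage is an assumption about how the minimum applies, and the usage amounts are made-up inputs, not Pinecone's actual rates.

```python
def monthly_bill(storage_cost: float, read_cost: float, write_cost: float,
                 minimum: float = 50.0) -> float:
    # Assumed model: metered usage is summed, and the plan minimum acts as a floor.
    if min(storage_cost, read_cost, write_cost) < 0:
        raise ValueError("costs must be non-negative")
    usage = storage_cost + read_cost + write_cost
    return max(usage, minimum)


print(monthly_bill(6.0, 3.5, 1.5))     # 50.0: small workloads pay the minimum
print(monthly_bill(40.0, 25.0, 10.0))  # 75.0: above the floor you pay metered usage
```

Below the floor the bill is flat; above it, every additional read, write, or gigabyte shows up directly, which is where usage spikes become visible.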

The lack of a self-hosting option means you're locked into their pricing model regardless of scale. Like Elasticsearch, Pinecone offers only one pricing approach, which may not suit all customer needs.

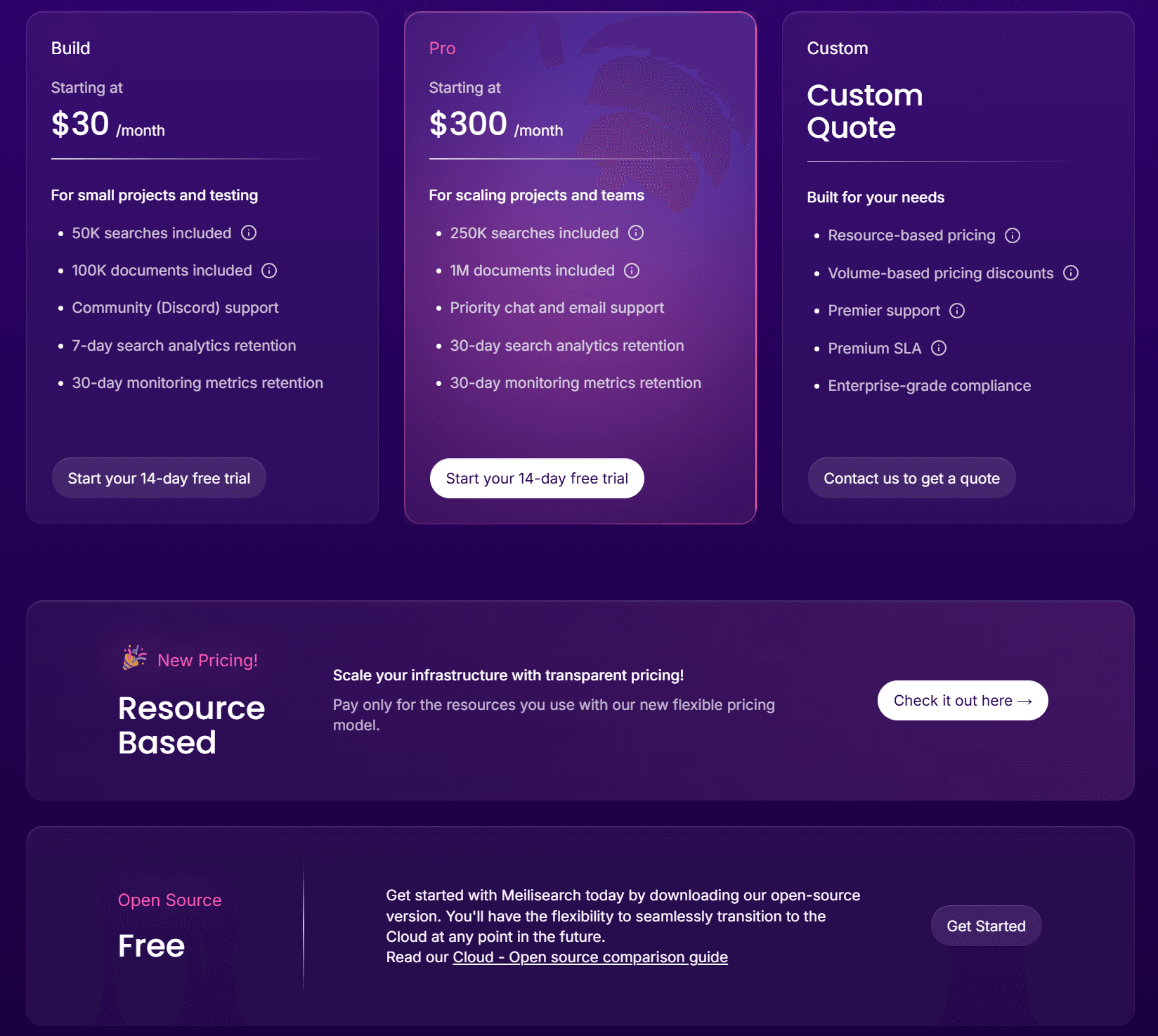

Meilisearch offers refreshing simplicity and unique flexibility with dual pricing models.

The open-source version is completely free under the MIT license, allowing for unlimited self-hosted deployments. The cloud service starts at just $30/month with both subscription-based and resource-based pricing options available. This dual approach provides customers with flexibility that single-model competitors cannot match.

The subscription model offers predictable monthly costs for a set volume of documents stored and searches performed, while the resource-based option charges for the resources your project runs on rather than for each search or document, solving the overage unpredictability that larger customers often face with other platforms. Either way, you know exactly what you're paying for and can choose the pricing model that best fits your usage patterns and scale.

This pricing transparency and flexibility extend to scaling. With Meilisearch, you can start with the open-source version, move to the Cloud when you need managed hosting, and choose between subscription or resource-based pricing as your needs evolve.

The resource-based pricing particularly benefits larger customers who need cost predictability at scale, eliminating surprise overages common with other platforms. If costs become a concern, you can always move back to self-hosting. This flexibility is impossible with Pinecone and limited with Elasticsearch's dual licensing model.

Performance and scalability considerations

Performance benchmarks tell only part of the story. Real-world performance depends on your specific use case, data, and requirements.

Elasticsearch can achieve impressive performance with appropriate configuration.

While default settings work acceptably for many smaller workloads, larger deployments benefit from optimization: tuning shard sizing, configuring appropriate heap memory, adjusting merge policies, and potentially implementing hot-warm-cold architectures for time-series data. The distributed architecture enables massive scale, though coordination overhead can impact latency for smaller deployments. Many teams find that achieving optimal performance requires dedicated expertise.
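Heap sizing is a good example of this tuning. Elastic's documented rule of thumb for manual settings is to give the JVM heap no more than half of the machine's RAM (the rest feeds the filesystem cache Lucene relies on) and to stay below the compressed-pointer threshold, for which about 26 GB is described as safe on most systems. Recent Elasticsearch versions size the heap automatically by default; this sketch only mirrors the manual rule of thumb.

```python
def recommended_heap_gb(total_ram_gb: float, oops_ceiling_gb: float = 26.0) -> float:
    # At most 50% of RAM, and no more than the compressed-oops ceiling.
    if total_ram_gb <= 0:
        raise ValueError("total_ram_gb must be positive")
    return min(total_ram_gb / 2, oops_ceiling_gb)


def jvm_options(heap_gb: float) -> list[str]:
    # Initial and maximum heap should be equal; sizes are expressed in
    # megabytes (rounded down) so fractional gigabytes are not lost.
    size = f"{int(heap_gb * 1024)}m"
    return [f"-Xms{size}", f"-Xmx{size}"]


print(jvm_options(recommended_heap_gb(16)))   # ['-Xms8192m', '-Xmx8192m']
print(jvm_options(recommended_heap_gb(128)))  # ['-Xms26624m', '-Xmx26624m']
```

Note how adding RAM beyond roughly 52 GB no longer grows the heap: the extra memory goes to the operating system's file cache instead.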

Pinecone delivers consistent low-latency performance for vector operations.

The managed service handles optimization automatically, and the serverless architecture scales smoothly. However, performance is optimized primarily for vector search operations. When combining vector search with complex metadata filters, performance can degrade significantly as documented in their technical guides. The platform focuses on similarity search rather than complex query operations common in traditional search engines.

Meilisearch provides exceptional performance by default. The promise of sub-50ms search results isn't marketing; it's architectural.

The single-node design eliminates network coordination overhead. The Rust implementation ensures efficient memory usage. Intelligent caching accelerates repeated queries. While this design has scale limits compared to distributed systems, Meilisearch can technically handle hundreds of millions of documents on a single server, though performance at this scale is still being optimized. For most applications, this is more than sufficient.

The scalability trade-offs are important to understand. Elasticsearch scales to massive deployments through its distributed architecture, with documented cases handling hundreds of billions of documents. Pinecone's serverless model handles scaling automatically but at increasing cost. Meilisearch's single-node architecture has clear limits but offers simplicity and predictable performance within those limits, with experimental sharding features available for enterprise users needing additional scale.

Elasticsearch vs Pinecone vs Meilisearch: Which should you choose?

The choice between these platforms depends on your specific requirements, team capabilities, and growth trajectory.

Choose Elasticsearch if:

- You need a complete search and analytics platform beyond just search

- Your dataset is truly massive (billions of documents)

- You have a dedicated team with search expertise

- You require complex aggregations and analytics

- You need fine-grained control over every aspect of text analysis

- Your use case extends to log analytics, security monitoring, or observability

Start using Elasticsearch to scale your search and analytics across massive datasets.

Choose Pinecone if:

- You're building AI-first applications with semantic search

- Vector search is your primary requirement

- You prefer fully managed services over self-hosting

- Your team is familiar with AI/ML workflows

- You're implementing RAG for Large Language Models

- You can accept vendor lock-in for reduced operational overhead

Build AI-powered apps with fast and reliable vector search using Pinecone.

Choose Meilisearch if:

- You want powerful search without operational complexity

- Speed and relevance are critical for user experience

- You need both traditional and AI-powered search capabilities

- Budget and cost predictability matter

- You value open-source flexibility

- Your team wants to ship features quickly without extensive learning

- You're building search for websites, e-commerce, or SaaS applications

Ready to see how simple powerful search can be? Start your free 14-day trial of Meilisearch Cloud or download the open-source version today.

The search landscape has evolved beyond the false choice between traditional and AI-powered search. While Elasticsearch offers unmatched power for those who need it, and Pinecone provides specialized vector search for AI applications, Meilisearch delivers what most teams actually need: fast, relevant search that's easy to implement and affordable to run.

In a world where user expectations for search are higher than ever, you need a solution that delivers exceptional results from day one and grows with your needs.

Stop choosing between simplicity and power

Meilisearch delivers hybrid search with both keyword precision and semantic understanding in one developer-friendly package. With sub-50ms performance out of the box, flexible pricing options, and no operational overhead, you can ship exceptional search experiences without the complexity or costs of enterprise platforms.